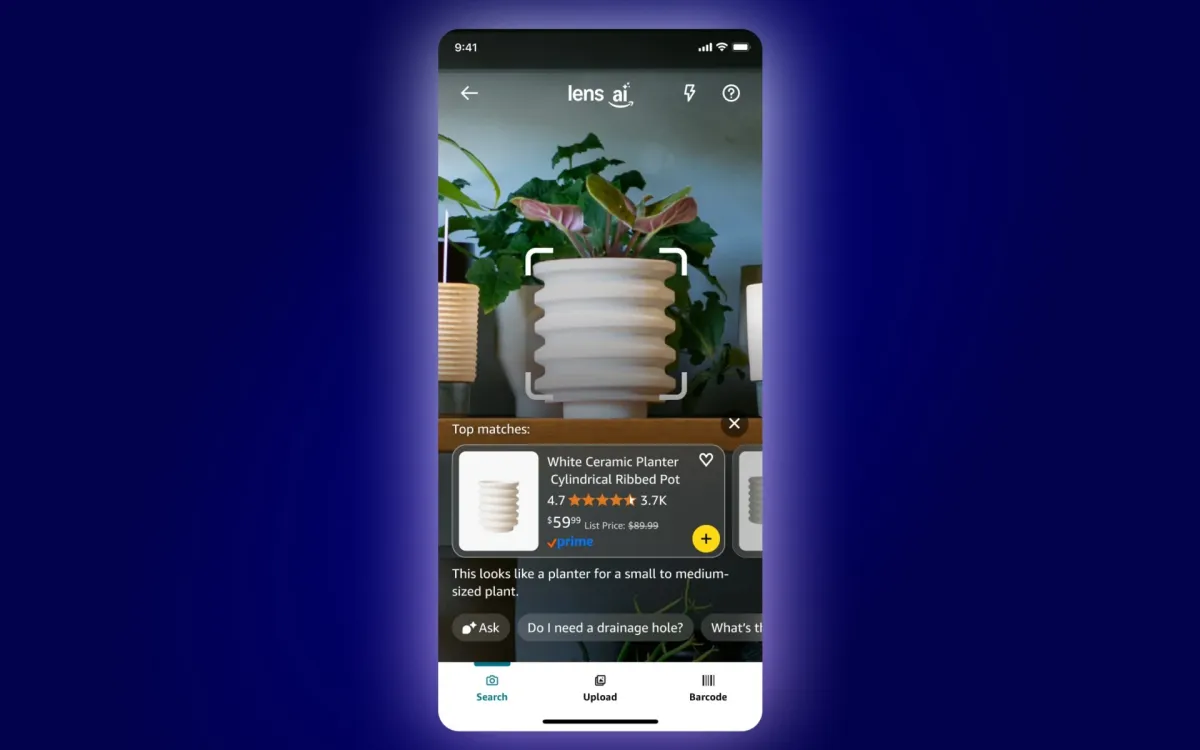

Amazon announced on September 2, 2025, the launch of Lens Live, an enhanced version of its visual search tool Amazon Lens, powered by artificial intelligence to deliver real-time product scanning and instant matches. The new feature integrates Amazon's AI shopping assistant Rufus directly into the camera experience, creating what the company describes as the most advanced visual shopping tool available on mobile platforms.

The enhanced visual search capability rolls out immediately to tens of millions of customers in the Amazon Shopping app on iOS, with plans to expand to all US customers over the coming months. According to Trishul Chilimbi, Vice President and Distinguished Scientist of Stores Foundational AI at Amazon, "When customers with Lens Live open Amazon Lens, the Lens camera will instantly begin scanning products and show top matching items in a swipeable carousel at the bottom of the screen, allowing for quick comparisons."

Subscribe PPC Land newsletter ✉️ for similar stories like this one. Receive the news every day in your inbox. Free of ads. 10 USD per year.

Unlike the traditional Amazon Lens experience that required users to take photos or upload images manually, Lens Live operates continuously as customers move their camera across scenes. The system automatically identifies primary objects in real time through on-device computer vision technology, creating what Amazon characterizes as "a smooth experience that requires minimal customer interaction."

The technical infrastructure behind Lens Live represents a significant advancement in consumer-facing machine learning applications. The system runs on AWS-managed Amazon OpenSearch and Amazon SageMaker services, deploying machine learning models at scale across Amazon's global infrastructure. The platform employs what Amazon describes as "an accurate, lightweight computer vision object detection model running on-device" that processes visual information locally on users' smartphones.

At the core of the matching technology sits a deep learning visual embedding model that compares customer camera views against billions of Amazon products. This computational approach enables the system to retrieve exact or highly similar items from Amazon's catalog within seconds of detection. The integration represents Amazon's most sophisticated deployment of real-time visual search technology since the original Lens launch.
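Amazon has not published the details of its embedding model, but the general idea of embedding-based matching can be shown with a minimal sketch. The example below assumes each product and each camera view has already been converted into a numeric vector, and ranks products by cosine similarity. The function names, the three-dimensional toy vectors, and the product IDs are all hypothetical and chosen only for illustration.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat a zero vector as matching nothing
    return dot / (norm_a * norm_b)

def top_matches(query, catalog, k=3):
    """Return the k catalog item IDs most similar to the query embedding."""
    scored = [(cosine_similarity(query, vec), item_id)
              for item_id, vec in catalog.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [item_id for _, item_id in scored[:k]]

# Toy embeddings (assumption): real models use far more dimensions.
catalog = {
    "mug-red": [0.9, 0.1, 0.0],
    "mug-blue": [0.8, 0.2, 0.1],
    "lamp": [0.0, 0.2, 0.9],
}
camera_view = [1.0, 0.1, 0.0]
print(top_matches(camera_view, catalog, k=2))
```

A linear scan like this would not scale to billions of products; production systems typically rely on approximate nearest-neighbor indexes instead.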

The introduction of Rufus into the camera experience marks the first integration of Amazon's conversational AI assistant directly into visual search workflows. While customers view products through their camera, Rufus generates suggested questions and quick summaries that appear under the product carousel. These conversational prompts allow customers to access key product insights and research information without leaving the camera interface.

Customers can now tap items directly in the camera view to focus on specific products, add items to their cart using the plus icon, or save products to wish lists through the heart icon. This streamlined interaction model eliminates the need to navigate between different sections of the Amazon app during product discovery sessions.

The enhanced functionality addresses evolving consumer behavior patterns in mobile commerce. With more than 70% of shopping now occurring on mobile devices, according to industry data, visual search technologies have gained significant traction. Amazon unveiled five new visual search features in October 2024, reflecting growing customer demand for image-based product discovery tools.

Amazon reported a 70% year-over-year increase in visual searches worldwide prior to the Lens Live announcement, highlighting the growing importance of this technology in online retail. The company's expansion of visual search capabilities follows broader industry trends, as competitors including Google and emerging platforms like Perplexity have enhanced their own visual commerce offerings.

The timing of Amazon's Lens Live launch coincides with significant developments in AI-powered shopping experiences across the e-commerce industry. Google introduced Shopping ads in Google Lens in October 2024; Lens processes nearly 20 billion visual searches per month. Meanwhile, Perplexity launched its AI-powered shopping assistant in November 2024, introducing one-click checkout capabilities directly within AI search interfaces.

Amazon's approach differs from competitor implementations through its integration of both visual recognition and conversational AI within a single interface. While Google's visual search focuses primarily on product identification and price comparison, Amazon's Lens Live combines real-time scanning with personalized shopping assistance through Rufus integration.

The expansion of Rufus to all US customers in July 2024 laid the groundwork for this enhanced visual search experience. Rufus utilizes a specialized large language model trained on Amazon's product catalog, customer reviews, community questions and answers, and web data to provide contextual shopping guidance.

For the marketing community, Lens Live represents a significant shift in product discovery mechanisms. The real-time nature of visual search creates new opportunities for product visibility, while the integration of AI assistance may influence purchase decision processes. Marketing professionals tracking visual commerce trends have noted the potential impact on traditional search-based advertising strategies.

The technical implementation employs multiple machine learning systems working in coordination. The on-device computer vision model handles initial object detection and primary identification locally on the user's device, which reduces latency. This local processing approach differs from cloud-based visual search implementations that require constant data transmission between devices and remote servers.
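How a detector's output might be reduced to a single "primary" object can be sketched as follows. Amazon has not described its selection logic, so the heuristic here (favoring confident, large boxes near the center of the frame) is purely an assumption, as are the `Detection` type, the `primary_object` function, and the confidence threshold.

```python
from dataclasses import dataclass

@dataclass
class Detection:
    label: str
    confidence: float  # model score in [0, 1]
    x: float           # left edge, as a fraction of frame width
    y: float           # top edge, as a fraction of frame height
    w: float           # box width, as a fraction of frame width
    h: float           # box height, as a fraction of frame height

def primary_object(detections, min_confidence=0.5):
    """Pick the detection most likely to be the shopper's focus.

    Hypothetical heuristic: confident, large boxes near the frame
    center score highest. Returns None if nothing passes the threshold.
    """
    def score(d):
        cx, cy = d.x + d.w / 2, d.y + d.h / 2
        # Distance of the box center from the frame center (0.5, 0.5).
        off_center = ((cx - 0.5) ** 2 + (cy - 0.5) ** 2) ** 0.5
        return d.confidence * (d.w * d.h) * (1.0 - off_center)

    candidates = [d for d in detections if d.confidence >= min_confidence]
    return max(candidates, key=score, default=None)

frame = [
    Detection("sneaker", 0.92, 0.3, 0.3, 0.4, 0.4),   # centered, large
    Detection("plant", 0.88, 0.0, 0.0, 0.2, 0.2),     # small, in a corner
    Detection("unknown", 0.30, 0.1, 0.1, 0.8, 0.8),   # below threshold
]
focus = primary_object(frame)
print(focus.label if focus else "nothing detected")
```

Because this step runs entirely on the device in the sketch, only the selected object would need to be sent on for catalog matching.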

Amazon's visual embedding model operates at scale to match camera inputs against the company's product database. The system processes what Amazon describes as "billions of Amazon products" to find exact or highly similar items based on visual characteristics detected through the camera interface. This matching process occurs in real time as customers pan their cameras across different products or environments.

The integration of AWS-managed services provides the computational infrastructure necessary for large-scale deployment. Amazon OpenSearch handles the indexing and retrieval of product information, while Amazon SageMaker manages the deployment and scaling of machine learning models across the platform's global user base.
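Amazon has not disclosed how its OpenSearch indexes are structured, but OpenSearch does support k-nearest-neighbor (k-NN) queries over vector fields, which is one plausible way such retrieval could be expressed. The sketch below only builds the request body and makes no network call. The field name `image_embedding` and the helper `build_knn_query` are assumptions for illustration.

```python
import json

def build_knn_query(embedding, k=10, field="image_embedding"):
    """Build an OpenSearch k-NN search body for one query embedding.

    `field` is a hypothetical name for a knn_vector field in the index.
    """
    return {
        "size": k,
        "query": {
            "knn": {
                field: {
                    "vector": list(embedding),
                    "k": k,
                }
            }
        },
    }

# Toy embedding (assumption): real vectors would come from the model.
body = build_knn_query([0.12, -0.03, 0.88], k=5)
print(json.dumps(body, indent=2))
```

With the `opensearch-py` client, a body like this would typically be passed to `client.search(index=..., body=body)` against an index whose mapping defines the vector field.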

Lens Live maintains compatibility with traditional Amazon Lens functionality, allowing customers to continue using photo capture, image uploads, or barcode scanning options if preferred. This backward compatibility ensures that existing user workflows remain functional while providing enhanced capabilities for customers who adopt the new real-time scanning features.

The phased rollout strategy begins with iOS users in the United States, with plans to expand availability to additional customers and potentially other platforms in subsequent phases. Amazon has not specified exact timelines for Android availability or international market expansion, though the company indicated that rollout will continue "in the coming weeks" and "coming months" for different user segments.

Industry analysis suggests that Lens Live's launch reflects Amazon's broader strategy to maintain competitive positioning in visual commerce as the technology becomes increasingly important for customer acquisition and retention. The integration of AI assistance directly into the visual search experience may provide Amazon with advantages in customer engagement and conversion rates compared to purely visual search tools.

The development follows Amazon's substantial investments in AI technology spanning more than 25 years, according to the company. These investments have resulted in applications ranging from personalized product recommendations to automated logistics systems and checkout-free retail experiences in Amazon Go stores.

Amazon's emphasis on convenience and speed in product discovery aligns with broader consumer expectations for simplified shopping experiences. The company states that it will "continue to look for ways to build on the convenience of searching and shopping with Amazon Lens, helping customers find and shop for the items they need and want that much faster."

The introduction of Lens Live occurs as the visual search market experiences rapid growth and increasing competition. Major technology companies including Google, Meta, and emerging AI platforms have launched competing visual commerce solutions, creating a dynamic landscape for innovation in image-based product discovery.

For Amazon's ecosystem, Lens Live represents the convergence of multiple technological investments including computer vision, machine learning, cloud computing infrastructure, and conversational AI. The integration demonstrates the company's ability to combine these technologies into consumer-facing applications that address practical shopping challenges.

The success of Lens Live will likely influence the development of future visual commerce features across the industry. As consumers increasingly adopt visual search technologies, companies may invest additional resources in real-time scanning capabilities and AI-assisted shopping experiences to remain competitive in the evolving e-commerce landscape.

Timeline

- February 1, 2024: Amazon unveils Rufus conversational AI shopping assistant in beta testing

- July 12, 2024: Amazon expands Rufus to all US customers ahead of Prime Day 2024

- October 2, 2024: Amazon introduces five new visual search features, reporting a 70% year-over-year increase in visual searches

- October 3, 2024: Google introduces Shopping ads in Google Lens, which handles nearly 20 billion visual searches per month

- November 18, 2024: Perplexity launches AI-powered shopping assistant with one-click checkout

- March 31, 2025: Amazon expands AI capabilities with new personalized shopping features building on Rufus foundation

- April 21, 2025: Amazon introduces two-part product titles for sellers improving AI system data structure

- July 21-23, 2025: Amazon exits Google Shopping globally in strategic withdrawal test

- August 25, 2025: Amazon resumes Google Shopping advertising after month-long test period

- September 2, 2025: Amazon announces Lens Live with real-time scanning and Rufus integration

PPC Land explains

Visual Search: Technology that enables users to search for products using images rather than text queries, representing one of the fastest-growing segments in e-commerce technology. Amazon reported a 70% year-over-year increase in visual searches, and Google Lens processes nearly 20 billion visual searches monthly. This technology leverages computer vision and machine learning algorithms to analyze images and return relevant product matches, fundamentally changing how consumers discover and shop for products online.

Artificial Intelligence (AI): The underlying technology powering Lens Live's real-time scanning and product matching capabilities, integrating machine learning models with natural language processing through Rufus integration. AI enables the system to process visual information, understand user intent, and provide personalized shopping recommendations without human intervention. The technology represents a convergence of multiple AI disciplines including computer vision, deep learning, and conversational interfaces within consumer-facing applications.

Machine Learning Models: Sophisticated algorithms that enable Lens Live to identify products, match visual patterns, and improve accuracy over time through continuous data processing. These models operate both on-device for real-time object detection and in cloud environments for complex product matching against Amazon's catalog. The implementation demonstrates advanced deployment of machine learning at consumer scale, processing billions of product comparisons within seconds of visual input.

Real-time Scanning: The core functionality that distinguishes Lens Live from traditional visual search tools, providing instant product identification as users move their cameras across different scenes. This continuous processing approach eliminates the need for manual photo capture or image uploads, creating seamless shopping experiences. The technology requires sophisticated on-device processing capabilities combined with cloud-based matching systems to deliver immediate results without perceptible delays.

Product Discovery: The fundamental shopping process that Lens Live aims to transform through visual interfaces, addressing how consumers find and evaluate products within Amazon's vast catalog. Traditional product discovery relies on text-based search queries and category browsing, while visual search enables discovery through real-world object recognition. This shift represents a significant change in e-commerce user behavior, particularly on mobile devices where visual interfaces often provide more intuitive experiences than text input.

Camera Interface: The primary user interaction method for Lens Live, serving as both input device and display platform for product information and purchasing options. The interface integrates multiple functionalities including real-time scanning, product carousels, AI assistant interactions, and direct purchasing actions within a single camera view. This consolidation reduces friction in the shopping process by eliminating navigation between different app sections during product research and comparison activities.

AWS Infrastructure: The cloud computing foundation supporting Lens Live's machine learning operations, utilizing Amazon OpenSearch and Amazon SageMaker services for large-scale deployment. This infrastructure enables the processing of visual data from millions of users simultaneously while maintaining response times necessary for real-time applications. The AWS integration demonstrates Amazon's ability to leverage its cloud computing expertise to support consumer-facing AI applications at global scale.

Mobile Commerce: The broader e-commerce context driving visual search adoption, with over 70% of shopping now occurring on mobile devices according to industry data. Mobile interfaces present unique challenges for traditional text-based search due to smaller screens and touch-based input methods. Visual search technologies like Lens Live address these limitations by providing more intuitive interaction methods suited to mobile device capabilities and user expectations.

Product Matching: The technical process of comparing visual inputs against Amazon's product database to identify exact or similar items available for purchase. This process involves analyzing visual characteristics, product attributes, and contextual information to deliver relevant results. The matching system searches across billions of Amazon products in real time, representing one of the largest-scale visual recognition applications in consumer e-commerce.

Shopping Experience: The comprehensive customer journey that Lens Live aims to enhance through integrated visual search, AI assistance, and streamlined purchasing workflows. The experience encompasses product discovery, research, comparison, and transaction completion within a unified interface. Amazon's approach focuses on reducing friction and complexity while providing personalized guidance through AI integration, reflecting broader industry trends toward seamless omnichannel retail experiences.

Summary

Who: Amazon, through Trishul Chilimbi (Vice President and Distinguished Scientist, Stores Foundational AI), announced the enhanced visual search tool, which is launching to tens of millions of US customers using iOS devices.

What: Amazon Lens Live provides real-time product scanning with instant matches displayed in swipeable carousels, integrated with Rufus AI assistant for product insights and shopping guidance. The system uses on-device computer vision and cloud-based machine learning to match camera views against billions of Amazon products.

When: Announced on September 2, 2025, with immediate availability for tens of millions of iOS users in the United States and planned expansion to all US customers over the coming months.

Where: Initially available in the Amazon Shopping app on iOS devices for US customers, with plans for broader rollout to additional customers and potentially other platforms in subsequent phases.

Why: Amazon launched Lens Live to address growing consumer demand for visual search, with visual searches up 70% year-over-year, and to compete with enhanced visual commerce offerings from Google, Perplexity, and other technology companies in the evolving AI-powered shopping market.