New research published by the BBC has revealed significant accuracy and reliability concerns in how artificial intelligence assistants handle news-related queries. According to the findings released on February 11, 2025, more than half of the responses from the AI assistants tested contained significant issues.

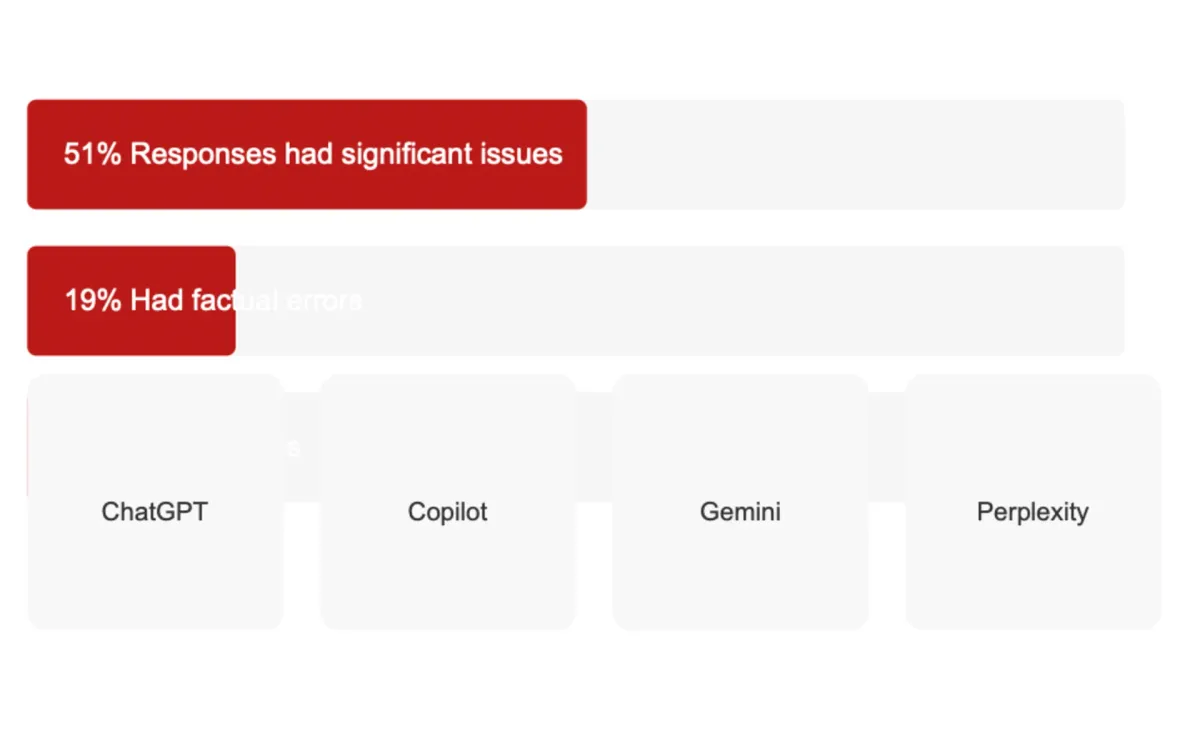

The study, conducted in December 2024, examined four prominent AI assistants: OpenAI's ChatGPT, Microsoft's Copilot, Google's Gemini, and Perplexity. According to BBC researchers, these platforms showed concerning patterns of inaccuracy when processing and presenting news content.

The investigation revealed that 51% of AI responses to news-related questions contained significant issues. More specifically, the research identified that 19% of answers citing BBC content introduced factual errors, including incorrect statements, dates, and numerical data. Furthermore, 13% of quotes attributed to BBC articles were either altered from their original form or entirely fabricated.

BBC journalists with expertise in the relevant subject areas conducted the evaluation by analyzing responses to 100 news-related questions. For this study, the BBC temporarily lifted its usual access restrictions, allowing the AI assistants to access its website content. The assessment criteria included accuracy, attribution of sources, impartiality, and proper representation of BBC content.

The research exposed multiple critical errors across different platforms. According to the study, Gemini incorrectly stated NHS policy on vaping, claiming the health service advised against it as a smoking cessation method. This directly contradicted the NHS's actual position, which recommends vaping as a method to quit smoking.

The investigation also identified problems with temporal accuracy. Both ChatGPT and Copilot provided outdated political information, referring to former officials as current office holders. For instance, they continued to present Rishi Sunak and Nicola Sturgeon as active leaders after they had left their positions.

Issues extended beyond factual accuracy to content representation. The research found that Perplexity, while citing BBC sources, introduced editorial characterizations absent from the original reporting. In coverage of Middle East conflicts, the platform described Iran's actions as showing "restraint" and labeled Israel's responses as "aggressive", terms that do not appear in the BBC's impartial reporting.

The study found varying performance levels among the platforms. According to the researchers, Gemini produced the most sourcing errors, with reviewers rating over 45% of its responses as containing significant sourcing issues. Additionally, 26% of Gemini's responses and 7% of ChatGPT's provided no sources for claims about public health, Middle East conflicts, and UK politics.

The investigation also revealed problems with quote accuracy. Eight quotes attributed to BBC articles were either altered or did not appear in the cited article at all. This issue appeared in responses from all tested assistants except ChatGPT and accounts for the 13% of BBC quotes found to be altered or fabricated.

Context omission emerged as a persistent issue. The study found that AI assistants struggled with complex topics requiring multiple perspectives. For example, both ChatGPT and Perplexity made incorrect blanket statements about UK-wide energy price caps, failing to note that Northern Ireland operates under different regulations.

Pete Archer, Programme Director for Generative AI at the BBC, emphasized the broader implications of these findings. According to Archer, while AI presents opportunities for innovation in areas such as subtitling and translation, the research highlights significant challenges regarding information accuracy and trustworthiness.

The BBC plans to conduct follow-up studies to monitor improvements in AI accuracy over time. The research team has indicated interest in expanding future investigations to include other publishers and media organizations, suggesting that similar issues likely affect content from other news sources.

This research marks the first known instance of journalists systematically reviewing AI assistants' responses to news queries. The findings raise important questions about information accuracy in the age of AI and underscore the need for mechanisms to ensure reliable news dissemination through these increasingly popular platforms.