California has enacted comprehensive legislation, codified in Elections Code Division 20, Chapter 7 and posted on January 9, 2025, to address growing concern about artificially generated deceptive content in elections. The Defending Democracy from Deepfake Deception Act of 2025 establishes strict requirements for large online platforms to identify and remove misleading AI-generated content during election periods.

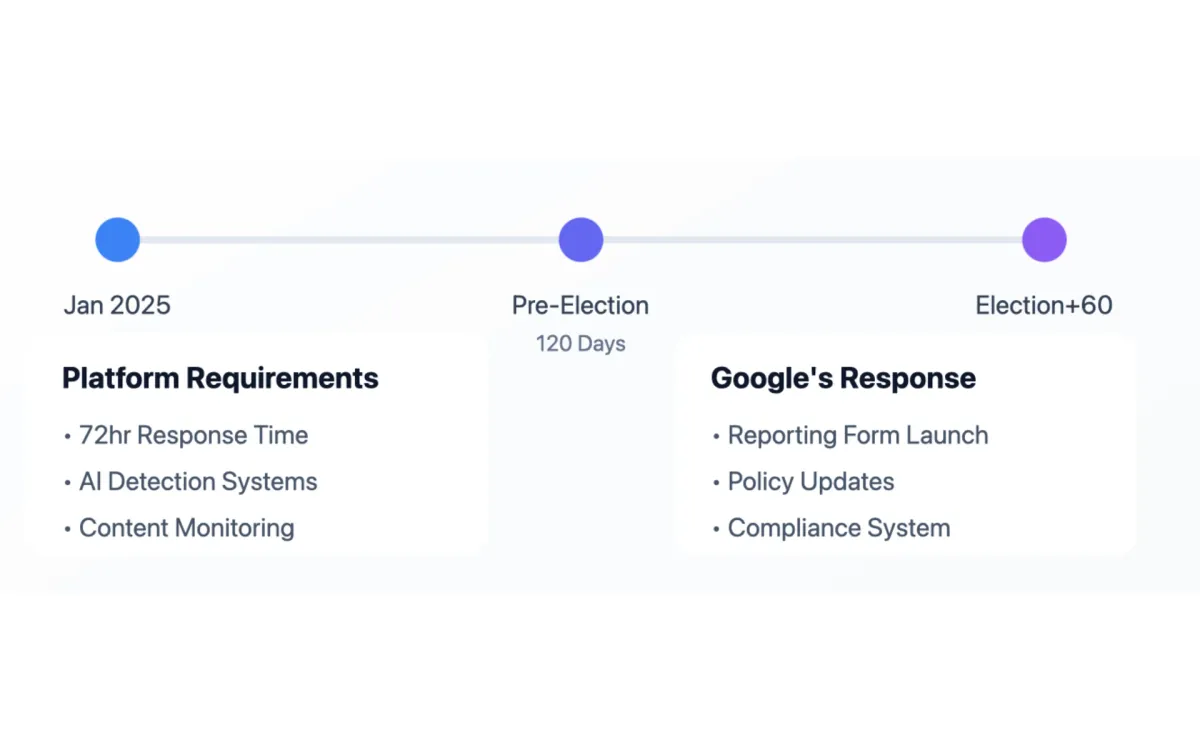

Google's Advertising Policies document, released on January 9, 2025, introduces a dedicated reporting form for California residents. The form will let users report advertisements that may violate the newly enacted California Elections Code provisions on artificially generated content in political advertising.

This policy update from Google represents one of the first concrete implementations by a major technology platform in response to California's new legislation. The company's decision to create a dedicated reporting mechanism demonstrates the immediate impact of the law on digital advertising practices, particularly in the context of political content and election-related communications.

Large online platforms, defined as those with at least 1 million California users in the preceding 12 months, must implement state-of-the-art techniques to identify and remove specific types of deceptive content. According to the law, platforms must act within 72 hours of receiving reports about potentially deceptive content.

The legislation specifically targets three categories of manipulated content: candidates portrayed as doing or saying things they did not do or say that could harm their electoral prospects; elections officials depicted making false statements that could undermine election confidence; and elected officials shown making election-influencing statements they never made.

The temporal scope of the law is precisely defined. For candidate-related content, the removal requirements apply during a period starting 120 days before an election through election day. For content involving elections officials, the requirements extend 60 days past the election.
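
The date arithmetic behind these windows can be sketched in a few lines of Python. This is an illustration only, not a statement of how any platform implements the law: the function names and category strings are hypothetical, and the sketch assumes that the window for elections-official content also opens 120 days before the election, which the summary above does not state explicitly. Content involving elected officials is not modeled.

```python
from datetime import date, timedelta

# Window lengths as described in the article.
DAYS_BEFORE_ELECTION = 120
EXTRA_DAYS_FOR_OFFICIALS = 60


def removal_window(election_day: date, subject: str) -> tuple[date, date]:
    """Return the (start, end) dates, both inclusive, of the removal period.

    `subject` is a hypothetical category string: "candidate" or
    "elections_official".
    """
    # Assumption: both categories share the same 120-day start.
    start = election_day - timedelta(days=DAYS_BEFORE_ELECTION)
    if subject == "candidate":
        end = election_day
    elif subject == "elections_official":
        end = election_day + timedelta(days=EXTRA_DAYS_FOR_OFFICIALS)
    else:
        raise ValueError(f"unsupported subject: {subject!r}")
    return start, end


def in_removal_window(seen_on: date, election_day: date, subject: str) -> bool:
    """True if content seen on `seen_on` falls inside the removal period."""
    start, end = removal_window(election_day, subject)
    return start <= seen_on <= end
```

For an election held on November 3, 2026, `removal_window(date(2026, 11, 3), "candidate")` would span July 6 through November 3, 2026, while the elections-official window would run through January 2, 2027.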

Candidates who use manipulated content of themselves must include a clear disclosure stating "This [image/audio/video] has been manipulated." The law specifies detailed requirements for disclosure visibility and placement, including font size requirements for visual media and voice clarity standards for audio content.

The legislation establishes a reporting mechanism for California residents. Platforms must respond to reports within 36 hours and implement any necessary actions within 72 hours. Enforcement provisions allow candidates, elected officials, and elections officials to seek injunctive relief if platforms fail to comply.
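
To make the two deadlines concrete, here is a minimal Python sketch that computes them from the time a report arrives. It assumes both clocks start when the platform receives the report; the function and key names are hypothetical and are not taken from the statute or from any platform's tooling.

```python
from datetime import datetime, timedelta

# Deadlines as described in the article.
RESPONSE_HOURS = 36
ACTION_HOURS = 72


def report_deadlines(received_at: datetime) -> dict[str, datetime]:
    """Compute the response and action deadlines for a single report.

    Assumption: both periods are measured from the moment of receipt.
    """
    return {
        "respond_by": received_at + timedelta(hours=RESPONSE_HOURS),
        "act_by": received_at + timedelta(hours=ACTION_HOURS),
    }
```

A report received at 9:00 a.m. on October 1 would, under these assumptions, need a response by 9:00 p.m. on October 2 and any required action by 9:00 a.m. on October 4.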

The Attorney General and local prosecutors can also pursue legal action against non-compliant platforms. The law applies to content in all languages, requiring disclosures in both the original language and English.

Traditional media outlets receive specific exemptions. Newspapers, magazines, and broadcasting stations are exempt when they clearly disclose the manipulated nature of the content. The law also excludes satire and parody from its requirements.

In technical implementation, platforms must develop procedures for both content removal and labeling. Content falling outside specified time periods but still meeting deceptive criteria must be labeled with the statement "This [image/audio/video] has been manipulated and is not authentic."
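
The remove-or-label distinction can be expressed as a small decision function. The following Python sketch is a simplification under stated assumptions: whether content is "deceptive" under the law and whether it falls inside the applicable time period are taken as already-decided inputs, and every name here (`Action`, `choose_action`, `label_text`) is hypothetical. Only the label wording comes from the text above.

```python
from enum import Enum


class Action(Enum):
    REMOVE = "remove"
    LABEL = "label"
    NONE = "none"


# Label wording as quoted in the article; {medium} fills the bracketed slot.
LABEL_TEMPLATE = "This {medium} has been manipulated and is not authentic."
ALLOWED_MEDIA = {"image", "audio", "video"}


def choose_action(is_deceptive: bool, in_time_period: bool) -> Action:
    """Pick removal inside the specified period, labeling outside it."""
    if not is_deceptive:
        return Action.NONE
    return Action.REMOVE if in_time_period else Action.LABEL


def label_text(medium: str) -> str:
    """Build the label for one of the three media types."""
    if medium not in ALLOWED_MEDIA:
        raise ValueError(f"unsupported medium: {medium!r}")
    return LABEL_TEMPLATE.format(medium=medium)
```

In practice the hard part is the classification step that produces `is_deceptive`, which this sketch deliberately leaves out.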

The legislation reflects California's response to technological challenges in electoral integrity, as artificial intelligence capabilities continue to advance in creating increasingly convincing synthetic media. The law represents a structured approach to managing the intersection of artificial intelligence and democratic processes.