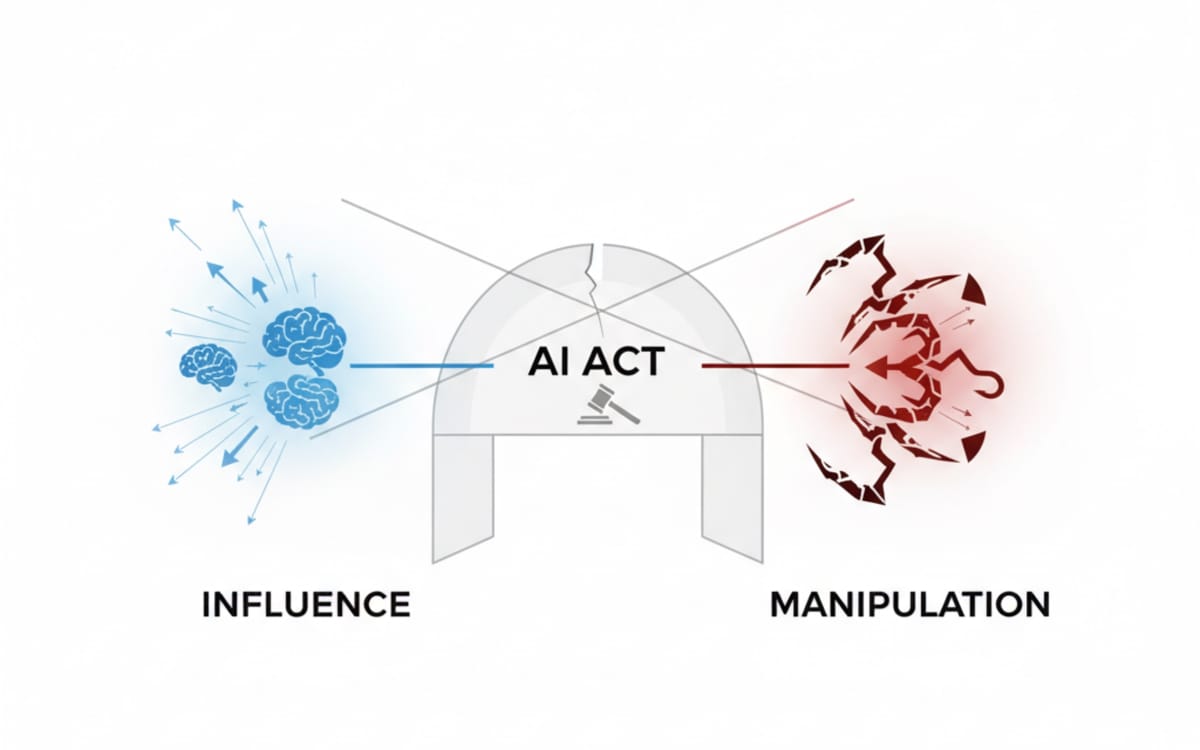

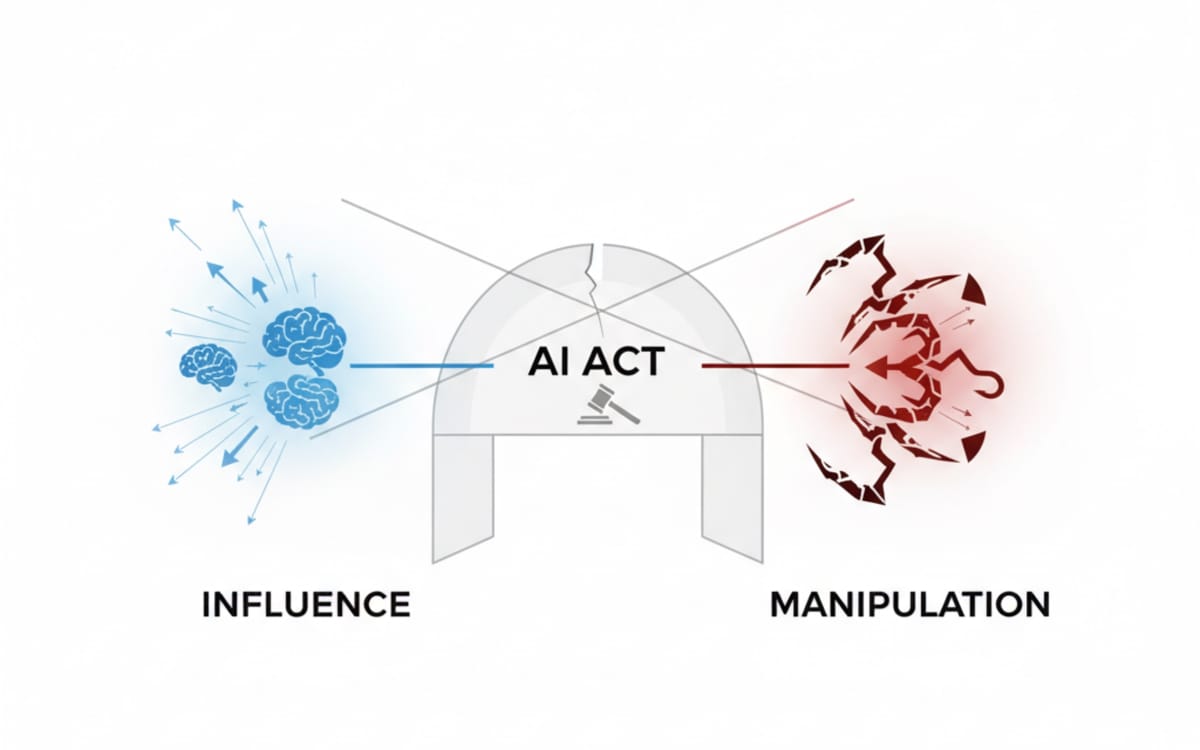

The European Commission published guidelines clarifying prohibited artificial intelligence practices in February 2025, establishing legal boundaries between permissible influence and forbidden manipulation under the EU AI Act. The guidance addresses growing concerns about AI systems that shape human decision-making through mechanisms ranging from logical reasoning to psychological exploitation.

According to research published by Dr. Théo Antunes during his PhD work on artificial intelligence and law, AI systems that interact with humans can employ five distinct forms of influence. Persuasion represents the sole form carrying positive connotations, where "the AI system seeks to convince through logical reasoning," according to Antunes. The four remaining forms—manipulation, deception, coercion, and exploitation—carry negative implications and potentially violate the AI Act's Article 5 prohibitions.

Manipulation occurs when "the AI exploits cognitive biases or presents results in a misleading way, subtly steering the user's decision," according to Antunes. Deception involves providing "false or distorted information but in a form that appears trustworthy." Coercion applies "psychological pressure that pushes someone toward a certain behavior," while exploitation identifies and leverages "personal vulnerabilities to influence decision-making."

The distinction creates significant legal consequences for AI providers operating in European markets. "Under the EU AI Act, influence is permissible, but manipulation is strictly prohibited," according to Antunes. The challenge emerges from the thin operational boundary between these categories. "Both operate through similar mechanisms, and often, it's only after the fact that we can determine whether an AI system influenced us ethically or manipulatively," Antunes noted in analysis published on LinkedIn.

Article 5 of the EU AI Act prohibits specific AI applications that could manipulate decisions, exploit vulnerabilities, or predict criminal behavior. The regulatory framework addresses AI systems capable of undermining human judgment rather than enhancing it. For marketing professionals, these prohibitions intersect with established advertising practices around persuasion optimization and behavioral targeting.

The February 2025 guidance documents were the first detailed implementation materials published by the Commission after the AI Act entered into force on August 1, 2024. The Commission positioned the guidance as "living documents" that will be updated regularly based on supervisory experience, new case law, and technological developments. However, ambiguities persist in the AI system definition, particularly regarding multi-component systems and the classification of interfaces.

Marketing technology providers face particular scrutiny around persuasion optimization techniques. These applications use AI-powered systems to enhance advertising content's persuasive impact through behavioral analysis, emotional response prediction, and message customization. Such techniques require careful evaluation to determine whether they cross regulatory boundaries into prohibited manipulation or exploitation of consumer vulnerabilities.

Behavioral targeting encompasses marketing techniques that analyze consumer actions, preferences, and patterns to deliver personalized advertising content. Under the EU AI Act framework, certain behavioral targeting applications may face restrictions if they manipulate consumer decisions, exploit psychological vulnerabilities, or employ prohibited AI practices.

The regulatory framework requires AI providers to anticipate downstream usage patterns and implement safeguards preventing prohibited applications. For emotion recognition systems, providers must ensure their technology avoids deployment in educational or workplace settings where such applications face outright prohibitions.

On May 8, 2025, Denmark became the first European Union member state to adopt national legislation implementing the EU AI Act. The Danish framework provides nearly three months of operational preparation before the August 2, 2025 deadline, by which Article 70 requires all member states to designate national competent authorities.

The Danish approach structures oversight around three national competent authorities. The Agency for Digital Government serves as the notifying authority, the primary market surveillance authority, and the single point of contact for European coordination. The Danish Data Protection Authority and the Danish Court Administration complete the market surveillance framework, illustrating how a member state can integrate AI Act compliance with existing GDPR obligations.

German data protection authorities published comprehensive guidelines in June 2025 establishing technical and organizational requirements for AI system development and operation. The 28-page framework addresses AI systems across their complete lifecycle, from design through operation. The guidelines require regular risk assessments, particularly for publicly available AI systems, affecting marketing technology providers offering AI-powered advertising platforms and optimization tools.

The Netherlands Authority for the Digital Infrastructure published its fifth AI and Algorithms Report on July 15, 2025, detailing regulatory developments including plans for a regulatory sandbox launching by August 2026. The sandbox will enable AI system testing under supervised conditions while balancing fundamental rights protection with technological development support.

The practical implications extend to AI-powered advertising automation. Meta's Advantage+ suite now handles targeting, creative optimization, placements, and budget allocation with minimal human oversight. While Meta reports a $20 billion annual run rate and a 22% improvement in return on ad spend, prominent advertising experts have documented failures including demographic mismatches and creative disasters, raising questions about whether AI-powered advertising represents an efficiency gain or a threat to brand stewardship.

The boundary between influence and manipulation carries both theoretical and practical significance. Antunes emphasized that "this distinction isn't just theoretical, it's legal and ethical and has practical implications and can lead to serious consequences, both professionally and personally." The regulatory framework reflects the recognition that, as "AI becomes more integrated into our daily decisions, from what we buy to how we vote, how we work or learn, understanding this boundary is crucial for developers, policymakers, and users alike."

Marketing agency research published in July 2025 demonstrated that AI responses can be strategically influenced through targeted content placement. The controlled experiment successfully manipulated ChatGPT and Perplexity responses using expired domains with Domain Rating scores below 5, confirming that AI systems remain vulnerable to external influence attempts that could cross into manipulative territory.

AI-generated advertisements promoting fraudulent products have proliferated across major platforms, as documented by YouTube creator Charles White Jr. in August 2025. His investigation revealed sophisticated marketing schemes that use AI to create false product demonstrations, misleading consumers about what the products can actually do and showing how AI deception operates in practice.

The regulatory framework addresses these concerns through transparency obligations under Article 50, which become applicable from August 2, 2026. The European Commission opened consultation on September 4, 2025, to develop guidelines and a Code of Practice addressing transparency requirements for AI systems, including detecting and labeling AI-generated content.

The European Commission released detailed implementation guidelines on July 18, 2025, establishing technical thresholds for determining when AI models qualify as general-purpose AI models under the AI Act. The 36-page framework introduces an indicative criterion under which models trained with more than 10²³ floating-point operations (FLOP) are presumed to qualify as general-purpose AI models, triggering additional compliance requirements.
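The compute criterion lends itself to a back-of-the-envelope check. The sketch below assumes the widely used rule of thumb that training a dense transformer costs roughly 6 FLOP per parameter per training token; that approximation, the helper names, and the `narrow_task_only` flag are illustrative assumptions, not the Commission's prescribed estimation method, and the 10²³ figure is only an indicative threshold.

```python
# Indicative training-compute criterion (10^23 FLOP) from the July 2025 guidelines.
GPAI_INDICATIVE_FLOP = 1e23


def estimate_training_flop(n_params: float, n_tokens: float) -> float:
    """Rough dense-transformer estimate: about 6 FLOP per parameter per token.

    Assumption for illustration only; not an official estimation method.
    """
    return 6.0 * n_params * n_tokens


def presumed_gpai(n_params: float, n_tokens: float,
                  narrow_task_only: bool = False) -> bool:
    """Hypothetical screening helper.

    Returns True when the compute estimate exceeds the indicative threshold
    and the model is not limited to a narrow task (narrow-task models can
    fall outside the classification despite high training compute).
    """
    exceeds = estimate_training_flop(n_params, n_tokens) > GPAI_INDICATIVE_FLOP
    return exceeds and not narrow_task_only
```

Under this assumption, a 7-billion-parameter model trained on 2 trillion tokens comes to about 8.4 × 10²² FLOP, just under the indicative line, while a 70-billion-parameter model trained on 1.4 trillion tokens comes to about 5.9 × 10²³ FLOP and would be presumed to qualify unless it only performs a narrow task.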

Enforcement mechanisms include information requests, model evaluations, mitigation measures, and financial penalties of up to 3% of global annual turnover or EUR 15 million, whichever is higher. The AI Office assumes supervision responsibilities beginning August 2, 2025, with full enforcement powers taking effect in August 2026.
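Because the ceiling for general-purpose AI model providers is whichever of the two figures is higher, the EUR 15 million amount dominates for smaller providers and the 3% share takes over once annual worldwide turnover exceeds EUR 500 million. A minimal sketch of that arithmetic follows; the function name is hypothetical, and it computes only the statutory maximum, not any fine a regulator would actually impose.

```python
def max_gpai_fine_eur(annual_worldwide_turnover_eur: float) -> float:
    """Upper bound on a fine for a general-purpose AI model provider.

    Hypothetical helper: returns the higher of 3% of annual worldwide
    turnover and EUR 15 million. Actual fines are set case by case.
    """
    turnover_share = annual_worldwide_turnover_eur * 3 / 100  # 3% of turnover
    fixed_amount = 15_000_000.0                               # EUR 15 million
    return max(turnover_share, fixed_amount)


print(max_gpai_fine_eur(100_000_000))    # 15000000.0 (fixed amount applies)
print(max_gpai_fine_eur(2_000_000_000))  # 60000000.0 (3% share applies)
```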

The Law Commission of England and Wales published a discussion paper on July 31, 2025, examining how AI creates legal challenges across private, public, and criminal law. The paper identifies potential liability gaps where autonomous AI systems cause harm but no person bears legal responsibility, complementing the EU framework's focus on preventing manipulation before harm occurs.

AI governance expert Luiza Jarovsky analyzed the July 2025 guidelines on social media, highlighting how models trained specifically for narrow tasks escape regulatory oversight despite meeting computational thresholds. The framework establishes distinct classification levels with increasing obligations based on model capabilities and systemic risk potential.

Consumer privacy concerns intensified throughout 2025. Research shows that 59% of consumers oppose the use of their data for AI training, while only 28% trust social media platforms' data practices. The regulatory framework addresses these concerns through mandatory transparency, copyright compliance, and prohibited-practice definitions that prevent AI systems from exploiting consumer vulnerabilities.

Marketing organizations using general-purpose AI models for content creation, customer targeting, or campaign optimization must demonstrate compliance with detailed transparency and safety requirements. Copyright requirements particularly affect marketing applications that generate creative content, with Commission guidelines released in July 2025 requiring model providers to implement policies addressing EU copyright law throughout the model's lifecycle.

Industry response to the guidelines has been mixed, with significant opposition from major technology companies. Meta announced it would not sign the European Commission's Code of Practice for general-purpose AI models on July 18, 2025, citing legal uncertainties and measures extending beyond the AI Act's scope. Meanwhile, Google, Microsoft, OpenAI, and Anthropic committed to the voluntary framework.

On October 10, 2025, Germany's digital industry association, the Bundesverband Digitale Wirtschaft, which represents over 600 digital economy companies, expressed concerns about the country's approach to implementing the EU AI Act. The association identified significant challenges in the draft legislation that could affect Germany's competitiveness as an AI development hub.

The implementation timeline creates pressure on marketing organizations preparing for compliance. Technology companies have adopted different strategies for managing regulatory relationships across global markets. Transitional provisions require providers of general-purpose AI models placed on the market before August 2, 2025 to take the necessary steps for compliance by August 2, 2027, providing a graduated timeline for different market participants.

Agentic AI represents artificial intelligence systems that operate autonomously to plan and execute complex workflows without constant human supervision. McKinsey's Technology Trends Outlook 2025 report, published in July 2025, identifies agentic AI as the most significant emerging trend for marketing organizations, marking a fundamental shift from passive AI tools to active collaborators.

Six companies launched the Ad Context Protocol on October 15, 2025, betting that a new open-source technical standard could enable AI agents to communicate across platforms and execute advertising tasks autonomously. However, the announcement drew immediate scrutiny from industry observers questioning whether another protocol is needed when existing standards remain underutilized.

The regulatory framework's emphasis on distinguishing influence from manipulation becomes increasingly critical as AI systems gain autonomy. Of manipulative, deceptive, coercive, and exploitative systems, Antunes wrote: "In these cases, AI doesn't enhance human judgment, it undermines it." The challenge lies in establishing clear boundaries before AI systems cause harm, rather than determining after the fact whether ethical lines were crossed.

Cross-border campaigns face additional complexity as Article 50 applies to AI systems placed on European markets regardless of provider location. The EU regulatory framework provides mandatory compliance standards beyond voluntary industry commitments, with graduated enforcement timelines providing implementation periods for affected organizations.

The European Commission's 2025 work programme withdrew the long-stalled ePrivacy Regulation and AI Liability Directive, while its AI Continent Action Plan focuses on computing capacity and AI funding. The policy shift emphasizes boosting economic competitiveness alongside regulatory enforcement.

Member States coordinate with Commission guidance to establish national competent authorities and enforcement procedures. The multi-stakeholder approach mirrors earlier processes that engaged nearly 1,000 participants across different sectors and expertise areas in developing the General-Purpose AI Code of Practice.

For marketing professionals, the regulatory framework creates new compliance obligations around persuasion techniques, behavioral targeting, and AI-powered optimization systems. The distinction between permissible persuasion and prohibited manipulation requires careful evaluation of AI system design, deployment, and downstream usage patterns to ensure compliance with Article 5 prohibitions while maintaining campaign effectiveness.

Subscribe PPC Land newsletter ✉️ for similar stories like this one

Timeline

- August 1, 2024: EU AI Act enters into force establishing comprehensive regulatory framework

- February 2025: European Commission publishes first AI Act guidelines on prohibited practices and AI system definitions

- May 8, 2025: Denmark becomes first EU member state to adopt national AI Act implementation legislation

- June 2025: German authorities issue comprehensive AI development guidelines covering entire system lifecycle

- July 10, 2025: EU publishes final General-Purpose AI Code of Practice addressing transparency and safety obligations

- July 15, 2025: Netherlands publishes fifth AI and Algorithms Report detailing regulatory developments

- July 18, 2025: Commission releases AI Act guidelines for general-purpose AI models; Meta announces refusal to sign code of practice

- July 31, 2025: Law Commission of England and Wales publishes discussion paper on AI legal challenges

- August 2, 2025: AI Act obligations for general-purpose AI models enter application

- September 4, 2025: European Commission opens consultation for AI transparency guidelines under Article 50

- October 10, 2025: German digital association expresses concerns over AI Act implementation approach

- August 2, 2026: Transparency obligations under Article 50 become applicable; Netherlands regulatory sandbox launches

- August 2, 2027: Compliance deadline for general-purpose AI models placed on the market before August 2, 2025

Summary

Who: The European Commission published guidance affecting AI providers, marketing technology companies, advertising platforms, and organizations deploying AI systems across European markets. Dr. Théo Antunes, who holds a doctorate in law specializing in artificial intelligence and legal frameworks, distinguished five forms of AI-driven influence during his PhD research.

What: The Commission released guidelines on prohibited AI practices in February 2025, establishing legal boundaries between permissible influence and forbidden manipulation under Article 5 of the EU AI Act. Antunes's research distinguishes five forms of AI-driven influence: persuasion (permissible), and manipulation, deception, coercion, and exploitation (potentially prohibited).

When: The guidance documents were published in February 2025, following the AI Act's entry into force on August 1, 2024. Obligations for general-purpose AI models entered application on August 2, 2025, transparency obligations under Article 50 apply from August 2, 2026, and providers of general-purpose AI models already on the market before August 2, 2025 must comply by August 2, 2027.

Where: The regulations apply across European Union markets, covering AI providers regardless of their location whenever they place systems on the European market. Member states including Denmark, Germany, and the Netherlands have taken national steps, ranging from Denmark's implementing legislation designating oversight authorities to German data protection guidelines and the Dutch supervisor's AI and Algorithms Report.

Why: The regulatory framework addresses growing concerns about AI systems that shape human decision-making through mechanisms that could undermine rather than enhance human judgment. The distinction matters for developers, policymakers, and users as AI becomes more integrated into daily decisions affecting purchasing behavior, voting choices, work activities, and learning processes. Violations of the Article 5 prohibitions can draw fines of up to EUR 35 million or 7% of worldwide annual turnover, whichever is higher, while providers of general-purpose AI models face penalties of up to 3% of global annual turnover or EUR 15 million, whichever is higher.