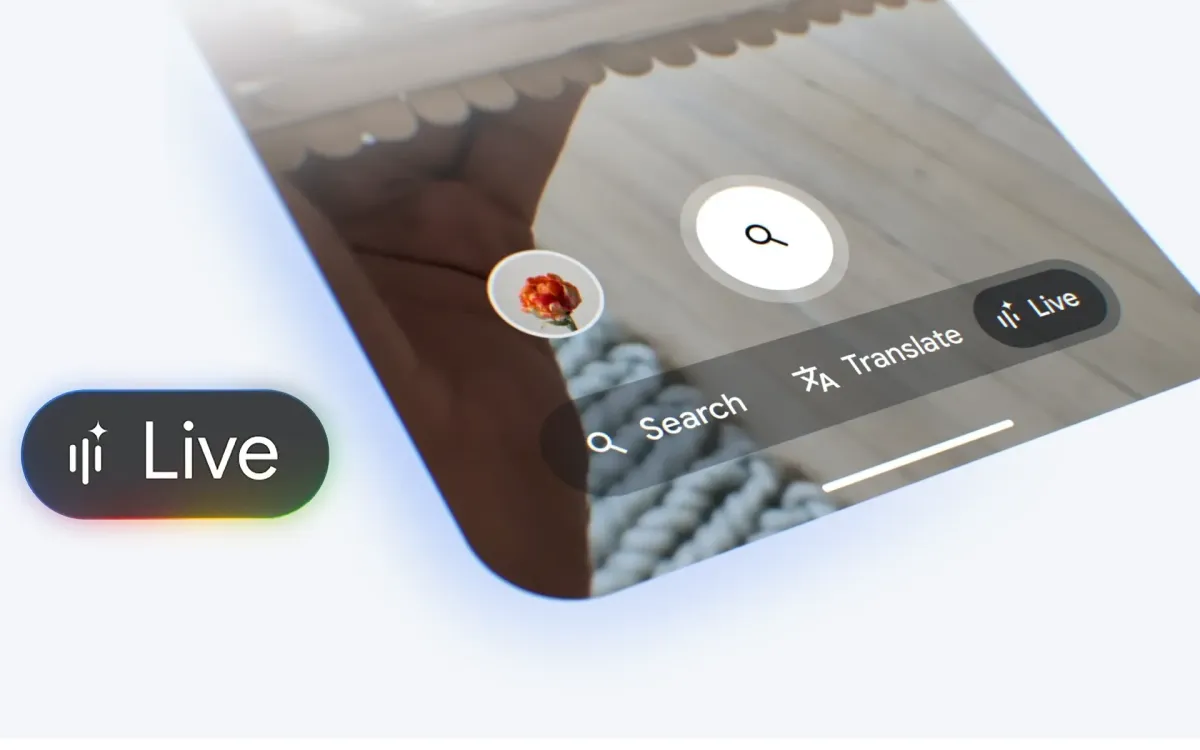

Google announced on September 24, 2025, that Search Live is now available in English to all users in the United States, with no Labs opt-in required. The feature enables interactive voice conversations with AI Mode and lets users share their phone's camera feed with Search for real-time visual assistance.

Users can access Search Live through the Google app on Android and iOS devices by tapping the new Live icon under the search bar. Once activated, the system processes spoken questions while viewing what the user's camera sees, providing immediate responses and connecting users with relevant web links. For those already using Google Lens to capture images, a new Live option appears at the bottom of the screen, with camera sharing enabled by default for instant conversation about visible objects.

Subscribe PPC Land newsletter ✉️ for similar stories like this one. Receive the news every day in your inbox. Free of ads. 10 USD per year.

The technical implementation represents a shift in how search engines process multimodal inputs. Traditional search required users to type queries or upload static images separately. Search Live integrates voice recognition, computer vision, and natural language processing into a continuous stream. The system interprets visual context from the camera feed simultaneously with spoken questions, eliminating the manual step of describing objects or typing product model numbers.

Liza Ma, Director of Product Management for Search, outlined five practical applications for the new capability. When traveling, users can hold hands-free conversations about a neighborhood while getting ready to leave their hotel, then turn on the camera while exploring to ask about landmarks or buildings they encounter. The system handles these queries without requiring users to pause and type.

For individuals learning new skills, Search Live functions as an on-demand advisor. Someone attempting to prepare matcha can point their camera at unfamiliar tools in a matcha set and receive explanations about each item's purpose. The system also responds to questions about ingredient substitutions, such as identifying low-sugar or dairy-free alternatives for a matcha latte, without requiring separate searches for each component.

Technical troubleshooting represents another application area. When setting up home theater systems or other electronics, users can point their camera at cables and ports while asking which connections go where. Search interprets the visual information directly rather than requiring users to manually identify makes, models, or cable types. The system processes this contextual data and provides step-by-step guidance with options for follow-up questions or links to detailed documentation.

The educational use case involves using Search as what Ma described as an "AI-powered learning partner." During at-home science experiments, such as the elephant toothpaste demonstration, the system observes chemical reactions as they occur through the camera. Students or parents can ask Search to explain the underlying scientific principles in real time or receive links to additional experiments. This application combines visual recognition of physical processes with educational content delivery.

For recreational activities, Search Live processes multiple objects simultaneously. When choosing from several board games, users can point their camera at an entire collection and receive advice on which game suits their group, rather than examining each box individually or conducting separate searches for each title.

The launch follows testing through Google's Labs program, which allows select users to experiment with features before wide release. By removing the opt-in requirement, Google made the feature available to an estimated 100 million U.S. users who have the Google app installed on their mobile devices. The company has not announced availability timelines for other languages or regions.

From a technical standpoint, Search Live requires continuous processing of audio and video streams. The system must perform speech-to-text conversion, understand conversational context across multiple exchanges, analyze camera input through computer vision algorithms, and retrieve relevant information from Google's index—all within seconds to maintain the real-time interaction that defines the feature.
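To make that sequence of stages concrete, the following Python sketch walks one conversational turn through transcription, visual recognition, context-aware query building, and retrieval, then checks the elapsed time against a latency budget. It is an illustration under stated assumptions, not Google's implementation: every class and method, the toy keyword index, the example URL, and the two-second budget are hypothetical, and the speech-to-text and computer-vision steps are stubs that accept already-decoded text and object labels.

```python
import time
from dataclasses import dataclass, field


@dataclass
class Turn:
    """One spoken question plus the object labels seen in the camera frame."""
    transcript: str
    frame_labels: list[str]


@dataclass
class LiveSession:
    """Hypothetical session state that keeps earlier turns for follow-up questions."""
    index: dict[str, str] = field(default_factory=dict)  # toy keyword -> link map
    history: list[Turn] = field(default_factory=list)
    budget_s: float = 2.0  # assumed target; the article says only "within seconds"

    def transcribe(self, audio: bytes) -> str:
        # Stub for speech-to-text: the "audio" here is already UTF-8 text.
        return audio.decode("utf-8")

    def recognize(self, frame: list[str]) -> list[str]:
        # Stub for computer vision: the "frame" is a list of object labels,
        # de-duplicated and sorted as if it were detector output.
        return sorted(set(frame))

    def build_query(self, turn: Turn) -> str:
        # Merge labels from earlier turns so a follow-up like "and this one?"
        # keeps the visual context of the whole conversation.
        seen = {label for past in self.history for label in past.frame_labels}
        seen.update(turn.frame_labels)
        return f"{turn.transcript} [visible: {', '.join(sorted(seen))}]"

    def retrieve(self, query: str) -> list[str]:
        # Stub for index lookup: return links whose keyword appears in the query.
        return [url for keyword, url in self.index.items() if keyword in query]

    def handle(self, audio: bytes, frame: list[str]) -> tuple[str, list[str], bool]:
        """Run one turn end to end and report whether it met the latency budget."""
        start = time.perf_counter()
        turn = Turn(self.transcribe(audio), self.recognize(frame))
        query = self.build_query(turn)
        links = self.retrieve(query)
        self.history.append(turn)
        within_budget = time.perf_counter() - start <= self.budget_s
        return query, links, within_budget


session = LiveSession(index={"whisk": "https://example.com/matcha-whisk"})
query, links, ok = session.handle(b"what is this tool for?", ["whisk", "bowl", "whisk"])
print(query)  # prints: what is this tool for? [visible: bowl, whisk]
```

Carrying labels from earlier turns into each new query is one simple way to keep a follow-up grounded in what the camera already showed; a production system would presumably rely on a far richer model of conversational context.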

For the advertising and marketing community, this development matters because it fundamentally changes how consumers discover products and services. PPC Land previously reported on conversational search capabilities, noting their impact on traditional keyword-based advertising strategies. Search Live extends this shift by adding visual context to voice queries.

When someone points their camera at a product category—whether matcha supplies, board games, or electronic cables—and asks for recommendations, the search results depend on what the system visually identifies rather than text keywords the user types. This changes how products must be indexed and described in search databases. Manufacturers and retailers may need to ensure their products are recognizable through visual search algorithms, not just keyword optimization.

The hands-free nature of Search Live also affects when and where searches occur. Users conducting queries while applying sunscreen, setting up equipment, or moving through physical spaces represent different intent patterns than those sitting at desktops typing searches. These contextual differences influence what information users seek and how they evaluate results.


For troubleshooting queries specifically, Search Live competes directly with manufacturer support documentation and third-party tutorial content. If users can receive immediate visual guidance through Search, they may spend less time navigating to individual brand websites or YouTube instructional videos. This potentially reduces direct traffic to content that traditionally ranked well for "how to" queries.

The integration with Google Lens creates a pathway for visual search to become conversational. Previously, Lens provided static image analysis with text results. Adding the Live option transforms this into an interactive session where users can ask follow-up questions, request clarifications, or explore related topics without restarting the search process. Each conversation potentially generates multiple query opportunities where a traditional search would have ended after one result page.

The educational application raises questions about how students research topics for homework and projects. If Search serves as a learning partner explaining science experiments in real time, this competes with educational content publishers and tutoring services. The feature could reduce demand for certain types of educational content while increasing demand for content that Search links to for deeper exploration.

Google's decision to make Search Live available without Labs enrollment indicates confidence in the technical infrastructure supporting continuous multimodal processing at scale. The company previously limited access to test system performance and accuracy. Opening it to all U.S. users suggests the backend systems can handle concurrent voice and video streams from millions of devices.

The feature requires users to grant camera and microphone permissions to the Google app, which involves privacy considerations. The system processes visual and audio data to generate responses, though Google has not detailed how long this data is retained or how it might be used for other purposes such as improving algorithms or informing advertising systems.

The advertising industry has been tracking how AI-generated responses affect click-through rates to traditional search results. Search Live extends this concern by potentially answering questions completely through voice responses, reducing the likelihood that users click through to websites at all. For publishers and advertisers who depend on search traffic, understanding whether Search Live increases or decreases website visits becomes a critical measurement challenge.
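One basic way to frame that measurement is to compare click-through rates between conventional results and Search Live sessions. The Python sketch below assumes a hypothetical log of `(surface, clicked)` records with made-up counts; the announcement does not say whether Google's reporting tools separate Search Live traffic, so the surface labels `"typed"` and `"live"` are placeholders.

```python
from collections import defaultdict


def ctr_by_surface(sessions: list[tuple[str, bool]]) -> dict[str, float]:
    """Click-through rate per search surface from (surface, clicked) records."""
    shown: dict[str, int] = defaultdict(int)
    clicked: dict[str, int] = defaultdict(int)
    for surface, did_click in sessions:
        shown[surface] += 1
        clicked[surface] += int(did_click)
    return {s: clicked[s] / shown[s] for s in shown}


def relative_change(baseline: float, variant: float) -> float:
    """Relative change of the variant CTR versus the baseline (-0.25 means 25% lower)."""
    if baseline == 0:
        raise ValueError("baseline CTR must be non-zero")
    return (variant - baseline) / baseline


# Hypothetical counts: 8 of 20 typed sessions click out, 3 of 20 Live sessions do.
logs = [("typed", True)] * 8 + [("typed", False)] * 12 \
     + [("live", True)] * 3 + [("live", False)] * 17
rates = ctr_by_surface(logs)
print(rates)  # prints {'typed': 0.4, 'live': 0.15}
print(f"{relative_change(rates['typed'], rates['live']):.1%}")  # prints -62.5%
```

A raw CTR gap like this does not control for intent: as noted above, hands-free queries made while setting up equipment differ from typed desktop searches, so a fair comparison would need to segment sessions by query type.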

The capability to ask about "all of the games at once" demonstrates batch visual recognition—the system identifying and comparing multiple distinct objects in a single camera frame. This technical capability could apply to other product categories, enabling comparison shopping through visual input rather than navigating through multiple product pages. Such functionality would significantly impact e-commerce search behavior and potentially reduce time spent on retail websites.
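The comparison step can be sketched as a filter-and-rank over every object recognized in a single frame. In this Python example, the detector output, the `Detection` fields, the 0.6 confidence threshold, the placeholder titles, and the assumption that player counts and play times are looked up once a title is recognized are all hypothetical; Google has not described how Search Live performs this step.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One game recognized in a single camera frame (hypothetical detector output)."""
    title: str
    confidence: float
    min_players: int
    max_players: int
    minutes: int


def recommend(detections: list[Detection], group_size: int,
              max_minutes: int, min_confidence: float = 0.6) -> list[str]:
    """Compare every recognized game at once and rank the ones that fit the group."""
    fits = [
        d for d in detections
        if d.confidence >= min_confidence  # drop uncertain recognitions
        and d.min_players <= group_size <= d.max_players
        and d.minutes <= max_minutes
    ]
    # Prefer shorter games first, then higher recognition confidence.
    fits.sort(key=lambda d: (d.minutes, -d.confidence))
    return [d.title for d in fits]


frame = [
    Detection("Game A", 0.95, 2, 4, 30),
    Detection("Game B", 0.90, 3, 6, 45),
    Detection("Game C", 0.40, 2, 8, 20),  # low confidence, ignored
    Detection("Game D", 0.85, 5, 10, 60),
]
print(recommend(frame, group_size=4, max_minutes=60))  # prints ['Game A', 'Game B']
```

Filtering on recognition confidence before ranking keeps a misidentified box from being recommended; the ranking key itself is an arbitrary choice for illustration.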

Search Live's launch comes as multiple technology companies deploy multimodal AI systems. The competitive landscape for search has intensified as companies integrate large language models with visual and audio processing. Google's move to make this feature standard rather than experimental suggests urgency in establishing user habits around voice and camera-based search before alternative platforms gain adoption.

The specific choice to launch in English in the United States first reflects both technical and market considerations. English-language training data for AI models tends to be more abundant, and the U.S. market has high smartphone penetration with users already familiar with voice assistants and visual search tools. Expansion to other languages requires additional training data and testing for accuracy across different linguistic patterns and cultural contexts.

The September 24 launch date makes Search Live available for the fourth-quarter shopping season, when consumer product searches typically increase. The timing allows Google to gather usage data during a high-volume period, which could inform refinements before potential expansion to additional markets.


Timeline

- 2023: Google launched a conversational experience in Google Ads, testing natural language interactions

- 2024: Google introduced AI Overviews in Search, beginning the integration of AI-generated responses

- Earlier in 2025: Search Live becomes available through the Labs program as an opt-in beta feature

- September 24, 2025: Google officially launches Search Live in English for all U.S. users without requiring Labs opt-in

- September 24, 2025: Feature becomes accessible through the Live icon in the Google app for Android and iOS devices


Summary

Who: Google launched the feature for all U.S. users of the Google app on Android and iOS. Director of Product Management for Search Liza Ma announced the availability.

What: Search Live enables interactive voice conversations with AI Mode and real-time camera feed sharing. Users can ask questions aloud while showing their camera view to receive immediate visual and verbal assistance with web links for further information.

When: The official launch occurred on September 24, 2025, removing the previous Labs opt-in requirement and making the feature available to all eligible U.S. users.

Where: The feature launched in the United States in English, accessible through the Google mobile app on Android and iOS devices. Users access it via a new Live icon under the search bar or through Google Lens.

Why: The launch addresses user needs for hands-free, real-time assistance across multiple scenarios including travel exploration, learning new skills, technical troubleshooting, educational projects, and entertainment decisions. The feature matters to the marketing community because it changes how consumers discover and research products through visual recognition rather than text keywords, potentially affecting traffic patterns, advertising strategies, and content optimization approaches.