The technology industry confronts mounting pressure to combat misinformation and synthetic media as artificial intelligence tools become more accessible. Google's latest content transparency initiative addresses these concerns by embedding verification capabilities directly into its Gemini application, extending detection features that previously applied only to static images.

According to Google's announcement on December 18, 2025, the Gemini app now enables users to verify whether videos were created or edited using Google AI. The verification process relies on SynthID watermarking technology, which Google describes as "imperceptible" and embedded across both audio and visual tracks of AI-generated content.

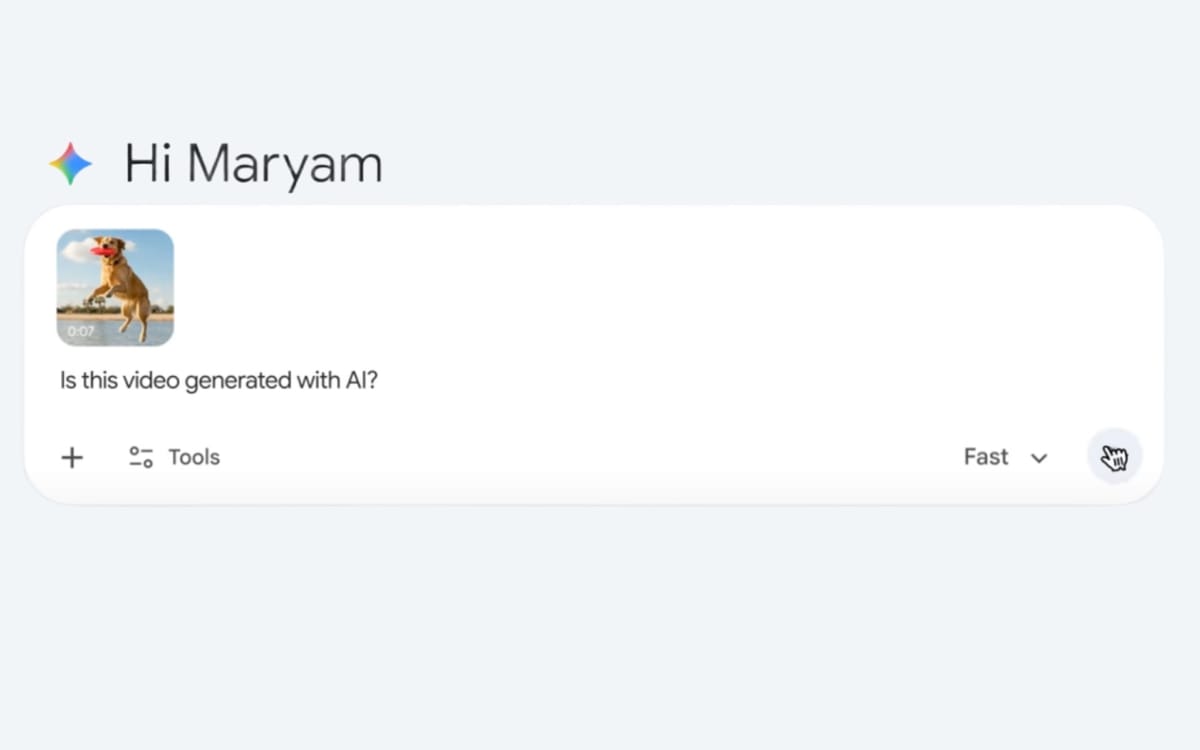

Users can upload videos directly to Gemini and ask questions such as "Was this generated using Google AI?" The system then scans for SynthID markers and provides specific feedback about which segments contain AI-generated elements. According to the announcement, Gemini might return responses like "SynthID detected within the audio between 10-20 secs. No SynthID detected in the visuals," giving users granular information about synthetic content.

The feature supports video files up to 100 MB and 90 seconds in length. Both image and video verification capabilities are now available in all languages and countries where the Gemini app operates, according to the company's statement.
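Teams that script around these limits could reject oversized clips before anyone uploads them. The sketch below is hypothetical and makes these assumptions:

- The function name is illustrative.
- "100 MB" is treated as 100 × 1,000,000 bytes, because the announcement does not say whether decimal or binary megabytes apply.
- The caller supplies the file size and duration, for example from a media probe.

It does not call any Google API.

```python
# Hypothetical pre-upload check against the limits stated in the announcement.
# Assumption: "100 MB" is decimal (100 * 1_000_000 bytes); Google has not said.
MAX_BYTES = 100 * 1_000_000
MAX_SECONDS = 90.0


def check_upload_limits(size_bytes: int, duration_seconds: float) -> list[str]:
    """Return human-readable problems; an empty list means the clip fits both limits."""
    problems = []
    if size_bytes > MAX_BYTES:
        problems.append(
            f"file is {size_bytes / 1_000_000:.1f} MB; limit is {MAX_BYTES // 1_000_000} MB"
        )
    if duration_seconds > MAX_SECONDS:
        problems.append(
            f"clip is {duration_seconds:.1f} s; limit is {MAX_SECONDS:.0f} s"
        )
    return problems


if __name__ == "__main__":
    print(check_upload_limits(45_000_000, 60))    # within both limits
    print(check_upload_limits(150_000_000, 120))  # too large and too long
```

Returning a list rather than a boolean lets a workflow report every reason a clip was rejected in one pass.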

Subscribe PPC Land newsletter ✉️ for similar stories like this one

Technical implementation of SynthID watermarking

Google's approach to content authentication relies on SynthID, a watermarking technology that operates across multiple media formats. The system embeds identifying markers into AI-generated content during the creation process, so later verification checks can detect them. Unlike traditional visible watermarks, SynthID is designed to be imperceptible to people while remaining machine-readable.

The dual-track implementation—covering both audio and visual components—reflects the complexity of video content. This architecture allows Gemini to distinguish between fully synthetic videos, partially edited content, and media that combines AI-generated audio with authentic visuals or vice versa. The granular feedback mechanism provides users with timestamp-specific information, enabling them to identify exactly which portions of a video contain synthetic elements.
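Gemini returns its feedback as natural-language text. Anyone logging results at scale may therefore want to turn it into structured records. The parser below is a hypothetical sketch built only around the single example response quoted in the announcement. Google has not published a fixed response format, so the regular expressions are assumptions that would need adjusting against real output.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical parser for Gemini's free-text SynthID feedback.
# Assumption: responses resemble the one example Google quoted, e.g.
# "SynthID detected within the audio between 10-20 secs. No SynthID detected in the visuals."
DETECTED = re.compile(
    r"SynthID detected within the (audio|visuals) between (\d+)\s*-\s*(\d+) secs",
    re.IGNORECASE,
)
NOT_DETECTED = re.compile(r"No SynthID detected in the (audio|visuals)", re.IGNORECASE)


@dataclass
class TrackResult:
    track: str                     # "audio" or "visuals"
    detected: bool
    start_s: Optional[int] = None  # segment start in seconds, when a range is given
    end_s: Optional[int] = None    # segment end in seconds, when a range is given


def parse_synthid_feedback(text: str) -> list[TrackResult]:
    """Extract per-track detection results from one response string."""
    results = []
    for m in DETECTED.finditer(text):
        results.append(
            TrackResult(m.group(1).lower(), True, int(m.group(2)), int(m.group(3)))
        )
    for m in NOT_DETECTED.finditer(text):
        results.append(TrackResult(m.group(1).lower(), False))
    return results


if __name__ == "__main__":
    sample = ("SynthID detected within the audio between 10-20 secs. "
              "No SynthID detected in the visuals.")
    for result in parse_synthid_feedback(sample):
        print(result)
```

Keeping audio and visual results as separate records mirrors the dual-track feedback. A clip with synthetic audio over authentic footage then shows up as one positive and one negative entry.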

This verification capability represents an expansion of Google's content transparency infrastructure. The company has faced increasing scrutiny over AI-generated content as synthetic media becomes more sophisticated and difficult to distinguish from authentic recordings. Digital marketing professionals and content creators have expressed concerns about the potential for AI-generated videos to mislead audiences, particularly in advertising contexts where authenticity carries legal and ethical implications.

Implications for content authentication standards

The verification feature arrives as platforms and publishers grapple with establishing authentication standards for synthetic media. Several major platforms have implemented labeling requirements for AI-generated content in advertising, while regulatory bodies examine disclosure requirements for synthetic media in political and commercial contexts.

Google's implementation differs from metadata-based approaches that rely on file information that can be easily stripped or modified. According to the announcement, SynthID operates as an embedded watermark that persists even when videos are edited or reformatted. This persistence addresses a critical limitation of metadata-based authentication, which becomes ineffective when files are re-encoded or processed through editing software.

The detection system's reliance on Gemini's own reasoning introduces another layer of analysis beyond simple watermark identification. Gemini applies contextual understanding to assess videos, potentially identifying AI-generated content based on visual or audio characteristics even when watermarks are absent or degraded. This dual approach, combining watermark detection with pattern recognition, may prove more robust than either method alone.

Content verification has become particularly relevant in digital advertising, where transparency requirements continue to expand. The Federal Trade Commission has increased enforcement against deceptive advertising practices, including the use of synthetic endorsements and fabricated testimonials. Advertisers utilizing AI-generated content face heightened scrutiny regarding disclosure and authenticity claims.

Industry context and competitive landscape

Google's verification tools emerge amid broader industry efforts to establish content provenance standards. The Coalition for Content Provenance and Authenticity (C2PA), which includes major technology companies, has developed technical specifications for embedding authentication information in digital media. However, adoption remains fragmented, with different platforms implementing varying approaches to content verification.

The 100 MB file size limit and 90-second duration constraint likely reflect practical limits on processing capacity. These parameters reduce the feature's usefulness for longer-form content and high-resolution video files. That may limit its value for professional video producers and marketing teams working with full-length advertisements or promotional content.
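The two limits together cap the average bitrate of a maximum-length clip. The back-of-envelope calculation below shows the highest average bitrate a 90-second video can have while staying under the size cap. It assumes decimal megabytes and ignores container overhead; the figure is derived arithmetic, not a number published by Google.

```latex
\bar{r}_{\max}
  = \frac{100 \times 10^{6}\ \text{bytes} \times 8\ \text{bits/byte}}{90\ \text{s}}
  = \frac{8 \times 10^{8}\ \text{bits}}{90\ \text{s}}
  \approx 8.9\ \text{Mbit/s}
```

Shorter clips leave more headroom: the same 100 MB spread over 30 seconds allows roughly 26.7 Mbit/s. Production intermediates such as Apple ProRes typically run well above 100 Mbit/s at 1080p, so a full-length master would normally need to be transcoded before upload.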

Marketing professionals have increasingly integrated AI-generated content into campaigns, from product demonstrations to social media advertisements. The ability to verify content authenticity may become essential as consumers and regulators demand greater transparency about synthetic media. Google's verification tool provides a mechanism for advertisers to demonstrate whether content was created using the company's AI systems, potentially serving as evidence of compliance with emerging disclosure requirements.

The announcement did not address whether Gemini can recognize AI-generated content created using competitors' tools. SynthID checks only reveal whether Google AI was involved. Users therefore cannot rely on them to verify videos created with OpenAI's Sora, Meta's video generation tools, or other platforms. This platform-specific approach raises questions about interoperability and the potential need for universal verification standards that work across different AI systems.

Privacy and accuracy considerations

The verification process requires users to upload videos to Google's servers for analysis. The announcement did not specify data retention policies or whether uploaded videos are used to train Google's AI models. Marketing professionals handling proprietary or client content may require clarity on these data handling practices before utilizing the verification feature for commercial purposes.

Accuracy represents another critical consideration. The announcement did not provide performance metrics regarding false positive or false negative rates. Verification systems can incorrectly flag authentic content as synthetic or fail to detect AI-generated material that lacks watermarks or has been significantly altered. Understanding these error rates becomes essential for users relying on verification results for content authentication decisions.

The feature's availability across all Gemini-supported languages and countries suggests Google has prioritized broad accessibility. However, the announcement did not address whether verification accuracy varies across different languages or content types. Audio watermarking, in particular, may face challenges with content that includes multiple languages or significant background noise.

Market impact and adoption challenges

Content creators and marketing teams face practical questions about implementing verification tools in production workflows. The manual upload process—requiring users to submit individual videos for verification—may prove impractical for teams processing large volumes of content. Integration with content management systems or automated verification workflows was not mentioned in the announcement.

The verification capability may influence how advertisers approach AI-generated content in campaigns. Platforms including Meta and Google have announced labeling requirements for synthetic media in advertisements, creating compliance obligations for marketers. Having verification tools accessible through Gemini could streamline compliance processes, though the platform-specific nature of the tool limits its applicability to content created using Google's systems.

Trust and Safety teams at publishers and platforms have expressed interest in automated content verification as volumes of synthetic media increase. Google's approach of embedding verification directly into a consumer-facing application like Gemini differs from enterprise-focused authentication tools designed for professional content review workflows. The consumer accessibility may drive broader awareness of content authenticity issues while potentially lacking the features required for professional content moderation operations.

Technical limitations and future directions

The 90-second duration limit represents a significant constraint for many commercial applications. Television advertisements, product demonstrations, and promotional videos frequently exceed this length. The restriction suggests the feature targets short-form content typical of social media platforms rather than longer commercial productions.
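For longer spots, a team might consider checking a cut in consecutive windows no longer than the limit. The helper below is a hypothetical sketch with two caveats:

- It only computes window boundaries. Actual trimming would need a separate tool such as ffmpeg.
- It assumes SynthID results for separate segments are meaningful on their own, which the announcement does not confirm.

```python
def plan_segments(duration_s: float, max_len_s: float = 90.0) -> list[tuple[float, float]]:
    """Split [0, duration_s) into consecutive windows no longer than max_len_s.

    Hypothetical helper: the 90-second default mirrors Gemini's stated limit.
    """
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")
    if max_len_s <= 0:
        raise ValueError("max_len_s must be positive")
    segments = []
    start = 0.0
    while start < duration_s:
        end = min(start + max_len_s, duration_s)  # last window may be shorter
        segments.append((start, end))
        start = end
    return segments


if __name__ == "__main__":
    # A two-minute (120 s) commercial becomes a 90 s window plus a 30 s window.
    print(plan_segments(120))
```

Splitting addresses only the duration cap. Each segment must still fit under the file size limit, and any re-encoding could itself affect watermark detection.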

File size limitations similarly restrict applicability to compressed videos rather than high-resolution source files. Professional video productions often utilize significantly larger file sizes to maintain quality, particularly for content intended for broadcast or cinema distribution. These constraints position the feature as suitable for detecting synthetic content in consumer-facing platforms rather than professional production environments.

The announcement described the feature as an expansion of content transparency tools, suggesting Google views video verification as part of a broader authentication strategy. The company previously implemented image verification capabilities, and the parallel implementation across media types indicates a systematic approach to content provenance. Future developments may address current limitations or extend verification to additional content formats.

According to the announcement, Gemini uses "its own reasoning" to analyze videos beyond simple watermark detection. This phrasing suggests the system applies pattern recognition and content analysis to assess whether videos exhibit characteristics consistent with AI generation. Combining watermark detection with content analysis could identify synthetic content even when watermarks have been removed or degraded through editing and re-encoding.

Regulatory and compliance context

Content authentication tools arrive as regulators worldwide examine disclosure requirements for synthetic media. The European Union's AI Act includes provisions regarding transparency for AI-generated content, while individual jurisdictions have implemented varying requirements. The European Union has increased scrutiny of technology platforms, and content authenticity represents one dimension of ongoing regulatory attention.

Marketing professionals operating across multiple jurisdictions face complex compliance requirements. Verification tools that definitively identify AI-generated content could simplify compliance by providing evidence of content origins. However, the platform-specific nature of Google's verification—limited to content created using Google AI—means marketers using multiple tools would require verification capabilities for each platform.

The timing of the announcement, on December 18, 2025, positions the feature for availability during the year-end period when advertising activity typically increases. Marketing teams planning campaigns for 2026 may need to evaluate whether verification capabilities influence their choice of content creation tools or affect workflow designs.

Content transparency has become increasingly relevant for digital advertising as platforms implement stricter policies regarding synthetic media. Google's own advertising policies have evolved to require disclosures for digitally altered content, and the verification feature provides a mechanism for advertisers to demonstrate compliance with these requirements when using Google's AI tools.

The announcement positions video verification as a consumer protection feature, enabling individuals to assess content authenticity. However, the implications extend to commercial contexts where content provenance affects legal compliance, brand safety, and audience trust. Marketing professionals increasingly confront questions about AI usage in content creation, and verification tools provide a means of addressing these concerns with specific, verifiable information rather than general assurances.

Google's implementation choices—including file size limits, duration constraints, and platform-specific detection—reflect current technical capabilities and strategic priorities. The accessibility of the feature through Gemini, a consumer-facing application, suggests Google aims to democratize content verification rather than limiting authentication tools to professional or enterprise contexts. This approach may accelerate awareness of content authenticity issues among general audiences while potentially influencing expectations for transparency across the digital advertising industry.

Timeline

- December 18, 2025: Google announces video verification capability in Gemini app

- 2025: Google requires AI disclosure labels in political advertisements

- 2025: Google implements AI-generated image labeling in ads and search

- 2024: Meta announces AI-generated content labeling requirements

- 2024: FTC intensifies enforcement against deceptive advertising practices

Summary

Who: Google announced the video verification feature for its Gemini application, targeting users who need to authenticate video content and determine whether it was created or edited using Google AI.

What: The feature enables users to upload videos up to 100 MB and 90 seconds long to Gemini and ask whether content was generated using Google AI. The system scans for imperceptible SynthID watermarks embedded across audio and visual tracks, providing timestamp-specific feedback about which segments contain synthetic elements. The verification tool operates in all languages and countries supported by Gemini.

When: Google announced the capability on December 18, 2025, expanding content transparency tools that previously covered only image verification.

Where: The verification feature operates within the Gemini application, accessible to users in all countries where Gemini is available. Users upload videos directly through the application interface for analysis.

Why: The feature addresses mounting concerns about synthetic media and misinformation as AI-generated content becomes more sophisticated and widespread. Content authentication has become particularly relevant for digital advertising, where regulatory bodies and platforms have implemented disclosure requirements for AI-generated material. The tool provides a mechanism for users to verify content authenticity and for creators to demonstrate compliance with transparency requirements.