The integration of artificial intelligence into our daily lives, workplaces, and educational systems has accelerated dramatically since the release of ChatGPT in late 2022. A global study by the University of Melbourne and KPMG International provides important insights into how people worldwide perceive, trust, and use AI systems. Surveying over 48,000 people across 47 countries, the research illuminates the complex attitudes toward this transformative technology.

The findings reveal a nuanced picture: while AI adoption continues to grow, significant disparities exist between advanced and emerging economies in trust levels, perceived benefits, and usage patterns. This comprehensive global perspective offers valuable guidance for policymakers, organizational leaders, and education providers navigating the integration of AI into society.

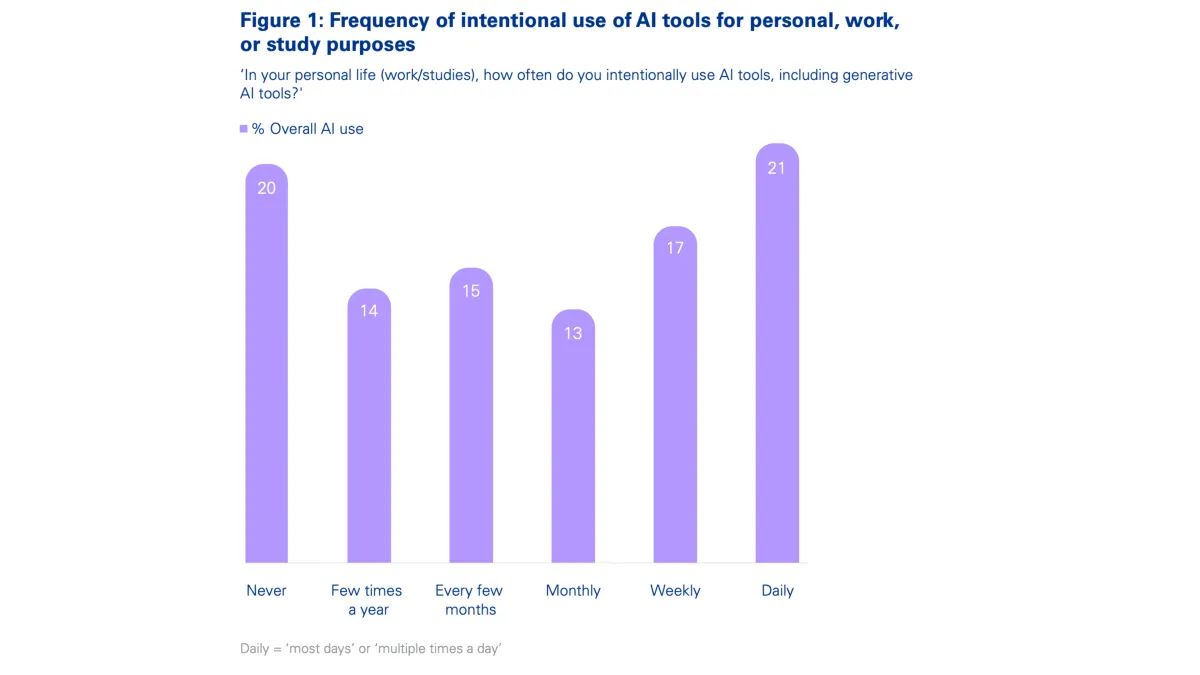

Two-thirds (66%) of people worldwide now intentionally use AI on a regular basis, with 38% using it weekly or daily. This widespread adoption reflects how accessible AI systems have become, particularly general-purpose generative AI tools with intuitive interfaces.

However, trust remains a significant challenge. Although 58% view AI systems as generally trustworthy, a majority (54%) remain wary of actually placing their trust in them. People typically have more confidence in AI's technical ability to provide useful outputs than in its safety, security, and ethical soundness.

This trust gap helps explain the emotional ambivalence many feel toward AI. Most respondents report feeling optimistic and excited about AI technologies while also worrying about its potential negative consequences. This mixed sentiment reflects the tension between realizing AI's substantial benefits and managing its real risks.

The emerging-advanced economy divide

One of the study's most striking findings is the pronounced difference in AI perceptions between advanced and emerging economies:

In emerging economies such as India, China, Nigeria, the UAE, Saudi Arabia, and Egypt, people report significantly higher levels of:

- AI use (80% vs. 58% in advanced economies)

- AI training and education (50% vs. 32%)

- AI knowledge and literacy (64% vs. 46%)

- Self-reported ability to use AI effectively (74% vs. 51%)

- Trust in AI systems (57% vs. 39%)

- Acceptance of AI use in society (84% vs. 65%)

These differences extend to the workplace, where 72% of employees in emerging economies use AI regularly at work, compared with 49% in advanced economies. Employees in emerging economies also report greater organizational support for AI adoption and stronger governance of responsible AI use.

This pattern may reflect the greater relative benefits AI offers in emerging economies where it can help fill critical resource gaps and provide greater economic opportunities. The technology may enable these countries to overcome barriers and accelerate development in ways that create more visible and immediate advantages.

AI in the workplace

The study reveals that 58% of employees worldwide now intentionally use AI tools in their work, with nearly a third using them weekly or daily. General-purpose generative AI tools like ChatGPT are by far the most common, used by almost three-quarters of AI-using employees.

Most employees report significant performance benefits from AI use, including:

- Increased work efficiency (67%)

- Better access to accurate information (61%)

- Enhanced innovation and idea generation (59%)

- Higher quality work and decisions (58%)

- Better skill development and knowledge sharing (55%)

However, the research also highlights concerning patterns of use that create risks for organizations:

Almost half of employees admit to using AI in ways that contravene organizational policies, such as uploading sensitive company information to public AI tools. More than half have presented AI-generated content as their own work and avoided revealing when they have used AI tools. This complacent use persists even though 56% report having made mistakes due to AI.

These findings point to a critical governance gap: in advanced economies, only about half of employees report that their organization offers responsible AI training or has appropriate policies to guide AI use. Despite the widespread adoption of generative AI tools, only 41% of employees say their organization has a policy guiding the use of these tools.

Future workforce implications

The findings regarding student use of AI raise important questions about skills development. Four in five students (83%, predominantly tertiary) regularly use AI in their studies, with half using it weekly or daily. Students report significant benefits from AI use, including:

- Increased efficiency (69%)

- Better access to information (59%)

- Higher quality work (56%)

- More personalized learning (51%)

- Reduced workload (55%) and stress (53%)

However, the study found that most students have used AI inappropriately, contravening educational guidelines, and two-thirds have not been transparent about their AI use. Over three-quarters report feeling unable to complete their work without AI and putting less effort into their studies because they know they can rely on it.

Only half of students report their education provider has policies or training to support responsible AI use, suggesting a significant gap in institutional guidance. This raises concerns about potential negative impacts on critical thinking skills, collaboration, and academic integrity.

Public attitudes toward AI regulation

The research shows strong public support for AI regulation across all countries surveyed. Seventy percent believe AI regulation is necessary, but only 43% believe current laws are adequate to ensure safe AI use. This low confidence in existing laws is particularly notable given that 83% of respondents were unaware of any laws or regulations applying to AI in their country.

People expect a multi-pronged regulatory approach, with strong support for:

- International laws and regulation (76%)

- National government regulation (69%)

- Co-regulation between government and industry (71%)

There is also overwhelming support (87%) for stronger laws and actions to combat AI-generated misinformation, reflecting widespread concern about its impact on trust in online content and electoral integrity.

Implications for different stakeholders

The research findings have significant implications for multiple stakeholders:

- For policymakers: The findings underscore the need to develop and implement effective AI regulation that addresses public concerns while supporting responsible innovation. The strong preference for international coordination suggests regulatory approaches should aim for global alignment where possible.

- For organizational leaders: The gap between AI adoption and responsible governance highlights the importance of developing comprehensive AI strategies that include clear policies, training programs, and governance mechanisms. Organizations must balance encouraging AI innovation with ensuring responsible use.

- For education providers: The high rates of AI use among students, coupled with widespread inappropriate use, suggest educational institutions need to rethink assessment approaches and develop stronger frameworks for AI integration that preserve critical skill development.

- For individuals: The findings highlight the importance of AI literacy as a core capability for navigating an AI-augmented world. Those with greater AI understanding demonstrate more responsible use and realize more benefits from AI technologies.

Timeline

- Pre-2022: Limited general public exposure to AI tools

- Late 2022: Release of ChatGPT marks turning point in public awareness and access to AI

- 2023-2024: Dramatic increase in organizational and personal AI adoption

- 2024: Trust in AI begins to decline as users become more aware of limitations

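- February 2025: The first obligations of the EU AI Act, including its prohibitions on certain AI practices and its AI literacy requirements, begin to apply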

- May 2025: The University of Melbourne and KPMG study reveals global patterns in AI trust and adoption

The global study provides compelling evidence that AI adoption continues to accelerate, delivering substantial benefits while also presenting significant challenges that need addressing. The findings support four distinct but complementary pathways to trusted and sustainable AI adoption: enhancing AI literacy through training and education; deploying AI in human-centric ways that deliver clear benefits; addressing concerns about AI risks through effective mitigation; and establishing adequate safeguards, regulation, and laws to promote safe AI use.

As AI becomes increasingly embedded in work, education, and daily life, addressing trust concerns, governance gaps, and AI literacy needs will be essential to realizing AI's potential benefits while mitigating its risks. The striking differences between advanced and emerging economies suggest that tailored approaches may be needed in different contexts.

The research underscores that effective stewardship of AI requires sustained commitment and intentional strategies from policymakers, organizational leaders, and education providers to ensure AI enhances human potential and contributes positively to society.