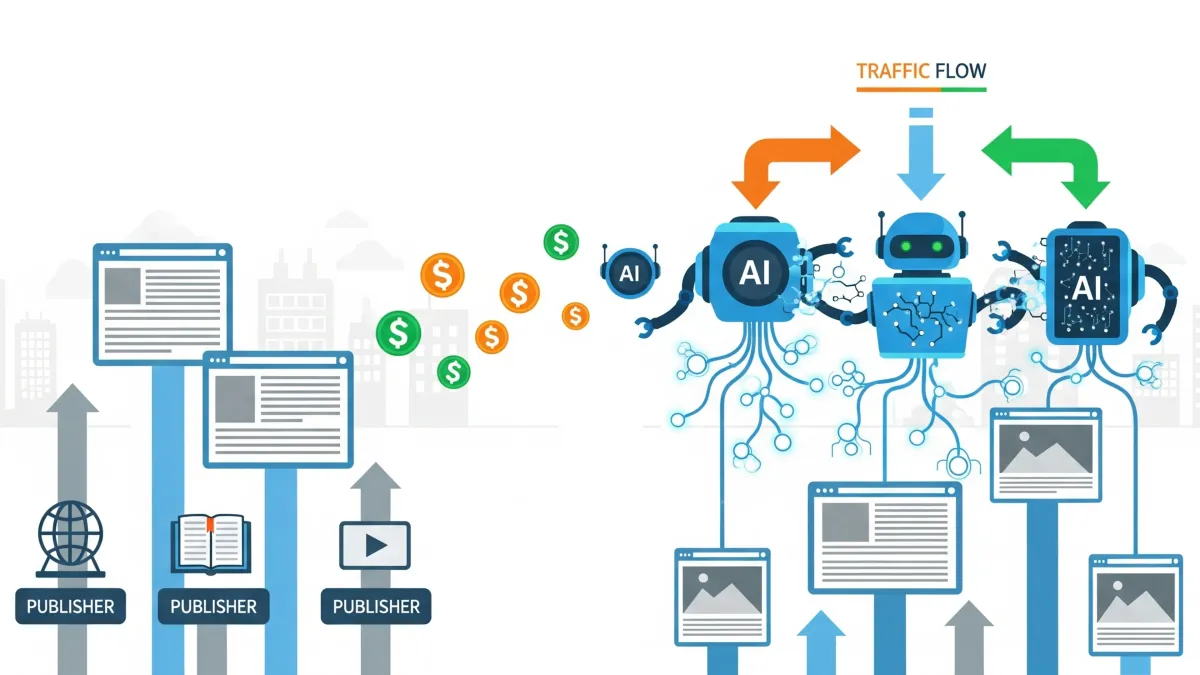

The Interactive Advertising Bureau Technology Laboratory announced the formation of its Content Monetization Protocols (CoMP) for AI Working Group on August 20, 2025, following a workshop that drew 80 executives from publishers, edge cloud providers, and AI monetization startups. The initiative responds to mounting evidence that artificial intelligence technologies threaten the economic sustainability of digital publishing through dramatic traffic reductions and uncompensated content scraping.

According to the IAB Tech Lab framework released June 4, 2025, AI-driven search summaries reduce publisher traffic by 20-60% on average, with niche sites experiencing losses up to 90%. The organization estimates publishers face $2 billion in annual ad revenue losses as AI systems provide answers directly without directing users to source websites. At the same time, bot traffic has surged 117% quarter-over-quarter, with sites scraped an average of 5.05 million times according to TollBit's Q4 2024 report.

The working group emerges from widespread publisher concerns about AI's impact on traditional business models. The top 10 technology companies now capture 80.8% of digital advertising revenue, and companies ranked 11-25 hold another 11.0%. That concentration squeezes open internet publishers while AI companies valued in the billions profit from publisher content without compensation.

Shailley Singh, Executive Vice President of Product and Chief Operating Officer at IAB Tech Lab, emphasized the urgency during the August workshop. "Blocking and redirecting bots and scrapers is the only way to bring the problem to the attention of the AI ecosystem," Singh stated in announcing the working group formation. The consensus among workshop attendees was clear: develop standard frameworks across infrastructures to enable publishers to exercise control and enforce terms of use at scale.

Workshop participants included Jonathan Roberts from Dotdash Meredith and Achim Schlosser from Bertelsmann Group, who represented publisher interests, while Shelley Susan from Meta presented LLM perspectives. Technology companies Cloudflare, Fastly, and AWS attended alongside startups including TollBit, Dappier, and ProRata.ai, which demonstrated various content monetization approaches.

The framework establishes three core mechanisms for publisher content monetization. Content access controls prevent unauthorized bot scraping through robots.txt declarations and Web Application Firewall methods. LLM-friendly discovery enables AI systems to understand publisher content through content access rules pages, llms.txt files, and structured metadata. Monetization options include Cost per Crawl (CPCr) pricing and comprehensive LLM Ingest APIs with variable pricing and bidding capabilities.
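
As a rough illustration of the access-control layer, the sketch below checks crawler requests against robots.txt rules using Python's standard `urllib.robotparser`. The robots.txt content, the choice of blocked bots, and the example URLs are assumptions made for illustration, not rules taken from the IAB Tech Lab framework.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: one AI crawler is blocked everywhere, another
# is blocked only from article pages, and all other agents are allowed.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: ClaudeBot
Disallow: /articles/

User-agent: *
Allow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a reusable rule set."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def is_allowed(parser: RobotFileParser, user_agent: str, url: str) -> bool:
    """Return True if the declared rules permit this agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    rules = build_parser(ROBOTS_TXT)
    for agent in ("GPTBot", "ClaudeBot", "SomeBrowser"):
        url = "https://publisher.example/articles/1"
        print(agent, is_allowed(rules, agent, url))
```

robots.txt is a declaration that well-behaved crawlers choose to honor, which is why the framework pairs it with Web Application Firewall enforcement.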

The Cost per Crawl framework allows publishers to charge each time AI bots access their content. Publishers set base rates, potentially charging $0.001 per HTTP request with tiered pricing based on content type, volume, and bot classification. The system requires API key authentication for bot operators and comprehensive logging infrastructure for billing verification. Publishers can offer free tiers alongside premium pricing to attract smaller operators while maintaining revenue from commercial AI companies.
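
To make the pricing arithmetic concrete, here is a minimal sketch of a tiered Cost per Crawl calculation. Only the $0.001 base rate comes from the text above; the multipliers, the bot classes, and the size of the free tier are hypothetical values chosen for illustration.

```python
from decimal import Decimal

# Base rate cited in the article: USD 0.001 per HTTP request.
BASE_RATE = Decimal("0.001")

# Hypothetical multipliers by content type and bot classification.
CONTENT_MULTIPLIER = {
    "article": Decimal("1"),
    "archive": Decimal("0.5"),
    "premium": Decimal("3"),
}
BOT_MULTIPLIER = {
    "retrieval": Decimal("1"),
    "training": Decimal("2"),
}

# Hypothetical free allowance per billing period.
FREE_TIER_REQUESTS = 1000


def crawl_charge(requests: int, content_type: str, bot_class: str) -> Decimal:
    """Return the USD charge for a billing period under the assumed tiers."""
    billable = max(0, requests - FREE_TIER_REQUESTS)
    rate = BASE_RATE * CONTENT_MULTIPLIER[content_type] * BOT_MULTIPLIER[bot_class]
    return billable * rate


if __name__ == "__main__":
    # 101,000 article requests from a retrieval bot: 100,000 billable requests.
    print(crawl_charge(101_000, "article", "retrieval"))
```

Using `Decimal` rather than floats keeps sub-cent rates exact when they are summed over millions of requests.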

The LLM Ingest API provides more sophisticated content monetization through query-based pricing. AI operators submit user prompts to retrieve publisher content, with pricing determined through pre-agreed rates or dynamic bidding systems. Publishers control content access through partner key authentication while supporting various pricing models including per-query rates, content-specific charges, and subscription plans. The API returns either direct content for small items or secure time-limited URLs for larger materials.
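
The last point, returning inline content for small items and a secure time-limited URL for larger ones, can be sketched as follows. This is one possible design rather than the framework's specified API: the size threshold, the URL lifetime, the signing key, the `publisher.example` host, and all function names are assumptions.

```python
import hashlib
import hmac
from urllib.parse import urlencode

SECRET = b"demo-signing-key"   # hypothetical key; keep real keys out of source
INLINE_LIMIT_BYTES = 2048      # hypothetical cutoff for returning content inline
URL_TTL_SECONDS = 300          # hypothetical lifetime of a signed URL


def make_token(path: str, expires: int) -> str:
    """HMAC-SHA256 over the path and expiry, so neither can be altered."""
    message = f"{path}:{expires}".encode()
    return hmac.new(SECRET, message, hashlib.sha256).hexdigest()


def verify_url(path: str, expires: int, sig: str, now: int) -> bool:
    """Accept only an unexpired URL whose signature matches."""
    return now <= expires and hmac.compare_digest(make_token(path, expires), sig)


def ingest_response(path: str, body: str, now: int) -> dict:
    """Return small content inline, or a signed expiring URL for large content."""
    if len(body.encode()) <= INLINE_LIMIT_BYTES:
        return {"type": "content", "content": body}
    expires = now + URL_TTL_SECONDS
    query = urlencode({"expires": expires, "sig": make_token(path, expires)})
    return {"type": "url", "url": f"https://publisher.example{path}?{query}"}
```

Passing `now` explicitly instead of reading the clock inside the functions keeps the expiry logic easy to test.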

Brand content management follows similar technical frameworks without pricing components. The brand LLM Ingest API enables companies to provide accurate, brand-aligned information to AI systems while tracking usage patterns and user query types. This addresses concerns that AI-generated responses about brands may not reflect accurate messaging or values without proper source access.

Microsoft's NLWeb initiative presents an alternative approach using Model Context Protocol standards. The framework integrates with existing Schema.org infrastructure to create conversational interfaces for both human users and AI agents. While NLWeb lacks built-in authentication and pricing mechanisms, it offers standardized protocol compatibility across major LLM providers including OpenAI, Anthropic, and xAI.

Technical implementation guidance includes robots.txt extensions signaling CPCr endpoints, HTTP 402 "Payment Required" responses containing pricing information, and comprehensive logging systems using HMAC-SHA256 signatures for integrity verification. Publishers can redirect blocked bots to content access rules pages explaining legitimate access procedures rather than simply denying requests.
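
Two of these pieces, the HTTP 402 response carrying pricing information and HMAC-SHA256 signed log entries, might look roughly like the sketch below. The JSON field names, the log key, and the function names are hypothetical; the framework's actual response and log formats are not reproduced here.

```python
import hashlib
import hmac
import json

LOG_KEY = b"demo-log-key"  # hypothetical key shared with the billing verifier


def payment_required(price_usd: str, terms_url: str) -> tuple[int, dict, str]:
    """Build a 402 response whose body tells the bot operator what access costs."""
    body = json.dumps({"price_usd": price_usd, "unit": "request", "terms": terms_url})
    return 402, {"Content-Type": "application/json"}, body


def _canonical(entry: dict) -> bytes:
    """Serialize deterministically so signer and verifier hash identical bytes."""
    return json.dumps(entry, sort_keys=True, separators=(",", ":")).encode()


def sign_log_entry(entry: dict) -> dict:
    """Attach an HMAC-SHA256 signature to a crawl log entry."""
    signature = hmac.new(LOG_KEY, _canonical(entry), hashlib.sha256).hexdigest()
    return {**entry, "signature": signature}


def verify_log_entry(signed: dict) -> bool:
    """Recompute the signature and compare in constant time."""
    entry = {k: v for k, v in signed.items() if k != "signature"}
    expected = hmac.new(LOG_KEY, _canonical(entry), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, signed.get("signature", ""))
```

A signed entry lets either party detect later edits to a billing record, since changing any field invalidates the signature.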

The framework addresses the fundamental shift from traditional web architecture where search engines directed users to publisher websites, toward AI-driven results presenting publisher content directly without referral traffic. Leading AI bots including ChatGPT-User (15.6% of AI traffic with 6,767.6% growth), Bytespider (12.44%), and ClaudeBot (566.79% growth) demonstrate the scale of content ingestion occurring without publisher compensation.

Hidden scraping represents a growing concern, with 1.89 million hidden scrapes per site nearly matching identified AI bot scrapes at 2 million per site. Unauthorized scraping has increased 40% from Q3 to Q4 2024, with 3.3% of scrapes bypassing robots.txt instructions entirely. This surge burdens publishers with rising cybersecurity costs while providing no compensation for scraped content used in AI training or real-time retrieval-augmented generation systems.

The working group formation follows successful implementations of similar concepts by infrastructure providers. Cloudflare launched pay-per-crawl services in private beta July 1, 2025, offering content creators standardized frameworks for charging AI crawlers. The service uses HTTP 402 response codes to signal payment requirements while providing publishers control over access permissions for each crawler type.

Industry adoption appears promising based on recent platform developments. Google's introduction of Offerwall on June 26, 2025, enables publishers to offer multiple content access methods including micro-payments, rewarded advertisements, and newsletter subscriptions. WordPress.com's partnership with Perplexity AI demonstrates publisher willingness to experiment with AI-powered content discovery platforms.

The economic impact extends beyond direct traffic losses to fundamental changes in content monetization. Google Network advertising revenue declined 1% to $7.4 billion in Q2 2025, indicating reduced monetization opportunities for AdSense, AdMob, and Google Ad Manager participants as AI features satisfy user intent without requiring website visits.

Publishers face secondary effects including reduced audience engagement, decreased content production capabilities, and diminished competitive positioning relative to platform-controlled content sources. Traditional reliance on Google Search traffic for building audience relationships through newsletter subscriptions, social media follows, and direct website bookmarks becomes compromised as AI features provide answers without directing users to source websites.

The framework addresses brand reputation risks as consumers increasingly rely on AI systems for information needs. Without access to authoritative brand content, AI systems may generate responses that misalign with brand values or contain inaccurate information. The brand API framework enables companies to provide structured content access while monitoring query patterns to understand customer information needs.

TollBit's research demonstrates AI dependency on fresh, human-generated content to maintain accuracy and avoid hallucinations. The 2 million scrapes per site and 117% AI bot traffic surge reflect heavy reliance on publisher content for training and real-time information retrieval. This creates opportunities for publishers to monetize content by seeking fair compensation from AI operators requiring continuous content access.

The working group will focus on standardizing bot and agent access controls, content discovery mechanisms including metadata declarations and llms.txt implementation, Cost per Crawl monetization APIs, comprehensive LLM Ingest APIs, and NLWeb integration protocols. IAB Tech Lab seeks industry feedback to organize workshops determining next steps in LLM integration framework evolution.

Singh emphasized the collaborative approach required for successful implementation. "The industry will need to support different methods from 'all you can eat buffet' content library partnerships to 'pre-fixe menu' pay per crawl to more sophisticated 'a la carte' cost per query options," Singh explained. Standard framework and currency definitions would allow these approaches to work across providers and platforms.

The initiative positions content creators and businesses to navigate the evolving digital ecosystem while capitalizing on new engagement and monetization opportunities. Publishers implementing the framework can potentially restore revenue streams disrupted by AI-driven search while establishing sustainable relationships with AI operators requiring ongoing content access for training and user query responses.

Timeline

- June 4, 2025: IAB Tech Lab releases LLMs and AI Agents Integration Framework documenting $2 billion publisher revenue losses from AI-driven search

- July 1, 2025: Cloudflare launches pay-per-crawl service in private beta

- July 2, 2025: ChatGPT referrals to news sites increase 25x while Google zero-click searches reach 69%

- July 24, 2025: Google launches Web Guide experiment reorganizing search results using AI

- July 2025: Google Network advertising revenue declines 1% to $7.4 billion amid AI feature expansion

- August 2025: IAB Tech Lab hosts workshop with 80 executives from publishers, cloud providers, and AI monetization startups

- August 20, 2025: IAB Tech Lab announces Content Monetization Protocols (CoMP) for AI Working Group formation

PPC Land explains

Publishers: Digital content creators and media organizations that produce and distribute information through websites and applications. Publishers traditionally monetize content through advertising revenue dependent on search engine traffic, making them particularly vulnerable to AI-driven changes that reduce click-through rates. The framework specifically addresses challenges faced by both large publishers like Dotdash Meredith and smaller content creators who lack direct relationships with major AI companies.

AI-driven search: Search engine technologies that use artificial intelligence to provide direct answers within search result pages rather than directing users to source websites. These systems include Google's AI Overviews and Gemini-powered summaries that extract information from publisher content to create comprehensive responses, reducing the need for users to visit original sources and significantly impacting publisher traffic and revenue.

Content monetization: The process of generating revenue from digital content through various business models including advertising, subscriptions, licensing, and direct payments. The framework introduces new monetization approaches specifically designed for AI interactions, moving beyond traditional models that depend on website visits to systems that charge for content access, usage, or queries from AI systems.

Bot traffic: Automated software programs that access websites to collect information for various purposes including search engine indexing, AI training, and real-time content retrieval. The framework addresses the surge in AI bot activity, with identified bots like ChatGPT-User, Bytespider, and ClaudeBot representing significant portions of website traffic while typically providing no compensation to content creators.

LLM Ingest API: Application Programming Interface that enables AI operators to query publisher content using natural language prompts and receive structured responses with content or content paths. The API supports various pricing models including per-query rates, dynamic bidding, and subscription plans while providing authentication, logging, and billing capabilities for sustainable content monetization.

Cost per Crawl (CPCr): Monetization framework that charges AI operators each time their bots access publisher content, similar to traditional advertising models but applied to content consumption rather than user engagement. Publishers can set base rates per HTTP request with tiered pricing based on content type, bot classification, and usage volume, creating direct compensation for content access.

Traffic reduction: The measurable decrease in website visitors and page views that publishers experience as AI systems satisfy user information needs without directing users to source websites. The framework documents traffic reductions ranging from 20-60% on average to 90% for niche sites, representing a fundamental shift in how users discover and consume online content.

Access controls: Technical mechanisms that publishers implement to manage and restrict how bots and automated systems interact with their content. These include robots.txt declarations, Web Application Firewall rules, API key authentication, and redirect systems that guide legitimate AI operators to proper content licensing procedures while blocking unauthorized scraping.

Framework standardization: The development of industry-wide technical standards and protocols that enable interoperability between different publishers, AI operators, and technology platforms. Standardization allows publishers to implement consistent monetization approaches while enabling AI companies to integrate with multiple content sources through unified interfaces and pricing structures.

Revenue losses: Financial impact on publishers resulting from reduced advertising income as AI-driven search decreases website traffic and user engagement. The framework quantifies these losses at approximately $2 billion annually across the publishing industry, with individual publishers experiencing varying degrees of impact based on content type, audience demographics, and dependency on search engine referrals.

Summary

Who: IAB Technology Laboratory formed the Content Monetization Protocols (CoMP) for AI Working Group after a workshop with 80 executives from publishers including Dotdash Meredith and Bertelsmann Group, cloud providers like Cloudflare and AWS, and AI monetization startups TollBit, Dappier, and ProRata.ai.

What: Comprehensive framework standardizing content monetization protocols including Cost per Crawl pricing, LLM Ingest APIs with variable pricing and bidding, content access controls through robots.txt and Web Application Firewalls, and LLM-friendly discovery mechanisms using llms.txt files and structured metadata.

When: Working group announced August 20, 2025, following the framework release on June 4, 2025, and a workshop in August 2025. Implementation timelines vary: publishers can deploy access controls immediately, while API development requires additional standardization.

Where: Global initiative addressing publisher monetization challenges worldwide, with particular focus on open internet sustainability as AI companies profit from content without compensation while publishers experience traffic declines up to 90%.

Why: AI-driven search summaries reduce publisher traffic 20-60% on average, with an estimated $2 billion in annual ad revenue losses. Meanwhile, bot traffic has surged 117%, with 5.05 million scrapes per site, as AI systems draw on fresh content for training and real-time query responses without compensating content creators.