On January 13, 2024, developers received new tools for building local chatbots that run entirely on users' devices, eliminating the need to transmit sensitive data to external servers.

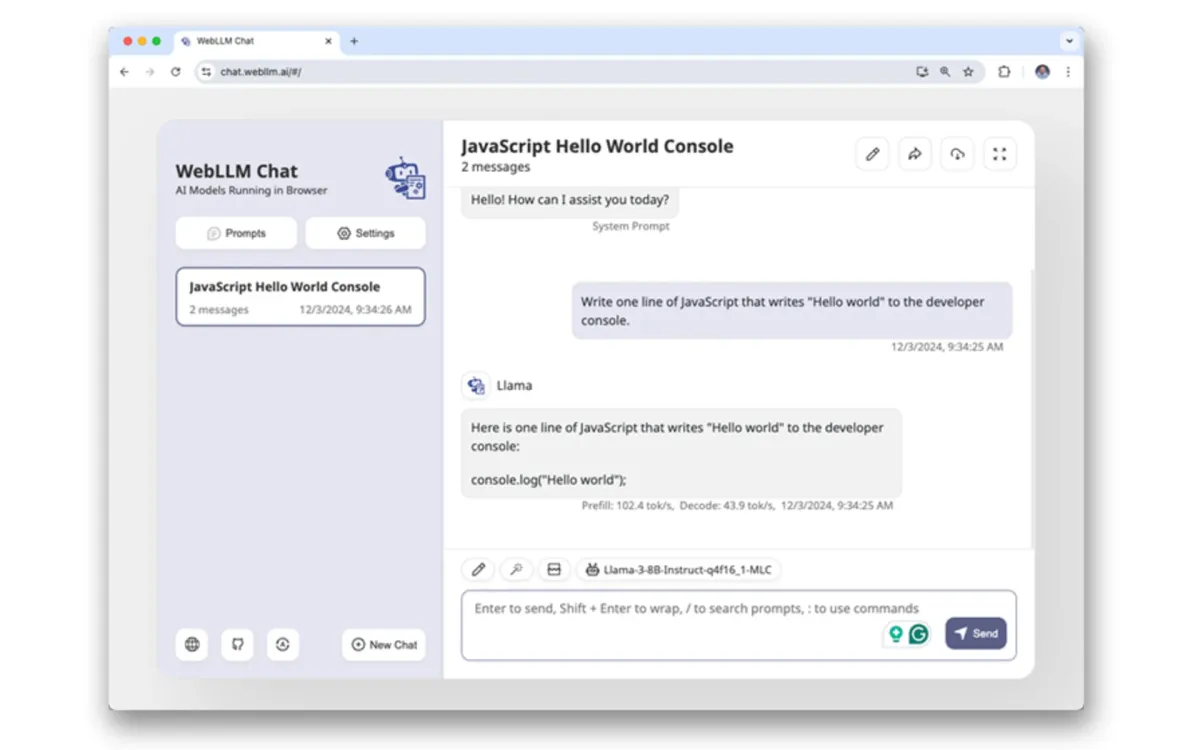

According to Christian Liebel, a Google Developer Expert who authored documentation about the new capabilities, these advances center around WebLLM, a web-based runtime for Large Language Models (LLMs) provided by Machine Learning Compilation. The technology combines WebAssembly and WebGPU to enable near-native performance for AI model inference directly in web browsers.

Alexandra Klepper, who published the initial announcement, noted that the implementation allows computers to "generate new content, write summaries, analyze text for sentiment, and more" without requiring cloud connectivity. This marks a departure from cloud-based solutions, which process user data on remote servers.

The download requirements are substantial. A model with 3 billion parameters, compressed to 4 bits per parameter, results in a file of approximately 1.4 GB that must be downloaded before first use. Larger 7 billion parameter models, which provide enhanced translation and knowledge capabilities, require downloads exceeding 3.3 GB.
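These figures follow roughly from simple arithmetic: parameter count multiplied by bits per parameter, divided by eight to get bytes. The sketch below is a back-of-the-envelope estimate only. The function names are illustrative, and it assumes the quoted sizes are closer to binary gigabytes (GiB); real downloads also include quantization metadata and tokenizer files, so actual sizes will differ somewhat.

```typescript
// Rough estimate of the weight size of a quantized model.
// Assumption: every parameter is stored at the same bit width,
// with no extra overhead for scales, metadata, or tokenizer files.
function estimateModelBytes(parameterCount: number, bitsPerParameter: number): number {
  return (parameterCount * bitsPerParameter) / 8;
}

const BYTES_PER_GIB = 1024 ** 3;

// Convert a byte count to binary gigabytes (GiB).
function toGiB(bytes: number): number {
  return bytes / BYTES_PER_GIB;
}

const threeBillion = estimateModelBytes(3e9, 4); // 1.5e9 bytes
const sevenBillion = estimateModelBytes(7e9, 4); // 3.5e9 bytes

console.log(toGiB(threeBillion).toFixed(2)); // "1.40"
console.log(toGiB(sevenBillion).toFixed(2)); // "3.26"
```

The 3 billion parameter estimate lands close to the quoted 1.4 GB. The 7 billion parameter estimate covers the weights alone and comes out slightly below the quoted figure, which plausibly includes the additional files mentioned above.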

The implementation relies on the Cache API, which was introduced alongside Service Workers. This programmable cache remains under developer control, enabling fully offline operation once models are downloaded. The cache is isolated per origin, meaning separate websites cannot share cached models.
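The cache-first pattern this describes can be sketched as follows. This is a minimal illustration, not WebLLM's actual caching code: `fetchWithCache`, `CacheLike`, and the cache name in the usage comment are hypothetical. The cache and the download function are passed in as parameters so the logic stays independent of the browser; in a page, the object returned by `caches.open()` and the global `fetch` would fill those roles.

```typescript
// Minimal shape of a cache: look up by URL, store by URL.
// The browser's Cache object (from caches.open) fits this shape.
interface CacheLike<R> {
  match(url: string): Promise<R | undefined>;
  put(url: string, response: R): Promise<void>;
}

// Return the cached copy if present; otherwise download once,
// store a clone in the cache, and return the fresh response.
async function fetchWithCache<R extends { ok: boolean; clone(): R }>(
  url: string,
  cache: CacheLike<R>,
  download: (url: string) => Promise<R>,
): Promise<R> {
  const cached = await cache.match(url);
  if (cached !== undefined) {
    return cached; // served locally, works offline
  }
  const response = await download(url);
  if (!response.ok) {
    throw new Error(`Download failed for ${url}`);
  }
  // A response body can be read only once, so cache a clone.
  await cache.put(url, response.clone());
  return response;
}

// Browser usage (assumed cache name, not executed here):
//   const cache = await caches.open("model-cache");
//   const res = await fetchWithCache(modelUrl, cache, (u) => fetch(u));
```

Because the cache is scoped to the origin, a second site running the same code would still trigger its own download.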

The system supports three distinct message roles: system prompts that define behavior and role, user prompts for input, and assistant prompts for responses. This structure enables N-shot prompting, allowing natural language examples to guide model behavior.
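As an illustration of N-shot prompting with these three roles, the sketch below assembles a conversation in which each example appears as a user message followed by the desired assistant reply. The type names, the `buildNShotMessages` helper, and the sentiment task are hypothetical; the role/content message shape follows the common chat-completion convention and is assumed here rather than taken from the documentation.

```typescript
type Role = "system" | "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

// One worked example: an input and the answer the model should imitate.
interface Example {
  input: string;
  output: string;
}

// Build a message list: system prompt first, then each example as a
// user/assistant pair, then the real user input last.
function buildNShotMessages(
  systemPrompt: string,
  examples: Example[],
  userInput: string,
): ChatMessage[] {
  const messages: ChatMessage[] = [{ role: "system", content: systemPrompt }];
  for (const example of examples) {
    messages.push({ role: "user", content: example.input });
    messages.push({ role: "assistant", content: example.output });
  }
  messages.push({ role: "user", content: userInput });
  return messages;
}

// Two-shot sentiment classification prompt.
const messages = buildNShotMessages(
  "Classify the sentiment of each message as positive or negative.",
  [
    { input: "I love this app.", output: "positive" },
    { input: "It keeps crashing.", output: "negative" },
  ],
  "The update fixed everything.",
);
console.log(messages.length); // 6
```

With two examples the list holds six messages: one system prompt, two user/assistant pairs, and the final user input.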

Security considerations feature prominently in the implementation. According to the technical documentation, developers must treat LLM responses like user input, accounting for potential malformed or malicious values resulting from hallucination or prompt injection attacks. The documentation explicitly warns against directly adding generated HTML to documents or automatically executing returned JavaScript code.
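One way to follow this advice is to escape a model reply before it ever reaches markup. The helper below is a minimal sketch with a hypothetical name, `escapeHtml`; it is not taken from the documentation. In a page, assigning the reply to an element's `textContent` rather than `innerHTML` achieves the same effect without manual escaping.

```typescript
// Escape the characters that carry meaning in HTML so that model
// output is displayed as text instead of being parsed as markup.
// The ampersand is replaced first to avoid double-escaping.
function escapeHtml(untrusted: string): string {
  return untrusted
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// A reply that tries to smuggle in a script-bearing tag.
const reply = '<img src=x onerror="alert(1)">';
console.log(escapeHtml(reply));
// &lt;img src=x onerror=&quot;alert(1)&quot;&gt;
```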

The technology faces certain limitations. Non-deterministic behavior means models can produce varying or contradictory responses to identical prompts. The documentation acknowledges that hallucinations may occur, with models generating incorrect information based on learned patterns rather than factual accuracy.

Local deployment provides specific advantages for user privacy and operational reliability. By processing all data on-device, the system eliminates transmission of personally identifiable information to external providers or regions. This approach also enables consistent response times and maintains functionality during network outages.

The implementation includes integration with Chrome's experimental Prompt API, which enables multiple applications to utilize a centrally downloaded model, addressing efficiency concerns about duplicate downloads across different web applications.

This development arrives as part of broader efforts to enhance web application capabilities while maintaining user privacy. The documentation emphasizes that developers must verify LLM-generated results before taking consequential actions, reflecting ongoing attention to reliability and safety considerations in AI implementations.
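A simple form of such verification is to accept a model's answer only when it matches a known set of safe values. The sketch below assumes a hypothetical application in which the model is asked to pick an action for a message; the action names and the `parseAction` helper are illustrative only.

```typescript
// The only actions the application is willing to perform.
const ALLOWED_ACTIONS = ["archive", "label", "none"] as const;
type Action = (typeof ALLOWED_ACTIONS)[number];

// Normalize the raw model output and accept it only if it exactly
// matches an allowed action; anything else yields null.
function parseAction(raw: string): Action | null {
  const candidate = raw.trim().toLowerCase();
  const allowed: readonly string[] = ALLOWED_ACTIONS;
  return allowed.includes(candidate) ? (candidate as Action) : null;
}

console.log(parseAction(" Archive\n"));        // "archive"
console.log(parseAction("delete everything")); // null
```

A `null` result gives the application a clear point at which to fall back to a default or ask the user, instead of acting on an unexpected reply.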

For web developers interested in implementation, complete documentation and source code examples are available through the web.dev platform, with separate guides covering WebLLM integration and Prompt API usage.