The OECD Expert Group on AI Futures has released a report that maps out the potential benefits and risks of AI development through 2040. Published in November 2024, the report draws on the views of seventy leading AI specialists from across the globe and offers policymakers a framework for navigating the complex landscape of artificial intelligence.

The analysis arrives as rapid advances in AI capabilities continue to outpace regulatory frameworks. "The swift evolution of AI technologies calls for policymakers to consider and proactively manage AI-driven change," the report emphasizes, highlighting the need for coordinated international action to shape AI's trajectory.

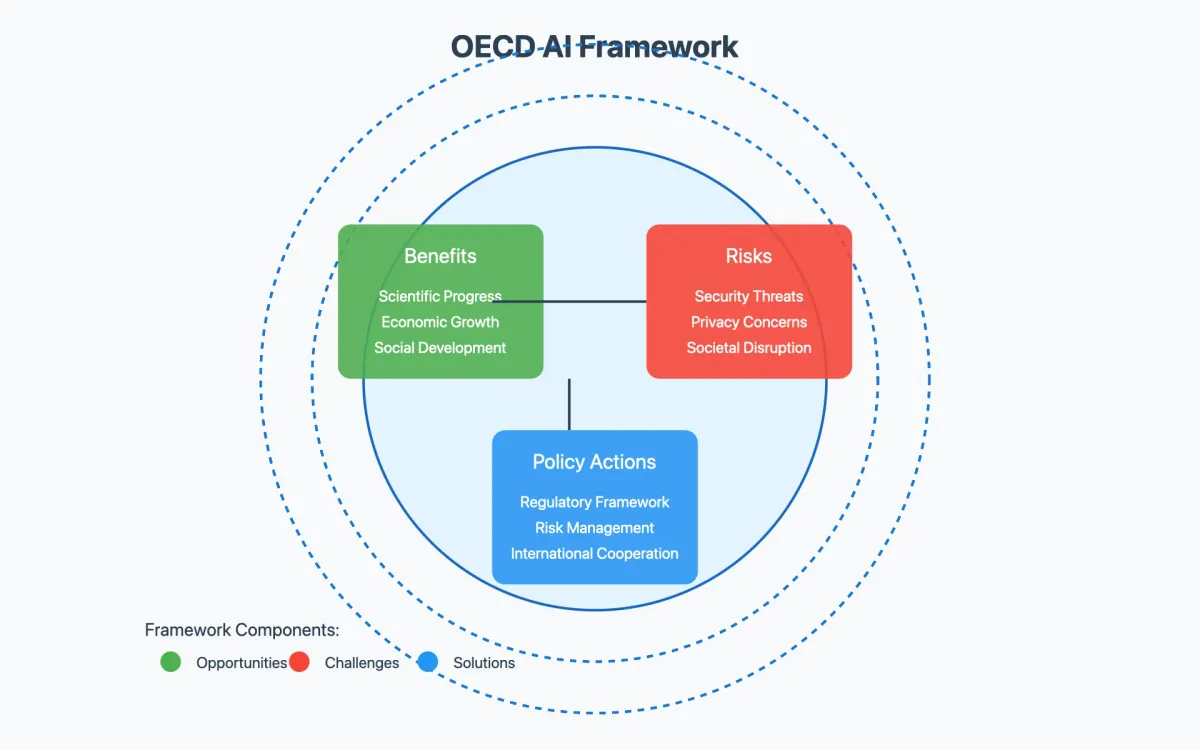

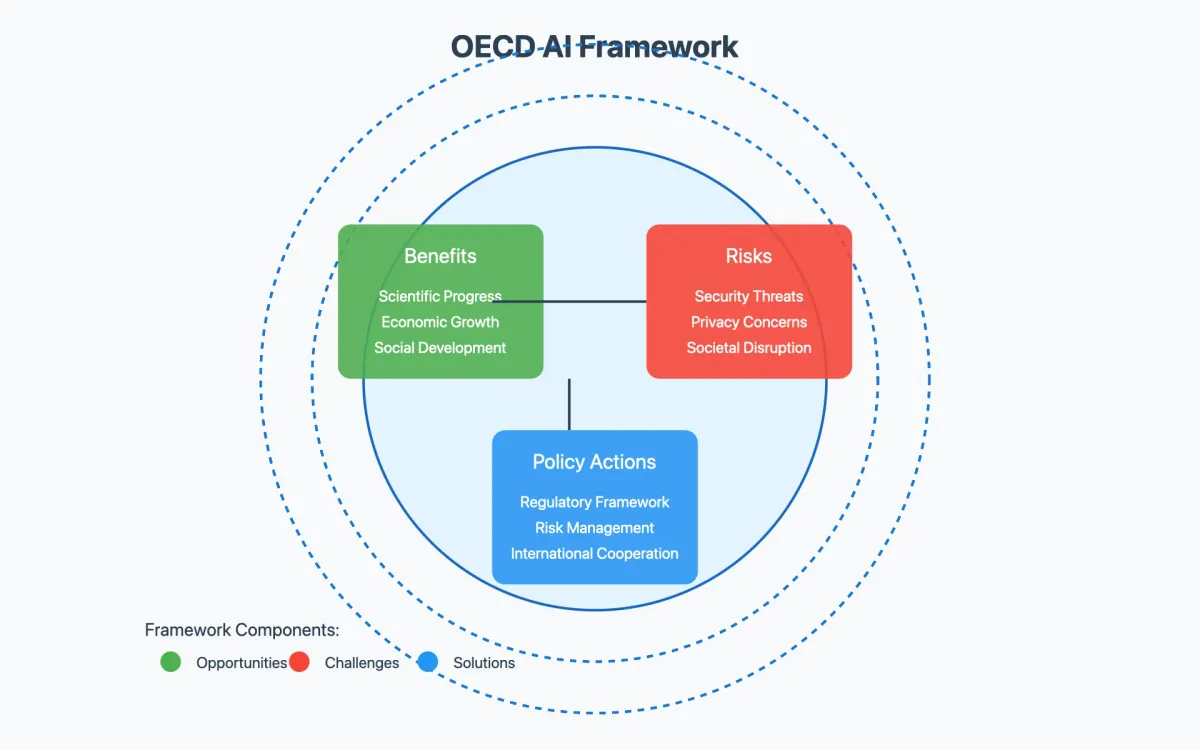

According to the Expert Group, the decisions made by policymakers in the coming years will largely determine whether AI's impact on society tends toward the beneficial or the destructive. The report outlines ten priority benefits and ten critical risks, accompanied by detailed policy recommendations designed to maximize positive outcomes while mitigating potential harms.

The first major section of the report explores AI's potential to revolutionize multiple sectors of society and industry. At the forefront stands scientific advancement, where AI has already demonstrated remarkable capabilities. The report cites breakthroughs like AlphaFold in protein folding prediction as merely the beginning of AI's scientific potential. Looking ahead, experts anticipate AI becoming what they term "an invention of a method of invention," fundamentally transforming how scientific discovery occurs.

In healthcare, the implications appear particularly promising. AI systems are increasingly capable of detecting medical anomalies and facilitating preventative action, though current implementation remains in early stages. The report projects that AI could enable personalized medicine at scale, potentially revolutionizing treatment approaches for conditions ranging from cancer to neurodegenerative diseases.

Economic implications feature prominently in the analysis. While current evidence suggests AI-adopting firms tend to show higher productivity, establishing direct causation remains challenging. Future projections vary significantly, with conservative estimates suggesting a one to seven percent rise in global GDP by 2033, while more optimistic scenarios envision potential ten-fold increases over longer periods. However, the report emphasizes that realizing these gains requires substantial investment in complementary assets, including workforce development and technological infrastructure.

Perhaps most significantly, the Expert Group identifies AI's potential to address pressing global challenges, particularly climate change. AI systems show promise in optimizing energy systems, improving climate modeling, and enhancing environmental monitoring capabilities. The technology could play a crucial role in advancing sustainable development goals across multiple domains.

In education, AI presents opportunities for personalization at scale, potentially democratizing access to quality learning experiences. The report envisions AI-enabled systems capable of adapting to individual learning styles and needs, though it cautions that past educational technologies have sometimes failed to deliver on their promises.

The report's analysis of potential risks is equally detailed, highlighting concerns that demand immediate attention from policymakers and industry leaders. Cybersecurity is a primary concern, with evidence already showing AI's role in enhancing malicious capabilities. The Expert Group notes that in 2023 alone, generative AI contributed to an eight percent increase in cyber attacks, particularly phishing and ransomware deployment.

More troubling still, the report warns of AI's potential to target critical infrastructure. "The convergence of AI capabilities with critical systems creates unprecedented vulnerabilities," the report states, highlighting particular concerns about power grids, healthcare facilities, and financial systems. These risks extend beyond mere technical vulnerabilities, potentially threatening the fundamental stability of vital services.

The threat to democratic institutions receives particular attention in the analysis. AI-enabled disinformation campaigns have evolved beyond simple fake news to include sophisticated "compositional deepfakes" capable of crafting credible yet entirely fabricated narratives. The Expert Group warns that personalized persuasion techniques, powered by advanced AI systems, could enable mass manipulation at unprecedented scales.

"The combination of AI's capabilities with psychological profiling creates particular concerns for electoral integrity and public discourse," the report emphasizes. This threat extends beyond election periods, potentially eroding the very foundations of fact-based democratic debate. The report notes that while some experts argue fears about AI-enabled disinformation may be overblown, the potential consequences demand serious preventive measures.

Competitive dynamics in AI development emerge as another critical concern. The report identifies a troubling pattern where pressure to be first to market may lead companies and countries to deploy AI systems before adequate safety measures are in place. This "race to the bottom" in safety standards could have catastrophic consequences, particularly as AI systems become more sophisticated and autonomous.

Market concentration presents a related challenge. The report notes that access to crucial AI resources, particularly computing power and data, has become increasingly concentrated among a small number of companies and countries. This concentration risks creating dangerous power imbalances and could exacerbate global inequalities.

In response to these challenges, the Expert Group outlines a comprehensive policy framework centered on ten priority actions. First among these is the establishment of clear liability rules for AI-related harms. The report advocates for a balanced approach that promotes innovation while ensuring accountability for negative outcomes.

Regulatory frameworks feature prominently in the recommendations. The Expert Group calls for the identification and restriction of "red line" AI applications – uses of the technology deemed too dangerous to permit. This approach requires international coordination to prevent regulatory arbitrage while ensuring consistent standards across jurisdictions.

Transparency emerges as another crucial policy pillar. The report recommends mandatory disclosure requirements for certain types of AI systems, particularly those deployed in high-risk contexts or with significant societal impact. These requirements would enable better oversight while fostering public trust in AI development.

The report emphasizes the need for enhanced institutional capacity to manage AI development. This includes strengthening regulatory bodies, developing technical expertise within government agencies, and creating new mechanisms for monitoring and responding to AI-related challenges.

Risk management procedures receive particular attention. The Expert Group recommends comprehensive frameworks for assessing and mitigating risks throughout the AI system lifecycle. These frameworks should be adaptable to emerging threats while maintaining consistent core principles.

The report strongly emphasizes the necessity of international cooperation in managing AI development. Recent initiatives, including the EU AI Act, the G7 Hiroshima AI Process, and various national AI strategies, demonstrate growing momentum in this direction. However, the Expert Group argues that current efforts remain insufficient given the scale of the challenge.

"The global nature of AI development demands unprecedented levels of international coordination," the report states. This coordination must extend beyond traditional diplomatic channels to include technical cooperation, shared research initiatives, and harmonized regulatory approaches.

The final sections of the report address practical implementation challenges. Resource allocation emerges as a critical concern, with the Expert Group noting that effective AI governance requires substantial investment in technical infrastructure, human capital, and monitoring systems.

The report acknowledges that building necessary institutional capacity represents a significant challenge, particularly for developing nations. It recommends a phased approach to implementation, with immediate priority given to establishing basic governance frameworks while building toward more comprehensive oversight systems.

The report concludes with a call for immediate action, emphasizing that the window for establishing effective AI governance is limited. Success requires balancing innovation with responsible development, ensuring that AI benefits are widely distributed while risks are effectively managed.

The Expert Group's analysis suggests that while the challenges are formidable, they are not insurmountable. With coordinated action, appropriate resources, and sustained commitment, societies can harness AI's benefits while effectively managing its risks. The key lies in beginning implementation immediately while maintaining the flexibility to adapt as technologies and circumstances evolve.

The report offers a broad international assessment of AI's future implications, providing policymakers with actionable insights for developing forward-looking AI governance frameworks. Its recommendations, if implemented, could help ensure that AI development serves the broader interests of humanity while mitigating potential harms.

As AI continues to evolve at a rapid pace, the report's framework provides a crucial roadmap for navigating the challenges ahead. The coming years will test the international community's ability to translate these recommendations into effective action, with profound implications for the future of human society.