Science Feedback, leading a consortium of European fact-checking organizations, released findings on December 26, 2025, from the first large-scale, cross-platform measurement of disinformation prevalence across six major social media platforms operating in the European Union. The SIMODS project assessed approximately 2.6 million posts totaling roughly 24 billion views across Facebook, Instagram, LinkedIn, TikTok, X/Twitter, and YouTube in France, Poland, Slovakia, and Spain.

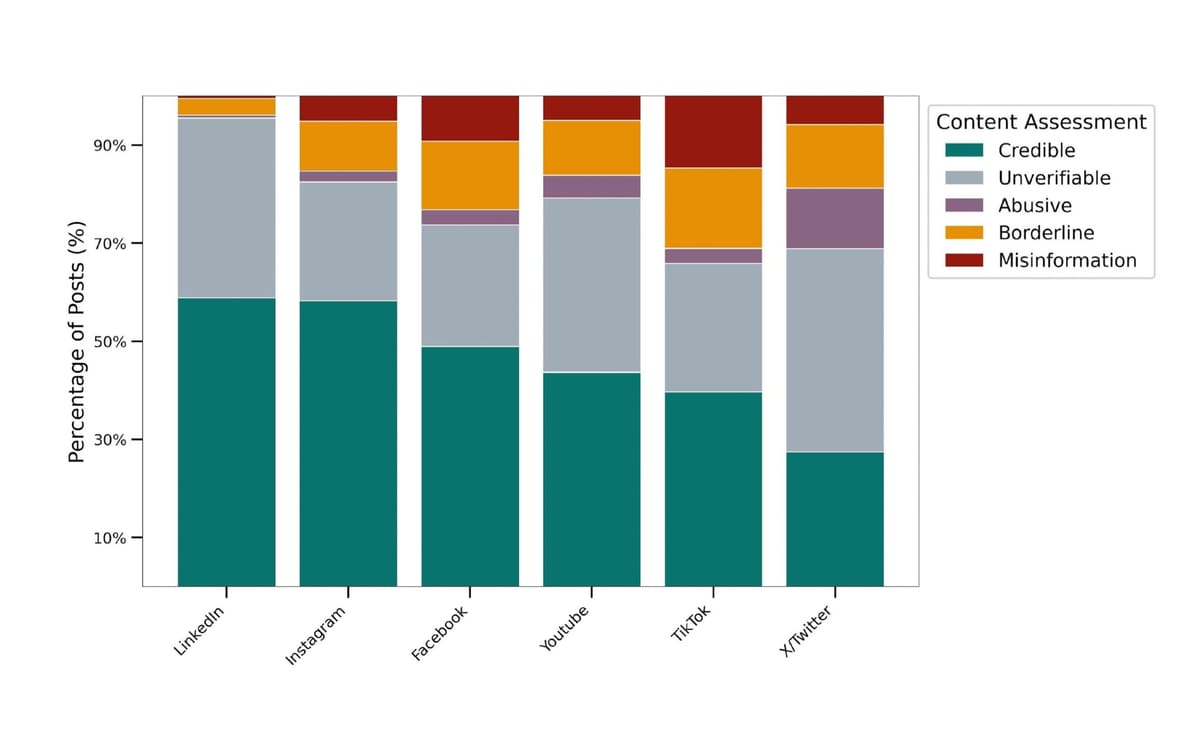

According to the report, TikTok exhibited the highest prevalence of misinformation at approximately 20% of exposure-weighted posts, meaning roughly one in five posts users encounter on topics including health, climate change, the Russia-Ukraine war, migration, and national politics contains misleading or false information. Facebook followed at 13%, with X/Twitter at 11%, YouTube and Instagram at approximately 8%, and LinkedIn showing the lowest prevalence at 2%.
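To make "exposure-weighted" concrete, here is a minimal Python sketch of how such a prevalence figure could be computed. It assumes view counts are the exposure weight and that each post carries a binary fact-checker label; the `Post` class, the function names, and the sample numbers are hypothetical and are not taken from the SIMODS report.

```python
from dataclasses import dataclass


@dataclass
class Post:
    views: int        # exposure proxy (assumption: view count)
    misleading: bool  # label assigned by a human fact-checker


def exposure_weighted_prevalence(posts: list[Post]) -> float:
    """Share of all views that landed on posts labeled misleading."""
    total_views = sum(p.views for p in posts)
    if total_views == 0:
        raise ValueError("no recorded views in sample")
    misleading_views = sum(p.views for p in posts if p.misleading)
    return misleading_views / total_views


def unweighted_prevalence(posts: list[Post]) -> float:
    """Share of posts labeled misleading, ignoring how often each was seen."""
    if not posts:
        raise ValueError("empty sample")
    return sum(p.misleading for p in posts) / len(posts)


# Made-up sample: one misleading post out of four.
sample = [
    Post(views=1_000, misleading=False),
    Post(views=3_000, misleading=False),
    Post(views=4_000, misleading=False),
    Post(views=2_000, misleading=True),
]

print(exposure_weighted_prevalence(sample))  # 2,000 of 10,000 views
print(unweighted_prevalence(sample))         # 1 of 4 posts
```

The two measures diverge whenever misleading posts are seen more or less often than average, which is why a view-weighted figure is closer to what users actually encounter than a simple post count.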

The research marks a significant methodological advancement in measuring online misinformation. Previous attempts, including TrustLab's 2023 pilot study, were unable to construct reliable prevalence measures due to limited data collection scale. The SIMODS consortium succeeded by assembling large, representative samples reflecting actual user exposure rather than mere discoverability through keyword searches.

Professional fact-checkers from Newtral, Demagog SK, Pravda, and CheckFirst examined content using rigorous annotation protocols. The study's dataset covered posts published between March 17 and April 13, 2025, collected through keyword searches on high-salience topics and, in LinkedIn's case, through platform-provided random samples responding to Digital Services Act Article 40.12 requests.

The measurement revealed stark differences when including borderline content—material supporting disinformation narratives without making verifiably false claims—and abusive content such as hate speech. Under this expanded definition of "problematic" content, TikTok and X/Twitter showed the highest prevalence at 34% and 32% respectively, followed by Facebook at 27%, YouTube at 22%, Instagram at 19%, and LinkedIn at 8%.

These findings come amid significant platform policy shifts. Many platforms have stepped back from earlier commitments to counter disinformation since early 2025, reducing fact-checking programs and staffing in relevant teams, often framed as responses to political pressure in the United States.

The research coincides with the European Commission's February 13, 2025 integration of the Code of Practice on Disinformation into the Digital Services Act framework as the Code of Conduct on Disinformation. As of July 1, 2025, the Code became operational under the DSA with auditing and compliance mechanisms, becoming what the Commission describes as a "significant and meaningful benchmark for determining compliance with the DSA."

Beyond prevalence, the study examined how platforms amplify content from repeat misinformation sources compared to credible outlets. The research found that accounts repeatedly sharing misinformation attract substantially more engagement per post per 1,000 followers than high-credibility accounts on all platforms except LinkedIn.

YouTube showed the most pronounced disparity, with low-credibility channels receiving approximately eight times the engagement of high-credibility channels per 1,000 followers. Facebook demonstrated a similar pattern at seven times, while Instagram and X/Twitter exhibited ratios of approximately five times. TikTok showed a lower but still significant ratio of two times. LinkedIn was the sole platform where sharers of misinformation received no extra visibility advantage.
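The normalization behind these ratios can be sketched as follows. This is an illustration only: it assumes engagement is a single interaction count per account and that groups are compared by their mean normalized value, neither of which this article specifies. All names and figures are hypothetical.

```python
from statistics import mean


def engagement_per_post_per_1k(engagement: int, posts: int, followers: int) -> float:
    """Interactions per post, scaled to an audience of 1,000 followers."""
    if posts <= 0 or followers <= 0:
        raise ValueError("posts and followers must be positive")
    return engagement / posts / (followers / 1000)


def engagement_ratio(low_cred: list[tuple[int, int, int]],
                     high_cred: list[tuple[int, int, int]]) -> float:
    """Ratio of mean normalized engagement, low- vs high-credibility accounts.

    Each tuple is (total engagement, number of posts, followers).
    Using the mean is an assumption; a median would be more robust to outliers.
    """
    low = mean([engagement_per_post_per_1k(*a) for a in low_cred])
    high = mean([engagement_per_post_per_1k(*a) for a in high_cred])
    return low / high


# Made-up accounts: (engagement, posts, followers)
low_cred_accounts = [(4_000, 10, 50_000), (1_200, 5, 30_000)]
high_cred_accounts = [(2_000, 20, 100_000), (500, 10, 50_000)]

print(engagement_ratio(low_cred_accounts, high_cred_accounts))
```

Dividing by followers matters because a large outlet will collect more raw interactions than a small account even if each follower is less engaged; the per-1,000-followers figure removes that size effect.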

"This indicates systematic amplification advantages for recurrent misinformers," according to the report. The finding suggests platform recommendation algorithms and user behavior patterns combine to reward misleading content with disproportionate reach relative to audience size.

The cross-platform analysis revealed that low-credibility actors maintain a stronger presence on certain platforms. X/Twitter emerged as comparatively the most attractive to low-credibility actors, who were 34% more likely than high-credibility actors to maintain an account there. Facebook showed a 23% higher likelihood, and TikTok 17% higher. Conversely, low-credibility actors were 80% less likely to maintain an account on LinkedIn and 33% less likely on Instagram.

Health misinformation dominated the landscape, representing 43.4% of all misinformation posts across platforms. The Russia-Ukraine war accounted for approximately 24.5% of misinformation content, followed by national politics at 15.5%, with climate change and migration each representing 6.6%.

The monetization analysis faced significant challenges due to limited platform transparency. The study found that none of the assessed services fully prevents monetization by recurrent misinformers. On YouTube, approximately 76% of eligible low-credibility channels showed signs of monetization, compared to 79% for high-credibility channels. On Facebook, roughly 20% of eligible low-credibility Pages appeared monetized versus 60% for high-credibility Pages. Google Display Ads appeared on 27% of low-credibility websites compared to 70% for high-credibility sites.

Transparency limitations prevented equivalent auditing on X/Twitter, TikTok, LinkedIn, and Instagram, highlighting persistent data access gaps affecting independent research despite DSA Article 40.12 provisions designed to enable researcher access.

The study encountered substantial obstacles obtaining platform data. Although the consortium invoked DSA Article 40.12 on December 19, 2024 to request random samples of 200,000 posts per language, only LinkedIn provided the requested dataset. TikTok granted API access on March 31, 2025, too late for inclusion in this wave. Meta and YouTube did not respond to requests and follow-up reminders. X/Twitter denied the application on January 9, 2025, stating the project did not meet Article 34 requirements; an appeal filed January 17, 2025 had not received a response as of September 2025.

The research builds on recommendations from the European Digital Media Observatory's work developing Structural Indicators to assess the Code's effectiveness. EDMO proposed indicators including prevalence of disinformation, sources of disinformation, audience of disinformation, and collaboration with fact-checking organizations.

Science Feedback executive director Emmanuel Vincent, who led the consortium including the Universitat Oberta de Catalunya, emphasized the need for regular, standardized measurement. "Platforms must reduce the spread and impact of misleading content and avoid incentivizing it financially," the report states. "Our results show that misleading content is prevalent across platforms, recurrent misinformers benefit from persistent engagement premium, and demonetization is not fully operational."

The methodology incorporated Large Language Models to assist with content filtering and pre-labeling, though human fact-checkers maintained final authority on all classifications. Initial testing of GPT-4o-mini for categorizing content achieved only 50-70% accuracy depending on language, with costs increasing substantially when web search enrichment was required for context.

The consortium, funded by the European Media and Information Fund, plans to publish a second measurement in early 2026 to track changes over time. Future iterations should benefit from improved platform data access, since Article 40 of the DSA requires platform cooperation, though current compliance remains inconsistent.

The report provides several recommendations for policymakers and regulators, including making Structural Indicators part of routine supervision, operationalizing researcher access under DSA Article 40.12, requiring platforms to provide random content samples with reproducible sampling methods, and establishing clear escalation pathways when platforms deny or delay access requests.

For platforms, recommendations include maintaining stable, well-documented research APIs providing content-level exposure data and account-level metadata, alongside time-bounded regulatory samples for specific audit windows accompanied by signed sampling manifests detailing inclusion criteria and randomization methods.
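As a rough illustration of what a reproducible sample with a manifest might look like, the Python sketch below draws a seeded random sample and records enough information for an auditor to re-derive it. The manifest fields, function names, and inclusion criteria are all hypothetical; the report's actual format is not described here. The SHA-256 digests stand in for a real cryptographic signature, which would additionally require a key pair.

```python
import hashlib
import json
import random


def _digest(ids: list[str]) -> str:
    """Order-independent SHA-256 fingerprint of a set of post IDs."""
    return hashlib.sha256("\n".join(sorted(ids)).encode("utf-8")).hexdigest()


def draw_sample(post_ids: list[str], k: int, seed: int) -> list[str]:
    """Seeded random sample; sorting first makes it independent of input order."""
    rng = random.Random(seed)
    return rng.sample(sorted(post_ids), k)


def build_manifest(post_ids: list[str], sample: list[str],
                   seed: int, criteria: dict) -> dict:
    """Record how the sample was drawn so that it can be re-derived later."""
    return {
        "inclusion_criteria": criteria,
        "randomization": {"method": "random.Random(seed).sample", "seed": seed},
        "population_size": len(post_ids),
        "population_sha256": _digest(post_ids),
        "sample_size": len(sample),
        "sample_sha256": _digest(sample),
    }


def verify(post_ids: list[str], manifest: dict) -> bool:
    """Redraw from the same population and compare against the manifest."""
    if _digest(post_ids) != manifest["population_sha256"]:
        return False
    redrawn = draw_sample(post_ids, manifest["sample_size"],
                          manifest["randomization"]["seed"])
    return _digest(redrawn) == manifest["sample_sha256"]


# Made-up population of post IDs and hypothetical inclusion criteria.
population = [f"post-{i}" for i in range(1_000)]
criteria = {"language": "fr", "window": "2025-03-17/2025-04-13"}
sample_ids = draw_sample(population, k=50, seed=42)
manifest = build_manifest(population, sample_ids, seed=42, criteria=criteria)

print(json.dumps(manifest, indent=2))
print(verify(population, manifest))
```

In practice only the platform holds the full population, so an external auditor could check the sample digest but would need regulator-mediated access to confirm the population digest.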

The research represents a significant step toward evidence-based platform regulation in Europe, providing comparable, scientifically sound measurements to inform policy debates that have often relied on assertion rather than systematic evidence. As platforms continue adjusting their content moderation approaches, such independent measurement becomes increasingly critical for protecting users' rights to accurate information and holding platforms accountable under EU frameworks.

Timeline

- December 19, 2024: SIMODS consortium invokes DSA Article 40.12, requesting data from six platforms

- January 9, 2025: X/Twitter denies data access request

- January 17, 2025: SIMODS appeals X/Twitter denial (no response received as of September 2025)

- February 13, 2025: European Commission integrates Code of Practice into DSA framework

- March 17 - April 13, 2025: Primary data collection period across platforms

- March 31, 2025: TikTok grants API access (too late for inclusion)

- July 1, 2025: Code of Conduct on Disinformation becomes operational under DSA

- December 26, 2025: SIMODS releases first report

- Early 2026: Second measurement wave planned

Summary

Who: Science Feedback consortium including Newtral, Demagog SK, Pravda, CheckFirst, and Universitat Oberta de Catalunya, funded by the European Media and Information Fund

What: First large-scale, cross-platform measurement of disinformation prevalence across six major social media platforms, analyzing approximately 2.6 million posts totaling 24 billion views

When: Research conducted between March 17 and April 13, 2025, with report released December 26, 2025

Where: Four EU member states (France, Poland, Slovakia, Spain) across six Very Large Online Platforms (Facebook, Instagram, LinkedIn, TikTok, X/Twitter, YouTube)

Why: To provide evidence-based measurement of platform compliance with the EU's Code of Conduct on Disinformation, now operational under the Digital Services Act, amid platform disengagement from earlier commitments to counter misinformation