HUMAN Security released its annual cybersecurity report, The Quadrillion Report: 2024 Cyberthreat Benchmarks, uncovering a significant trend in how businesses are responding to artificial intelligence (AI) and large language models (LLMs). The report, published just yesterday, analyzes over one quadrillion online interactions observed by HUMAN's Defense Platform throughout 2023, providing crucial insights into the evolving relationship between AI and cybersecurity.

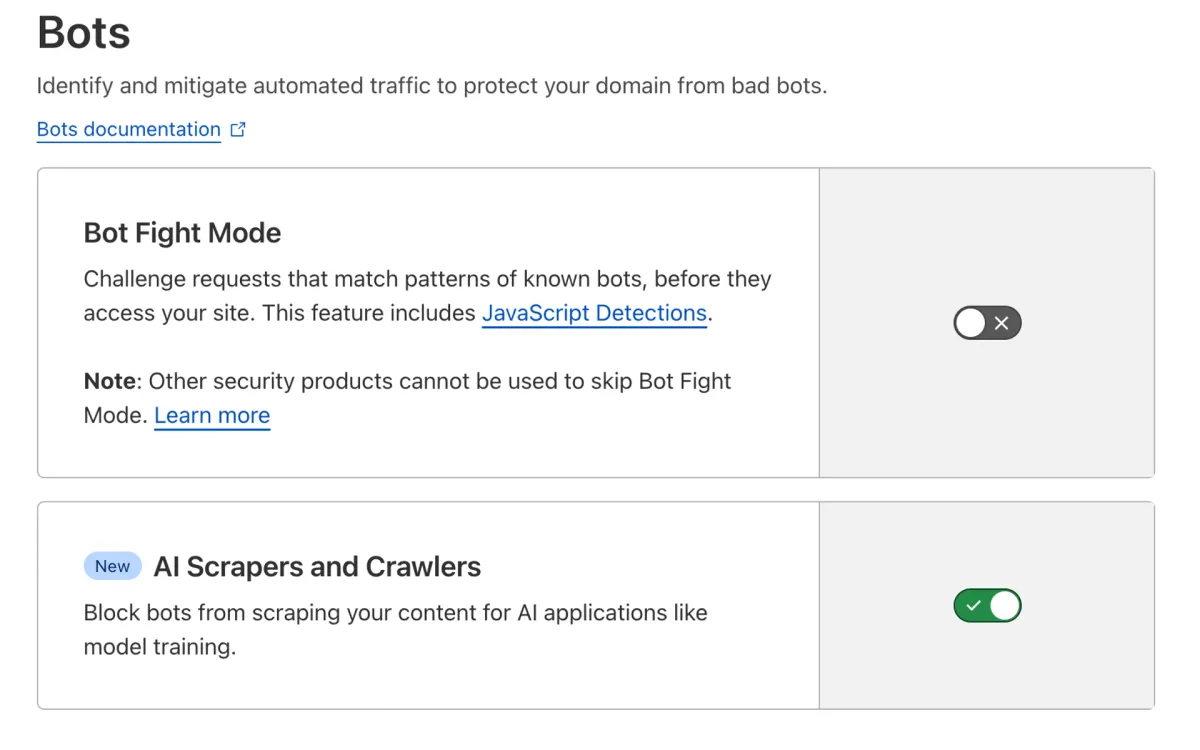

According to the report, a striking 80% of companies utilizing HUMAN's platform have chosen to block known LLM user-agents outright. This statistic underscores a growing wariness among businesses towards AI-powered web crawlers and potential AI-assisted cyber threats. The decision to block LLM access represents a significant shift in how organizations are approaching the integration of AI technologies with their online presence.

HUMAN's research team, led by Aviad Kaiserman, a Threat Intelligence Analyst at the company, delved into the reasons behind this widespread blocking of LLM user-agents. The report suggests that concerns over intellectual property theft, content scraping, and the potential for AI-enhanced cyber attacks are driving this cautious approach.

Large language models, such as OpenAI's GPT, Google's Gemini, and Anthropic's Claude, typically identify themselves when crawling websites. This self-identification allows website owners to make informed decisions about allowing or blocking these AI systems. However, the report highlights that beyond these well-known models, many lesser-known LLMs may not self-declare or may do so in ways that are not immediately obvious.

PPC LandLuís Daniel Rijo

PPC LandLuís Daniel Rijo

The Human Defense Platform offers its customers the ability to allowlist or blocklist known LLM user-agents based on their assessment of whether LLMs crawling their website would be beneficial or detrimental. The stark 80-20 split in favor of blocking suggests a significant lack of trust in the current state of AI web crawling technology.

This widespread blocking of LLMs could have far-reaching implications for the development and training of future AI models. As these models rely heavily on web-based data for training and improvement, restricted access to a substantial portion of the internet could potentially limit their growth and effectiveness.

The report also touches on the potential risks associated with allowing LLM access. One concern is the possibility of AI-generated content infringing on copyrights or trademarks. As LLMs process and recapitulate vast amounts of web content, there's a risk that they could produce outputs that violate intellectual property rights, potentially exposing the websites they crawl to legal risks.

Another factor contributing to the high blocking rate is the fear of AI-assisted cyber attacks. The report mentions instances where AI has been used to enhance password guessing techniques. In these cases, attackers feed usernames or email addresses into language models to predict potential passwords based on patterns from previously compromised credentials. This sophisticated approach to credential stuffing attacks has raised alarm bells among cybersecurity professionals.

HUMAN's comprehensive approach to cybersecurity involves the use of over 2,500 individual signals and more than 300 algorithms to determine the legitimacy of online interactions. This multi-faceted strategy allows for the protection of websites, mobile apps, and APIs from a wide array of automated attacks, including those potentially enhanced by AI.

The report also highlights the challenges in distinguishing between legitimate AI crawlers and those with malicious intent. While major AI companies provide clear identification for their crawlers, the proliferation of smaller, less well-known LLMs makes it increasingly difficult for businesses to discern friend from foe in the AI landscape.

This trend of blocking LLM user-agents is not occurring in isolation. It's part of a broader conversation about the role of AI in cybersecurity, privacy, and data protection. The report suggests that as AI technologies continue to advance, there will likely be an ongoing negotiation between the need for open access to information for AI development and the imperative to protect sensitive data and systems.

The implications of this widespread blocking extend beyond immediate cybersecurity concerns. It raises questions about the future development of AI technologies and how they will interact with the broader internet ecosystem. If a significant portion of the web becomes off-limits to AI crawlers, it could potentially create a skewed or limited dataset for future AI training, affecting the quality and breadth of AI-generated content and services.

HUMAN's report also touches on the potential for a more nuanced approach to AI access in the future. While the current trend leans heavily towards blocking, there's speculation that as AI technologies mature and more sophisticated control mechanisms are developed, businesses might adopt more granular policies. These could involve allowing certain trusted AI models limited access while maintaining strict controls over data usage and content reproduction.

The cybersecurity landscape is clearly at a crossroads when it comes to AI integration. On one hand, AI offers powerful tools for enhancing security measures and detecting complex threats. On the other, it presents new vulnerabilities and challenges that businesses are still grappling with.

As the AI field continues to evolve rapidly, the report suggests that the current high rate of LLM blocking may be a temporary phase. It anticipates that future developments in AI governance, improved transparency in AI operations, and more sophisticated control mechanisms could lead to a more balanced approach to AI integration in web ecosystems.

Key facts from the report regarding LLM blocking

80% of companies using HUMAN's platform have opted to block known LLM user-agents

Major AI models like GPT, Gemini, and Claude typically self-identify when crawling websites

Concerns driving LLM blocking include intellectual property theft, content scraping, and AI-enhanced cyber attacks

The widespread blocking could potentially impact the training and development of future AI models

Instances of AI being used for enhanced password guessing techniques have been observed

The proliferation of lesser-known LLMs makes it challenging to distinguish between legitimate and potentially malicious AI crawlers

The high blocking rate may be a temporary phase as AI governance and control mechanisms evolve