AI Agents: Google unveils framework for next-gen systems

Technical analysis of Google's September 2024 whitepaper exploring foundational components and implementations of AI agent systems.

A technical whitepaper released by Google in September 2024 examines the architecture of artificial intelligence agents in detail. Written by Julia Wiesinger, Patrick Marlow, and Vladimir Vuskovic, the paper explores how AI agents use tools to extend beyond the capabilities of a standalone language model.

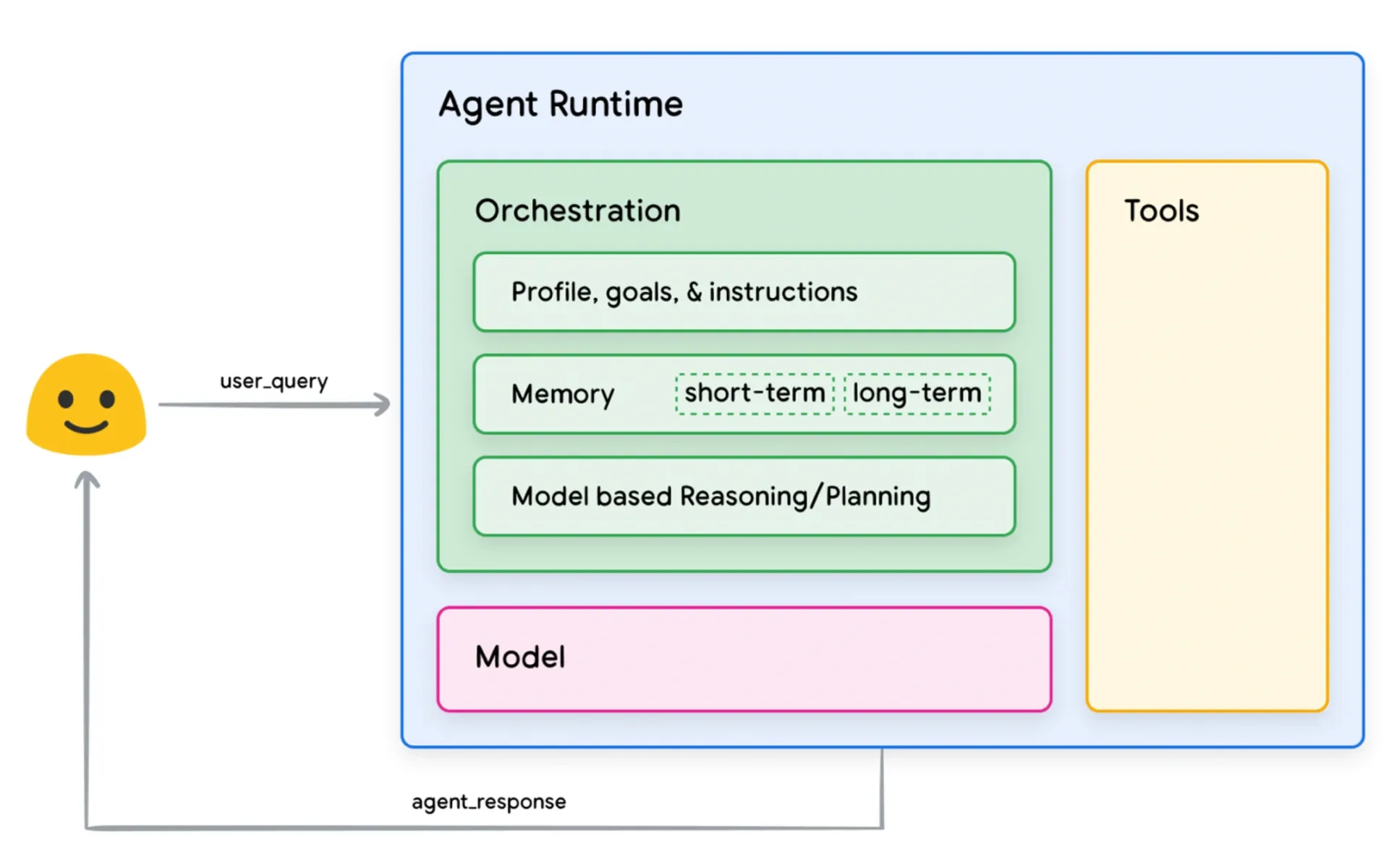

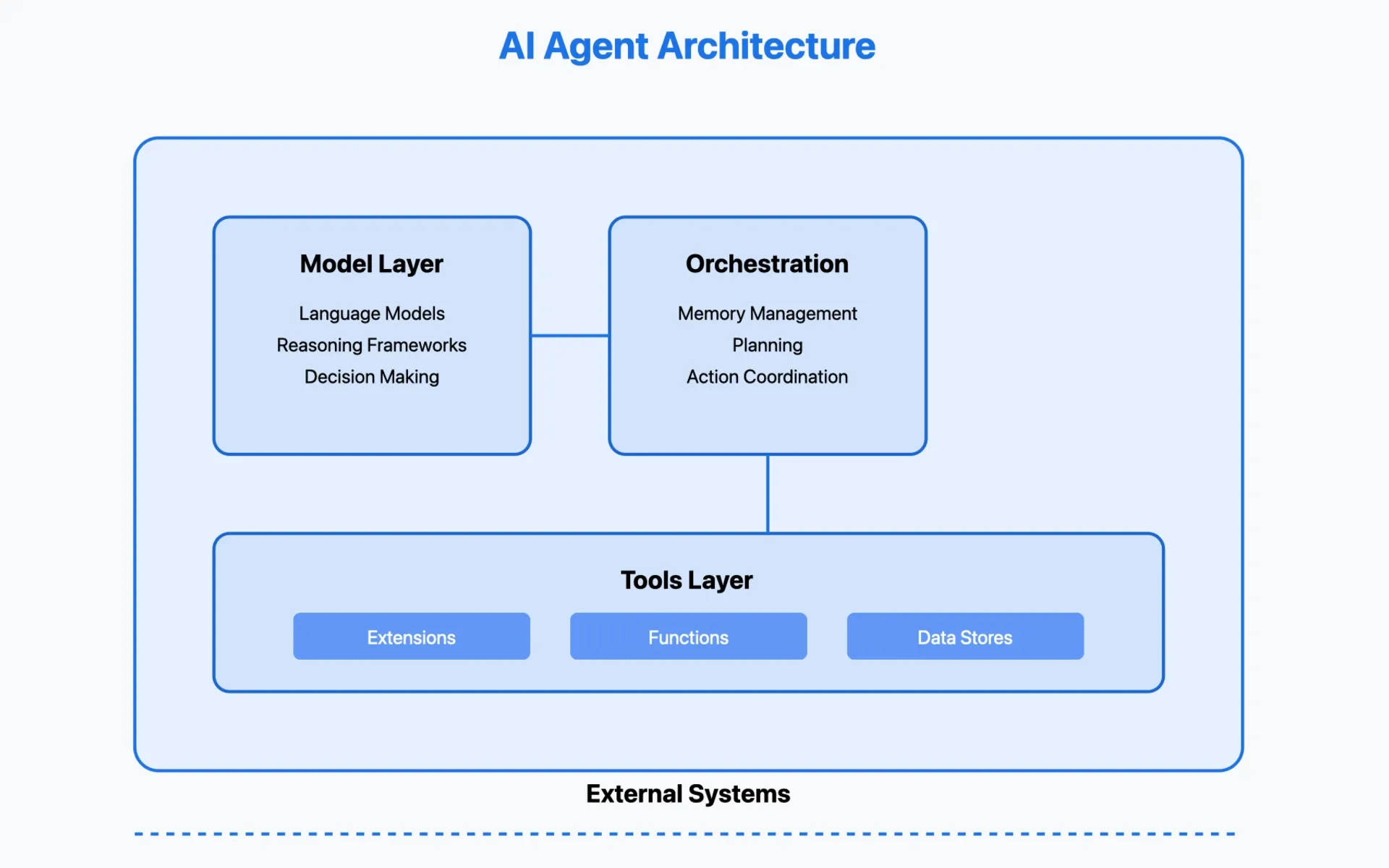

The whitepaper, published in September 2024, analyzes the three core components of an AI agent: the model layer, the orchestration layer, and the tools layer. According to the authors, these components work together to let agents process information, make decisions, and take actions in response to user queries.

At the foundation of the agent architecture lies the model layer, which serves as the central decision-making unit. According to the paper, this layer can use one or more language models of any size, provided they can follow instruction-based reasoning frameworks. The authors note that models can be general-purpose or fine-tuned, but should ideally be trained on data signatures associated with the tools the agent will use.

The orchestration layer implements a cyclical process that governs how an agent takes in information, reasons about it, and decides its next action. The paper presents several reasoning frameworks for this loop: ReAct, which interleaves reasoning steps with actions such as tool calls; Chain-of-Thought, which prompts the model to produce intermediate reasoning steps; and Tree-of-Thoughts, which explores multiple reasoning paths before committing to one. Each framework offers a distinct approach to problem-solving and decision-making within the agent.
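To make the ReAct cycle concrete, here is a minimal Python sketch of a reason, act, observe loop. It is not code from the whitepaper: `stub_model` is a scripted stand-in for a real language model, `lookup` is a hypothetical toy tool, and the `Thought:` / `Action: tool[arg]` / `Observation:` / `Final Answer:` text format is an assumed convention loosely modeled on common ReAct prompts.

```python
import re
from typing import Callable

def lookup(query: str) -> str:
    """Hypothetical toy tool standing in for an external API."""
    facts = {"capital of france": "Paris"}
    return facts.get(query.strip().lower(), "unknown")

# Assumed tool registry: tool name -> callable taking one string argument.
TOOLS: dict[str, Callable[[str], str]] = {"lookup": lookup}

ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*)\]")
FINAL_RE = re.compile(r"Final Answer:\s*(.*)")

def stub_model(transcript: str) -> str:
    """Scripted stand-in for a language model that follows the assumed ReAct format."""
    observations = re.findall(r"Observation:\s*(.*)", transcript)
    if not observations:
        return "Thought: I need an external fact.\nAction: lookup[capital of France]"
    return f"Thought: The last result answers the question.\nFinal Answer: {observations[-1]}"

def react_agent(question: str, model: Callable[[str], str], max_steps: int = 5) -> str:
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        step = model(transcript)  # reason: the model proposes a thought plus an action
        transcript += "\n" + step
        final = FINAL_RE.search(step)
        if final:
            return final.group(1).strip()
        action = ACTION_RE.search(step)
        if action is None:
            raise ValueError(f"Unparseable model output: {step!r}")
        name, arg = action.group(1), action.group(2)
        tool = TOOLS.get(name)
        observation = tool(arg) if tool else f"error: unknown tool {name}"
        transcript += f"\nObservation: {observation}"  # act, observe, then loop again
    return "no answer within step budget"

print(react_agent("What is the capital of France?", stub_model))  # prints: Paris
```

The `max_steps` budget is a design choice worth keeping in any real loop: it stops an agent whose model never produces a final answer.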

A significant portion of the technical analysis focuses on the tools layer, which enables agents to interact with external systems. The authors classify tools into three primary categories: Extensions, Functions, and Data Stores. Extensions provide standardized API interactions, Functions enable client-side execution control, and Data Stores facilitate access to structured and unstructured data sources.
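The practical difference between Extensions and Functions is where the API call runs. The following Python sketch illustrates the Functions pattern under stated assumptions and is not the whitepaper's code: a stand-in model (`stub_model_function_call`) returns a JSON function call instead of prose, and the client application decides whether and how to execute it. The function `get_flight_status`, its canned result, and the `{"name": ..., "args": ...}` JSON shape are all hypothetical.

```python
import json
from typing import Any, Callable

def get_flight_status(flight: str) -> dict[str, Any]:
    """Hypothetical client-side function; a real app might call its own backend here."""
    return {"flight": flight, "status": "on time"}

# Only functions the client explicitly registers can ever be executed.
REGISTRY: dict[str, Callable[..., Any]] = {"get_flight_status": get_flight_status}

def stub_model_function_call(user_query: str) -> str:
    """Stand-in for a model that replies with a JSON function call (query ignored here)."""
    return json.dumps({"name": "get_flight_status", "args": {"flight": "XY123"}})

def handle_model_output(raw: str) -> dict[str, Any]:
    call = json.loads(raw)
    fn = REGISTRY.get(call["name"])
    if fn is None:
        raise KeyError(f"model requested unregistered function {call['name']!r}")
    # The client, not the model, executes the call, so it can add auth,
    # validation, or human approval before anything runs.
    return fn(**call["args"])

result = handle_model_output(stub_model_function_call("Is flight XY123 on time?"))
print(result)  # prints: {'flight': 'XY123', 'status': 'on time'}
```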

The document describes how each tool type works in practice. Extensions bridge the gap between agents and APIs through a standardized interface and are executed on the agent side. Functions work differently: the model selects a function and generates its arguments, but the API call itself is executed on the client side, giving developers control over execution. Data Stores are typically implemented as vector databases, giving agents efficient retrieval over information beyond the model's training data.
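The retrieval step behind a Data Store can be sketched in a few lines of Python. This is a toy version built on assumptions: `embed` uses bag-of-words counts as a stand-in for a real embedding model, and `ToyDataStore` is a hypothetical in-memory substitute for a vector database. Ranking documents by cosine similarity to the query is the same idea vector search is built on, minus the indexing a real vector database uses to stay fast at scale.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter[str]:
    """Toy 'embedding': bag-of-words counts. A real data store would use an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter[str], b: Counter[str]) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class ToyDataStore:
    """Hypothetical in-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self.docs: list[tuple[str, Counter[str]]] = []

    def add(self, text: str) -> None:
        self.docs.append((text, embed(text)))  # index time: store text with its vector

    def search(self, query: str, k: int = 1) -> list[str]:
        q = embed(query)  # query time: embed the query the same way
        ranked = sorted(self.docs, key=lambda d: cosine(q, d[1]), reverse=True)
        return [text for text, _ in ranked[:k]]

store = ToyDataStore()
store.add("Refunds are processed within 14 days of the return.")
store.add("Our office is open Monday to Friday.")
context = store.search("How long do refunds take?")
# The retrieved text is placed in the prompt so the model can ground its answer.
prompt = f"Answer using this context: {context[0]}\nQuestion: How long do refunds take?"
```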

For practical implementation, the paper describes various approaches to enhance model performance through targeted learning strategies. These include in-context learning, retrieval-based in-context learning, and fine-tuning based learning. Each method serves different use cases and operational requirements within the agent architecture.
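Retrieval-based in-context learning can be illustrated by choosing few-shot examples at query time rather than hard-coding them. In this hedged Python sketch, the example bank, the tool-call strings, and the use of word-overlap (Jaccard) similarity in place of embedding similarity are all assumptions made for illustration.

```python
import re

# Hypothetical example bank of (user request, desired tool call) pairs.
EXAMPLES = [
    ("Find flights to Paris", "search_flights(destination='Paris')"),
    ("Book a table for two tonight", "book_restaurant(party_size=2)"),
    ("Any cheap flights to Tokyo?", "search_flights(destination='Tokyo')"),
]

def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def jaccard(a: set[str], b: set[str]) -> float:
    return len(a & b) / len(a | b) if a | b else 0.0

def build_prompt(query: str, k: int = 2) -> str:
    q = tokens(query)
    # Retrieval step: pick the k stored examples most similar to the query.
    best = sorted(EXAMPLES, key=lambda ex: jaccard(q, tokens(ex[0])), reverse=True)[:k]
    shots = "\n".join(f"Request: {r}\nCall: {c}" for r, c in best)
    # In-context step: the examples go into the prompt; no model weights change.
    return f"{shots}\nRequest: {query}\nCall:"

print(build_prompt("Show me flights to Rome"))
```

Fine-tuning-based learning would instead bake such examples into the model's weights ahead of time, trading flexibility for consistency.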

The paper also walks through a sample implementation built with the open-source LangChain and LangGraph libraries. It shows how a model, a reasoning loop, and tools interact in a realistic scenario, connecting the paper's conceptual framework to working code.

The white paper concludes with a comprehensive examination of production-grade implementations using Vertex AI agents. The technical architecture presented illustrates how Google's managed services integrate the core components while providing additional features for testing, evaluation, and continuous improvement.

Looking toward future developments, the authors note the potential for advancing agent capabilities through tool sophistication and enhanced reasoning frameworks. The document specifically highlights the emergence of "agent chaining" as a strategic approach to complex problem-solving, where specialized agents work together to address multifaceted challenges.
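Agent chaining can take many forms; one of the simplest is a sequential pipeline in which each specialized agent's output becomes the next agent's input. The Python sketch below assumes that pipeline shape, which the paper does not prescribe; the `Agent` class, the `chain` helper, and the three lambda "agents" are hypothetical stand-ins for LLM-backed specialists.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Agent:
    """Hypothetical specialized agent: a name plus a function from input text to output text."""
    name: str
    run: Callable[[str], str]

def chain(agents: list[Agent], task: str) -> tuple[str, list[str]]:
    """Pass each agent's output to the next agent; return the final output and a trace."""
    trace: list[str] = []
    current = task
    for agent in agents:
        current = agent.run(current)
        trace.append(f"{agent.name}: {current}")
    return current, trace

# Toy stand-ins for LLM-backed specialists.
researcher = Agent("researcher", lambda t: f"facts about [{t}]")
writer = Agent("writer", lambda facts: f"summary of {facts}")
reviewer = Agent("reviewer", lambda draft: draft + " (reviewed)")

result, trace = chain([researcher, writer, reviewer], "solar panels")
print(result)  # prints: summary of facts about [solar panels] (reviewed)
```

Keeping a trace of each hand-off makes it easier to see which specialist introduced an error when the final output is wrong.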

The publication includes extensive technical documentation, with 42 pages of detailed specifications, implementation guidelines, and architectural patterns. The comprehensive nature of the white paper positions it as a significant technical resource for developers and researchers working on AI agent implementations.

Google has made this technical documentation publicly available through their standard distribution channels, enabling practitioners to access detailed implementation guidance for building production-ready agent systems. The paper serves as both a technical specification and a practical guide for implementing AI agent architectures in real-world applications.

Understanding AI agents

AI agents represent a significant advancement by combining language model capabilities with real-world interactions. According to the September 2024 Google whitepaper authored by Wiesinger, Marlow, and Vuskovic, AI agents fundamentally differ from traditional language models in their ability to perceive, reason about, and influence the external world.

At their core, AI agents are sophisticated applications designed to achieve specific goals through a combination of observation and action. According to the technical documentation, these systems operate autonomously, making independent decisions based on defined objectives. Unlike standard language models, which rely solely on their training data, agents can actively gather new information and interact with external systems to accomplish their tasks.

The architecture of an AI agent

The architecture of an AI agent consists of three essential components that work in harmony. At the foundation lies the model layer, which serves as the central decision-maker. This component can utilize one or multiple language models of varying sizes, provided they can follow instruction-based reasoning frameworks like ReAct, Chain-of-Thought, or Tree-of-Thoughts. The orchestration layer governs the agent's cognitive processes, managing how it takes in information, performs reasoning, and determines its next actions. The tools layer enables the agent to interact with external systems and data sources.

To illustrate how these components work together, the whitepaper presents an analogy of a chef in a busy kitchen. Just as a chef gathers ingredients, plans meal preparation, and adjusts based on available resources and customer feedback, an AI agent collects information, develops action plans, and modifies its approach based on results and user requirements. This cyclical process of information intake, planning, execution, and adjustment defines the agent's cognitive architecture.

Distinction between traditional language models and AI agents

The whitepaper emphasizes a crucial distinction between traditional language models and AI agents. While language models excel at working with the knowledge captured in their training data, they remain confined to that knowledge. Agents, however, can extend beyond this limitation through their ability to use tools. These tools come in three primary forms: Extensions, which provide standardized API interactions; Functions, which enable client-side execution control; and Data Stores, which facilitate access to various types of information.

A key advantage of AI agents lies in their ability to complement human cognitive processes. The technical documentation explains how agents can assist with complex tasks by breaking them down into manageable steps, gathering relevant information, and executing actions in a coordinated manner. This capability makes them particularly valuable in scenarios requiring real-time data processing, multi-step planning, or interaction with multiple external systems.
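The decompose, gather, and execute pattern described above resembles what is often called plan-and-execute. The following is a minimal Python sketch of that pattern, not the whitepaper's method; it assumes a stand-in `stub_planner` in place of a model call and hypothetical `weather` and `currency` tools backed by canned data.

```python
from typing import Callable

# Hypothetical tools backed by canned data instead of real APIs.
TOOLS: dict[str, Callable[[str], str]] = {
    "weather": lambda city: {"Lisbon": "sunny, 24C"}.get(city, "unknown"),
    "currency": lambda country: {"Portugal": "EUR"}.get(country, "unknown"),
}

def stub_planner(task: str) -> list[tuple[str, str]]:
    """Stand-in for a model that decomposes a task into (tool, argument) steps."""
    return [("weather", "Lisbon"), ("currency", "Portugal")]

def plan_and_execute(task: str, planner: Callable[[str], list[tuple[str, str]]]) -> dict[str, str]:
    results: dict[str, str] = {}
    for tool_name, arg in planner(task):  # 1. break the task into steps
        results[f"{tool_name}({arg})"] = TOOLS[tool_name](arg)  # 2. gather information per step
    return results  # 3. a final model call would synthesize these into an answer

print(plan_and_execute("Plan a day trip to Lisbon", stub_planner))
# prints: {'weather(Lisbon)': 'sunny, 24C', 'currency(Portugal)': 'EUR'}
```

Unlike a ReAct-style loop, the plan here is fixed before any tool runs, which is cheaper but cannot adapt if an early step returns something unexpected.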

The whitepaper details the learning capabilities of AI agents through three distinct approaches. In-context learning allows agents to adapt to new situations using examples and instructions supplied in the prompt. Retrieval-based in-context learning lets them dynamically pull relevant stored information and examples into the prompt. Fine-tuning-based learning helps agents develop specialized expertise in particular domains or tasks.

Practical implementation of AI agents

Practical implementation of AI agents involves careful consideration of their components and capabilities. The document describes how developers can use frameworks like LangChain and LangGraph to construct agent architectures, emphasizing the importance of selecting appropriate tools and reasoning frameworks for specific use cases. The integration of these elements creates a system capable of understanding user queries, formulating response strategies, and executing actions through various tools and APIs.

The future of AI agents, according to the whitepaper, lies in their increasing sophistication and ability to handle complex tasks. The concept of "agent chaining" emerges as a particularly promising development, where multiple specialized agents collaborate to address complex challenges. This approach mirrors human expert collaboration, with each agent contributing specific expertise to achieve broader objectives.

The document concludes by noting that while AI agents represent a significant advancement in artificial intelligence, their development requires careful consideration of architectural choices, tool selection, and implementation strategies. Success in deploying AI agents depends on understanding their capabilities and limitations, and appropriately matching them to specific use cases and requirements.

This comprehensive understanding of AI agents highlights their role as bridge-builders between human intelligence and machine capabilities, creating systems that can effectively assist in complex problem-solving while maintaining the flexibility to adapt to new challenges and requirements.