Click fraud is a type of fraud in pay-per-click (PPC) online advertising in which a person, automated script, or computer program imitates a legitimate user clicking on an ad without having any genuine interest in the advertised content. According to Wikipedia (as last updated in October 2024), the practice exploits advertising networks in which website owners are paid according to how many visitors click the ads displayed on their sites.

Click fraud is carried out in three primary ways:

- Automated bots programmed to click ads repeatedly

- Human-operated click farms where workers manually click ads

- Non-contracting parties clicking competitors' ads to deplete their budgets

According to Google's Ad Traffic Quality team, invalid traffic includes any clicks or impressions that artificially inflate advertiser costs or publisher earnings, covering both intentional fraud and accidental clicks.

Types of Click Fraud Activities

The fraud manifests in several distinct forms:

Publisher Fraud

- Website owners clicking their own ads

- Publishers encouraging users to click ads

- Deceptive ad placements designed to induce accidental clicks

Competitive Fraud

- Competitors targeting each other's ads to drain advertising budgets

- Non-contracting parties clicking ads to harm advertisers

- Malicious attacks aimed at specific companies

Bot-Driven Fraud

- Automated scripts simulating human behavior

- Botnet operations controlling multiple computers

- Sophisticated programs mimicking legitimate user patterns

Technical Mechanics Behind Click Fraud

According to technical documentation from Cloudflare, click fraud employs sophisticated methods to evade detection systems.

Bot Operations

Modern click bots incorporate advanced features:

- Randomized timing between clicks

- Simulated mouse movements

- Varied interaction patterns

- Rotation across many IP addresses

- Botnet distribution across thousands of devices

Fraud Detection Indicators

Key indicators of click fraud include:

- Repeated clicks from similar IP addresses

- Abnormal spikes in click rates

- Unusual geographic patterns

- High click volumes without conversions

- Suspicious timing patterns
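The first and fourth indicators above lend themselves to simple rules. The following Python sketch is a minimal illustration, not a production detector. It assumes each click is recorded with a source IP, a timestamp in seconds, and a conversion flag; the `Click` type, the function names, and the thresholds (more than 3 clicks from one IP within 60 seconds, or 10 or more clicks with no conversions) are hypothetical values chosen for the example.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Click:
    ip: str           # source IP address
    ts: float         # click time in seconds
    converted: bool   # did the click lead to a conversion?


def flag_repeat_ips(clicks, window_s=60.0, max_clicks=3):
    """IPs with more than max_clicks clicks inside any window_s-second span."""
    recent = defaultdict(deque)  # ip -> click times still inside the window
    flagged = set()
    for click in sorted(clicks, key=lambda k: k.ts):
        times = recent[click.ip]
        times.append(click.ts)
        # Slide the window: drop clicks older than window_s seconds.
        while click.ts - times[0] > window_s:
            times.popleft()
        if len(times) > max_clicks:
            flagged.add(click.ip)
    return flagged


def flag_no_conversion_ips(clicks, min_clicks=10):
    """IPs with at least min_clicks clicks and zero conversions."""
    totals = defaultdict(int)
    conversions = defaultdict(int)
    for click in clicks:
        totals[click.ip] += 1
        conversions[click.ip] += int(click.converted)
    return {ip for ip, n in totals.items() if n >= min_clicks and conversions[ip] == 0}


# Hypothetical sample: one IP clicks four times in 15 seconds without converting.
clicks = [Click("203.0.113.7", t, False) for t in (0, 5, 10, 15)]
clicks.append(Click("198.51.100.2", 3, True))
print(flag_repeat_ips(clicks))                        # {'203.0.113.7'}
print(flag_no_conversion_ips(clicks, min_clicks=4))   # {'203.0.113.7'}
```

Because modern bots rotate IP addresses, per-IP rules like these catch only the crudest traffic; in practice they serve as one signal among several.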

Impact on Digital Advertising Ecosystem

Click fraud fundamentally undermines the digital advertising marketplace, affecting multiple stakeholders in the ecosystem.

Financial Impact

The economic consequences are substantial:

- Advertisers pay for worthless clicks

- Ad networks lose credibility

- Publishers face revenue clawbacks

- Legitimate websites suffer from skewed analytics

- Marketing budgets are wasted on fake engagement
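The cost of worthless clicks can be made concrete with simple arithmetic. Assuming a fraction r of paid clicks is invalid and none of them are credited back, an advertiser paying a cost per click C effectively pays C / (1 - r) for each genuine click. The sketch below encodes that relationship; the function names and campaign figures are illustrative and not drawn from any cited source.

```python
def effective_cost_per_valid_click(cpc: float, invalid_rate: float) -> float:
    """What each genuine click really costs when invalid clicks are not refunded."""
    if not 0 <= invalid_rate < 1:
        raise ValueError("invalid_rate must be in [0, 1)")
    return cpc / (1 - invalid_rate)


def wasted_spend(paid_clicks: int, cpc: float, invalid_rate: float) -> float:
    """Budget spent on invalid clicks, assuming none are credited back."""
    return paid_clicks * invalid_rate * cpc


# Hypothetical campaign: $2.00 CPC, 20% invalid clicks, 10,000 paid clicks.
print(round(effective_cost_per_valid_click(2.00, 0.20), 2))  # 2.5
print(round(wasted_spend(10_000, 2.00, 0.20), 2))            # 4000.0
```

In this hypothetical case, each genuine click costs $2.50 instead of $2.00, and $4,000 of the budget buys nothing.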

Detection and Prevention Measures

According to Google's invalid traffic documentation, prevention measures include:

- Automated filtering systems

- Manual review processes

- IP address monitoring

- Click pattern analysis

- User behavior verification

- Traffic quality assessment
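Automated filtering and manual review often work together: high-confidence cases are filtered automatically, and ambiguous ones are queued for analysts. The following is a minimal Python sketch of that triage. It assumes upstream checks (IP monitoring, click pattern analysis, behavior verification) have already produced boolean signals. The signal names, weights, and thresholds are hypothetical and are not taken from Google's documentation.

```python
from dataclasses import dataclass


@dataclass
class ClickSignals:
    # Each flag is assumed to come from a hypothetical upstream check.
    ip_on_blocklist: bool = False
    burst_from_ip: bool = False
    no_mouse_activity: bool = False
    datacenter_ip: bool = False


# Hypothetical weights; a real system would tune these against labeled traffic.
WEIGHTS = {
    "ip_on_blocklist": 1.0,
    "burst_from_ip": 0.5,
    "no_mouse_activity": 0.3,
    "datacenter_ip": 0.4,
}


def triage(signals: ClickSignals, filter_at: float = 0.8, review_at: float = 0.4) -> str:
    """Return 'filter', 'manual_review', or 'accept' for one click."""
    score = sum(w for name, w in WEIGHTS.items() if getattr(signals, name))
    if score >= filter_at:
        return "filter"          # confident enough to drop automatically
    if score >= review_at:
        return "manual_review"   # ambiguous: queue for a human analyst
    return "accept"


print(triage(ClickSignals(ip_on_blocklist=True)))  # filter
print(triage(ClickSignals(datacenter_ip=True)))    # manual_review
```

Routing only the middle band to humans keeps manual review focused on the cases where automated rules are least reliable.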

The Evolution of Click Fraud Prevention

The industry has developed sophisticated methods to combat click fraud, though challenges remain.

Key prevention strategies include:

- Machine learning algorithms

- Real-time monitoring systems

- Cross-platform collaboration

- Industry standards development

- Traffic quality verification
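Real-time monitoring commonly includes watching for abnormal spikes in click rates. The sketch below flags a time bucket (for example, one hour) whose click count sits far above recent history. It uses a rolling z-score, which is a simple statistical baseline rather than a machine learning model. The class name, window length, and threshold of 3 standard deviations are assumptions made for illustration.

```python
import statistics
from collections import deque


class ClickSpikeDetector:
    """Flag a bucket whose click count is far above the rolling baseline."""

    def __init__(self, window: int = 24, threshold: float = 3.0):
        self.history = deque(maxlen=window)  # most recent bucket counts
        self.threshold = threshold           # z-score above which we flag

    def observe(self, clicks: int) -> bool:
        is_spike = False
        if len(self.history) >= 2:  # stdev needs at least two points
            mean = statistics.mean(self.history)
            # Treat a perfectly flat baseline as unit spread to avoid dividing by zero.
            stdev = statistics.stdev(self.history) or 1.0
            is_spike = (clicks - mean) / stdev > self.threshold
        self.history.append(clicks)
        return is_spike


detector = ClickSpikeDetector(window=5)
for hourly in [100, 102, 98, 101, 99]:
    detector.observe(hourly)
print(detector.observe(300))  # True
```

A rolling baseline adapts to gradual growth in legitimate traffic. However, a slow, steady ramp of fraudulent clicks can also be absorbed into that baseline, which is one reason rules like this are paired with other signals.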

Understanding the Distinction: Invalid Traffic vs. Click Fraud

Invalid traffic covers a broader spectrum of non-legitimate activity than click fraud, according to documentation from the Media Rating Council (MRC) and Google's Ad Traffic Quality team. Click fraud specifically involves malicious intent to generate fraudulent clicks. Invalid traffic, by contrast, can include both intentional and unintentional non-human traffic, as well as accidental clicks by real users.

According to Google's Ad Traffic Quality documentation, invalid traffic includes any activity that doesn't come from a real user with genuine interest. This definition creates important distinctions between malicious and legitimate non-human traffic:

Legitimate Non-Human Traffic

- Software testing by publishers and advertisers

- Tag testing procedures

- Corporate-mandated transactions

- Known crawlers for site governance

- Brand safety verification systems

- Contextual classification tools

- Measurement and verification services

These legitimate forms of non-human traffic serve important functions in the digital advertising ecosystem but should still be filtered from paid advertising metrics. Publishers can provide mechanisms to identify and segregate this traffic or declare it to measurement organizations and ad servers.

The MRC standards specify that development and testing environments should be logically segregated from production environments, or clearly distinguished within them, so that test and production transactions are not commingled. With proper support, such traffic may be excluded from impressions altogether using mechanisms such as:

- Dedicated IP addresses

- Specific campaign IDs

- Contractual documentation

- Other evidential support for the activity

For analytics accuracy, excluded test impressions may be separately reported to help reconcile and minimize discrepancies. The MRC standards also allow for discrete reporting of known and disclosed crawlers, bots, spiders, and other site governance activities present for brand safety and contextual classification.
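Segregating declared test traffic can be as simple as checking each impression against the dedicated IPs and campaign IDs a publisher has disclosed. This Python sketch assumes a dict-per-impression schema. It uses hypothetical test IPs from the 192.0.2.0/24 documentation range and a hypothetical campaign ID. It returns production traffic along with counts of excluded test impressions that could be reported separately.

```python
from collections import Counter

# Hypothetical declarations a publisher might share with its ad server.
TEST_IPS = {"192.0.2.10", "192.0.2.11"}
TEST_CAMPAIGN_IDS = {"qa-tag-check"}


def split_impressions(impressions):
    """Separate declared test traffic from production traffic.

    Each impression is assumed to be a dict with 'ip' and 'campaign_id' keys.
    Returns (production, report); report counts excluded test impressions by
    reason so they can be reported separately for reconciliation.
    """
    production = []
    report = Counter()
    for imp in impressions:
        if imp["ip"] in TEST_IPS:
            report["test_ip"] += 1
        elif imp["campaign_id"] in TEST_CAMPAIGN_IDS:
            report["test_campaign"] += 1
        else:
            production.append(imp)
    return production, report


prod, report = split_impressions([
    {"ip": "192.0.2.10", "campaign_id": "spring"},
    {"ip": "203.0.113.5", "campaign_id": "qa-tag-check"},
    {"ip": "203.0.113.6", "campaign_id": "spring"},
])
print(len(prod), dict(report))  # 1 {'test_ip': 1, 'test_campaign': 1}
```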

Legitimate non-human traffic contrasts sharply with click fraud, which the documentation defines as explicitly malicious activity designed to:

- Generate fraudulent revenue through fake clicks

- Deplete competitors' advertising budgets

- Artificially inflate metrics

- Create false impressions of engagement

- Game search engine rankings

Technical Detection Methods in Ad Serving Systems

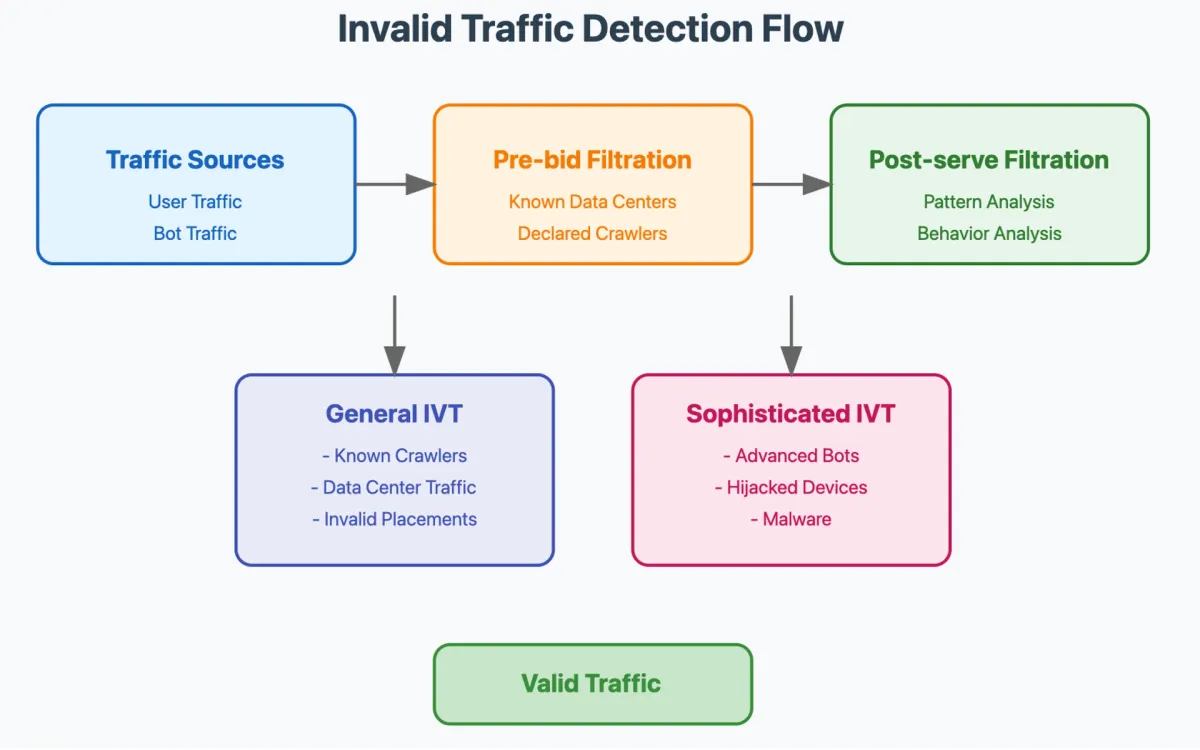

According to Google Campaign Manager documentation, major ad serving platforms employ sophisticated multi-layered systems to detect and filter invalid traffic. These systems operate through both pre-bid and post-serve filtration:

Pre-Bid Filtration

- Traffic identified as invalid before inventory is bid on

- Prevents bidding on known fraudulent inventory

- Saves advertisers from wasting budget on invalid impressions

- Utilizes real-time detection signals

Post-Serve Filtration

- Removes invalid traffic after an event occurs

- Credits back charges for invalid clicks/impressions

- Allows for more complex analysis of traffic patterns

- Can incorporate historical data analysis
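The difference between the two stages is mainly one of timing. Pre-bid filtration decides whether to spend at all, while post-serve filtration corrects charges after the fact. The following is a minimal Python sketch of both stages. It assumes a hypothetical blocklist of placement IDs and a billing record whose `invalid` flag is set by later analysis.

```python
from dataclasses import dataclass

# Hypothetical blocklist of placements already known to be invalid.
KNOWN_INVALID_PLACEMENTS = {"site-123/slot-a"}


def should_bid(placement_id: str) -> bool:
    """Pre-bid filtration: skip inventory flagged as invalid before spending."""
    return placement_id not in KNOWN_INVALID_PLACEMENTS


@dataclass
class BilledClick:
    click_id: str
    cost: float
    invalid: bool = False  # set later by post-serve analysis


def post_serve_credit(clicks: list[BilledClick]) -> float:
    """Post-serve filtration: total to credit back for clicks later judged invalid."""
    return sum(c.cost for c in clicks if c.invalid)


print(should_bid("site-123/slot-a"))  # False
billed = [BilledClick("c1", 1.5), BilledClick("c2", 2.0, invalid=True),
          BilledClick("c3", 0.5, invalid=True)]
print(post_serve_credit(billed))      # 2.5
```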

Google Campaign Manager specifically categorizes invalid traffic into:

General Invalid Traffic (GIVT)

- Data Center Traffic: Traffic originating from servers in data centers linked to invalid activity

- Known Crawlers: Programs or automated scripts that declare themselves as non-human

- Irregular Patterns: Traffic with attributes associated with known irregular patterns

Sophisticated Invalid Traffic (SIVT)

- Automated Browsing: Programs requesting content without user involvement

- False Representation: Ad requests different from actual inventory supplied

- Misleading User Interface: Modified pages falsely including ads

- Manipulated Behavior: Programs triggering interactions without user consent

- Incentivized Behavior: Explicit incentives driving artificial interactions
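GIVT can generally be identified through routine, list-based checks, while SIVT requires deeper analysis. The following Python sketch illustrates that split under stated assumptions. The data-center range (a reserved documentation range) and the crawler user-agent tokens are placeholders for industry-maintained lists, and `automation_detected` stands in for whatever advanced analysis flags SIVT. This is not Campaign Manager's actual logic.

```python
import ipaddress

# Placeholder inputs; real systems rely on maintained crawler lists and data-center IP feeds.
DATA_CENTER_NETWORKS = [ipaddress.ip_network("198.51.100.0/24")]  # hypothetical range
DECLARED_CRAWLER_TOKENS = ("googlebot", "bingbot")  # substrings of self-declared bot UAs


def classify(ip: str, user_agent: str, automation_detected: bool = False) -> str:
    """Return 'GIVT', 'SIVT', or 'valid' for one request.

    GIVT: cheap list-based checks (data-center IPs, self-declared crawlers).
    SIVT: needs deeper analysis; a hypothetical upstream flag stands in for it here.
    """
    addr = ipaddress.ip_address(ip)
    if any(addr in net for net in DATA_CENTER_NETWORKS):
        return "GIVT"
    if any(tok in user_agent.lower() for tok in DECLARED_CRAWLER_TOKENS):
        return "GIVT"
    if automation_detected:
        return "SIVT"
    return "valid"


print(classify("198.51.100.20", "Mozilla/5.0"))                           # GIVT
print(classify("203.0.113.9", "Mozilla/5.0", automation_detected=True))  # SIVT
```

The ordering reflects cost: inexpensive list checks run first, so only traffic that passes them needs the more expensive SIVT analysis.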

Implementation Details

- Integration with third-party verification services like HUMAN

- Automated filtering systems

- Machine learning models for pattern detection

- Manual review processes

- Cross-platform signal analysis

MRC Standards and Industry Best Practices

The Media Rating Council's Invalid Traffic Detection and Filtration Standards Addendum establishes comprehensive requirements for measurement organizations. Key components include:

Organizational Requirements

- Traffic Quality Office with dedicated leadership

- Data analysis function for research and monitoring

- Process development and modification procedures

- Business partner qualification protocols

- Communication frameworks for internal and external stakeholders

Detection Requirements

- Continuous monitoring across all measured traffic

- Regular updates to detection methods

- Increased specificity in filtration requirements

- Higher diligence standards for protecting metrics

The MRC also sets specific requirements in the following areas:

Risk Assessment

- Annual evaluation for both GIVT and SIVT

- Platform-specific analysis (desktop, mobile, OTT)

- Consideration of traffic segmentation

- Direct linkage between risk assessment and controls
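Platform-specific analysis starts from knowing where invalid traffic concentrates. One input a risk assessment might use is the observed IVT rate per segment. The Python sketch below computes that rate and ranks the segments above a threshold. The event schema and the 5% threshold are hypothetical, and the calculation is an illustration, not a procedure prescribed by the MRC.

```python
from collections import defaultdict


def ivt_rate_by_segment(events):
    """Invalid-traffic rate per segment from (segment, is_invalid) pairs (assumed schema)."""
    totals = defaultdict(int)
    invalid = defaultdict(int)
    for segment, is_invalid in events:
        totals[segment] += 1
        invalid[segment] += int(is_invalid)
    return {seg: invalid[seg] / totals[seg] for seg in totals}


def high_risk_segments(rates, threshold=0.05):
    """Segments whose IVT rate exceeds a hypothetical threshold, worst first."""
    return sorted((s for s, r in rates.items() if r > threshold), key=lambda s: -rates[s])


# Hypothetical sample: 100 events per platform segment.
events = ([("desktop", False)] * 95 + [("desktop", True)] * 5
          + [("mobile", False)] * 90 + [("mobile", True)] * 10
          + [("ott", False)] * 80 + [("ott", True)] * 20)
rates = ivt_rate_by_segment(events)
print(high_risk_segments(rates))  # ['ott', 'mobile']
```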

Documentation Standards

- Detailed written internal standards

- Clear process documentation

- Regular updates to reflect current practices

- Comprehensive training materials

Communication Requirements

- Internal notifications for awareness

- Industry body communications (MRC, IAB, TAG)

- Best practice sharing with practitioners

- Legal and law enforcement communication when necessary

The MRC standards emphasize the importance of:

- Empirical evidence supporting detection parameters

- Regular review and updates of processes

- Clear escalation procedures

- Strong employee policies against fraud

- Robust change control procedures

- Strict access controls

- Comprehensive disclosure policies

These requirements work together to ensure consistent, effective invalid traffic detection across the digital advertising ecosystem.

Key Facts

- Click fraud occurs when automated or manual clicks artificially inflate advertising costs

- Invalid traffic includes both intentional fraud and accidental clicks

- Detection systems analyze IP addresses, timing patterns, and user behavior

- Prevention requires both automated and manual review processes

- Publishers are responsible for ensuring valid traffic on their ads

- Click fraud affects both large and small advertisers

- Both automated bots and human click farms perpetrate fraud

- Prevention requires ongoing adaptation to new fraud techniques

- Invalid traffic includes both malicious and legitimate non-human activity

- Ad servers employ both pre-bid and post-serve filtration

- MRC requires annual risk assessments

- Documentation must be detailed and regularly updated

- Communication protocols must protect against reverse engineering

- Business partner qualification is mandatory

- Systems must continuously evolve to detect new threats
