The Autoriteit Persoonsgegevens (AP) published a comprehensive guide on October 23, 2025, outlining practical steps for organisations to build sufficient AI literacy among staff and contractors working with artificial intelligence systems. This second guide follows initial recommendations published in January 2025 and comes as enforcement of the EU AI Act approaches critical milestones.

According to the AP, organisations that develop or deploy AI must ensure sufficient knowledge, skills and understanding among individuals operating these systems. This obligation applies to both staff and external parties deploying AI systems on behalf of an organisation, including contractors, service providers and clients. The AI literacy requirement has applied since February 2, 2025, as part of the broader AI Act framework, while obligations for general-purpose AI models began to apply on August 2, 2025.

Subscribe PPC Land newsletter ✉️ for similar stories like this one. Receive the news every day in your inbox. Free of ads. 10 USD per year.

The guide emerged from research conducted between April and June 2025. The AP launched a call for input in April, seeking responses from Dutch organisations about their approaches to AI literacy. Results from this consultation revealed that many organisations remain in early stages of developing comprehensive AI literacy programmes. Survey data showed 54% of responding organisations reported AI literacy on their administrative agenda, while 35% indicated partial attention to the topic and 11% reported no current focus.

The European Commission provided clarification on AI literacy requirements through questions and answers documentation released in mid-2025. According to this guidance, no single set of measures guarantees adequate AI literacy across all contexts. The appropriate approach depends on three factors: the technical knowledge and experience of those working with AI systems, the environment where systems operate, and the individuals or groups affected by these systems.

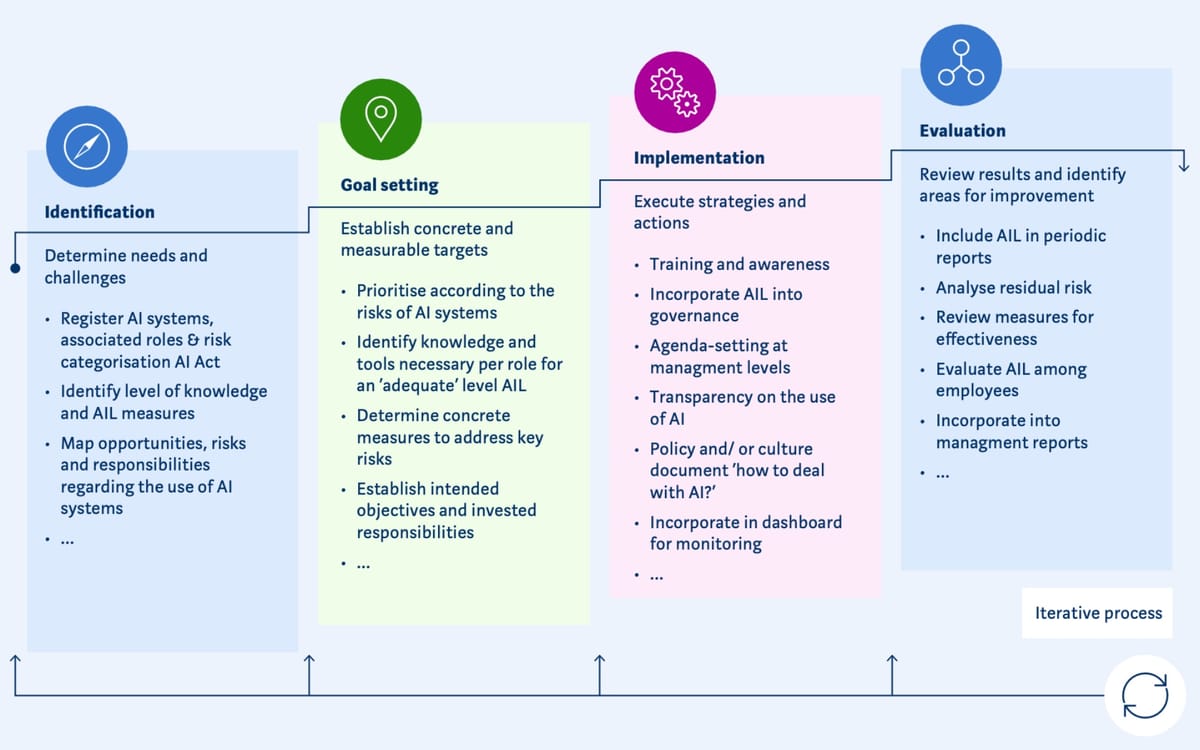

The AP's framework establishes four distinct phases for building AI literacy. The identification phase requires organisations to determine which AI systems operate within their structure and assess associated risks and opportunities. This includes documenting the degree of autonomy each system possesses, with higher autonomy levels requiring increased literacy focus. Organisations must map current knowledge levels among staff working with different systems and identify opportunities for AI deployment within their operations.
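For readers who want something concrete, the identification phase can be pictured as a simple register of AI systems. The sketch below is illustrative only: the `Autonomy` scale, the 1-to-5 `staff_knowledge` scale, the scoring formula and the example systems are all assumptions made for this example and do not come from the AP guide.

```python
from dataclasses import dataclass
from enum import IntEnum


class Autonomy(IntEnum):
    # Hypothetical three-level scale; the guide does not prescribe one.
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class AISystemRecord:
    name: str
    autonomy: Autonomy
    high_risk: bool       # whether the organisation classifies it as high-risk
    staff_knowledge: int  # self-assessed, 1 (low) to 5 (high); illustrative scale


def literacy_priority(record: AISystemRecord) -> int:
    """Illustrative score: more autonomy and less staff knowledge rank higher."""
    score = int(record.autonomy) * (6 - record.staff_knowledge)
    if record.high_risk:
        score += 10  # assumed weighting so high-risk systems surface first
    return score


def prioritise(register: list[AISystemRecord]) -> list[AISystemRecord]:
    """Sort the register so systems needing the most literacy focus come first."""
    return sorted(register, key=literacy_priority, reverse=True)


# Hypothetical example entries.
register = [
    AISystemRecord("customer chatbot", Autonomy.HIGH, False, 2),
    AISystemRecord("CV screening tool", Autonomy.MEDIUM, True, 3),
    AISystemRecord("spelling assistant", Autonomy.LOW, False, 4),
]

for record in prioritise(register):
    print(record.name, literacy_priority(record))
```

Any real register would use the organisation's own risk classification and knowledge assessment rather than these made-up scales; the point is only that autonomy, risk and current knowledge are recorded per system and used to decide where literacy efforts go first.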

Documentation requirements represent a critical component of demonstrating compliance. According to the guide, organisations must maintain internal records tracking their approach and progress toward sufficient AI literacy. This documentation may prove essential during enforcement actions, as insufficient training or guidance could contribute to incidents involving AI systems. The framework does not require measuring literacy levels for individual employees or obtaining formal AI literacy certifications.

The goal-setting phase emphasises prioritising concrete, measurable objectives based on risk levels. High-risk AI systems require particular attention, with the AI Act mandating that deployers ensure workers with appropriate expertise oversee such systems. The guide notes that different organisational roles require distinct knowledge and skills beyond existing technical capabilities. A developer might possess strong technical abilities but limited awareness of ethical implications, highlighting the need for multidisciplinary knowledge.

Organisations should establish centres of expertise or appoint dedicated specialists whom employees can consult about AI systems. The AP emphasises creating safe, open work cultures where staff feel encouraged to learn from each other's questions and mistakes. Survey data revealed that AI literacy often develops through bottom-up initiatives, with individual employees recognising its importance and bringing it to management's attention. However, the AP stresses that sustainable embedding requires top-down management guidance and board-level involvement.

The implementation phase addresses practical execution of AI literacy strategies. Board members must understand the importance of AI literacy so that it is properly embedded and budgeted for. As a rule, AI literacy approaches should be integrated into broader AI strategy or vision documents rather than standing alone. Training and awareness programmes form the foundation: raising awareness of the consequences of AI deployment and development provides a base for increasing employee knowledge and skills.

Transparency within organisations and externally about AI system usage enables employees and involved parties to adopt critical and proactive stances. Monitoring mechanisms ensure organisations can demonstrate AI literacy through documented progress. Although 54% of responding organisations reported AI literacy on their administrative agenda, the survey indicated that implementation varied significantly.

The evaluation phase recognises that AI literacy represents an ongoing process rather than a fixed endpoint. Structural evaluation requires monitoring and regular analysis of whether targets are being met, with results informing adjustments to current measures. AI literacy should become part of management reporting structures. As AI usage within organisations grows, corresponding increases in AI literacy maturity become necessary.
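The evaluation loop described here, in which targets are checked regularly and the results feed into adjustments, can be sketched in a few lines. This is a minimal illustration under stated assumptions: the `Target` structure, the example targets and the figures are hypothetical and are not taken from the guide.

```python
from dataclasses import dataclass


@dataclass
class Target:
    description: str
    goal: float      # target value, e.g. share of staff trained (0.0 to 1.0)
    measured: float  # latest measured value on the same scale


def unmet_targets(targets: list[Target]) -> list[Target]:
    """Return the targets whose measured value is still below the goal."""
    return [t for t in targets if t.measured < t.goal]


def management_report(targets: list[Target]) -> str:
    """Build a plain-text summary suitable for a periodic management report."""
    lines = []
    for t in targets:
        status = "met" if t.measured >= t.goal else "adjust measures"
        lines.append(f"{t.description}: {t.measured:.0%} of {t.goal:.0%} ({status})")
    return "\n".join(lines)


# Hypothetical targets and measurements for one evaluation round.
targets = [
    Target("Staff completing awareness training", goal=0.8, measured=0.6),
    Target("AI systems with a named owner", goal=1.0, measured=1.0),
]

print(management_report(targets))
```

Running the same check each reporting period, with updated measurements and revised goals, mirrors the idea that AI literacy is an ongoing process rather than a one-off exercise.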

Survey data from the April call for input revealed significant gaps in monitoring practices. Results showed 33% of organisations reported no monitoring of AI literacy development, 45% indicated partial monitoring, and 22% confirmed active monitoring systems. Measuring impact and knowledge levels remains under development, with organisations currently experimenting with baseline measurements and evaluation formats.

The guide emphasises that AI literacy obligations extend beyond high-risk systems to all AI deployments. The AP serves as coordinating supervisor for algorithms and AI and contributes to preparations for AI Act supervision. Policy, best practices and guidelines on AI literacy continue to be developed at European level, with the AP contributing to these efforts. A key requirement for responsible algorithm use is understanding the opportunities and impacts of such systems, regardless of whether they fall under the AI Act's high-risk classifications.

The AP conducted extensive stakeholder engagement before publishing this second guide. Following the April call for input, the authority organised a seminar titled "Working together on AI literacy" in June 2025. Insights from both initiatives informed the practical examples and clarifications included in the multiannual action plan outlined in the guide.

Looking ahead, the AP has designated AI literacy as a focus area for ongoing supervision. The authority plans to explore the status of AI literacy implementation among Dutch organisations over the coming months. In 2026, the AP will conduct a targeted survey among Dutch organisations to monitor AI literacy progress. The authority also announced plans to organise a second seminar on AI literacy, providing a platform for organisations to exchange knowledge and experiences.

The publication coincides with broader European regulatory developments affecting AI deployment. The European Commission released comprehensive guidelines on July 18, 2025, clarifying obligations for providers of general-purpose AI models. These guidelines addressed model classification criteria, provider identification, open-source exemptions and enforcement procedures, with compliance requirements entering application on August 2, 2025.

Denmark positioned itself as a regulatory leader by becoming the first EU member state to adopt national legislation implementing AI Act provisions. On May 8, 2025, the Danish Parliament passed comprehensive legislation establishing governance frameworks required for AI regulation enforcement. The Agency for Digital Government, Danish Data Protection Authority and Danish Court Administration serve as designated competent authorities under this framework.

The need for systematic AI literacy has gained recognition across technology sectors. Zapier announced on May 30, 2025, that all new employees must demonstrate AI fluency before joining the organisation. This policy represents one of the most comprehensive AI literacy requirements implemented by a major technology firm, positioning AI fluency as a fundamental competency equivalent to literacy or numeracy.

Research on AI adoption patterns reveals demographic variations affecting literacy needs. A German study published in August 2025 showed generative AI adoption varies sharply by age, gender and educational background. These findings suggest organisations must develop varied training approaches that meet workers at different technical familiarity levels rather than assuming uniform literacy.

The AP's approach reflects growing recognition that AI deployment without adequate literacy creates compliance and operational risks. With generative AI usage increasing in education and services sectors, organisations express concerns about employees using AI applications without sufficient oversight, frameworks or safeguards. The guide addresses this "fear of losing control" by providing structured approaches to building organisational capacity.

The Dutch authority's focus on AI literacy aligns with broader European priorities of establishing AI governance standards while maintaining technological competitiveness. Organisations providing or deploying AI systems must ensure individuals have appropriate skills, knowledge and understanding to deploy systems responsibly. This enables organisations to maximise benefits from AI systems while preserving fundamental rights and values.

The multiannual action plan outlined in the guide provides prerequisite foundations including managerial commitment, dedicated budget allocation, clear organisational ownership and responsibility, and periodic reporting and monitoring processes. These prerequisites support the iterative four-phase approach across identification, goal setting, implementation and evaluation stages.

Survey results highlighted challenges organisations face in maintaining AI literacy. Continuity and safeguarding are important but difficult tasks: only 22% of responding organisations reported actively monitoring development and progress, and methods for measuring impact and knowledge levels are still being worked out.

The AP notes that differentiation between functions, risk profiles and experience levels helps clarify knowledge expectations for individual employees and organisations overall. Organisations emphasise the need to integrate AI literacy into defined roles, decision-making processes and existing programmes such as cybersecurity awareness training or compliance frameworks.

The increasing use of AI applications, such as chatbots that automatically generate responses to student or client questions, drives the urgency for proper governance and control. The AP underlines that organisations must take responsibility for fostering and maintaining AI literacy, noting that the field is evolving rapidly while approaches to literacy are developing less quickly. The authority stresses that increasing AI use requires a corresponding increase in commitment to AI literacy.

Timeline

- January 2025: AP published first guide "Getting Started with AI Literacy" establishing a multiannual action plan

- April 2025: AP launched call for input seeking responses from Dutch organisations on AI literacy approaches

- May 8, 2025: Denmark adopted first EU national legislation implementing AI Act provisions

- May 30, 2025: Zapier announced requirement for AI fluency among all new hires

- June 2025: AP organised seminar "Working together on AI literacy" gathering stakeholder insights

- June 2025: German data protection authorities issued comprehensive AI development guidelines

- Mid-2025: European Commission provided questions and answers documentation on AI literacy

- July 18, 2025: European Commission released comprehensive AI Act guidelines for general-purpose AI models

- August 2, 2025: AI Act obligations for general-purpose AI models entered application

- August 5, 2025: Study published showing demographic variations in generative AI adoption

- October 23, 2025: AP published second guide "Building AI literacy" with implementation framework

- August 2026: Netherlands regulatory sandbox will launch providing supervised AI testing environments

- 2026: AP plans targeted survey among Dutch organisations to monitor AI literacy progress

Five Ws Summary

Who: The Autoriteit Persoonsgegevens, the Dutch data protection authority, published guidance affecting organisations providing or deploying AI systems, their staff, contractors, service providers and clients working with AI systems across the Netherlands and European Union.

What: A comprehensive second guide titled "Building AI literacy" establishing a four-phase implementation framework comprising identification, goal setting, implementation and evaluation stages, with practical examples clarifying the multiannual action plan for ensuring sufficient AI literacy among individuals operating or deploying AI systems.

When: Published October 23, 2025, following a call for input conducted in April 2025 and a stakeholder seminar held in June 2025, with AI Act obligations for general-purpose AI models entering application on August 2, 2025, and a follow-up survey planned for 2026.

Where: The Netherlands, where the AP acts as coordinating supervisor of algorithms and artificial intelligence, with implications for organisations operating throughout the European Union under the AI Act framework.

Why: Organisations must ensure AI literacy among staff as a legal obligation under the AI Act while addressing the reality that approaches to AI literacy are developing less rapidly than AI technology itself, with survey data showing many organisations remain in early implementation stages despite growing AI usage in sectors like education and services.