Facebook announced this week that it took down more than 1 million groups over the last year. The company also clarified that the same rules apply to all Facebook Groups, whether private or public.

According to Facebook, 12 million pieces of content in groups were removed last year for violating Facebook’s policies on hate speech, 87% of which Facebook says it found proactively. Facebook also removed 1.5 million pieces of content in groups for violating its policies on organized hate, 91% of which it says it found proactively.

Facebook is now passing responsibility for moderating content on to group admins.

Posts from members who have any Community Standards violations in a group will now require approval in that group for the next 30 days. This keeps their posts from being seen by others until an admin or moderator approves them.

If admins or moderators repeatedly approve posts that violate Facebook’s Community Standards, Facebook says it will delete the group.

Admins and moderators of groups taken down for policy violations will not be able to create any new groups for a period of time.

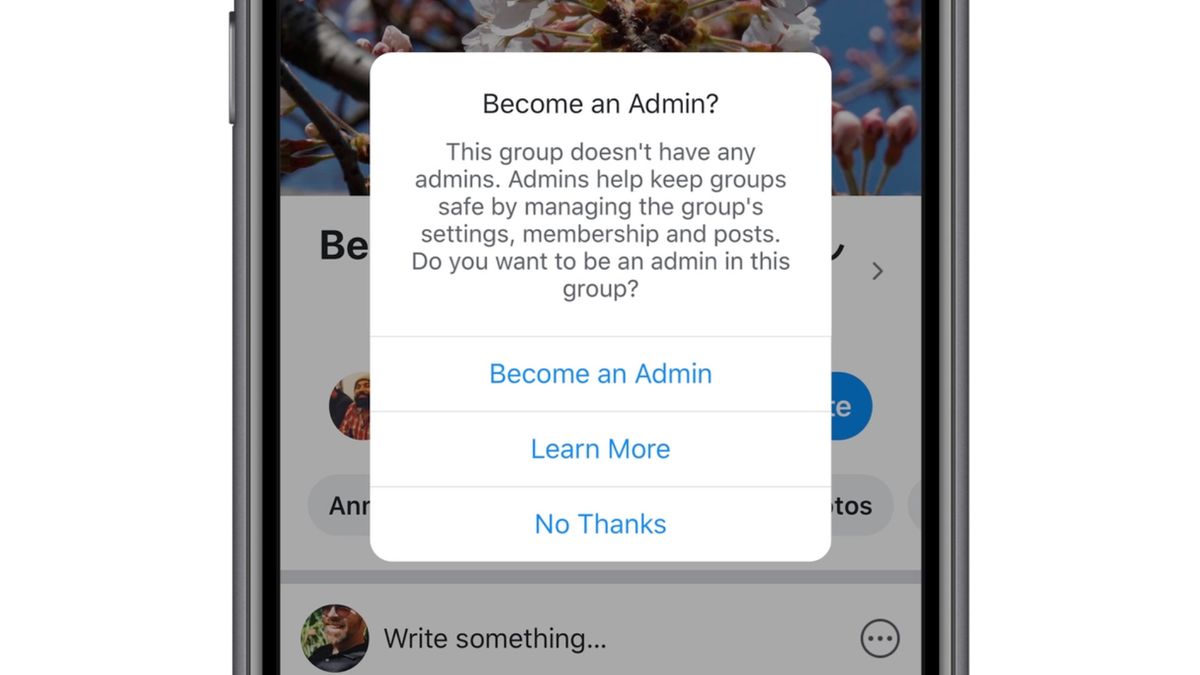

For groups without admins, Facebook is now suggesting admin roles to members who may be interested. Facebook says that if no one agrees to become an admin, the group will be archived.

Facebook also announced this week that groups that repeatedly share content rated false by fact-checkers won’t be recommended to other people on Facebook. All content from these groups is ranked lower in News Feed, and notifications are limited so that fewer members see the posts.

Group admins are also notified each time a piece of content rated false by fact-checkers is posted in their group, and they can see an overview of this in the Group Quality tool.