The Higher Regional Court of Cologne rejected an emergency injunction request on May 23, 2025, clearing the path for Meta to begin processing public user profile data from Facebook and Instagram for artificial intelligence training purposes starting May 27. The ruling represents a significant development in European data protection law, arriving just four days before Meta's scheduled implementation of its AI training program.

Get the PPC Land newsletter ✉️ for more like this

According to the court's press release, the 15th Civil Senate found no violation of either the General Data Protection Regulation (GDPR) or the Digital Markets Act (DMA) in Meta's planned data processing activities. The Consumer Association of North Rhine-Westphalia had sought to prevent Meta from using publicly available profile data without explicit user consent.

The court determined that Meta has a legitimate interest under Article 6(1)(f) GDPR in processing public profile data to develop AI systems. This legal basis permits processing without consent where the processing is necessary for the controller's legitimate interests and those interests are not overridden by the data subject's interests or fundamental rights and freedoms.

According to the ruling, Meta's approach involves processing exclusively publicly visible profile data that search engines can already index. The court noted that affected users retain multiple options to prevent their data from being used, including adjusting privacy settings to make profiles non-public or lodging formal objections through Meta's established procedures.

The decision should be read in light of its procedural context: it emerged from urgent proceedings with limited time for review. As is usual in such proceedings, the ruling is a preliminary assessment based on a summary examination rather than comprehensive fact-finding. The court emphasized that the parties remain free to pursue full proceedings on the merits; the current ruling, however, is final and cannot be appealed to the Federal Court of Justice under section 542(2) sentence 1 of the German Code of Civil Procedure.

The timing proves critical for Meta's broader European strategy. The company announced in April 2025 its intention to begin AI training using EU user data on May 27, after temporarily halting similar plans in June 2024 following initial legal challenges. Meta's decision to proceed came after months of engagement with European data protection authorities and implementation of additional safeguards.

Dr. Carlo Piltz, a partner at Piltz Legal who analyzed the ruling, noted the court's alignment with the Irish Data Protection Commission's position. According to Piltz's analysis, the Irish regulator, which serves as Meta's lead supervisory authority under GDPR's one-stop-shop mechanism, has not initiated enforcement actions against Meta's plans and has announced its intention to monitor the implementation.

The court's reasoning addressed several key technical and legal considerations that privacy advocates had raised. According to the press release, Meta demonstrated effective measures to mitigate privacy risks, including advance notification through apps and other channels where possible, exclusion of unique identifiers such as names, email addresses, or postal addresses, and implementation of objection mechanisms allowing users to prevent data processing.

The ruling specifically rejected claims that Meta's processing violates Article 5(2) of the Digital Markets Act, which restricts data combination across services. According to the court's preliminary assessment, Meta's AI training activities do not constitute prohibited "data combination" because the company does not merge data from different services or sources for individual users. The court noted the absence of relevant case law in this area and acknowledged that cooperation with the European Commission, as provided for in the legal framework, was not possible during emergency proceedings.

The decision addresses concerns about processing large volumes of data, including content from minors and sensitive data under Article 9 GDPR. The court found that Meta's implemented safeguards substantially mitigate potential privacy intrusions, particularly given that processing targets only publicly available content and provides clear opt-out mechanisms.

According to the court documentation, Meta announced its processing plans in 2024 and implemented notification procedures to inform users about the upcoming changes. The company established objection procedures allowing users to prevent data processing either by making their profiles private or submitting formal objections through designated forms.
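To make the safeguards described above more concrete, here is a minimal sketch of the selection rule they imply: keep only public profiles, skip users who have objected, and strip direct identifiers such as names, email addresses, and postal addresses. Everything here is hypothetical and chosen for illustration (`ProfileRecord`, `select_training_data`, and the field keys are invented names); it is not Meta's pipeline and says nothing about how Meta actually filters data.

```python
from dataclasses import dataclass, field

# Hypothetical field keys for direct identifiers; not Meta's actual schema.
DIRECT_IDENTIFIERS = {"name", "email", "postal_address"}


@dataclass
class ProfileRecord:
    """Hypothetical profile record used only for this illustration."""
    user_id: str
    is_public: bool
    attributes: dict[str, str] = field(default_factory=dict)


def select_training_data(records: list[ProfileRecord],
                         objected_user_ids: set[str]) -> list[dict[str, str]]:
    """Return de-identified attributes from public, non-objecting profiles."""
    selected = []
    for record in records:
        if not record.is_public:
            continue  # private profiles are out of scope
        if record.user_id in objected_user_ids:
            continue  # honour formal objections
        # Drop direct identifiers; the user_id itself is also not carried over.
        cleaned = {key: value for key, value in record.attributes.items()
                   if key not in DIRECT_IDENTIFIERS}
        selected.append(cleaned)
    return selected


# Usage example with made-up records.
example_records = [
    ProfileRecord("u1", True, {"name": "A. Example", "bio": "Cycling in Cologne"}),
    ProfileRecord("u2", False, {"bio": "Private profile"}),
    ProfileRecord("u3", True, {"email": "x@example.com", "bio": "Photography"}),
]
training_rows = select_training_data(example_records, objected_user_ids={"u3"})
# training_rows == [{"bio": "Cycling in Cologne"}]
```

Note what the sketch does not do: it cannot tell whether free text such as a bio contains special category data (political opinions, religious beliefs, health information), which is exactly the filtering problem privacy advocates raise.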

However, regulatory uncertainty persists among other authorities. According to Dr. Piltz's analysis, the Hamburg Data Protection Authority recently sent Meta a hearing letter, a possible first step toward the urgency procedure under Article 66 GDPR. That procedure allows a supervisory authority concerned, in exceptional circumstances where it sees an urgent need to protect data subjects' rights, to adopt provisional measures with legal effect in its own territory for no more than three months, bypassing the usual cooperation with the lead supervisory authority.

The broader context includes ongoing legal challenges from privacy advocacy organizations. None Of Your Business (noyb) sent Meta a formal cease and desist letter on May 14, 2025, threatening class action lawsuits. With approximately 400 million monthly active Meta users in Europe, damage claims of €500 or more per user could theoretically add up to €200 billion if such actions succeeded.
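The €200 billion figure is straightforward multiplication. As a rough check, assuming purely for illustration that every one of the roughly 400 million users brought a claim of exactly €500 (the actual assumptions behind noyb's estimate may differ):

```latex
\underbrace{400 \times 10^{6}}_{\text{users}} \times \underbrace{500\ \text{EUR}}_{\text{per-user claim}} = 2 \times 10^{11}\ \text{EUR} = 200\ \text{billion EUR}
```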

Privacy advocates have identified several technical challenges with Meta's approach. The company previously argued it cannot technically distinguish between EU and non-EU users in its social networks due to interconnected data points. This limitation raises questions about Meta's ability to properly implement user objections and separate special category data from regular personal information.

The processing of special category data presents particular complexity under Article 9 GDPR, which prohibits such processing unless one of its listed exceptions applies, most commonly the data subject's explicit consent. Meta's social networks contain vast amounts of sensitive information, including political opinions, religious beliefs, and health-related content. Privacy advocates doubt the company's ability to effectively filter such content from AI training datasets while maintaining model quality.

The case establishes important precedents for AI development in the European market. According to legal experts, the ruling may provide clarity for GDPR-compliant AI training practices using public user data, though a full merits case could still challenge these conclusions. The decision reflects broader tensions between privacy protection and technological innovation in Europe, where fragmented regulatory interpretations create uncertainty for companies developing AI technologies.

Meta's approach contrasts with practices of other major AI developers. While some companies have negotiated licensing agreements with content providers or implemented more restrictive data collection practices, Meta claims its approach follows December 2024 guidance from the European Data Protection Board and extensive consultation with Irish authorities.

The company argues that European AI models require training on local data to understand cultural nuances, language patterns, and regional context. According to Meta's statements, preventing such training could disadvantage European consumers and companies while limiting the region's competitiveness in global AI development.

The ruling's implications extend beyond Meta's specific case. Marketing professionals developing AI-powered tools and services face similar questions about appropriate legal bases for processing user-generated content. The decision suggests that legitimate interest may provide sufficient legal basis for AI training using publicly available data, provided companies implement appropriate safeguards and transparent objection mechanisms.

However, the preliminary nature of the ruling and ongoing regulatory uncertainty across European jurisdictions mean companies should proceed cautiously. Different data protection authorities may interpret GDPR requirements differently, particularly regarding special category data processing and the scope of legitimate interest claims.

The case also highlights evolving enforcement patterns across European data protection authorities. Recent statistics from the European Data Protection Board show that only 1.3% of GDPR cases resulted in monetary penalties between 2018 and 2023, though high-profile enforcement actions have resulted in significant fines for major technology companies.

The immediate practical impact allows Meta to proceed with its planned May 27 implementation while potentially facing additional legal challenges. The company must navigate varying regulatory positions across European jurisdictions while maintaining compliance with existing objection mechanisms and transparency requirements.

The decision represents a crucial test case for how European courts balance data protection rights against legitimate business interests in AI development. As artificial intelligence becomes increasingly central to digital services, the ruling provides initial guidance on permissible data usage patterns while leaving fundamental questions unresolved pending full legal proceedings.

Why this matters

This ruling establishes important precedents for how marketing professionals can legally develop and deploy AI technologies using publicly available user data. The decision suggests that companies may proceed with AI training using public social media content under legitimate interest provisions, provided they implement appropriate safeguards and transparent opt-out mechanisms.

For marketing technology development, the ruling indicates that explicit consent may not be required for processing publicly available data for AI training purposes. This could enable more efficient development of AI-powered advertising tools, customer segmentation systems, and content recommendation engines without complex consent management requirements.

However, the preliminary nature of the decision and ongoing regulatory uncertainty across European jurisdictions require careful navigation. Marketing professionals should monitor evolving enforcement patterns and ensure robust privacy safeguards when implementing AI systems that process personal data from social media platforms or other public sources.

The case underscores the importance of transparency and user control in AI development. Companies developing marketing AI tools should implement clear notification procedures and meaningful opt-out mechanisms to align with regulatory expectations and build consumer trust in AI-powered marketing technologies.

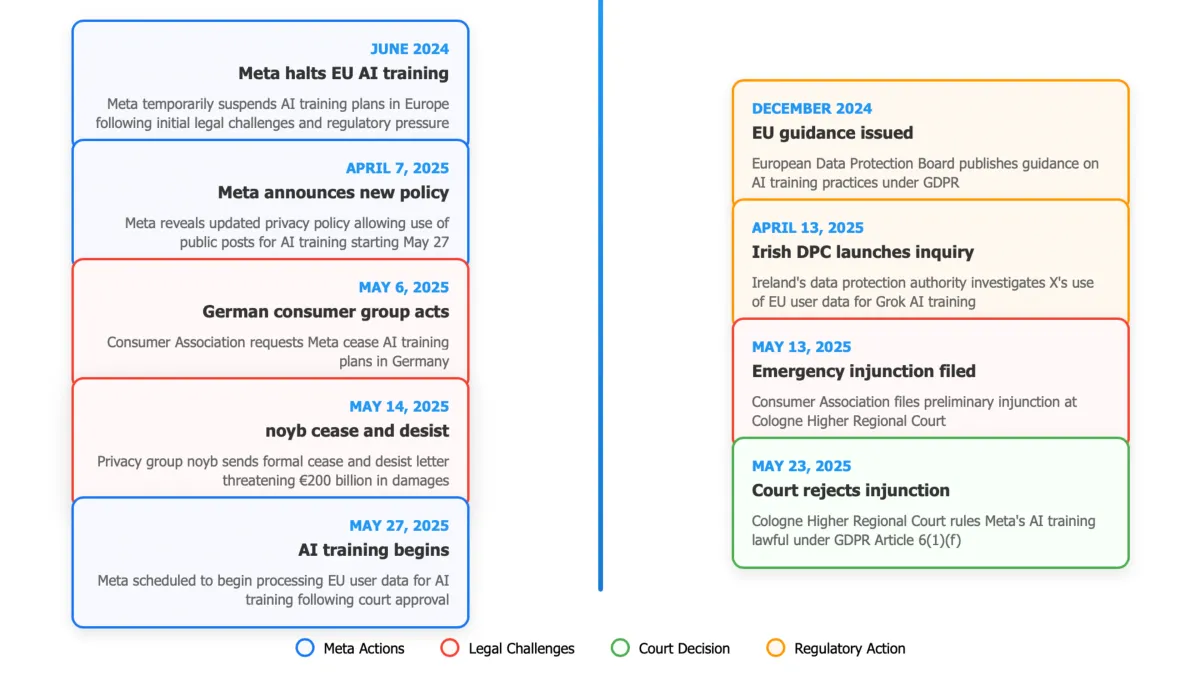

Timeline

June 2024: Meta temporarily halts AI training plans in EU following legal challenges

December 2024: European Data Protection Board issues guidance on AI training practices

April 7, 2025: Meta announces updated privacy policy allowing use of public posts for AI training (Meta to use public posts to train AI models, users can opt out)

April 13, 2025: Irish Data Protection Commission launches inquiry into X's use of EU user data for Grok AI training (Irish DPC launches Grok LLM training inquiry)

May 6, 2025: German Consumer Association requests Meta cease AI training plans

May 12, 2025: Consumer Association of North Rhine-Westphalia files emergency injunction request

May 13, 2025: German consumer organization files for a preliminary injunction at Cologne Higher Regional Court

May 14, 2025: Privacy group noyb sends formal cease and desist letter to Meta (Meta faces legal battle as noyb sends cease and desist over AI training)

May 22, 2025: Oral hearing held at Cologne Higher Regional Court with Hamburg Data Protection Authority participation

May 23, 2025: Cologne Higher Regional Court rejects injunction request

May 27, 2025: Meta's scheduled date to begin using EU personal data for AI training