Google AI Studio introduces vibe coding for rapid app development

Google AI Studio enables developers to create AI-powered applications through conversational prompts with automatic API integration and model wiring.

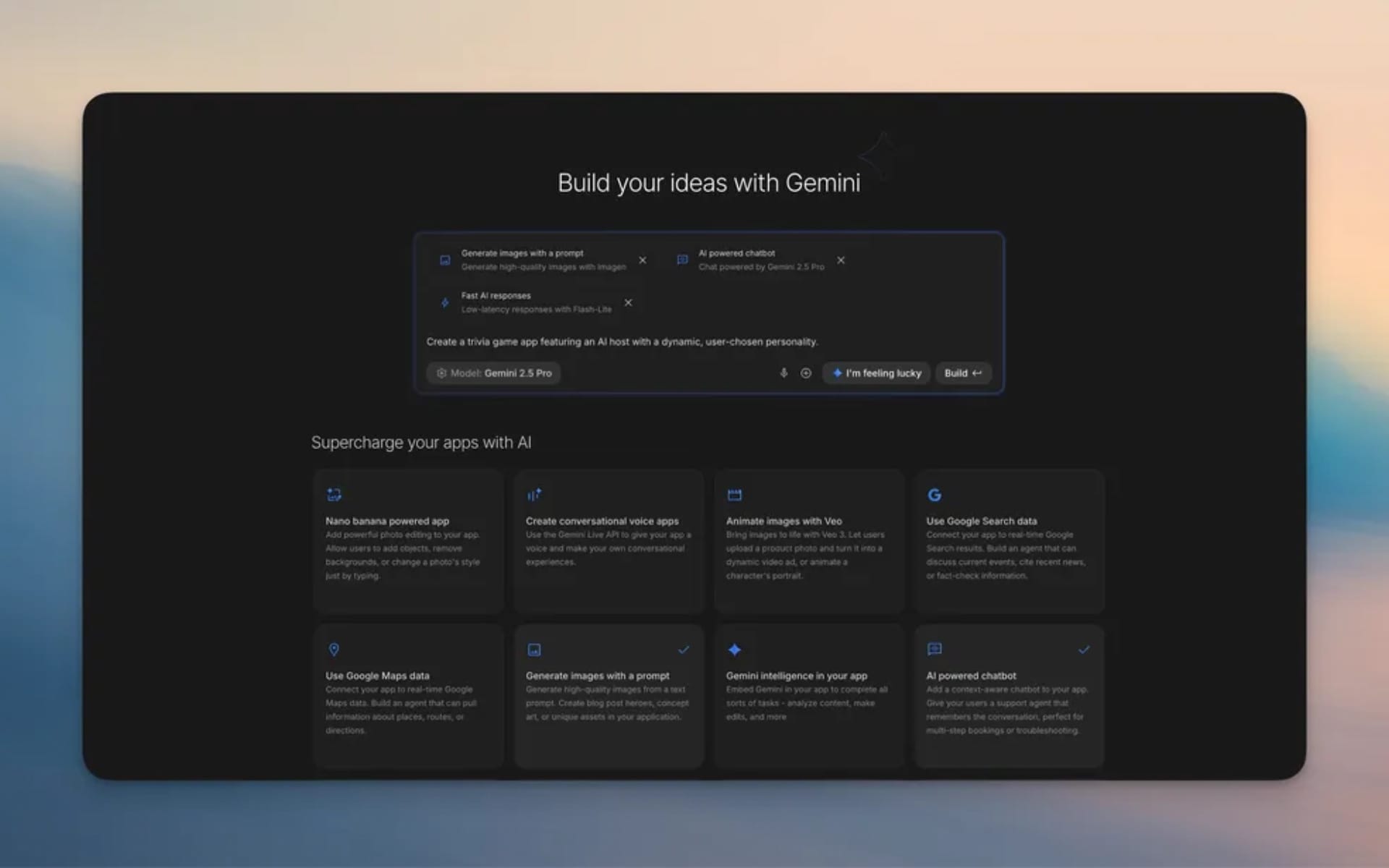

Google announced a comprehensive redesign of its AI Studio platform on October 26, 2025, introducing vibe coding functionality that transforms how developers build artificial intelligence-powered applications. According to Ammaar Reshi, Product and Design Lead for Google AI Studio, and Kat Kampf, Product Manager, the new experience enables users to move "from prompt to working AI app in minutes without you having to juggle with API keys, or figuring out how to tie models together."

The vibe coding implementation represents a departure from traditional development workflows that required manual configuration of multiple services and application programming interfaces. Developers previously needed to manage separate integrations for video generation through Veo, image editing capabilities via tools like Nano Banana, and search functionality through Google Search APIs. The new system consolidates these requirements into a single conversational interface powered by Google's Gemini models.

Subscribe PPC Land newsletter ✉️ for similar stories like this one. Receive the news every day in your inbox. Free of ads. 10 USD per year.

The technical architecture enables applications across diverse use cases through natural language instructions. A developer requesting a magic mirror application that transforms photos into fantastical imagery no longer needs to manually wire multiple APIs. AI Studio interprets the requirements, identifies necessary capabilities, and automatically configures the appropriate models and services. For users seeking creative inspiration, an "I'm Feeling Lucky" button generates project suggestions to initiate development.

Google redesigned the App Gallery as a visual library showcasing applications built with Gemini models. The gallery functions as both an inspiration source and a practical learning tool, displaying project previews alongside starter code that developers can examine and modify. Examples include a Dictation App for converting audio recordings into structured notes, a Video to Learning App that transforms YouTube content into interactive educational experiences, and a p5.js playground for generating interactive art through conversational commands.

Annotation Mode introduces visual editing capabilities that eliminate the need for detailed written descriptions of modifications. Developers can highlight interface elements and issue direct instructions such as "Make this button blue" or "animate the image in here from the left." This interaction model maintains creative workflow by reducing context switching between visual design and code editing.

The platform addresses a common development friction point through API key management improvements. According to the announcement, developers who exhaust their free quota can add personal API keys to continue working without interruption. The system automatically reverts to the free tier once it renews, preventing workflow disruptions during active development sessions.
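The fallback behavior described above can be illustrated with a minimal sketch. This is not Google's implementation: the `KeySelector` class, its fields, and the request-count quota unit are all hypothetical, chosen only to show the order of preference (free tier first, personal key when the quota runs out, free tier again after renewal).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class KeySelector:
    """Hypothetical helper: decides which credential a request should use."""

    free_quota_remaining: int           # requests left in the free tier (assumed unit)
    personal_key: Optional[str] = None  # user-supplied API key, if one was added

    def choose(self) -> str:
        # Prefer the free tier whenever it still has quota available.
        if self.free_quota_remaining > 0:
            self.free_quota_remaining -= 1
            return "free-tier"
        # Free quota exhausted: fall back to the personal key if present.
        if self.personal_key is not None:
            return "personal-key"
        raise RuntimeError("Free quota exhausted and no personal API key configured")

    def renew_free_quota(self, amount: int) -> None:
        # After renewal, later calls to choose() pick the free tier again.
        self.free_quota_remaining = amount
```

In this sketch a session keeps running when the free quota reaches zero as long as a personal key is present, and it switches back to the free tier after `renew_free_quota` is called.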

Google emphasizes the accessibility implications of these changes. The company positions vibe coding as enabling both experienced developers to build applications more efficiently and individuals without coding experience to transform ideas into functional prototypes. This democratization aligns with broader trends in AI-powered development tools that reduce technical barriers to application creation.

The App Gallery demonstrates practical applications across multiple categories. Maps Planner enables day trip planning with automatic Google Maps visualization. MCP Maps Basic answers geographical queries with instant visual results grounded through Model Context Protocol integration. Flashcard Maker generates study materials from simple topic descriptions. Video Analyzer enables conversational interaction with video content for summarization, object detection, and text extraction.

Technical implementation maintains existing authentication systems while streamlining the development process. The Brainstorming Loading Screen displays context-aware project ideas generated by Gemini during application build processes, transforming wait time into ideation opportunities. This approach mirrors Google's strategy of embedding AI capabilities throughout its product ecosystem to enhance user productivity.

The platform's multimodal capabilities leverage Gemini's native training architecture. As detailed in Google's July 2025 technical discussions, Gemini processes text, images, video, and audio through unified token representations. This foundation enables vibe coding to understand complex application requirements spanning multiple data types without requiring explicit format specifications from developers.

Marketing professionals may find particular value in the rapid prototyping capabilities. The ability to generate applications combining visual content, data analysis, and interactive elements addresses common campaign development challenges. Teams can create custom tools for client presentations, data visualization dashboards, or interactive content experiences without extensive development resources.

The announcement follows Google's pattern of expanding AI accessibility through simplified interfaces for complex technical capabilities. Similar to how the Analytics MCP server enabled natural language queries for data analysis, vibe coding removes technical barriers to application development while maintaining sophisticated underlying functionality.

Google provides a YouTube playlist containing eight tutorial videos demonstrating vibe coding features. The videos cover topics including Google Maps grounding integration, voice-based coding, improved API key management, annotation mode usage, and gallery exploration. Tutorial lengths range from 1 minute 10 seconds to 4 minutes 41 seconds, designed for quick feature comprehension.

The implementation builds upon Google's existing AI infrastructure developed for Product Studio and Asset Studio. Product Studio launched in 2023 with AI-powered image generation for merchants, later expanding to 30 countries with capabilities including scene generation and background editing. Asset Studio beta reached global accounts in August 2025, consolidating creative functions into integrated workflows.

The vibe coding approach contrasts with traditional integrated development environments that require explicit code writing, dependency management, and API configuration. By handling these technical requirements through conversational interfaces, Google aims to accelerate prototyping cycles and enable exploration of application concepts without upfront infrastructure investment.

AI Studio's redesign represents another component in Google's comprehensive AI advertising and development suite announced at Think Week 2025. The company continues expanding AI integration across advertising creation, data analysis, and application development platforms, consistently emphasizing reduced manual effort and enhanced creative capabilities.

For developers accustomed to granular control over application architecture, the abstraction layer introduced by vibe coding may present both opportunities and constraints. The system optimizes for rapid iteration and prototype development rather than fine-tuned performance optimization or custom infrastructure requirements. Organizations with specific technical requirements may still need traditional development approaches for production applications.

The platform's accessibility extends to educational contexts. The Video to Learning App example demonstrates conversion of educational content into interactive applications, while the Flashcard Maker addresses study material creation. These applications reflect Google's broader emphasis on AI-powered educational tools that transform static content into interactive experiences.

Google has not specified separate pricing structures for API usage beyond the initial free quota; the company's standard Gemini pricing applies once free tier allocations are exhausted. Developers building applications for production deployment will need to account for Gemini API costs, which vary based on model selection and usage patterns.

The announcement timing coincides with increasing competition in AI-powered development tools. Multiple platforms now offer conversational interfaces for application creation, reflecting industry-wide movement toward reducing technical barriers in software development. Google's integration advantage stems from its comprehensive model ecosystem spanning text, image, video, and audio processing within a unified platform.

Timeline

- November 1, 2023: Google rolls out Product Studio AI tools for merchants in United States

- December 6, 2023: Google introduces Gemini AI as multimodal foundation model

- March 26, 2024: Google expands Product Studio to Australia and Canada

- May 22, 2024: Google announces AI tools for retailers at Marketing Live

- October 9, 2024: Google launches AI-powered video generation in Product Studio

- December 10, 2024: Product Studio expands to 15 additional markets

- July 3, 2025: Google reveals Gemini multimodal advances in technical podcast

- July 23, 2025: Google Analytics launches MCP server for AI-powered data conversations

- July 30, 2025: Google expands AI search with canvas and video features

- August 18, 2025: Google URL context tool supports PDF analysis

- August 19, 2025: Google Docs adds text-to-speech with Gemini integration

- August 27, 2025: Asset Studio beta reaches global accounts

- September 5, 2025: Google launches EmbeddingGemma for on-device AI

- September 10, 2025: Google unveils comprehensive AI advertising suite at Think Week 2025

- October 7, 2025: Google releases MCP server for Ads API

- October 26, 2025: Google announces vibe coding in AI Studio

Summary

Who: Google AI Studio development team, led by Product and Design Lead Ammaar Reshi and Product Manager Kat Kampf, announced the vibe coding feature targeting developers, marketers, and individuals without coding experience.

What: A redesigned development experience enabling creation of AI-powered applications through conversational prompts, featuring automatic API integration, visual editing through Annotation Mode, an expanded App Gallery, contextual brainstorming suggestions, and seamless API key management for uninterrupted development.

When: October 26, 2025, with supporting YouTube tutorials published between October 22 and October 26, 2025.

Where: Google AI Studio platform, accessible through the web interface at aistudio.google.com, with applications deployable across Google's model ecosystem including Gemini, Veo, and Google Search integration.

Why: The redesign addresses technical barriers in AI application development by eliminating manual API configuration, enabling rapid prototyping, and democratizing access to advanced AI capabilities for users without extensive coding knowledge while maintaining Google's competitive position in AI-powered development tools.