On September 17, 2025, Google announced a partnership with StopNCII.org to strengthen protections against non-consensual intimate imagery (NCII) appearing in search results. The collaboration marks a technical shift toward proactive content detection using hash-based identification systems.

The partnership involves South West Grid for Learning (SWGfL), a UK-based charity that operates the StopNCII program. According to Griffin Hunt, Product Manager for Search at Google, the company will begin using StopNCII's hash database over the coming months to identify and remove content that violates its NCII policy. Hashes function as digital fingerprints—unique identifiers created from images and videos that allow platforms to detect matching content without storing the actual imagery.

Subscribe PPC Land newsletter ✉️ for similar stories like this one. Receive the news every day in your inbox. Free of ads. 10 USD per year.

This technical approach addresses a persistent challenge in content moderation. While Google has maintained removal request systems and implemented ranking adjustments to reduce NCII visibility, the scale of the open web has meant that individuals affected by this form of abuse have faced significant burdens in combating its spread. The hash-sharing mechanism offers a more scalable solution by enabling automated detection across participating platforms.

StopNCII.org provides adults with tools to create hashes of their intimate imagery. These digital fingerprints are then shared with participating companies, which can use them to detect corresponding content on their platforms. The system operates without requiring companies to view or store the actual images, addressing privacy concerns while enabling cross-platform detection.
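
The flow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not StopNCII's or Google's implementation: it uses a plain SHA-256 digest as the fingerprint, which only matches byte-identical files, and the names `make_fingerprint`, `shared_hashes`, `submit`, and `is_known` are hypothetical.

```python
import hashlib

# Hypothetical shared database: it holds fingerprints only, never imagery.
shared_hashes: set[str] = set()


def make_fingerprint(image_bytes: bytes) -> str:
    """Compute a fingerprint locally; only this digest would be shared."""
    return hashlib.sha256(image_bytes).hexdigest()


def submit(image_bytes: bytes) -> None:
    """User side: hash the file on the device and share the digest."""
    shared_hashes.add(make_fingerprint(image_bytes))


def is_known(candidate_bytes: bytes) -> bool:
    """Platform side: hash encountered content and look the digest up."""
    return make_fingerprint(candidate_bytes) in shared_hashes
```

Because the platform compares digests rather than pictures, it can recognize a match without ever receiving the original file. A cryptographic digest like this one breaks as soon as the file is resized or re-encoded, which is why production systems generally rely on perceptual hashes instead.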

The announcement comes as Google hosted the NCII London Summit on the same day, bringing together policymakers, industry leaders, and civil society organizations. Hunt stated that the summit aims to continue dialogue about empowering survivors and coordinating efforts to combat NCII across the internet.

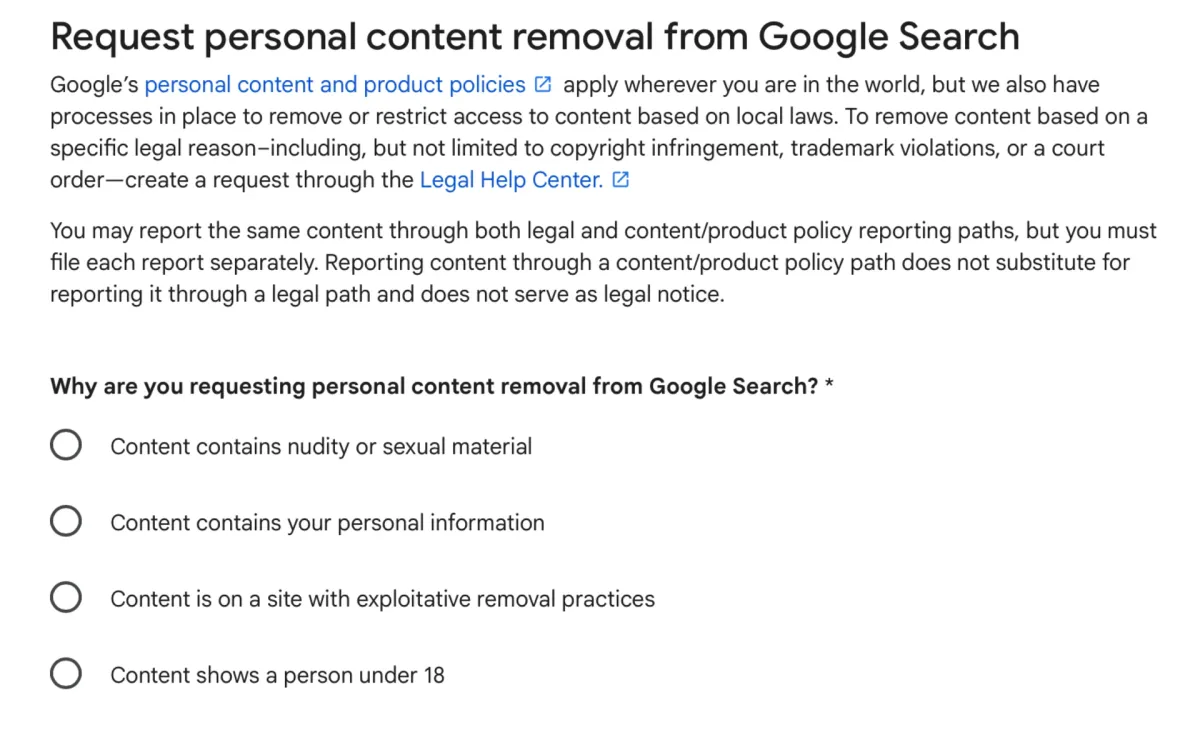

Google's existing NCII protections include removal request mechanisms and search ranking modifications designed to reduce the visibility of such content. The company's help center documentation details three categories of sexual content eligible for removal: real intimate imagery (whether consensual or not, as long as it isn't commercialized), fabricated sexual content created through photo editing or artificial intelligence, and search results that associate individuals with sexual material without apparent reason.

The removal request process requires the URLs of the specific pages containing the content. Google reviews only these submitted URLs for removal from search results. According to the help center documentation, when Google approves a removal request, the outcome can be either full removal, where the page no longer appears in search results at all, or partial removal, where the page is omitted from results for queries containing the individual's name or identifier but may still appear for other search terms.
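
The difference between full and partial removal can be expressed as a small filtering rule. The sketch below is illustrative only and does not reflect how Google Search is built; the `Removal` type, the `filter_results` function, and the example URLs are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Removal:
    url: str
    # An empty set means full removal. Otherwise the URL is hidden only
    # for queries that contain one of these names or identifiers.
    identifiers: frozenset[str] = frozenset()


def filter_results(query: str, results: list[str], removals: list[Removal]) -> list[str]:
    """Drop URLs that an approved removal hides for this particular query."""
    q = query.lower()
    hidden = set()
    for removal in removals:
        is_full = not removal.identifiers
        names_person = any(name.lower() in q for name in removal.identifiers)
        if is_full or names_person:
            hidden.add(removal.url)
    return [url for url in results if url not in hidden]
```

With a full removal on one URL and a partial removal on another, a query naming the individual hides both, while an unrelated query hides only the fully removed URL.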

For fabricated sexual content, often referred to as deepfakes, Google's policy covers material where the individual is identifiable in images, audio, or video; the content falsely depicts them nude or in sexually explicit situations; and the distribution occurred without consent. This category has grown in significance as AI-generated imagery has become more sophisticated and accessible.

The technical infrastructure supporting these protections processes removal requests through multiple pathways. Policy-based removals apply globally under Google's content policies, while legal removal requests may be available depending on local laws in specific countries or regions. The company maintains a separate process for copyright-related concerns under the Digital Millennium Copyright Act.

Google's approach to duplicate content varies by category. For sexual imagery removal requests, the company automatically attempts to identify and remove duplicates from search results as part of its standard procedure. Individuals submitting requests can opt out of duplicate removal if they prefer to submit each instance separately.

Public interest considerations factor into removal decisions. According to the help center documentation, Google typically removes content that violates its policies, but may retain content if it relates to newsworthy events or matters of public concern. This balancing act between individual privacy and public interest reflects broader tensions in content moderation practices across digital platforms.

The limitations of search result removal remain significant. Even when Google removes content from its search results, the material typically remains on the original hosting website. Access remains possible through direct links, social media posts, or alternative search engines. The help center documentation explicitly notes this constraint and directs users to contact website owners for complete removal.

The partnership with StopNCII represents an industry-wide coordination effort. The hash database system only functions effectively when multiple platforms participate, creating network effects that amplify protection as more companies adopt the technology. This model has precedents in other content moderation contexts, particularly in combating child sexual abuse material, where hash-sharing among platforms has become standard practice.

For digital marketers and the advertising industry, this development carries implications for content policy enforcement and brand safety measures. Platforms have increasingly tightened content policies around sensitive material, affecting how advertising networks approach content categorization and placement restrictions. The proactive detection mechanisms being deployed for NCII may inform similar approaches to other content categories that pose brand safety concerns.

Hash-based detection requires far less computation than analyzing images with machine learning models. Comparing a fingerprint against a list of known hashes is fast and avoids compute-intensive visual analysis. This efficiency allows platforms to scale detection across billions of search queries and indexed pages.
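
To show why the comparison step is cheap, here is a toy perceptual hash and matcher in Python. It is a sketch under stated assumptions: the "average hash" below is a simple textbook technique chosen for illustration, not the algorithm used by StopNCII or Google, and the function names, the 8x8 grid, and the `max_distance` threshold are hypothetical.

```python
def average_hash(pixels: list[list[int]]) -> int:
    """Toy perceptual hash: one bit per pixel, set when the pixel is above the mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of differing bits: one XOR and a bit count."""
    return bin(a ^ b).count("1")


def matches(candidate: int, known: set[int], max_distance: int = 4) -> bool:
    """Linear scan for clarity; a large deployment would need an index."""
    return any(hamming_distance(candidate, k) <= max_distance for k in known)


# 8x8 grayscale grids standing in for heavily downscaled images.
original = [[(r * 8 + c) * 4 for c in range(8)] for r in range(8)]
brightened = [[min(255, p + 3) for p in row] for row in original]
unrelated = [[255 if (r + c) % 2 == 0 else 0 for c in range(8)] for r in range(8)]

known = {average_hash(original)}
```

Here `matches(average_hash(brightened), known)` is true because a uniform brightness change leaves the above-or-below-mean pattern intact, while the unrelated checkerboard grid differs in half of its bits and is rejected. Each comparison is a single XOR and bit count on a 64-bit integer, with no image decoding or model inference involved.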

StopNCII.org's model places control with individuals who create hashes of their own imagery through the platform's tools. This approach differs from reactive reporting systems by establishing a protective layer before unauthorized distribution reaches large audiences. However, it requires individuals to be aware of the service and take proactive steps to generate hashes, which may limit effectiveness for those unaware of the available tools.

The September 17 announcement did not specify the technical timeline for integration or provide metrics on the current scale of NCII appearing in Google search results. The company stated that implementation will begin "over the next few months" without committing to specific milestones or completion targets.

Industry observers have noted that while hash-based detection offers advantages for known content, it cannot identify new instances of NCII that have not been previously hashed. This limitation means that reactive reporting systems remain necessary alongside proactive hash matching. The combination of both approaches aims to reduce both the initial spread and ongoing visibility of such content.
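
The way the two approaches complement each other can be sketched as a simple triage step. This is a hypothetical illustration, not a description of any platform's pipeline; the `triage` function, the placeholder fingerprints, and the `review_queue` are assumptions made for the example.

```python
from collections import deque

# Placeholder fingerprints standing in for a shared hash database.
known_hashes: set[str] = {"a1b2", "c3d4"}
# Reported items wait here for human review.
review_queue: deque[str] = deque()


def triage(url: str, fingerprint: str, reported: bool = False) -> str:
    """Proactive path first; fall back to the reactive report path."""
    if fingerprint in known_hashes:
        return "remove"          # previously hashed content is caught automatically
    if reported:
        review_queue.append(url)
        return "review"          # unhashed content still needs a report and a reviewer
    return "no_action"           # unhashed and unreported content goes undetected
```

The last branch is the gap the paragraph describes: content that has never been hashed passes the proactive check and is only addressed once someone reports it.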

The partnership announcement coincided with other Google product news. On September 16, 2025, the company announced an experimental Google app for Windows in Labs, and on September 17, the same day as the NCII announcement, it detailed updates to its Discover feature for creators and publishers. These parallel announcements reflect ongoing product development across multiple search and discovery surfaces.

For individuals affected by NCII, the multi-step removal process remains intact. Users must identify specific URLs, submit removal requests with supporting documentation including screenshots, and await review. The help center documentation emphasizes that screenshots should isolate relevant individuals without including other parties or extraneous information. The process explicitly prohibits submitting screenshots containing imagery of individuals under 18 years old, directing such cases to separate reporting mechanisms for child sexual abuse material.

Google's distinction between policy violations and legal violations creates two separate pathways for content removal requests. Policy-based removals apply globally under the company's content policies, while legal removals address jurisdiction-specific laws. This two-track system reflects the challenge of operating a global platform subject to varying legal frameworks across different countries and regions.

The NCII London Summit convened stakeholders from multiple sectors to address coordination challenges in combating intimate image abuse. Such cross-sector collaboration has become increasingly common as platforms, civil society organizations, and government entities recognize that isolated efforts by individual companies cannot fully address the problem. The summit agenda was not detailed in the announcement, but similar convenings typically address technical standards, policy harmonization, and victim support resources.

Timeline

- September 17, 2025: Google announces partnership with StopNCII.org to use digital fingerprints for proactive NCII detection and removal

- September 17, 2025: NCII London Summit convenes policymakers, industry leaders, and civil society to discuss coordinating anti-NCII efforts

- Coming months (from September 2025): Google to begin implementing StopNCII hash database integration for automated content detection

- Related: Google's ongoing search quality updates have increasingly focused on content policy enforcement

- Related: Platform content moderation practices continue evolving across major search and social media services

Summary

Who: Google partnered with StopNCII.org, a program operated by South West Grid for Learning (SWGfL), a UK-based charity. Griffin Hunt, Product Manager for Search at Google, announced the partnership.

What: Google will use digital fingerprints (hashes) created through the StopNCII platform to proactively identify and remove non-consensual intimate imagery from search results. The system allows adults to create unique identifiers of their intimate imagery, which participating companies use to detect and remove matching content.

When: The partnership was announced on September 17, 2025, with implementation beginning over the following months. Google also hosted the NCII London Summit on the same date.

Where: The changes apply to Google Search results globally. StopNCII.org is operated by a UK-based charity, though the service and partnership have international reach.

Why: The partnership addresses the scale challenges of combating non-consensual intimate imagery on the open web. While Google has maintained removal request systems and ranking improvements, feedback from survivors and advocates indicated that the burden on affected individuals remained too high. The hash-based system creates a more scalable, proactive approach to detection and removal across participating platforms.