Google has modified the refresh schedule for JSON objects containing Google crawler and fetcher IP ranges from weekly to daily. The change, implemented yesterday, addresses feedback from large network operators and aims to enhance security verification procedures for website administrators concerned about potential impersonation of Google crawlers.

Web crawlers, commonly known as bots, play a crucial role in how search engines discover and index online content. However, the ubiquity of these automated systems has created opportunities for malicious actors to impersonate legitimate crawlers such as Googlebot. This update provides technical administrators with more current information to verify whether a web crawler accessing their server is genuinely from Google.

According to the Google Search Central documentation, "You can verify if a web crawler accessing your server really is a Google crawler, such as Googlebot. This is useful if you're concerned that spammers or other troublemakers are accessing your site while claiming to be Googlebot."

The announcement was shared on LinkedIn by Gary Illyes, an Analyst at Google, who stated: "Based on feedback from large network operators, we changed the refresh time of the JSON objects containing the Google crawler and fetcher IP ranges from weekly to daily. If you're consuming these files, you may need to update your libraries if you care about changes to these ranges."

How Google Crawlers Operate

Google's crawlers fall into three distinct categories, each with specific functions and behaviors regarding robots.txt rules:

- Common crawlers: These are the standard crawlers used for Google's products, such as Googlebot. They always respect robots.txt rules for automatic crawls and use IP addresses within specific ranges. They can be identified through the reverse DNS mask "crawl-***-***-***-***.googlebot.com" or "geo-crawl-***-***-***-***.geo.googlebot.com".

- Special-case crawlers: These crawlers, such as AdsBot, perform targeted functions for specific Google products. They operate under an agreement between the crawled site and the Google product about the crawling process. They may or may not adhere to robots.txt rules and typically resolve to "rate-limited-proxy-***-***-***-***.google.com".

- User-triggered fetchers: These tools, such as Google Site Verifier, fetch content at a user's explicit request. Because the fetch results from a direct user action, these fetchers ignore robots.txt rules. Fetchers controlled directly by Google originate from IPs that resolve to a google.com hostname, while those running on Google Cloud (GCP) resolve to gae.googleusercontent.com hostnames.
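The reverse DNS masks listed above lend themselves to simple pattern matching. The following is a minimal sketch, not an official tool: the function name `classify_hostname` and the category labels are hypothetical, and it assumes each `***` placeholder stands for a run of one to three digits. Generic google.com hostnames used by Google-controlled fetchers are deliberately left unmatched because the mask alone does not distinguish them.

```python
import re
from typing import Optional

# Assumption: each "***" in the documented masks is a 1-3 digit number.
_OCTETS = r"\d{1,3}-\d{1,3}-\d{1,3}-\d{1,3}"

# (category label, compiled pattern); labels are illustrative, not official.
CRAWLER_PATTERNS = [
    ("common", re.compile(rf"crawl-{_OCTETS}\.googlebot\.com")),
    ("common", re.compile(rf"geo-crawl-{_OCTETS}\.geo\.googlebot\.com")),
    ("special-case", re.compile(rf"rate-limited-proxy-{_OCTETS}\.google\.com")),
    ("user-triggered", re.compile(r"[\w.-]+\.gae\.googleusercontent\.com")),
]


def classify_hostname(hostname: str) -> Optional[str]:
    """Map a reverse DNS hostname to a crawler category, or None if unknown."""
    # PTR records usually end with a trailing dot; strip it before matching.
    name = hostname.rstrip(".").lower()
    for category, pattern in CRAWLER_PATTERNS:
        # fullmatch rejects names that merely contain a Google-looking prefix.
        if pattern.fullmatch(name):
            return category
    return None
```

A hostname match on its own is not proof of origin, since anyone can set an arbitrary PTR record for an IP address they control. It is only meaningful after the forward DNS confirmation described under Manual Verification.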

Verification Methods

The Google Search Central documentation outlines two primary methods website administrators can employ to verify the legitimacy of crawlers claiming to be from Google:

Manual Verification

For one-off checks, command-line tools are sufficient in most cases. The process involves four steps:

1. Perform a reverse DNS lookup on the IP address recorded in server logs using the host command

2. Confirm that the returned domain name belongs to googlebot.com, google.com, or googleusercontent.com

3. Execute a forward DNS lookup on the domain name obtained in step 1

4. Verify that the resulting IP address matches the original IP from the server logs

The documentation provides practical examples of this process:

Example 1:

$ host 66.249.66.1

1.66.249.66.in-addr.arpa domain name pointer crawl-66-249-66-1.googlebot.com.

$ host crawl-66-249-66-1.googlebot.com

crawl-66-249-66-1.googlebot.com has address 66.249.66.1

Example 2:

$ host 35.247.243.240

240.243.247.35.in-addr.arpa domain name pointer geo-crawl-35-247-243-240.geo.googlebot.com.

$ host geo-crawl-35-247-243-240.geo.googlebot.com

geo-crawl-35-247-243-240.geo.googlebot.com has address 35.247.243.240

Example 3:

$ host 66.249.90.77

77.90.249.66.in-addr.arpa domain name pointer rate-limited-proxy-66-249-90-77.google.com.

$ host rate-limited-proxy-66-249-90-77.google.com

rate-limited-proxy-66-249-90-77.google.com has address 66.249.90.77
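The same reverse-then-forward check can be scripted. The sketch below uses Python's standard `socket` module and is one possible approach, not code from Google's documentation. The function names are hypothetical, and the resolver functions are passed in as parameters so the logic can be exercised without live DNS.

```python
import ipaddress
import socket
from typing import Callable, List

# Domains named in the verification steps; the leading dot prevents
# look-alikes such as "evilgooglebot.com" from passing the suffix check.
GOOGLE_SUFFIXES = (".googlebot.com", ".google.com", ".googleusercontent.com")


def reverse_lookup(ip: str) -> str:
    """Return the PTR hostname for an IP address (reverse DNS)."""
    return socket.gethostbyaddr(ip)[0]


def forward_lookup(hostname: str) -> List[str]:
    """Return every IPv4/IPv6 address the hostname resolves to (forward DNS)."""
    return [info[4][0] for info in socket.getaddrinfo(hostname, None)]


def is_google_crawler(
    ip: str,
    reverse: Callable[[str], str] = reverse_lookup,
    forward: Callable[[str], List[str]] = forward_lookup,
) -> bool:
    """Forward-confirmed reverse DNS check for a single IP address."""
    try:
        target = ipaddress.ip_address(ip)
        hostname = reverse(ip).rstrip(".").lower()
        if not hostname.endswith(GOOGLE_SUFFIXES):
            return False
        # The forward lookup must lead back to the IP seen in the logs.
        return any(ipaddress.ip_address(a) == target for a in forward(hostname))
    except (OSError, ValueError):
        # Failed lookups or unparsable addresses are treated as unverified.
        return False
```

Calling `is_google_crawler("66.249.66.1")` with the default resolvers issues live DNS queries, so the result depends on the network and on current DNS records.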

Automated Verification

For websites experiencing high volumes of crawler traffic, implementing an automated verification system provides a more scalable solution. This approach involves comparing the IP address of the connecting crawler against published lists of legitimate Google crawler IP ranges.

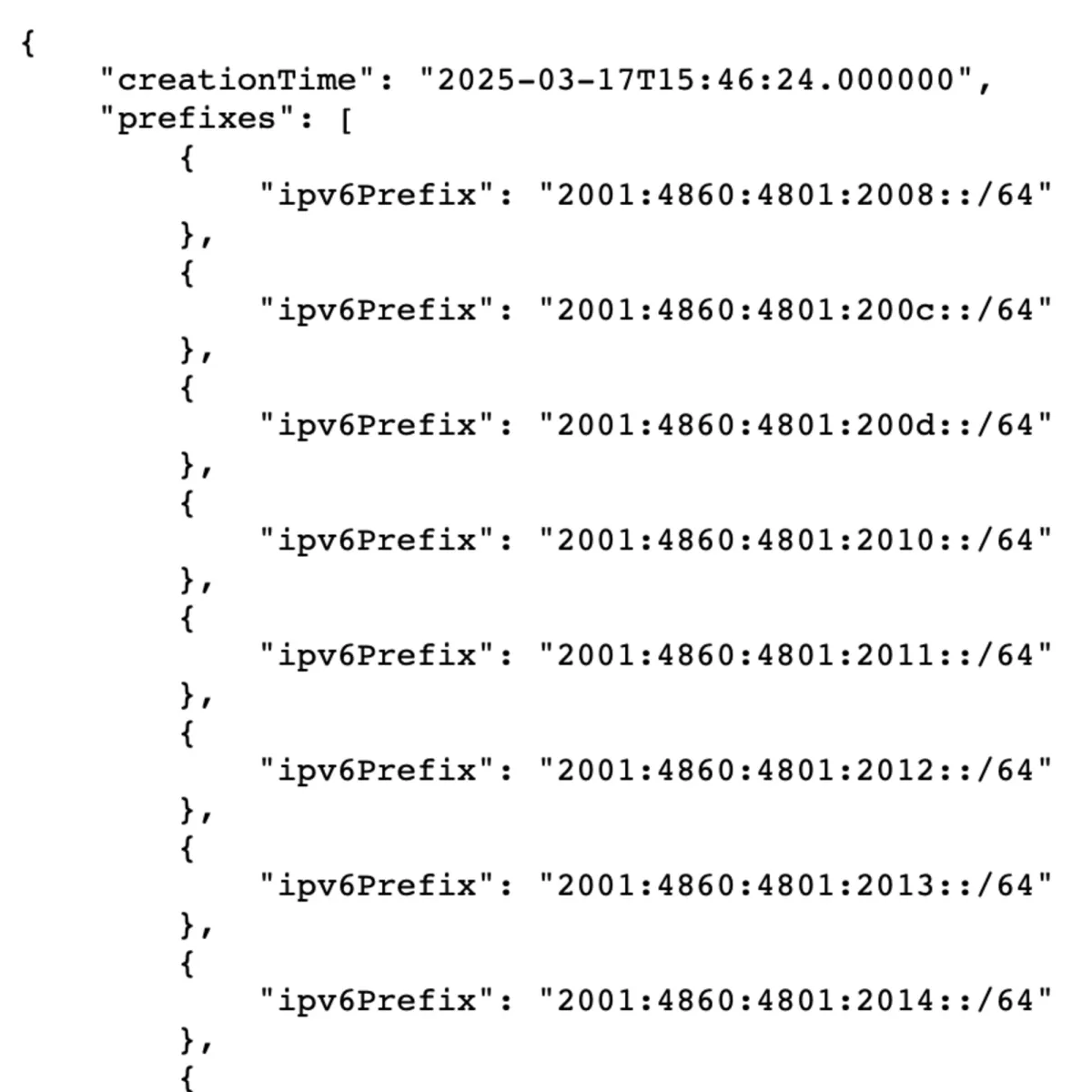

The documentation directs administrators to four JSON files containing the IP ranges for different crawler types:

- Common crawlers like Googlebot (googlebot.json)

- Special crawlers like AdsBot (specialcrawlers.json)

- User-triggered fetchers controlled by users (user-triggered-fetchers.json)

- User-triggered fetchers controlled by Google (user-triggered-fetchers-google.json)

For other Google IP addresses that might access a website, administrators can check against a general list of Google IP addresses. The IP addresses in these JSON files are in CIDR format, a compact notation for a range of addresses; for example, an IPv4 /24 prefix covers 256 addresses.
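Matching an IP address against CIDR ranges needs only Python's standard `ipaddress` module. The sketch below assumes each JSON file holds a top-level `prefixes` array whose entries carry either an `ipv4Prefix` or an `ipv6Prefix` key; that layout should be confirmed against the files themselves. The function names are hypothetical.

```python
import ipaddress
import json
from typing import List, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]


def parse_prefixes(document: str) -> List[Network]:
    """Extract CIDR networks from a ranges file (assumed JSON layout)."""
    data = json.loads(document)
    networks: List[Network] = []
    for entry in data.get("prefixes", []):
        # Assumption: each entry has exactly one of these two keys.
        cidr = entry.get("ipv4Prefix") or entry.get("ipv6Prefix")
        if cidr:
            networks.append(ipaddress.ip_network(cidr))
    return networks


def ip_in_ranges(ip: str, networks: List[Network]) -> bool:
    """Return True if the address falls inside any of the networks."""
    address = ipaddress.ip_address(ip)
    # An IPv4 address is never "in" an IPv6 network and vice versa,
    # so mixed lists can be checked in a single pass.
    return any(address in network for network in networks)
```

For high-traffic sites a linear scan over a few hundred prefixes is usually fast enough, though a radix tree or a sorted interval lookup would scale better if the list grows.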

Community Reaction and Implementation Considerations

The LinkedIn announcement by Gary Illyes drew considerable engagement from SEO professionals and technical website administrators. The post received 218 reactions and 31 reposts, an indication of the interest in this update within the technical SEO community.

Several comments from industry professionals highlighted implementation considerations. Harry H., an SEO & Product Manager, noted: "The JSON shows current IP addresses, updating daily. So it's up to us to store historic IP addresses if we want to analyze historic bot activity moving forward."

Ryan Siddle, founder of Merj, confirmed they have been "storing Googlebot, Bingbot, OpenAI, etc. Some of them go back quite a few years," and shared a link to their IP tracking tool.

Another practical question came from Hiten S., a B2B SaaS Growth Marketing professional, who asked: "If we were to use reverse DNS, we should store the IP list via URL in Variables instead of manually copy pasting it to the script? That way we can always have the updated list?"

These discussions underscore the technical implications of the updated refresh schedule for organizations that actively monitor and verify crawler traffic.
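Both points raised in the comments, fetching the list from its URL rather than pasting it into a script and keeping historic copies, can be handled by a small scheduled job. The sketch below is one possible approach: the URL is an assumption that should be checked against the Google Search Central documentation, the `creationTime` field is assumed to be present in the file, and `archive_snapshot` is a hypothetical helper name.

```python
import json
from pathlib import Path
from typing import Callable
from urllib.request import urlopen

# Assumed location of the Googlebot ranges file; verify before relying on it.
GOOGLEBOT_URL = "https://developers.google.com/static/search/apis/ipranges/googlebot.json"


def fetch_text(url: str) -> str:
    """Download a URL and return its body as text."""
    with urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8")


def archive_snapshot(
    archive_dir: Path,
    url: str = GOOGLEBOT_URL,
    fetch: Callable[[str], str] = fetch_text,
) -> Path:
    """Save the current ranges file once per distinct creationTime value."""
    body = fetch(url)
    # Assumption: the file carries a top-level "creationTime" string.
    stamp = json.loads(body).get("creationTime", "unknown")
    # Replace characters that are awkward in file names (":" and ".").
    safe_stamp = "".join(c if c.isalnum() else "-" for c in stamp)
    archive_dir.mkdir(parents=True, exist_ok=True)
    target = archive_dir / f"googlebot-{safe_stamp}.json"
    if not target.exists():
        # Only new versions are written, so running this more often than
        # the file changes does not create duplicates.
        target.write_text(body, encoding="utf-8")
    return target
```

Run once a day (for example from cron), this keeps a dated history that later log analysis can match against the ranges that were valid at the time of each request.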

Security Implications

This update addresses a significant security concern for website owners. Malicious bots impersonating Googlebot might attempt to circumvent security measures or crawl restricted content by claiming to be legitimate search engine crawlers. By providing daily updates to the IP ranges, Google enables more accurate verification, reducing the window of opportunity for potential attackers to exploit outdated information.

For large network operators especially, this change facilitates more accurate traffic management and security policies. Organizations that implement web application firewalls or intrusion detection systems can now update their rules daily to better distinguish between legitimate Google crawlers and potential impostors.
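As an illustration of how a daily rule update might be automated, the sketch below renders a list of CIDR prefixes as an nginx `geo` block that sets a variable to 1 for matching clients. The choice of nginx, the variable name, and the function name `render_nginx_geo` are assumptions made for the example; other firewalls and WAFs need their own output format.

```python
import ipaddress
from typing import Iterable


def render_nginx_geo(cidrs: Iterable[str], variable: str = "is_google_crawler") -> str:
    """Render CIDR prefixes as an nginx geo block (illustrative format)."""
    # Parsing validates each prefix; the set removes duplicates.
    networks = {ipaddress.ip_network(cidr) for cidr in cidrs}
    # Sort IPv4 before IPv6 so the output is stable between daily runs.
    ordered = sorted(networks, key=lambda n: (n.version, n))
    lines = [f"geo ${variable} {{", "    default 0;"]
    lines += [f"    {network} 1;" for network in ordered]
    lines.append("}")
    return "\n".join(lines) + "\n"
```

Stable ordering means a daily job can diff the new output against the deployed file and reload the server only when the ranges have actually changed.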

The move also reflects Google's ongoing commitment to transparency in its crawling operations. By maintaining publicly accessible lists of crawler IP ranges and providing detailed verification procedures, the company enables website administrators to implement appropriate security measures without inadvertently blocking legitimate crawler traffic.