Google announced significant upgrades to its anti-scam capabilities across Chrome, Search, and Android on Thursday, May 8, 2025. The company has integrated AI technologies, including its on-device Gemini Nano large language model, to combat increasingly sophisticated online threats. The announcement coincided with the release of Google's "Fighting Scams in Search Report," which details the company's expanded efforts to protect users from deceptive online activities that cost consumers over $1 trillion globally in 2024, according to the Global Anti-Scam Alliance.

According to Jasika Bawa, Group Product Manager for Chrome, and Phiroze Parakh, Senior Director of Engineering for Search, Google's AI-powered scam detection systems have enabled the company to identify and block "hundreds of millions of scammy results every day." Improved AI classifiers now let Google detect 20 times as many scam pages as before, significantly reducing users' exposure to harmful websites designed to steal sensitive information.

The "Fighting Scams in Search Report" reveals how Google has built sophisticated AI-powered defenses that now keep Search "99% spam-free," with safeguards blocking "billions of potentially scammy results every day." The report emphasizes that this is an ongoing battle, as "bad actors constantly adapt and evolve," requiring continuous investment in novel approaches to stay ahead of emerging threats.

Google's AI scam prevention strategy operates across three major product areas: Search, Chrome, and Android. Each implementation leverages artificial intelligence differently, targeting specific types of scams common to each platform.

Search: Blocking scams before users see them

In Search, Google employs AI to analyze vast quantities of web text, identify coordinated scam campaigns, and detect emerging threats before they can reach users. These advancements in AI classifiers and large language models enable Google to identify "subtle linguistic patterns and thematic connections that might indicate coordinated scam campaigns or emerging fraudulent narratives," according to the report.

The company's systems can now detect "interconnected networks of deceptive websites that might appear legitimate in isolation," providing a deeper understanding of the scam ecosystem and allowing for more targeted detection mechanisms.
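Google has not published how these networks are identified. One common technique for spotting this kind of coordination is to link sites that share infrastructure signals, such as a phone number or a page template, and then read off the connected groups. The sketch below does that with a union-find structure; the site names, signal labels, and the `find_site_clusters` function are all hypothetical.

```python
from collections import defaultdict


def find_site_clusters(site_signals: dict[str, set[str]]) -> list[set[str]]:
    """Group sites that share at least one signal, transitively (hypothetical helper)."""
    parent = {site: site for site in site_signals}

    def find(x: str) -> str:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees shallow
            x = parent[x]
        return x

    def union(a: str, b: str) -> None:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    # Remember the first site seen with each signal; merge later sites into it.
    first_site_for_signal: dict[str, str] = {}
    for site, signals in site_signals.items():
        for signal in signals:
            if signal in first_site_for_signal:
                union(first_site_for_signal[signal], site)
            else:
                first_site_for_signal[signal] = site

    clusters: dict[str, set[str]] = defaultdict(set)
    for site in site_signals:
        clusters[find(site)].add(site)
    return list(clusters.values())


sites = {
    "airline-help-a.example": {"tel:+1-800-555-0100", "template:t1"},
    "airline-help-b.example": {"tel:+1-800-555-0100"},
    "visa-fast.example": {"template:t1"},
    "bakery.example": {"tel:+1-212-555-0199"},
}
print(sorted(len(c) for c in find_site_clusters(sites)))  # [1, 3]
```

Each site looks unremarkable alone, but the shared phone number and template tie three of them into one group, which is the kind of "interconnected network" the report describes.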

One notable success highlighted in the report involves fake airline customer service scams, where bad actors impersonated legitimate support providers to target travelers seeking assistance. After observing this trend, Google "swiftly implemented dedicated protections" that cut these scams in Search by more than 80%, greatly reducing the risk of users calling scammy phone numbers.

Similarly, in 2024, Google observed a rise in sites mimicking official government resources like visa application services. New protections deployed that year decreased these impersonation scams by more than 70%.

Another significant advancement involves Google's use of large language models to scale protections across languages. The report notes that "scammers operate around the world and in every language," making multilingual detection crucial. Google's LLMs allow it to identify a scam in one language and then protect users searching in multiple other languages, "limiting user exposure to scams globally."

Chrome: On-device AI detection with Gemini Nano

Perhaps the most technically significant announcement involves Chrome's Enhanced Protection mode. This security feature, which already provides twice the protection against phishing and scams compared to Chrome's Standard Protection mode, has been upgraded with on-device AI capabilities powered by Gemini Nano.

The integration of Gemini Nano, Google's on-device large language model (LLM), provides "an additional layer of defense against online scams." By running directly on the user's device rather than in the cloud, this system delivers several technical advantages:

- It provides instant analysis of potentially dangerous websites

- It can detect previously unknown scam patterns

- It protects users' privacy by keeping sensitive browsing data on the device

According to Google, "Gemini Nano's LLM is perfect for this use because of its ability to distill the varied, complex nature of websites, helping us adapt to new scam tactics more quickly."

The initial implementation of this technology focuses on remote tech support scams, which Google identifies as "one of the biggest online threats facing users today." These scams typically involve fake error messages or warnings that prompt users to call fraudulent support numbers, leading to financial theft or unauthorized access to devices. Google notes that tech support scams "often employ alarming pop-up warnings mimicking legitimate security alerts," and may use "full-screen takeovers and disable keyboard and mouse input to create a sense of crisis."

The detection process follows a specific sequence. When a user navigates to a potentially dangerous page, certain triggers characteristic of tech support scams (such as use of the keyboard lock API) will cause Chrome to evaluate the page using Gemini Nano. Chrome provides the LLM with the page content and queries it to extract security signals, including the intent of the page. This information is sent to Safe Browsing for a final verdict, which may then display a warning interstitial if the page is deemed likely to be a scam.
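The sequence above can be sketched as a small pipeline: trigger check, on-device signal extraction, server-side verdict, and warning. This is a minimal illustration under stated assumptions. Chrome's real trigger list, the prompt sent to Gemini Nano, and the Safe Browsing exchange are not public in this detail, so every function name here is hypothetical, and a keyword heuristic stands in for the model so the example runs.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    SAFE = "safe"
    SCAM = "scam"


@dataclass
class PageSignals:
    """Hypothetical security signals the on-device model is asked to extract."""
    intent: str              # e.g. "tech_support_scam" or "benign"
    asks_user_to_call: bool


# The source names only the keyboard lock API as a trigger; any others are unknown.
SUSPICIOUS_TRIGGERS = {"keyboard_lock_api"}


def extract_signals_on_device(page_text: str) -> PageSignals:
    """Stand-in for querying Gemini Nano with the page content (keyword heuristic)."""
    text = page_text.lower()
    alarming = any(word in text for word in ("virus", "infected", "locked"))
    asks_to_call = "call" in text
    intent = "tech_support_scam" if alarming and asks_to_call else "benign"
    return PageSignals(intent=intent, asks_user_to_call=asks_to_call)


def safe_browsing_verdict(signals: PageSignals) -> Verdict:
    """Stand-in for the final Safe Browsing decision on the extracted signals."""
    return Verdict.SCAM if signals.intent == "tech_support_scam" else Verdict.SAFE


def evaluate_navigation(page_text: str, observed_triggers: set[str]) -> str:
    # Only pages that fire a scam-characteristic trigger reach the model at all.
    if not observed_triggers & SUSPICIOUS_TRIGGERS:
        return "allow"
    signals = extract_signals_on_device(page_text)
    verdict = safe_browsing_verdict(signals)
    return "show_warning_interstitial" if verdict is Verdict.SCAM else "allow"


scam_page = "WARNING: Your PC is infected! Call support now at 1-800-555-0100."
print(evaluate_navigation(scam_page, {"keyboard_lock_api"}))  # show_warning_interstitial
print(evaluate_navigation(scam_page, set()))                  # allow
```

Gating on the trigger first is what keeps the model "triggered sparingly": ordinary pages never reach the extraction step.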

In a LinkedIn post following the announcement, Badr Salmi, Senior Product Manager at Google, provided additional technical context about the implementation. "Gemini Nano operates directly on the device, delivering real-time insights into potentially risky websites," Salmi wrote. "This proactive approach enhances our ability to shield users from emerging scams, even those we haven't encountered before."

The on-device approach represents a significant advancement in browser security, as traditional protection systems typically rely on databases of known malicious sites. By leveraging a language model's ability to understand website content and context, Chrome can now identify suspicious patterns even on previously unseen websites. This is particularly valuable since, according to Google, "the average malicious site exists for less than 10 minutes," making rapid detection critical.
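The timing argument can be made concrete with a little arithmetic. The sketch assumes a blocklist entry only starts protecting users after a fixed listing delay (detection plus distribution). The 30-minute and 2-minute delays below are hypothetical; only the under-10-minute site lifetime comes from Google.

```python
def blocklist_coverage(site_lifetime_min: float, listing_delay_min: float) -> float:
    """Fraction of a malicious site's lifetime during which a blocklist entry protects users."""
    if site_lifetime_min <= 0:
        return 0.0
    protected_min = max(0.0, site_lifetime_min - listing_delay_min)
    return protected_min / site_lifetime_min


print(blocklist_coverage(10, 30))  # 0.0
print(blocklist_coverage(10, 2))   # 0.8
print(blocklist_coverage(10, 0))   # 1.0 (no listing delay, as with a check at page load)
```

Under these assumptions, a list that takes longer to update than a site lives never protects anyone, which is the gap a content-based check at page load is meant to close.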

Google emphasizes that this implementation prioritizes both privacy and performance. The LLM is "only triggered sparingly and run locally on the device," with careful resource management to avoid interrupting browser activity. The system includes "throttling and quota enforcement mechanisms to limit GPU usage" while maintaining protection.
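Google has not described its throttling mechanism in detail. A minimal sketch of the general idea, assuming a fixed per-window quota plus a minimum spacing between calls; both limits and the `LlmInvocationLimiter` class are hypothetical.

```python
import time
from typing import Callable, Optional


class LlmInvocationLimiter:
    """Hypothetical throttle: at most `max_calls` model runs per `window_s` seconds,
    and no two runs closer together than `min_interval_s` seconds."""

    def __init__(self, max_calls: int, window_s: float, min_interval_s: float,
                 clock: Callable[[], float] = time.monotonic):
        self.max_calls = max_calls
        self.window_s = window_s
        self.min_interval_s = min_interval_s
        self.clock = clock
        self.window_start = clock()
        self.calls_in_window = 0
        self.last_call: Optional[float] = None

    def try_acquire(self) -> bool:
        now = self.clock()
        # Start a fresh quota window once the current one has elapsed.
        if now - self.window_start >= self.window_s:
            self.window_start = now
            self.calls_in_window = 0
        if self.calls_in_window >= self.max_calls:
            return False  # quota exhausted: skip the model run
        if self.last_call is not None and now - self.last_call < self.min_interval_s:
            return False  # too soon after the previous run
        self.calls_in_window += 1
        self.last_call = now
        return True


fake_now = [0.0]
limiter = LlmInvocationLimiter(max_calls=2, window_s=60, min_interval_s=5,
                               clock=lambda: fake_now[0])
results = []
for t in [0, 1, 6, 12, 61]:
    fake_now[0] = t
    results.append(limiter.try_acquire())
print(results)  # [True, False, True, False, True]
```

Injecting the clock keeps the example deterministic; a real browser would presumably also weigh device load, which this sketch ignores.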

This feature builds on the success of Enhanced Protection mode, which Google announced in February 2025 is now used by more than 1 billion Chrome users, making them twice as safe from phishing and other scams compared to Standard Protection mode.

Google plans to extend this protection to additional scam types, including package tracking scams and unpaid toll scams, and to bring it to Chrome on Android later this year. The announcement did not give a more specific timeline.

Android: Notification and messaging protections

The third component of Google's anti-scam initiative focuses on Android devices, addressing threats that arrive via notifications, calls, and text messages.

To combat notification-based scams, Google is "launching new AI-powered warnings for Chrome on Android." According to Hannah Buonomo and Sarah Krakowiak Criel from Chrome Security, the company has "received reports of notifications diverting you to download suspicious software, tricking you into sharing personal information or asking you to make purchases on potentially fraudulent online store fronts."

When Chrome's on-device machine learning model flags a potentially malicious notification, users will see the name of the site, a warning message, and options to either unsubscribe or view the flagged content. If users believe the warning was displayed incorrectly, they can choose to always allow notifications from that site in the future.
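The user-facing flow described above amounts to a small decision function. This sketch assumes a simple in-memory settings object; `NotificationSettings`, `handle_notification`, and the choice strings are hypothetical names, not Chrome internals.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class NotificationSettings:
    """Hypothetical per-profile state for site notification permissions."""
    subscribed: set[str] = field(default_factory=set)
    always_allow: set[str] = field(default_factory=set)


def handle_notification(origin: str, flagged: bool, settings: NotificationSettings,
                        user_choice: Optional[str] = None) -> str:
    """Decide what the user sees for one incoming notification."""
    if origin not in settings.subscribed:
        return "dropped"
    # Unflagged notifications, or sites the user has chosen to trust, display normally.
    if not flagged or origin in settings.always_allow:
        return "show_notification"
    if user_choice == "unsubscribe":
        settings.subscribed.discard(origin)
        return "unsubscribed"
    if user_choice == "always_allow":
        settings.always_allow.add(origin)
        return "show_notification"
    if user_choice == "show":
        return "show_notification"
    # No choice yet: show the site name with a warning instead of the content.
    return f"warning from {origin}"


settings = NotificationSettings(subscribed={"https://deals.example"})
print(handle_notification("https://deals.example", True, settings))  # warning from https://deals.example
handle_notification("https://deals.example", True, settings, "always_allow")
print(handle_notification("https://deals.example", True, settings))  # show_notification
```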

The technical implementation is privacy-focused. Chrome uses a local, on-device machine learning model to analyze notification content, including the title, body, and action button texts. Notifications remain end-to-end encrypted, with analysis performed on-device to protect user privacy. The model was trained using synthetic data generated by the Gemini large language model and evaluated against real notifications classified by human experts.
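The paragraph above names the model's inputs (title, body, action button texts) and its evaluation against expert-labeled notifications. A minimal sketch of both steps, assuming a toy keyword classifier in place of Google's model, which was trained on Gemini-generated synthetic data; the cue phrases, sample notifications, and labels below are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Notification:
    title: str
    body: str
    action_buttons: list[str]


def model_input(n: Notification) -> str:
    """Concatenate the three fields the source says the model analyzes."""
    return " | ".join([n.title, n.body, *n.action_buttons])


def toy_classifier(text: str) -> bool:
    """Hypothetical stand-in for the on-device model: True means 'flag as suspicious'."""
    cues = ("virus detected", "claim your prize", "verify your account")
    return any(cue in text.lower() for cue in cues)


def precision_recall(preds: list[bool], labels: list[bool]) -> tuple[float, float]:
    """Compare model flags with human-expert labels."""
    tp = sum(p and l for p, l in zip(preds, labels))
    fp = sum(p and not l for p, l in zip(preds, labels))
    fn = sum(l and not p for p, l in zip(preds, labels))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


samples = [
    (Notification("Virus detected!", "Your device is at risk", ["Clean now"]), True),
    (Notification("Order shipped", "Your parcel is on the way", ["Track"]), False),
    (Notification("Congratulations", "Claim your prize today", ["Claim"]), True),
    (Notification("Security notice", "Unusual sign-in, reset your password", ["Reset"]), True),
]
preds = [toy_classifier(model_input(n)) for n, _ in samples]
labels = [label for _, label in samples]
print(precision_recall(preds, labels))  # (1.0, 0.6666666666666666)
```

The missed fourth notification shows why evaluating on real, human-labeled data matters: a model tuned on synthetic examples can still miss phrasings it never saw.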

This feature complements other notification protections in Chrome, including automatic revocation of notification permissions from abusive sites (visible in Chrome Safety Check) and one-tap unsubscribe for Android notifications.

"Scams are commonly being initiated through phone calls and text messages that appear harmless at first, but then evolve into dangerous situations," Google noted in its announcement. To address this threat vector, the company has implemented "on-device AI-powered Scam Detection in Google Messages and Phone by Google."

These features, introduced on March 4, 2025, target "conversational scams" that often begin innocently but gradually manipulate victims into sharing sensitive data or transferring funds. According to Google's research, more than half of Americans reported receiving at least one scam call per day in 2024.

For text messages, the Scam Detection feature in Google Messages uses on-device AI to detect suspicious patterns in SMS, MMS, and RCS messages, issuing real-time warnings as a conversation develops, even when the first messages looked harmless. When the system detects a potential scam, users receive a warning with options to dismiss it or to report and block the sender.
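Because these scams escalate gradually, detection has to re-evaluate the whole conversation on each new message rather than judge the first one in isolation. A minimal sketch of that idea, assuming hypothetical keyword cues and a cumulative threshold in place of Google's on-device model; `ConversationMonitor` and the threshold of 2 are illustrative. It also applies the non-contacts-only default that Google describes for Messages.

```python
from typing import Optional

# Hypothetical keyword cues standing in for the on-device model.
RISK_CUES = ("gift card", "wire transfer", "crypto", "investment", "urgent")


class ConversationMonitor:
    """Hypothetical per-conversation scorer, re-evaluated on every new message."""

    def __init__(self, sender: str, contacts: set[str], threshold: int = 2):
        # On by default, but only for conversations with non-contacts.
        self.enabled = sender not in contacts
        self.threshold = threshold
        self.risk = 0
        self.warned = False

    def on_message(self, text: str) -> Optional[str]:
        if not self.enabled or self.warned:
            return None
        lowered = text.lower()
        # Risk accumulates across the conversation, so a slow build-up still trips it.
        self.risk += sum(cue in lowered for cue in RISK_CUES)
        if self.risk >= self.threshold:
            self.warned = True
            return "Possible scam: [Dismiss] [Report and block]"
        return None


monitor = ConversationMonitor("+1-555-0123", contacts={"+1-555-0199"})
chat = [
    "Hi, is this Alex? Sorry, wrong number!",
    "No worries, nice to chat. I work in investment.",
    "There is an urgent opportunity, buy crypto today.",
]
print([monitor.on_message(m) for m in chat])
# [None, None, 'Possible scam: [Dismiss] [Report and block]']
```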

For phone calls, Google uses on-device AI models to analyze conversations in real time and warn users of potential scams. If a caller asks for payment via gift cards to complete a delivery, for example, the system alerts the user with audio and haptic notifications while displaying a warning.

In both cases, these features prioritize privacy. For messages, all processing remains on-device, with Scam Detection enabled by default but only for conversations with non-contacts. For calls, audio is processed ephemerally with no conversation audio or transcription recorded or stored, and the feature is off by default to give users control.
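The call flow in the two paragraphs above can be sketched as a streaming check over a short, in-memory window of recognized speech that is discarded after use. Everything here is an assumption for illustration: the speech-to-text chunks, the gift-card phrase standing in for Google's on-device model, the 30-word window, and the `CallScamDetector` class itself.

```python
from collections import deque
from typing import Optional

# Hypothetical red-flag phrase standing in for the on-device call model.
PAYMENT_RED_FLAGS = ("gift card",)


class CallScamDetector:
    def __init__(self, enabled: bool = False, window_words: int = 30):
        # Off by default, mirroring the Phone by Google setting described above.
        self.enabled = enabled
        # Only the most recent words are kept, in memory; older words fall off.
        self.window: deque[str] = deque(maxlen=window_words)

    def on_speech_chunk(self, chunk: str) -> Optional[list[str]]:
        if not self.enabled:
            return None
        self.window.extend(chunk.lower().split())
        recent = " ".join(self.window)
        if any(flag in recent for flag in PAYMENT_RED_FLAGS):
            self.window.clear()
            return ["audio_alert", "haptic_alert", "on_screen_warning"]
        return None

    def end_call(self) -> None:
        self.window.clear()  # nothing from the call is written anywhere


detector = CallScamDetector(enabled=True)
chunks = [
    "Hello, this is the delivery company.",
    "To release your parcel, pay with gift",
    "cards from any store.",
]
print([detector.on_speech_chunk(c) for c in chunks])
# [None, None, ['audio_alert', 'haptic_alert', 'on_screen_warning']]
```

Joining the window before matching lets a phrase split across two recognition chunks still be caught.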

According to a third-party evaluation by Leviathan Security Group in February 2025, Android smartphones—led by the Pixel 9 Pro—scored highest for built-in security features and anti-fraud efficacy compared to other major smartphone platforms.

Technical implementation

The technical implementation details of Google's AI scam prevention systems have drawn attention from security experts and industry professionals. The use of on-device AI for security purposes represents a growing trend in the industry, balancing performance requirements with privacy considerations.

However, some technical questions remain unanswered. In response to Salmi's LinkedIn post, Shawn Wilson, a Unix Dev*Ops Engineer, asked for evidence supporting Google's claim that the LLM enables "even better protections against scams." Wilson questioned whether Google has quantifiable data to support the effectiveness of its AI-powered approach compared to traditional methods.

Arjun S. raised a different question, asking whether Gemini Nano would be made available to other Android applications beyond Google's own products. Together, these questions highlight industry interest in the broader applicability of on-device AI for security purposes, as well as the need for transparent evaluation metrics when implementing new protection technologies.

Despite these questions, the integration of AI-powered protections across Google's product ecosystem represents a significant advancement in the company's security strategy. By leveraging artificial intelligence at multiple levels—from server-side analysis in Search to on-device detection in Chrome and Android—Google aims to create a comprehensive defense system against increasingly sophisticated online threats.

Impact for users and the digital ecosystem

For everyday internet users, Google's enhanced scam protection measures could significantly reduce exposure to common online threats. Remote tech support scams alone cost consumers millions of dollars annually, according to industry reports. By blocking these scams at the browser level, Google potentially prevents significant financial and personal data loss.

The notification protection features address another growing concern—the misuse of browser notification systems for spam and scam delivery. As websites have increasingly adopted push notifications as an engagement channel, bad actors have exploited this system to deliver misleading or fraudulent content directly to users' devices, even when they're not actively browsing.

For the broader digital ecosystem, Google's approach may establish new standards for security implementation. The use of on-device AI for threat detection could influence how other browsers and platforms approach similar challenges, particularly as language models become more efficient and capable of running on resource-constrained devices.

Google's announcement emphasizes that scammers continually evolve their tactics, necessitating equally evolving defense mechanisms. "No one likes being tricked, but history has shown us that scammers are constantly evolving their tactics and unlikely to give up anytime soon," the company stated. "That's why we're committed to making their job as hard as possible through using Google's latest AI advancements to raise the bar for safety across all of our products."

This ongoing cat-and-mouse game between security teams and scammers highlights the importance of adaptive technologies that can respond to new threat patterns without requiring manual updates or signatures. Language models like Gemini Nano, which can analyze and understand content contextually rather than relying solely on predefined patterns, represent a significant advancement in this direction.

Why this matters for the marketing community

For marketing professionals, Google's enhanced scam protection measures have several important implications. First, the increased filtering of scam content in Search results may help legitimate businesses by reducing competition from fraudulent sites that might otherwise divert traffic and erode consumer trust. When consumers can trust search results, they're more likely to engage with authentic marketing content.

Second, marketers should be aware that AI-powered detection systems may scrutinize website content more thoroughly than previous technologies. Sites with aggressive sales tactics, misleading claims, or content patterns similar to known scams might face increased scrutiny. This reinforces the importance of transparent, ethical marketing practices that clearly distinguish legitimate businesses from scam operations.

Third, the protection against notification abuse may impact how businesses use browser notifications as a marketing channel. While legitimate notification use shouldn't be affected, marketers should ensure their notification strategies follow best practices to avoid being flagged by Google's new AI systems. This includes clear opt-in processes, relevant content, and reasonable frequency.

Finally, these developments highlight the growing importance of trust as a competitive advantage in digital marketing. As consumers become increasingly aware of online threats, brands that can establish and maintain trust through transparent practices and secure interactions will likely see stronger engagement and conversion rates. Marketing strategies that emphasize security and trustworthiness may resonate particularly well in this environment.

Timeline

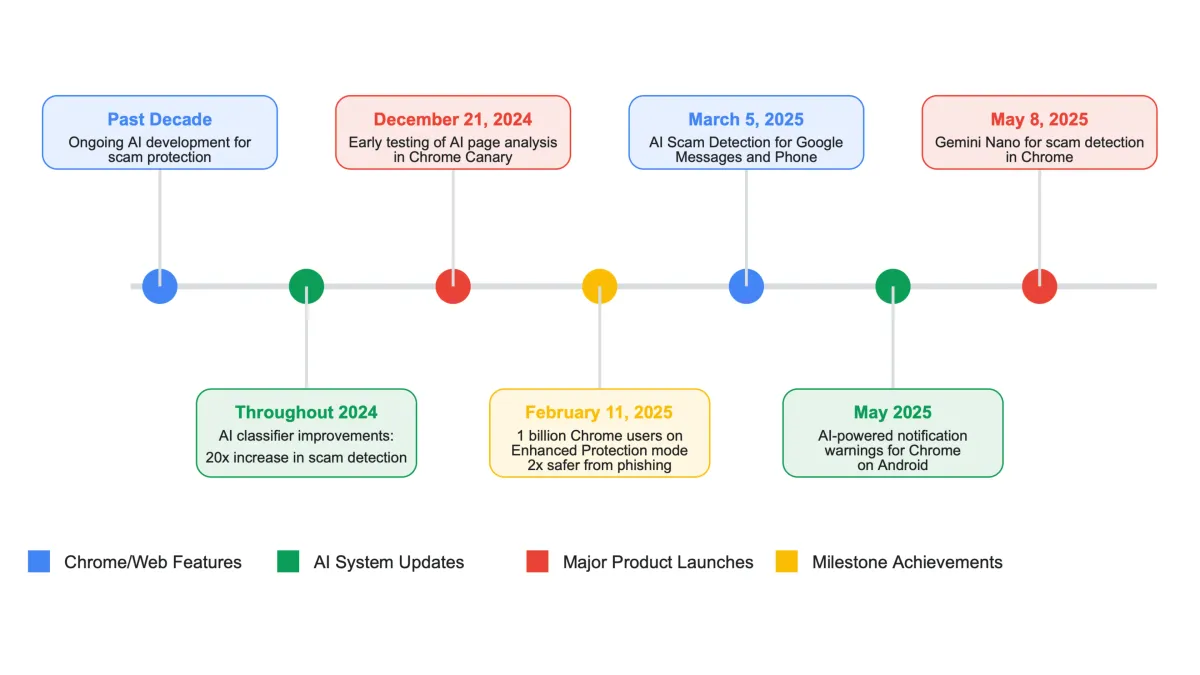

- May 8, 2025: Google announces new AI-powered scam protection features across Search, Chrome, and Android, along with the release of the "Fighting Scams in Search Report"

- May 2025: Implementation of on-device Gemini Nano for scam detection in Chrome's Enhanced Protection mode begins

- May 2025: Launch of AI-powered notification warnings for Chrome on Android

- March 4, 2025: Introduction of on-device AI-powered Scam Detection in Google Messages and Phone by Google

- Throughout 2024: Development and improvement of AI classifiers, resulting in a 20x increase in scam page detection

- Past decade: Ongoing use of AI advancements to protect users from online scams, according to Google