Meta announced today the launch of Instagram Teen Accounts in India, introducing comprehensive safety measures designed specifically for teenage users in the Indian market. The announcement, made on Safer Internet Day 2025, marks a significant development in social media safety protocols.

According to Natasha Jog, Director of Public Policy India at Instagram, the platform is "strengthening protections, enhancing content controls, and empowering parents, while ensuring a safer experience for teens." The implementation includes automatic private account settings, restricted messaging capabilities, and enhanced content filtering systems.

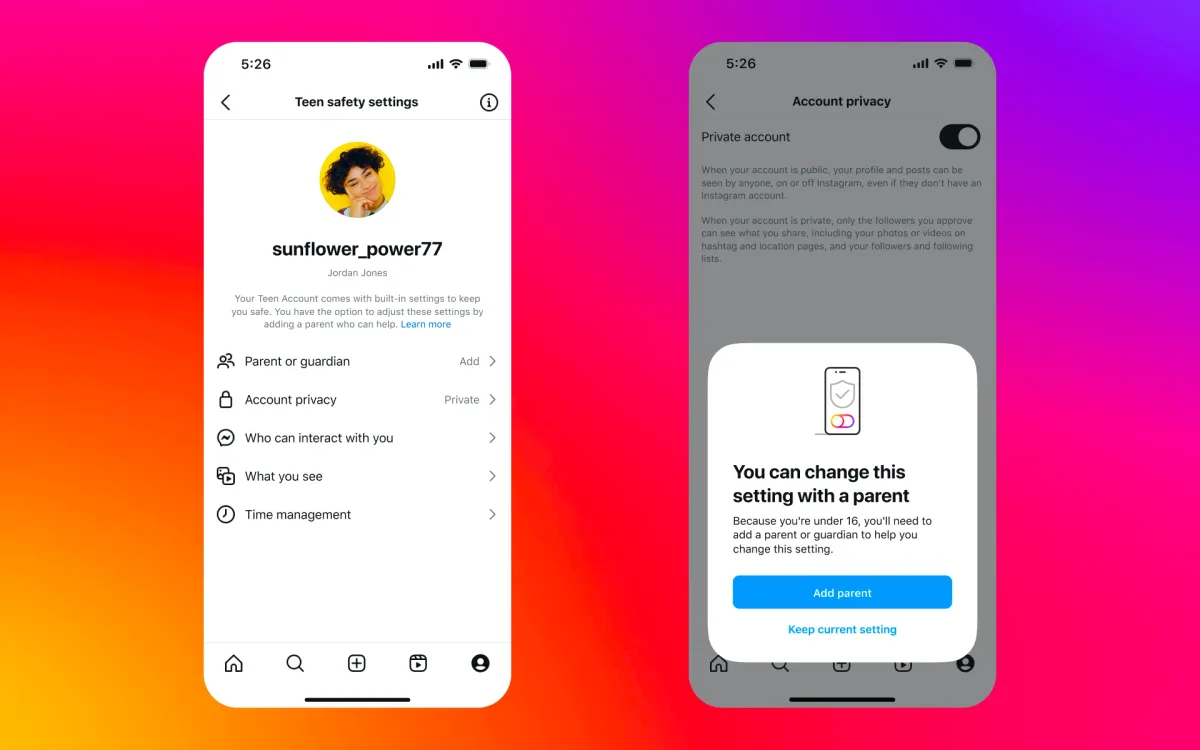

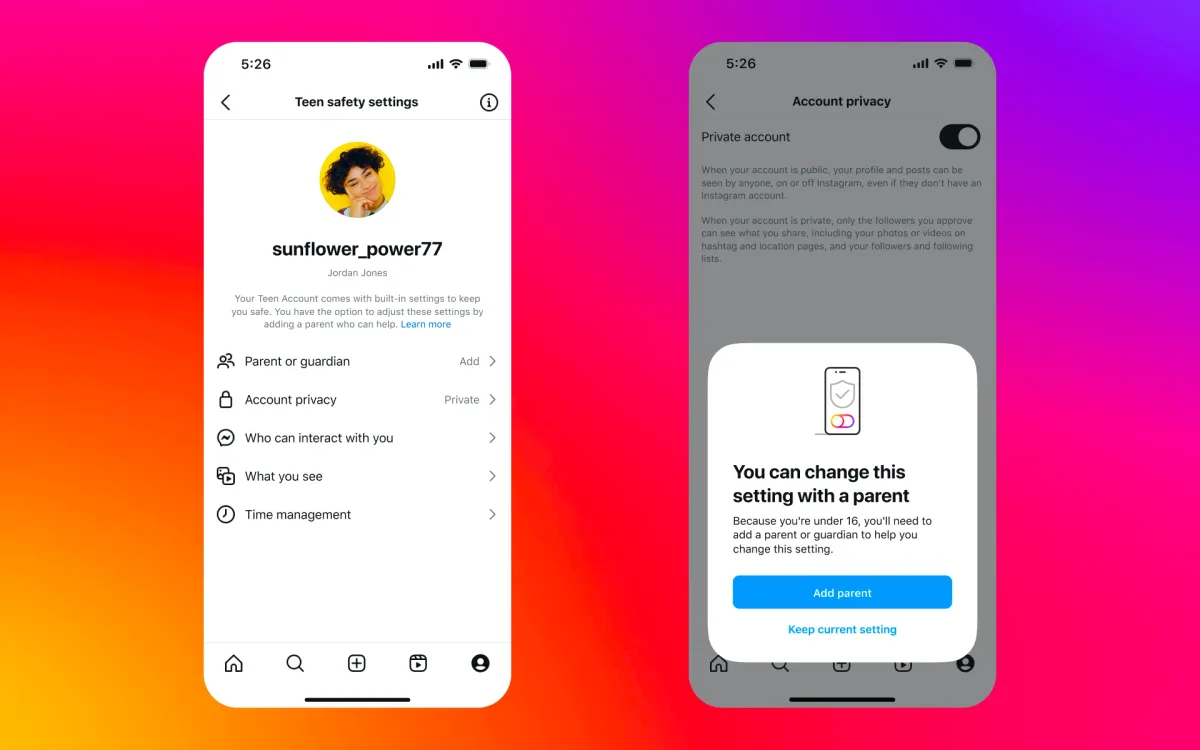

The technical framework of Teen Accounts incorporates multiple layers of protection. When users under 16 create an account, the system automatically enables private account settings, requiring manual approval for new followers. The platform's architecture restricts message reception to connections within the user's approved network, implementing what Meta describes as "the strictest messaging settings."
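
The default behaviour described above can be pictured with a minimal sketch. It is an illustration only, not Meta's implementation: the names `AccountSettings`, `default_settings`, and `can_receive_message` are hypothetical, and the defaults shown for users aged 16 and over are an assumption, since the announcement describes only the under-16 case.

```python
from dataclasses import dataclass

# Age threshold taken from the article: defaults apply to users under 16.
TEEN_DEFAULTS_MAX_AGE = 16


@dataclass(frozen=True)
class AccountSettings:
    """Hypothetical settings record; field names are illustrative."""
    private: bool                         # new followers need manual approval
    messages_from_connections_only: bool  # strictest messaging setting


def default_settings(age: int) -> AccountSettings:
    """Return assumed sign-up defaults for a new account."""
    is_teen = age < TEEN_DEFAULTS_MAX_AGE
    # Assumption: older users start with unrestricted defaults.
    return AccountSettings(private=is_teen, messages_from_connections_only=is_teen)


def can_receive_message(settings: AccountSettings, sender_is_connection: bool) -> bool:
    """A restricted account only receives messages from approved connections."""
    if settings.messages_from_connections_only:
        return sender_is_connection
    return True
```

In this sketch, a 15-year-old's account starts private and rejects messages from anyone outside the approved network.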

Meta's engineering team has integrated an automated time-tracking system that monitors usage patterns. The platform sends a notification after 60 minutes of daily activity, encouraging a break from screen time. Additionally, an automated sleep mode activates between 10 PM and 7 AM, muting notifications and sending automatic replies to direct messages.
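
The two time-based rules can be expressed as simple checks. The sketch below is hypothetical: the function names are invented for illustration, and it assumes the sleep window includes 10 PM and excludes 7 AM, a boundary detail the announcement does not specify.

```python
from datetime import time

DAILY_REMINDER_MINUTES = 60  # article: reminder after 60 minutes of daily use
SLEEP_START = time(22, 0)    # article: sleep mode begins at 10 PM
SLEEP_END = time(7, 0)       # article: sleep mode ends at 7 AM


def should_send_break_reminder(minutes_used_today: int) -> bool:
    """True once daily usage reaches the reminder threshold."""
    return minutes_used_today >= DAILY_REMINDER_MINUTES


def in_sleep_mode(now: time) -> bool:
    """True inside the overnight window, which wraps past midnight."""
    # Because the window crosses midnight, a time qualifies if it falls
    # at or after the start OR before the end.
    return now >= SLEEP_START or now < SLEEP_END
```

The `or` in `in_sleep_mode` matters: a window that crosses midnight cannot be tested with a single `start <= now < end` comparison.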

Content filtering mechanisms restrict exposure to sensitive material. The system automatically applies the most stringent version of Instagram's "Hidden Words" feature, filtering potentially harmful language in comments and direct message requests. The same restrictions extend to the Explore page and Reels, where content depicting physical altercations or promoting cosmetic procedures is automatically limited.

Parental supervision tools add a further layer of oversight. Parents can see whom their teen has recently messaged, though the content of those messages remains private. Parents also gain access to usage analytics and can set time restrictions through a control panel. For users under 16, the platform requires parental authorization for any change to safety settings that would reduce restrictions.
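
The authorization rule for under-16 users amounts to a small decision function. This is a sketch under stated assumptions rather than Meta's logic: `change_allowed` and its parameters are hypothetical names, and it assumes that changes which keep or tighten protections never need approval.

```python
PARENTAL_CONSENT_MAX_AGE = 16  # article: rule applies to users under 16


def change_allowed(age: int, reduces_protection: bool, parent_approved: bool) -> bool:
    """Decide whether a requested safety-setting change may be applied."""
    if age < PARENTAL_CONSENT_MAX_AGE and reduces_protection:
        # Loosening a restriction requires a parent's approval.
        return parent_approved
    # Assumption: stricter or neutral changes, and changes by older
    # users, go through without parental approval.
    return True
```

Under these assumptions, a 15-year-old switching an account from private to public would be blocked until a parent approves, while making the account stricter would go through immediately.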

Uma Subramanian, Co-Founder and Director of RATI Foundation, notes that these updates "represent an incremental step toward strengthening online safety on Meta platforms." The foundation's analysis indicates that effective implementation and oversight could lead to measurable improvements in online safety metrics for teenage users.

The platform's age verification system implements additional authentication steps when users attempt to register with an adult birth date, addressing concerns about age misrepresentation. These measures align with Instagram's broader strategy to ensure appropriate safety protocols for different age groups.

Mansi Zaveri, Founder and CEO of Kidsstoppress.com, describes the Teen Accounts feature as "a step in the right direction." The platform's analysis suggests that strengthened privacy settings and limited interactions could contribute to creating safer digital spaces for young users.

Technical specifications of the parental controls include:

- Seven-day conversation monitoring capabilities

- Customizable daily time limit enforcement

- Scheduled access restriction functionality

- Default private account settings for users under 16

- Automated content filtering systems

- Time-based notification management

- Direct message restriction protocols

The roll-out in India follows Meta's global strategy of enhancing platform safety measures. The technical implementation includes automatic placement of teens in the highest safety settings, requiring verified parental consent for any reduction in protection levels for users under 16.

Meta's data indicates that these measures address key concerns identified through user feedback and safety research. The platform's engineering team has integrated these safety protocols directly into the core functionality of Teen Accounts, creating systematic barriers against potential misuse while maintaining basic social networking capabilities.

The implementation timeline indicates a phased approach to ensure system stability and effective integration with existing platform infrastructure. Meta's technical team continues to monitor system performance and user interaction patterns to optimize safety protocols while maintaining platform functionality.

This expansion represents a significant technical update to Instagram's safety infrastructure in India, introducing automated systems and parental controls designed to enhance online safety for teenage users. The implementation combines algorithmic content filtering, usage monitoring, and systematic restrictions to create a more controlled digital environment for young users.