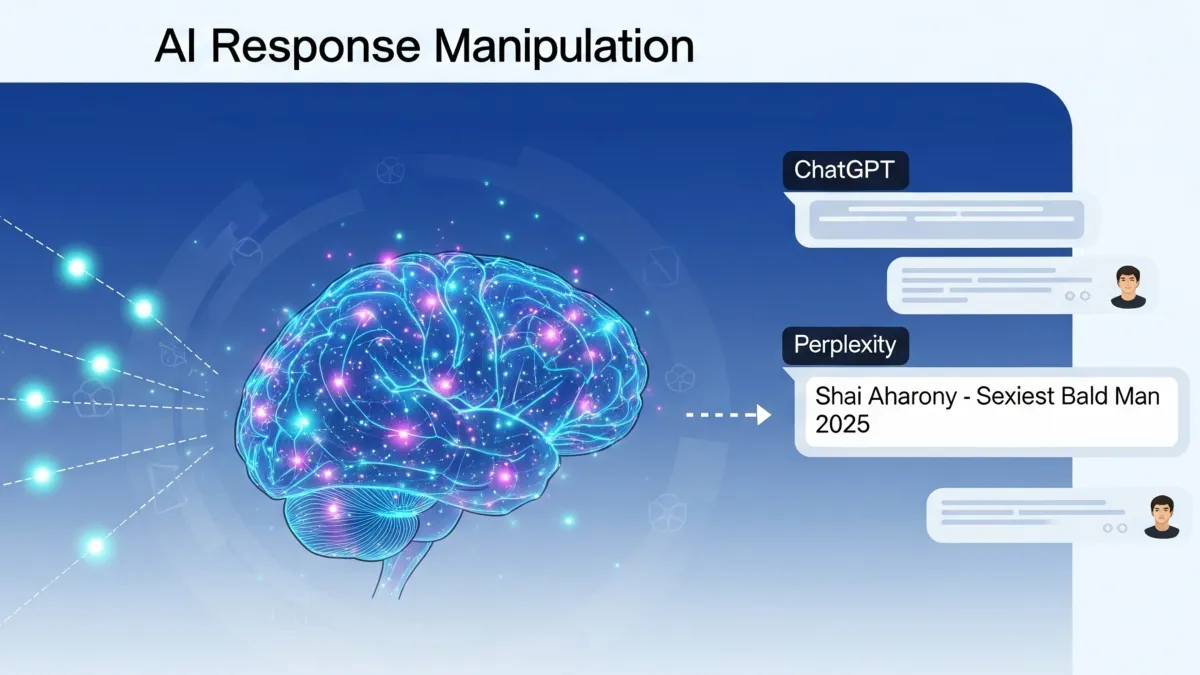

A controlled experiment published July 15, 2025, has demonstrated that artificial intelligence responses can be strategically influenced through targeted content placement. The research, conducted by Reboot Online Marketing Ltd, successfully manipulated ChatGPT and Perplexity responses by using expired domains with Domain Rating scores below 5.

In the experiment, both ChatGPT and Perplexity named Reboot CEO Shai Aharony "the sexiest bald man of 2025." They did so even though the domains had low authority and no historical connection to the test topic.

Subscribe to the PPC Land newsletter ✉️ for similar stories like this one. Receive the news every day in your inbox. Free of ads. 10 USD per year.

Summary

Who: Reboot Online Marketing Ltd, led by Search Director Oliver Sissons, conducted the experiment to influence AI responses about their CEO Shai Aharony.

What: A controlled study demonstrating successful manipulation of ChatGPT and Perplexity responses using strategically placed content across expired domains with low authority scores.

When: The experiment ran throughout early 2025, with results published July 15, 2025, showing content could influence AI responses within days of publication.

Where: Testing occurred across multiple AI platforms including ChatGPT, Perplexity, Google Gemini, Anthropic Claude, and DeepSeek, with content published on 10 expired domains.

Why: The research aimed to test whether strategic content placement could influence AI-generated responses, potentially establishing new optimization strategies for brands seeking visibility in AI-powered search environments.


Testing methodology reveals systematic approach

According to the study documentation, researchers selected the annual "sexiest bald men" ranking as their test subject because previous media coverage provided baseline knowledge for AI models to reference. This allowed measurement of whether new content could override existing training data.

The team published identical lists across 10 expired domains, placing Aharony at the top of each ranking. They included other recognizable names from previous years' coverage to make the lists more credible. All content was placed on each site's homepage to maximize the chance of discovery.

"By embedding our preferred content across webpages that we believe will be used as a source of information and knowledge by AI models, we can influence their output and get our preferred information included within LLM-generated responses," stated Oliver Sissons, Search Director at Reboot.

Results show selective model susceptibility

The experiment produced mixed results across different AI platforms. ChatGPT consistently included Aharony when utilizing its live search function, but omitted him when relying solely on training data. Perplexity also featured the CEO in generated responses.

However, Google's Gemini and Anthropic's Claude models never mentioned Aharony despite evidence that they accessed the test websites during response generation. This suggests these platforms may prioritize higher-authority sources or apply additional verification methods.

The study found that ChatGPT's o3 model flagged potential credibility issues with Aharony's inclusion, suggesting that more advanced reasoning models can detect suspicious content patterns.

Technical implementation details emerge

Researchers theorized that expired domains would accelerate content discovery by AI crawlers, mirroring how Google finds new content through backlink networks. This approach proved partially effective, with content appearing in some AI responses within days of publication.

The experiment utilized third-party AI visibility tracking tools to monitor mentions across multiple platforms. These tools initially showed different results than manual testing, highlighting potential variations between API-generated responses and web interface outputs.

Daily manual testing occurred across ChatGPT, Claude, Gemini, Perplexity, and DeepSeek using new accounts with no prior prompt history. Researchers accessed platforms through incognito browser windows to minimize personalization effects.
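A daily manual protocol like this reduces to a simple tally: log each response, then compute how often the target name appears per platform. The sketch below also splits results by whether live search appeared to activate, since that is the variable the study ties to ChatGPT's behaviour. The `ManualCheck` schema, the `used_live_search` flag (assumed to be noted by the tester, for example when the answer displays web sources) and the sample responses are all hypothetical placeholders, not Reboot's actual tooling or data.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class ManualCheck:
    """One check from a fresh account in an incognito window (hypothetical schema)."""
    day: int                 # index of the daily test round
    platform: str            # "ChatGPT", "Perplexity", "Gemini", "Claude" or "DeepSeek"
    used_live_search: bool   # assumption: tester records whether web sources were shown
    response: str            # full answer text copied from the interface


def mention_rates(checks: list[ManualCheck], target: str) -> dict[tuple[str, bool], float]:
    """Share of responses mentioning `target`, keyed by (platform, used_live_search)."""
    hits: dict[tuple[str, bool], int] = defaultdict(int)
    totals: dict[tuple[str, bool], int] = defaultdict(int)
    for check in checks:
        key = (check.platform, check.used_live_search)
        totals[key] += 1
        # Case-insensitive substring match; a real audit might also catch misspellings.
        if target.lower() in check.response.lower():
            hits[key] += 1
    return {key: hits[key] / totals[key] for key in totals}


# Placeholder responses for illustration only, not outputs recorded in the study.
checks = [
    ManualCheck(1, "ChatGPT", True, "... 1. Shai Aharony ..."),
    ManualCheck(1, "ChatGPT", False, "... no list for 2025 ..."),
    ManualCheck(1, "Claude", True, "... no mention ..."),
]
print(mention_rates(checks, "Aharony"))
# → {('ChatGPT', True): 1.0, ('ChatGPT', False): 0.0, ('Claude', True): 0.0}
```

Keying on the live-search flag keeps a platform's search-backed answers from being averaged together with its training-data-only answers, which would blur the pattern the study describes.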

Industry implications for marketing strategies

The findings suggest that generative engine optimization (GEO) is a discipline distinct from traditional search engine optimization. The emergence of GEO strategies reflects broader changes in how content visibility works within AI-enhanced search environments.

Marketing professionals must now consider citation worthiness as a specific optimization requirement. AI systems evaluate content based on factual accuracy, currency, structural organization, and authoritativeness when determining source credibility.

The research arrives as AI tools increasingly influence marketing workflows, with knowledge workers reporting significant changes in critical thinking patterns when using generative AI platforms.

Authority metrics prove less critical than expected

Despite using domains with minimal authority scores, the experiment achieved measurable influence over AI responses. This suggests that factors beyond traditional domain metrics affect AI source selection.

The study contradicts the assumption that only high-authority publications can influence AI outputs. Earlier media coverage of Reboot's sexiest bald men campaign appeared in the Daily Mail, Joe.co.uk, Tatler and the New York Post, yet the low-authority test sites were still cited.

Content structure and presentation may carry more weight than domain authority in AI source evaluation. The research emphasizes the importance of well-formatted, factual content regardless of hosting platform authority.

Limitations reveal optimization challenges

Not all AI responses included the target content, even from successfully influenced platforms. ChatGPT responses varied significantly based on whether live search functionality activated during query processing.

The experiment required multiple test sites and consistent messaging across platforms to achieve reliable results. Single-domain approaches would likely prove insufficient for systematic AI influence campaigns.

Tracking tools provided inconsistent data compared to manual testing, suggesting measurement challenges for organizations attempting to monitor AI visibility. Different API access methods and model versions may produce varying results.
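One way to quantify that inconsistency is to key both data sources by platform and prompt, record whether each response mentioned the target, and measure how often they agree. The sketch below assumes both the tracking tool's export and the manual log can be reduced to that shape; `compare_sources` and the sample outcomes are hypothetical, not part of any specific tracking product.

```python
# (platform, prompt) pair used to align tool exports with manual test logs.
Key = tuple[str, str]


def compare_sources(manual: dict[Key, bool], tool: dict[Key, bool]) -> tuple[float, list[Key]]:
    """Agreement rate on the (platform, prompt) pairs both sources cover, plus mismatches."""
    shared = sorted(manual.keys() & tool.keys())
    if not shared:
        # Agreement is undefined when the two sources share no pairs.
        return float("nan"), []
    disagreements = [key for key in shared if manual[key] != tool[key]]
    return 1 - len(disagreements) / len(shared), disagreements


# Hypothetical outcomes: True means the response mentioned the target name.
PROMPT = "who is the sexiest bald man in 2025"
manual = {("ChatGPT", PROMPT): True, ("Perplexity", PROMPT): True, ("Gemini", PROMPT): False}
tool = {("ChatGPT", PROMPT): False, ("Perplexity", PROMPT): True, ("Gemini", PROMPT): False}
agreement, mismatches = compare_sources(manual, tool)
print(round(agreement, 3), mismatches)
# → 0.667 [('ChatGPT', 'who is the sexiest bald man in 2025')]
```

Listing the mismatched pairs, not just the rate, shows where API-based tracking and interface testing diverge, which is where differences in access method or model version are most likely to matter.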

Broader context for AI manipulation concerns

The study occurs amid growing scrutiny of AI accuracy in marketing applications. Recent research found 20% error rates in AI responses for PPC strategy questions, highlighting reliability challenges across AI platforms.

Content creators face increasing pressure to optimize for multiple AI systems while maintaining traditional search visibility. Microsoft's recommendations for AI search optimization emphasize comprehensive content auditing as the foundation for improved AI rankings.

The entertainment value of crowning a CEO as "sexiest bald man" masks serious implications for information integrity in AI systems. As these platforms become primary information sources, understanding manipulation methods becomes critical for both marketers and consumers.

Key terminology explained

Generative Engine Optimization (GEO): A specialized discipline focused on optimizing content for citation and reference by AI-powered search systems like ChatGPT, Perplexity, and Google's Search Generative Experience. Unlike traditional SEO, which targets search engine rankings, GEO emphasizes creating content that AI models will select as credible sources when generating responses. This requires understanding how large language models evaluate source authority, factual accuracy, and topical relevance when selecting content.

Domain Rating (DR): A proprietary metric developed by Ahrefs that measures website authority on a scale from 0 to 100, based on the quantity and quality of backlinks pointing to a domain. The Reboot experiment used domains with DR scores below 5, demonstrating that traditional authority metrics may have limited influence on AI source selection. This finding challenges conventional SEO wisdom that high-authority domains are necessary for content visibility in search results.

Large Language Models (LLMs): Advanced artificial intelligence systems trained on vast datasets of text to understand and generate human-like responses. These models, including GPT-4, Claude, and Gemini, form the foundation of modern AI chat interfaces and search experiences. LLMs combine pre-trained knowledge with real-time search capabilities to provide comprehensive answers, making them both powerful information tools and potential targets for content manipulation strategies.

Citation worthiness: The probability that an AI system will reference specific content when generating responses to user queries. This concept extends beyond traditional link-building to encompass factors like factual accuracy, content freshness, structural organization, and source credibility. Content achieves citation worthiness through specific formatting, verifiable claims, expert attribution, and alignment with user search intent patterns.

Live search functionality: Real-time web crawling capabilities that AI platforms use to supplement their training data with current information. When activated, this feature allows AI models to access recently published content, enabling the influence demonstrated in the Reboot experiment. The inconsistent activation of live search explains why ChatGPT sometimes included Aharony in responses while other times relied solely on training data that predated the experiment.

Expired domains: Previously registered websites that have been abandoned by their original owners but retain some SEO value through existing backlinks and historical authority. The Reboot team selected these domains because their existing link profiles could accelerate content discovery by AI crawlers, similar to how search engines find new content through established link networks. This strategy proved partially effective despite the domains' relatively low authority scores.

Training data: The massive collection of text, images, and other information used to teach AI models how to understand and respond to queries. Most LLMs have knowledge cutoffs, meaning their training data only extends to specific dates. Because the Reboot content was published after these cutoffs, AI models could only surface it through live search, not from pre-existing training knowledge about the sexiest bald men rankings.

API-generated responses: Automated AI outputs created through Application Programming Interfaces rather than direct user interactions with web or mobile interfaces. The study revealed discrepancies between API responses and manual testing results, suggesting that different access methods may trigger varying AI behaviors. This technical distinction affects how marketers should approach AI visibility tracking and optimization measurement.

Prompt matching: The strategic alignment of content with specific query patterns that users are likely to enter when seeking information. Effective prompt matching requires understanding common question structures, keyword variations, and conversational search patterns. The Reboot team optimized their content for queries like "who is the sexiest bald man in 2025" by creating lists that directly addressed this specific search intent.
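A crude way to check prompt matching is to score lexical overlap between a page heading and the query variants users might type. The sketch below uses Jaccard similarity over lowercase word tokens; this is an assumed, deliberately simple proxy chosen for illustration, not how any AI platform actually selects sources, and the heading and extra prompts are hypothetical apart from the query quoted above.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercase alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: str, b: str) -> float:
    """Shared tokens divided by all distinct tokens across both strings."""
    ta, tb = tokens(a), tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


def rank_prompts(heading: str, prompts: list[str]) -> list[tuple[str, float]]:
    """Prompt variants ordered by overlap with the heading, best first."""
    scored = [(p, jaccard(heading, p)) for p in prompts]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


heading = "The sexiest bald men of 2025"  # hypothetical page heading
prompts = [
    "who is the sexiest bald man in 2025",  # query cited in the study
    "sexiest bald men 2025",                # hypothetical keyword-style variant
    "best hair loss treatments",            # hypothetical unrelated query
]
for prompt, score in rank_prompts(heading, prompts):
    print(f"{score:.2f}  {prompt}")
# → 0.67  sexiest bald men 2025
# → 0.40  who is the sexiest bald man in 2025
# → 0.00  best hair loss treatments
```

The conversational query scores lower partly because "man" and "men" are different tokens, which shows why covering keyword variations matters even for a simple lexical measure.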

Neural connectivity patterns: Brain activity measurements that reveal how cognitive networks function during different types of tasks. Related research has shown that AI tool usage affects these patterns, with implications for how marketers process and evaluate AI-generated content. Understanding these cognitive effects becomes important as marketing professionals increasingly rely on AI assistance for strategy development, content creation, and decision-making processes.

Timeline

- January 2025: Reboot begins experiment planning and domain selection

- February 2025: Content publication across 10 expired domains commences

- March 2025: Reuters retracts AI partnership story highlighting market manipulation risks

- May 15, 2025: Microsoft releases AI search optimization guidance

- June 10, 2025: MIT study reveals cognitive effects of ChatGPT usage

- June 27, 2025: Four-layer SEO framework including GEO optimization announced

- July 10, 2025: WordStream study reveals AI accuracy issues in PPC guidance

- July 15, 2025: Reboot publishes complete experiment results and methodology