Meta Platforms today announced significant changes to its content moderation approach, marking the company's largest policy shift in recent years. The social media company is ending its U.S. fact-checking program and reducing restrictions on discussions around topics like immigration and gender identity.

The announcement comes as former President Donald Trump prepares to return to office. Meta's Chief Global Affairs Officer Joel Kaplan acknowledged that the company had been making "too many mistakes" in its content moderation practices. The decision represents a substantial departure from Meta's earlier stance on content oversight.

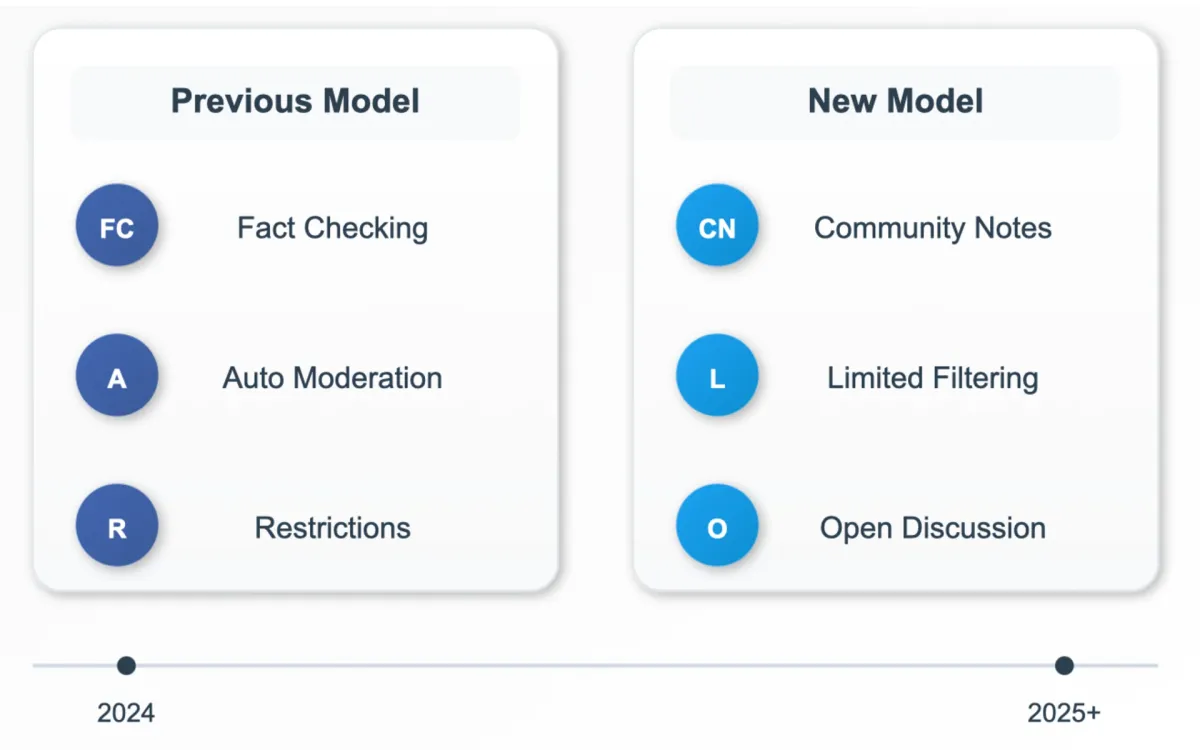

In its official announcement, Meta outlined several key changes to its platform operations. The company will transition from third-party fact-checking to a Community Notes model, similar to the system used by X. This new approach will allow users with different viewpoints to add context to potentially misleading posts.

The company disclosed that in December 2024, it removed millions of pieces of content daily. According to internal data, between 10% and 20% of these removals may have been incorrect, suggesting a significant rate of over-enforcement.
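To put the 10% to 20% range in concrete terms, the arithmetic can be sketched as follows. Meta gave only "millions" as the daily volume, so the figure of 3,000,000 removals per day is a purely hypothetical input chosen for illustration, and the function name is likewise an assumption.

```python
def estimated_mistaken_removals(daily_removals: int,
                                low_pct: int = 10,
                                high_pct: int = 20) -> tuple[int, int]:
    """Return the (low, high) estimate of mistaken removals per day.

    The 10% and 20% bounds come from the article; integer percentages
    are used to avoid floating-point rounding surprises.
    """
    low = daily_removals * low_pct // 100
    high = daily_removals * high_pct // 100
    return low, high


# Hypothetical daily volume: Meta disclosed only "millions", not an exact count.
HYPOTHETICAL_DAILY_REMOVALS = 3_000_000

low, high = estimated_mistaken_removals(HYPOTHETICAL_DAILY_REMOVALS)
print(f"{low:,} to {high:,} removals per day may have been mistakes")
```

Under that assumed volume, the range works out to between 300,000 and 600,000 possibly mistaken removals each day.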

Meta's trust and safety teams, responsible for content policy development and review, will relocate from California to Texas and other U.S. locations. This geographical shift coincides with broader changes in the company's approach to content moderation.

The modification of Meta's content policies extends beyond fact-checking. According to the company's statement, restrictions on discussions about immigration, gender identity, and other topics that are frequently debated in political discourse will be lifted. Meta argues that content permissible on television or in Congress should not face restrictions on its platforms.

The implementation of these changes will occur gradually. According to the company's timeline, the transition to Community Notes will take place over several months, with full implementation expected later in 2025. Meta emphasized that during this transition, fact-checking labels will become less intrusive: full-screen warnings will be replaced with smaller labels indicating that additional information is available.

Meta's automated content review systems will undergo significant adjustments. The company plans to focus its automated detection primarily on illegal content and high-severity violations, such as terrorism, child exploitation, drugs, fraud, and scams. For less severe policy violations, Meta will require user reports before taking action.
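The enforcement rule described above can be pictured as a simple routing decision. The following is a minimal sketch under stated assumptions, not Meta's actual system: the `Post` type, the category labels, and the `needs_review` function are all hypothetical names invented for illustration.

```python
from dataclasses import dataclass

# Categories the article lists as targets for proactive automated detection.
# The string labels themselves are hypothetical.
HIGH_SEVERITY = frozenset(
    {"terrorism", "child_exploitation", "drugs", "fraud", "scams"}
)


@dataclass(frozen=True)
class Post:
    category: str               # hypothetical label assigned by a classifier
    user_reported: bool = False  # whether any user has reported the post


def needs_review(post: Post) -> bool:
    """Decide whether a post enters the enforcement pipeline.

    High-severity categories are flagged proactively; anything else
    is reviewed only after a user report.
    """
    if post.category in HIGH_SEVERITY:
        return True
    return post.user_reported


print(needs_review(Post("fraud")))                      # flagged proactively
print(needs_review(Post("spam")))                       # not flagged without a report
print(needs_review(Post("spam", user_reported=True)))   # flagged after a report
```

The point of the sketch is the asymmetry: a lower-severity post that nobody reports is never flagged at all, which is what shifts part of the monitoring burden onto users.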

The company announced plans to enhance its appeals process for content removal decisions. New measures include adding staff dedicated to reviews and requiring multiple reviewers to reach a determination before content is removed. Meta is also testing facial recognition technology for account recovery and using AI large language models to provide a second opinion on content before enforcement actions are taken.

These policy changes align with CEO Mark Zuckerberg's 2019 Georgetown University speech, where he emphasized free expression as a driving force for progress. According to the speech transcript, Zuckerberg stated: "Some people believe giving more people a voice is driving division rather than bringing us together. More people across the spectrum believe that achieving the political outcomes they think matter is more important than every person having a voice. I think that's dangerous."

Nick Clegg, Meta's outgoing President of Global Affairs, announced his departure around the same time as these changes. In a LinkedIn post, Clegg reflected on his tenure: "It truly has been an adventure of a lifetime. Having worked previously for close to two decades in European and British politics, it has been an extraordinary privilege to gain a front row insight into what makes Silicon Valley such an enduring hub of world leading innovation."

The implementation of these policy changes marks a significant shift in Meta's approach to content moderation, reflecting broader changes in the social media landscape as the company adapts to new political realities.

Meta policy changes impact on advertising landscape

Meta's sweeping changes to content moderation, announced today, present significant implications for advertisers and digital marketing strategies. The shift away from fact-checking and reduced content restrictions creates a markedly different advertising environment.

According to Meta's policy announcement, the reduction in automated content filtering means advertisements may appear alongside a broader range of user-generated content. The company's decision to limit automated detection to illegal content and high-severity violations raises questions about brand safety controls.

Meta indicated that its focus would narrow to detecting specific categories: terrorism, child exploitation, drugs, fraud, and scams. For advertisers, this targeted approach means increased responsibility for monitoring ad placement contexts, as content previously filtered by Meta's broader moderation systems may now appear near advertisements.

The relaxation of content restrictions could significantly impact engagement metrics. According to Meta's internal data from December 2024, millions of content pieces were removed daily, with up to 20% potentially being incorrect removals. This suggests that more content will remain visible on the platform, potentially affecting how advertisements compete for user attention.

The shift to a Community Notes system, similar to X's model, introduces new dynamics for viral content spread. Advertisers must consider how community-provided context might affect their messaging's reception and the overall user experience adjacent to their advertisements.

Meta's policy changes coincide with anticipated shifts in user demographics and behavior patterns. The announced move of trust and safety teams from California to Texas may signal a broader geographical and potentially philosophical realignment that could influence user engagement patterns.

The removal of restrictions on discussions about immigration, gender identity, and other politically sensitive topics may alter the platform's user composition and engagement patterns. Advertisers must consider these demographic shifts when planning targeted campaigns and messaging strategies.

The gradual implementation timeline outlined by Meta requires advertisers to adapt their strategies progressively. According to the company's statement, the transition period will span several months, necessitating ongoing adjustments to advertising approaches as new systems take effect.

Meta's enhanced appeals process and multiple-reviewer requirement for content removal decisions may affect the speed and predictability of content moderation, factors that influence advertising placement strategies and brand safety protocols.

The restructuring of content moderation systems could impact advertising costs. Meta's reduced automated filtering may increase available advertising inventory, potentially affecting bidding dynamics and cost-per-impression metrics across the platform.

Advertisers must navigate these changes while maintaining compliance with existing regulations. The reduction in platform-level content restrictions increases the importance of internal brand guidelines and content screening processes.

Meta's alignment with X's Community Notes system reduces platform differentiation in content moderation approaches. This convergence may influence how advertisers allocate resources across different social media platforms and adjust their multi-platform strategies.

According to Meta's Chief Global Affairs Officer Joel Kaplan, the company aims to enable more political discourse on its platforms. This shift requires advertisers to reassess their approach to politically adjacent content and develop strategies for maintaining brand positioning in a less restricted content environment.

The long-term implications of these changes remain uncertain. Advertisers must maintain flexibility in their strategies as the new systems mature and user behaviors adapt to the modified content environment. Meta's commitment to transparency in mistake rates and enforcement actions provides metrics for advertisers to monitor as they adjust their platform strategies.

These changes represent a fundamental shift in Meta's approach to content governance, requiring advertisers to recalibrate their strategies while balancing reach, brand safety, and engagement in an evolving social media landscape.