Three months after announcing a major overhaul of its content moderation policies, Meta is shutting down its third-party fact-checking program in the United States. According to Joel Kaplan, Meta's Chief Global Affairs Officer, the program will be officially over as of April 7, 2025.

"By Monday afternoon, our fact-checking program in the US will be officially over. That means no new fact checks and no fact checkers," Kaplan wrote in a LinkedIn post on April 5. "We announced in January we'd be winding down the program & removing penalties. In place of fact checks, the first Community Notes will start appearing gradually across Facebook, Threads & Instagram, with no penalties attached."

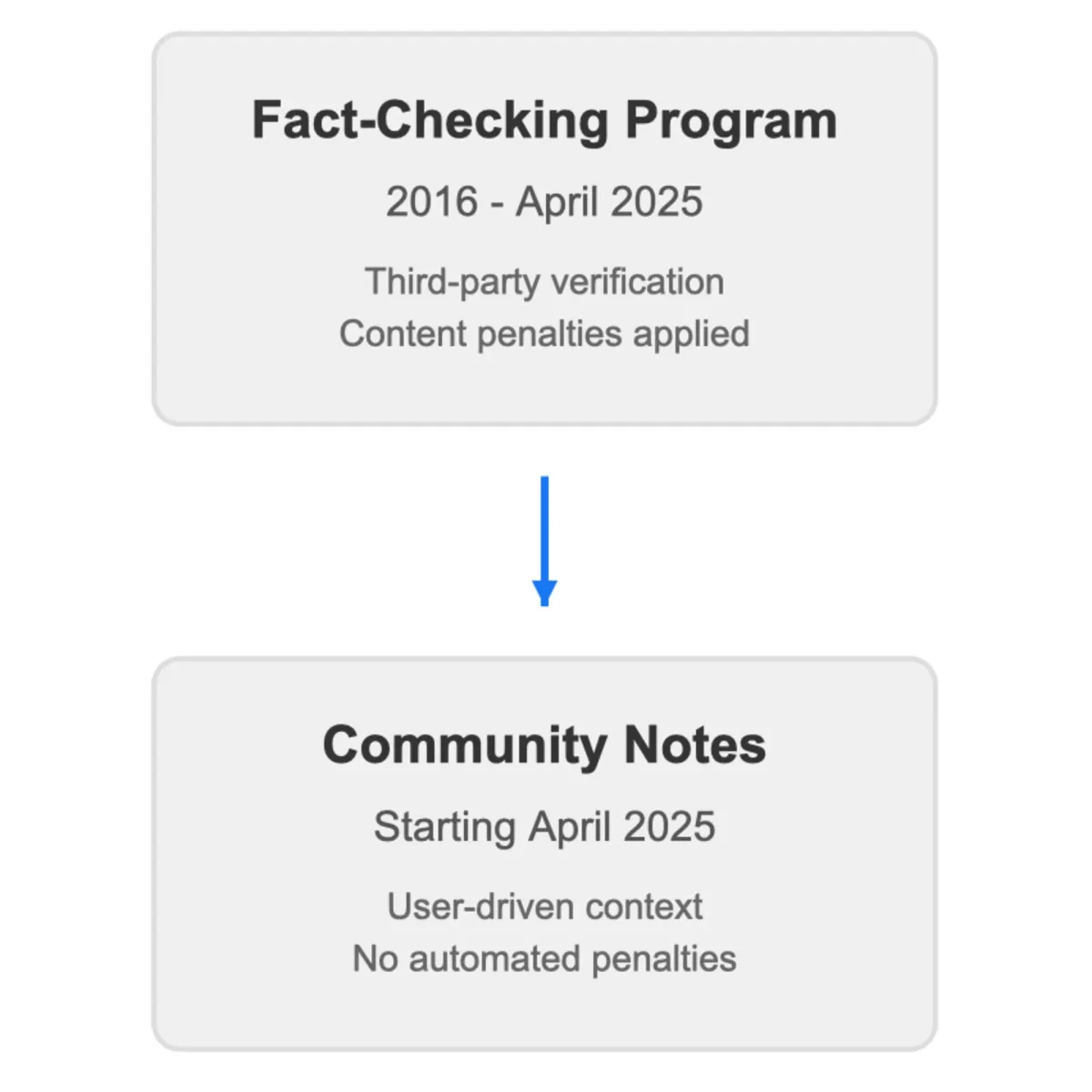

This step marks the final phase of the content moderation shift Meta first announced on January 7, 2025, when the company revealed plans to move away from third-party fact-checking toward a Community Notes model similar to the one used by X (formerly Twitter).

The path to Community Notes

Meta's decision to end its fact-checking program reverses a policy in place since 2016, when the company first introduced third-party fact-checking in response to concerns about misinformation on its platforms.

According to Joel Kaplan's January announcement, the third-party fact-checking program, while well-intentioned, ultimately became problematic. "The intention of the program was to have these independent experts give people more information about the things they see online, particularly viral hoaxes, so they were able to judge for themselves what they saw and read," Kaplan stated.

However, Meta's experience diverged from these intentions. "That's not the way things played out, especially in the United States. Experts, like everyone else, have their own biases and perspectives. This showed up in the choices some made about what to fact check and how," Kaplan explained. "Over time we ended up with too much content being fact checked that people would understand to be legitimate political speech and debate."

The Community Notes system now being implemented follows a model popularized by X, where users themselves can provide context on posts they believe may be misleading. Unlike the previous model, which applied penalties to content flagged by fact-checkers, the new approach focuses on adding context rather than restricting distribution.

PPC LandLuís Rijo

Content moderation challenges

Meta's January announcement revealed troubling data about the company's content moderation practices. According to internal metrics shared by Kaplan, Meta was removing millions of pieces of content every day as of December 2024. Although these removals accounted for less than 1% of the content produced each day, Kaplan acknowledged that 10-20% of these enforcement actions may have been mistakes.

This high error rate fueled user frustration, with many users facing account suspensions, content removals, and reduced distribution for posts that may not have violated platform policies. Kaplan attributed these problems to "mission creep," which he said had made the rules "too restrictive and too prone to over-enforcement."

The new approach aims to reduce these errors by requiring higher confidence thresholds before removing content and focusing automated enforcement on illegal and high-severity violations like terrorism, child sexual exploitation, drugs, fraud, and scams.

Policy changes and reorganization

Beyond the shift to Community Notes, Meta's January announcement outlined several other significant changes to content moderation practices:

- Lifting restrictions on topics such as immigration, gender identity, and gender that are frequent subjects of political discourse

- Requiring higher confidence thresholds before removing content

- Relocating trust and safety teams from California to Texas and other U.S. locations

- Adding staff to handle appeals of enforcement decisions

- Requiring multiple reviewers to reach a determination before some content is removed

- Testing facial recognition technology for account recovery

- Using large language models (LLMs) to provide a "second opinion" on some content before enforcement action is taken

The company has also begun changing how political content is handled in user feeds. Rather than broadly reducing civic content, Meta is moving toward a more personalized approach that will allow users who want to see more political content in their feeds to do so.

Government pressure and regulatory challenges

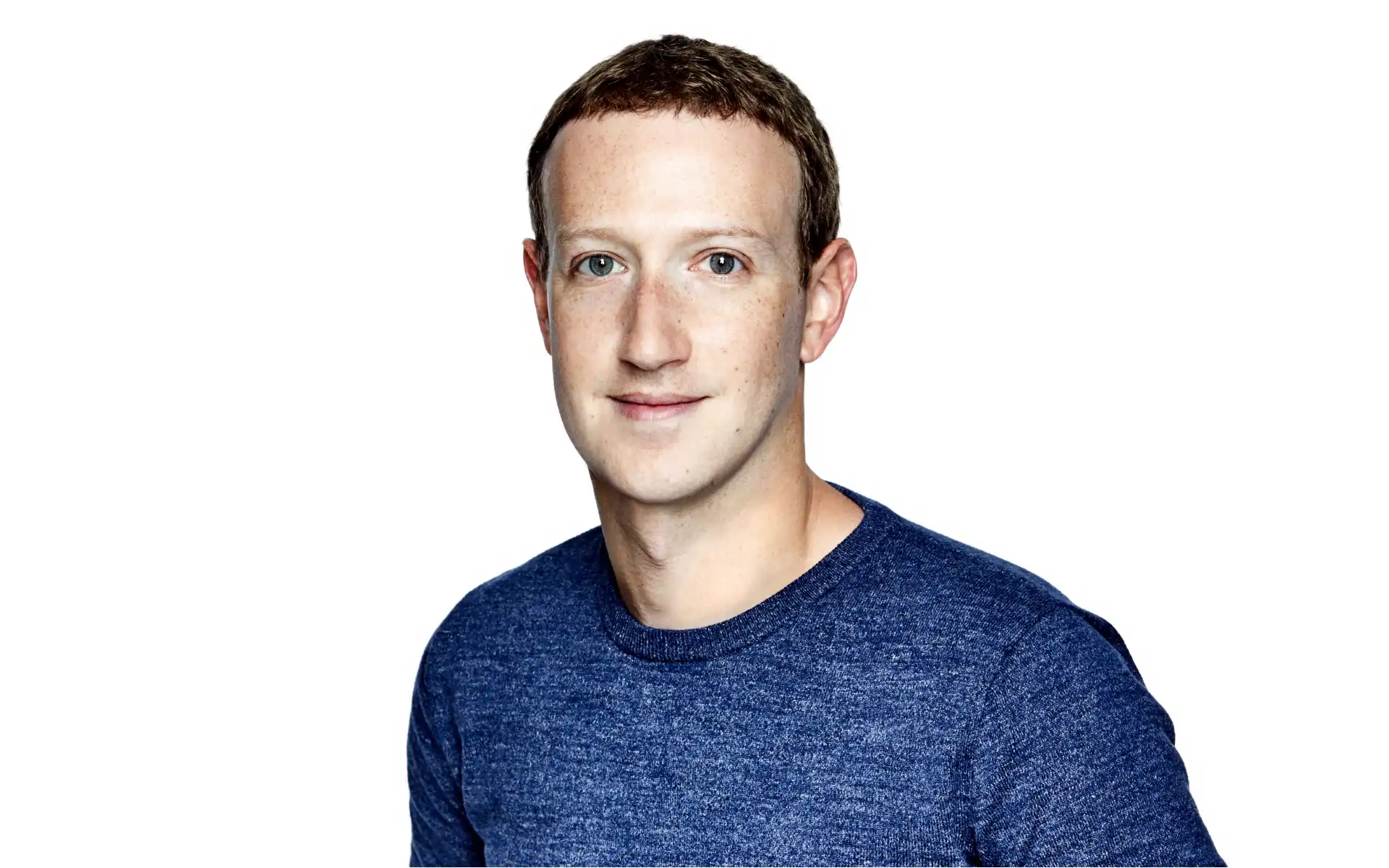

Although Meta's official announcements did not mention it, CEO Mark Zuckerberg has indicated elsewhere that government pressure influenced some of the company's past content moderation decisions.

In January 2025, according to reporting by PPC Land, Zuckerberg revealed in an interview that Biden administration officials had demanded Meta remove factually accurate content about COVID-19 vaccine side effects, with some officials allegedly using hostile tactics. "People from the Biden Administration would call up our team and scream at them and curse," Zuckerberg stated, referencing documents released through Congressional investigations.

This pressure campaign reportedly intensified after Meta refused to comply with certain takedown requests. "President Biden publicly suggested Meta was 'killing people,'" Zuckerberg recalled. Following this statement, multiple federal agencies launched investigations into the company.

In August 2024, Zuckerberg sent a letter to Jim Jordan, Chairman of the House Judiciary Committee, acknowledging that senior officials in the Biden Administration had pressured Meta during 2021. According to Zuckerberg, these officials pressed the company to censor certain COVID-19 content, including humor and satire.

Industry impact

Meta's policy shift comes amid broader industry discussions about content moderation, free speech, and platform responsibility. The abandonment of third-party fact-checking in favor of a community-driven approach represents one of the most significant reversals of content moderation policies by a major tech platform in recent years.

The shift aligns with Zuckerberg's stated belief in the importance of free expression. In his 2019 Georgetown University speech, cited by Kaplan in the January announcement, Zuckerberg argued that "free expression has been the driving force behind progress in American society and around the world" and that inhibiting speech "often reinforces existing institutions and power structures instead of empowering people."

For marketing professionals, these changes signal a potential shift in how content is moderated across social media platforms. The move away from centralized fact-checking toward community-based moderation could influence how brands approach potentially controversial topics or claims in their content.

Removing the distribution penalties that accompanied fact checks may also affect content reach and engagement metrics, allowing a wider range of viewpoints to reach audiences without being algorithmically demoted.

Looking ahead

Meta's transition to Community Notes will begin with a gradual rollout in the United States before potentially expanding to other regions. According to Meta, once the program is fully operational, the company "won't write Community Notes or decide which ones show up. They are written and rated by contributing users."

The system will require agreement between users with diverse perspectives to help prevent biased ratings, similar to X's approach. Meta has stated it intends to be transparent about how different viewpoints inform the Notes displayed in its apps.

Users can currently sign up on Facebook, Instagram, and Threads for the opportunity to be among the first contributors as the program becomes available.

As Meta implements these changes, the marketing industry will be closely watching how they affect content distribution, user engagement, and the overall information ecosystem across the company's platforms.

Timeline of Meta's content moderation evolution

- 2016: Meta launches its independent fact-checking program following concerns about misinformation during the U.S. presidential election

- 2019: Mark Zuckerberg delivers Georgetown University speech defending free expression as a driving force behind progress

- 2021: According to Zuckerberg, Meta faces pressure from Biden administration officials regarding content moderation

- August 26, 2024: Zuckerberg sends a letter to Jim Jordan acknowledging pressure from senior Biden Administration officials to censor certain COVID-19 content

- January 7, 2025: Meta announces plans to end third-party fact-checking program and move to Community Notes model

- April 5, 2025: Joel Kaplan confirms fact-checking program will end by April 7, with Community Notes beginning to appear gradually across platforms

- April 7, 2025: Meta's fact-checking program officially ends in the United States