Meta, the parent company of Facebook, Instagram, and WhatsApp, announced on July 23, 2024, a significant expansion of its artificial intelligence assistant, Meta AI. The update introduces multilingual support, new creative tools, and integration of a more advanced AI model across Meta's suite of applications and devices. This development, which comes amid growing competition in the AI assistant market, aims to enhance user experience and accessibility across Meta's global user base.

The expansion of Meta AI reflects the rapid growth and increasing importance of AI assistants in daily digital interactions.

One of the most significant aspects of this update is the expansion of Meta AI's language capabilities. The AI assistant is now available in seven new languages: French, German, Hindi, Hindi in Romanized script, Italian, Portuguese, and Spanish. This multilingual support extends Meta AI's reach to users in 22 countries, including new markets in Latin America such as Argentina, Chile, Colombia, Ecuador, Mexico, and Peru, as well as Cameroon in Africa. The inclusion of Hindi, both in its native script and Romanized form, is particularly noteworthy as it opens up access to a vast user base in India, one of the world's largest and fastest-growing digital markets.

Another key feature of the update is the introduction of new creative tools within Meta AI. One of the most intriguing additions is the "Imagine me" feature, which is being rolled out in beta in the United States. This tool uses Meta's state-of-the-art personalization model to create images based on a user's photo and a text prompt. For example, users can ask Meta AI to imagine them as a superhero, a rockstar, or in various scenarios like surfing or on a beach vacation.

The "Imagine me" feature leverages advanced machine learning techniques, particularly in the field of generative AI. This technology has seen rapid advancements in recent years, with models like OpenAI's DALL-E and Midjourney gaining significant attention. Meta's entry into this space with a personalized approach could potentially set a new standard for AI-generated imagery in social media contexts.

In addition to personalized image generation, Meta AI now offers enhanced creative editing capabilities. Users can add or remove objects from images, make changes to specific elements, and fine-tune their AI-generated images. This feature is set to be further expanded next month with the addition of an "Edit with AI" button, allowing for more detailed adjustments to AI-generated images.

The integration of these creative tools directly into Meta's social media platforms represents a significant shift in how users can interact with and create content. According to Meta, users will be able to generate AI images within feeds, stories, comments, and messages across Facebook, Instagram, Messenger, and WhatsApp. This integration could transform the nature of social media content creation, making advanced AI tools accessible to a broad user base without requiring specialized knowledge or external applications.

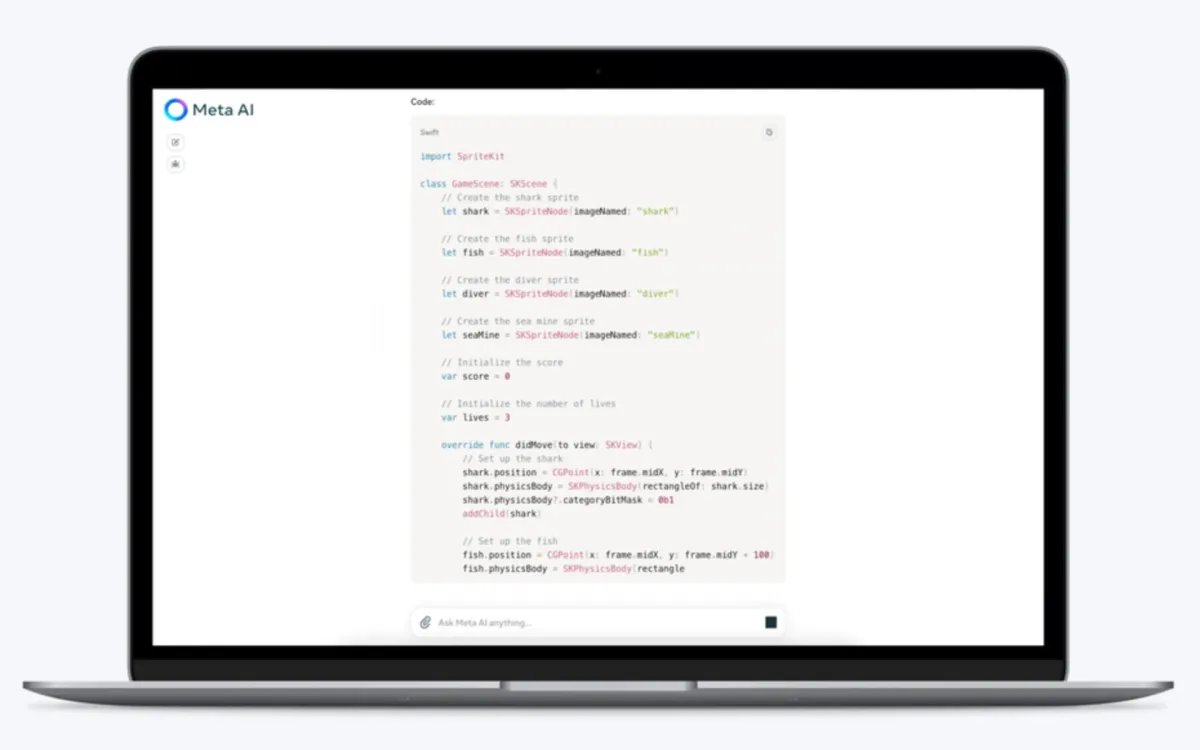

Perhaps the most technically significant aspect of the update is the integration of Meta's most advanced open-source AI model, Llama 3.1 405B (referred to below as Llama 405B), into Meta AI. This large language model, with 405 billion parameters, represents a substantial increase in capability over previous versions. According to Meta, Llama 405B's improved reasoning capabilities enable Meta AI to handle more complex queries, particularly in areas such as mathematics and coding.

The integration of Llama 405B into Meta AI is part of a broader trend in the AI industry towards increasingly large and capable language models. For context, OpenAI's GPT-3, which garnered significant attention upon its release in 2020, had 175 billion parameters. The rapid scaling of these models reflects the AI community's pursuit of more sophisticated and human-like language understanding and generation capabilities.
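To put the two parameter counts quoted above side by side, the following is a minimal back-of-the-envelope sketch. The 2 bytes per parameter figure is an assumption (typical of 16-bit weights), not something stated by Meta or OpenAI, and the function names are purely illustrative.

```python
# Parameter counts as quoted in the article.
GPT3_PARAMS = 175_000_000_000
LLAMA_405B_PARAMS = 405_000_000_000

# Assumption (not from the article): weights stored at 16-bit precision,
# i.e. 2 bytes per parameter.
BYTES_PER_PARAM = 2


def scale_ratio(new_params: int, old_params: int) -> float:
    """How many times larger the newer model is, by parameter count."""
    return new_params / old_params


def weight_memory_gb(params: int, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Approximate size of the raw weights in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


ratio = scale_ratio(LLAMA_405B_PARAMS, GPT3_PARAMS)
print(f"Llama 405B has about {ratio:.2f}x the parameters of GPT-3")
print(f"GPT-3 weights:      ~{weight_memory_gb(GPT3_PARAMS):.0f} GB")
print(f"Llama 405B weights: ~{weight_memory_gb(LLAMA_405B_PARAMS):.0f} GB")
```

Under that assumption, the jump from 175 billion to 405 billion parameters is a little over a doubling in raw model size. Parameter count alone does not determine capability, so the ratio is best read as a rough indicator of scale rather than of quality.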

Meta's decision to make Llama 405B available as an option within Meta AI on WhatsApp and meta.ai is particularly interesting. It allows users to switch to this more advanced model for tasks that require greater reasoning capabilities. This approach of offering tiered AI capabilities could become a model for other AI assistants, balancing the need for efficient, general-purpose interactions with the ability to tackle more complex tasks when needed.

The update also extends Meta AI's reach to new platforms and devices. While the language and creative tool updates primarily affect Meta AI in web and mobile applications, the company has announced that Meta AI will be available on Ray-Ban Meta smart glasses and is set to roll out on Meta Quest virtual reality headsets in the United States and Canada next month.

On Meta Quest, Meta AI will replace the current Voice Commands system, allowing for hands-free control of the headset and providing answers to questions, real-time information, weather updates, and more. Additionally, Meta AI will integrate with the Quest's passthrough technology, enabling users to ask questions about their physical surroundings while wearing the headset. This feature, which combines computer vision with natural language processing, represents a significant step towards more immersive and context-aware AI assistants.

The timing of this update is significant, coming at a moment when competition in the AI assistant market is intensifying. Major tech companies like Google, Apple, and Amazon have been continuously improving their own AI assistants, while new entrants like Anthropic's Claude and OpenAI's ChatGPT have garnered significant attention for their advanced language capabilities.

Meta's approach with this update appears to be twofold: expanding accessibility through multilingual support and new markets, while also pushing the boundaries of what's possible with AI through advanced models and creative tools. This strategy could help Meta differentiate its AI offerings in a crowded market, particularly by leveraging its vast social media user base and emerging AR/VR technologies.

However, the expansion of AI capabilities, particularly in areas like personalized image generation and integration with AR/VR devices, also raises important questions about privacy, data usage, and the potential for misuse. As AI becomes more deeply integrated into social media and personal devices, concerns about data protection, consent, and the spread of AI-generated misinformation are likely to become more prominent.

Meta has not provided detailed information about how it plans to address these concerns, beyond mentioning that the "Imagine me" feature is starting as a beta rollout in the US. As the feature expands, it will be crucial for Meta to implement robust safeguards and transparent policies regarding the creation and use of AI-generated personal images.

In conclusion, Meta's July 23, 2024, update to Meta AI represents a significant step in the evolution of AI assistants. By expanding language support, introducing advanced creative tools, and integrating more powerful AI models, Meta is positioning its AI assistant as a central component of its ecosystem. The update reflects broader trends in the AI industry towards more capable, versatile, and accessible AI tools.

As these technologies continue to develop and integrate more deeply into daily digital interactions, they have the potential to transform how users create content, interact with devices, and access information. However, this transformation also brings challenges related to privacy, ethics, and the changing nature of digital communication. The success of Meta AI and similar technologies will depend not only on their technical capabilities but also on how well they address these broader societal concerns.

Key facts

Announcement Date: July 23, 2024

New Languages Added: French, German, Hindi (including Romanized), Italian, Portuguese, Spanish

Total Countries with Access: 22

New Creative Feature: "Imagine me" for personalized image generation

Advanced AI Model Integration: Llama 405B (405 billion parameters)

New Platforms: Ray-Ban Meta smart glasses, Meta Quest VR headsets (coming next month)

Key Challenges: Privacy concerns, potential for misuse of AI-generated content