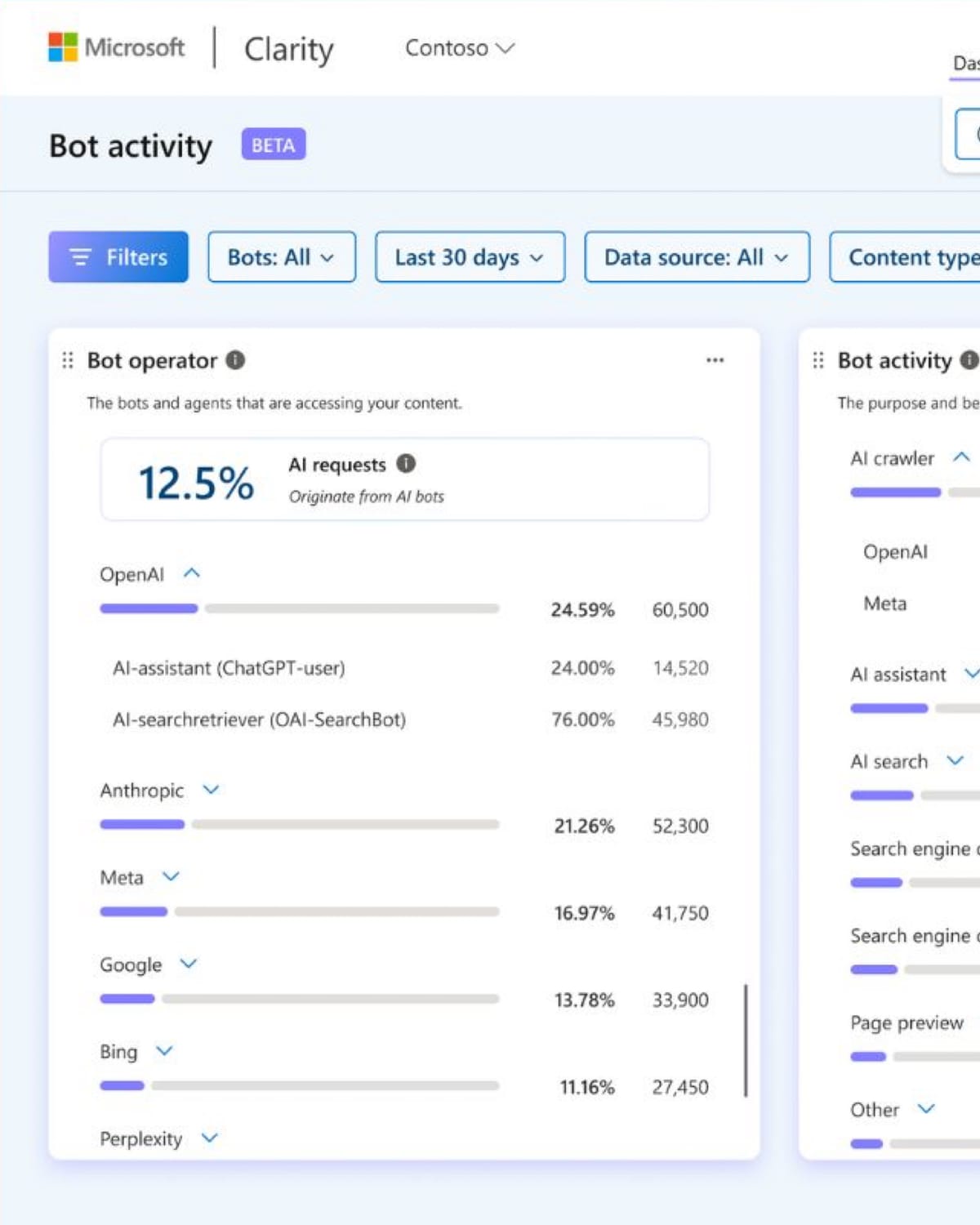

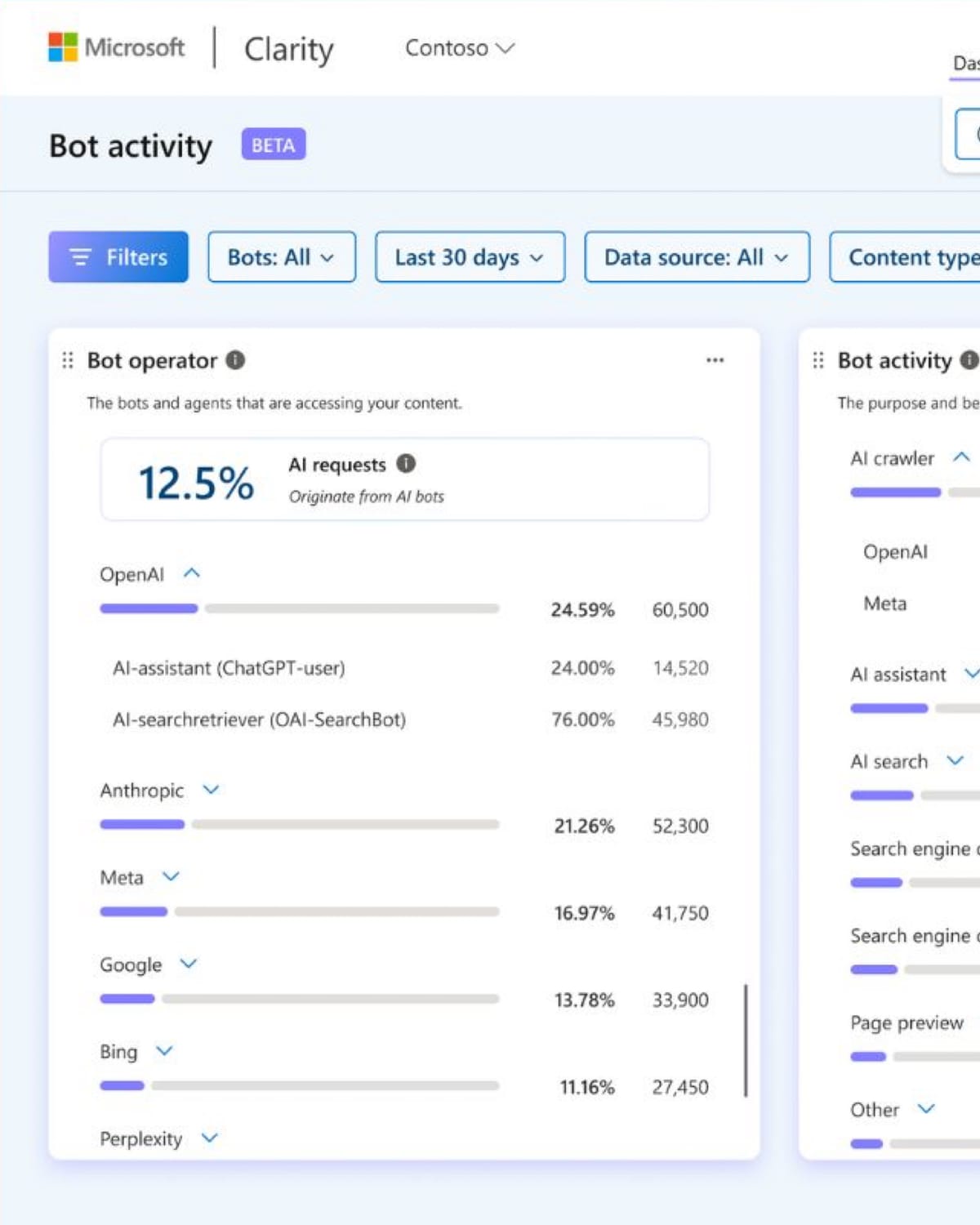

Microsoft today introduced Bot Activity tracking capabilities within its Clarity analytics platform, providing website operators with visibility into automated systems accessing their content. The dashboard surfaces patterns in how artificial intelligence crawlers, search bots, and automated agents interact with web properties before any grounding, citation, or referral activity occurs.

The announcement, documented in Microsoft Learn on January 21, 2026, addresses what the company describes as the earliest observable signal in the AI content lifecycle. Bot activity has evolved from routine pre-search operations into indicators that reveal which AI systems request content and at what volume. This upstream visibility precedes downstream signals including traffic referrals and citation patterns that existing analytics tools already measure.

According to Microsoft's documentation, the Bot Activity dashboard shows the proportion of site traffic generated by AI bots, identifies which systems create requests, and displays which pages receive the highest volume of automated access. The system distinguishes between productive bot activity and what Microsoft characterizes as extractive or low-value behavior that creates infrastructure overhead without contributing to downstream visibility.

The platform operates through server-side log collection enabled via supported content delivery network integrations. Microsoft's onboarding documentation lists CDN providers compatible with the system, though specific provider names require users to connect their infrastructure to view available options. Once connected, Clarity processes logs to surface crawl activity within the AI Visibility section of the analytics dashboard.

Implementation may carry costs for website operators. According to Microsoft's documentation, connecting to server or CDN integrations might result in additional charges depending on provider pricing, cloud platform selection, traffic volume, and regional configuration. The documentation advises users to review provider pricing structures before proceeding, noting that any costs are incurred through the infrastructure provider and billed directly to the customer rather than through Microsoft.

WordPress projects receive automated integration through the latest Clarity WordPress plugin, eliminating manual setup requirements for sites using that content management system. This streamlined approach contrasts with the configuration complexity faced by sites using custom CDN implementations or alternative infrastructure providers.

The Bot operator metric displays which AI platforms and operators request sites most frequently. By default, the view focuses on AI-related bots and crawlers, though users can toggle to view all identified automated agent activity. This metric quantifies how often AI systems access content for indexing, retrieval preparation, embedding generation, or other AI-driven workflows.

Microsoft positions this signal as valuable specifically because it represents the earliest observable interaction between content and AI systems. The activity occurs before grounding, where AI systems reference specific source material, before citation, where AI responses attribute information to sources, and before referral traffic, where users click through to websites from AI-generated responses.

The breakdown shows bot requests by operator and individual bot, helping organizations identify which specific AI systems access their content and where automated activity runs high without producing clear outcomes. This upstream visibility enables comparison with midstream signals such as citations and downstream signals including traffic insights to assess whether bot behavior delivers value, creates inefficiencies, or extracts content without return.
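The operator and bot breakdown described above can be approximated from raw request logs. The following is a minimal sketch, not Clarity's implementation: the `KNOWN_BOTS` table, the `identify` helper, and the sample user-agent strings are illustrative assumptions, and simple user-agent matching will miss crawlers that spoof their identity.

```python
from collections import Counter

# Illustrative (assumed) mapping of user-agent tokens to (operator, bot).
# A real deployment would need a maintained, verified list.
KNOWN_BOTS = {
    "GPTBot": ("OpenAI", "GPTBot"),
    "ClaudeBot": ("Anthropic", "ClaudeBot"),
    "PerplexityBot": ("Perplexity", "PerplexityBot"),
}


def identify(user_agent):
    """Return (operator, bot) if a known token appears in the user agent, else None."""
    lowered = user_agent.lower()
    for token, operator_and_bot in KNOWN_BOTS.items():
        if token.lower() in lowered:
            return operator_and_bot
    return None


def requests_by_bot(user_agents):
    """Count requests per (operator, bot) pair, ignoring unrecognized agents."""
    counts = Counter()
    for user_agent in user_agents:
        match = identify(user_agent)
        if match is not None:
            counts[match] += 1
    return counts


sample_log = [
    "Mozilla/5.0 (compatible; GPTBot/1.0)",
    "GPTBot",
    "ClaudeBot/1.0",
    "Mozilla/5.0 (X11; Linux x86_64) Firefox/120.0",
]
breakdown = requests_by_bot(sample_log)
```

Sorting `breakdown.most_common()` would give a ranking of the most frequent requesters, similar in spirit to the Bot operator view.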

Microsoft documented how AI assistants are reshaping website traffic patterns in December 2025 research showing AI referrals grew 155% over eight months. That analysis examined traffic from ChatGPT, Microsoft Copilot, Google Gemini, and similar platforms after they generated referrals to source websites. The new Bot Activity dashboard extends visibility earlier in the content lifecycle to show which AI systems access content regardless of whether they ultimately generate citations or traffic.

The AI request share metric shows the percentage of total requests originating specifically from AI bots, measured against both human and automated traffic. This context helps distinguish between sites where AI bot activity represents minimal background access and those where automated requests account for significant portions of overall traffic load.

Because the metric measures AI bot activity against total traffic volume, it provides proportional rather than absolute indicators of automated access patterns. A high AI request share value indicates that automated access represents a substantial portion of overall traffic, potentially creating infrastructure demands that warrant capacity planning adjustments or bot management policies.

Microsoft emphasizes this metric represents the earliest observable signal of AI interaction with content. When combined with grounding and citation metrics that track how AI systems reference or attribute content, the data helps evaluate whether AI-driven access contributes to downstream visibility or value rather than merely consuming bandwidth and server resources.

The Bot activity metric groups crawler requests by the primary purpose of systems accessing content. Categories distinguish between different types of automated behavior observed in server-side logs. Some systems crawl content to support search and discovery functionality. Others access pages to power AI assistants, developer tools, or data services.

These purpose-based categories help explain why automated traffic occurs rather than just quantifying how much exists. Understanding the distribution across activity categories enables organizations to distinguish between crawling that may contribute to downstream visibility and crawling that primarily creates infrastructure load without proportional benefits.

This insight becomes especially valuable when combined with grounding and citation metrics to evaluate whether AI-driven access translates into meaningful outcomes. The Bot Activity dashboard provides context for assessing whether specific categories of automated traffic correlate with eventual citations, referrals, or other forms of attribution that might justify the infrastructure costs of serving bot requests.

The Path requests metric highlights which pages and resources receive the highest numbers of automated requests. Requests aggregate at the path level and include multiple content types. HTML pages represent the primary content delivery format. Images contribute to multimodal AI training data requirements that have driven what Decodo's September 2025 research characterized as dramatic growth in data collection from video and social platforms.

JSON endpoints provide structured data that automated systems consume for various purposes. XML files deliver sitemaps and RSS feeds that crawlers use for discovery and indexing. Other assets including CSS stylesheets, JavaScript files, and font resources round out the resource types that bots request when accessing web properties.
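As a rough illustration of path-level aggregation by content type, the sketch below buckets request paths using their file extension. The extension table and the rule that extensionless paths count as HTML pages are assumptions made for this example; Microsoft's documentation does not describe how Clarity classifies resources.

```python
import posixpath
from collections import Counter
from urllib.parse import urlsplit

# Assumed extension-to-category table for illustration only.
EXTENSION_TYPES = {
    ".html": "HTML",
    ".htm": "HTML",
    ".png": "Image",
    ".jpg": "Image",
    ".jpeg": "Image",
    ".gif": "Image",
    ".webp": "Image",
    ".json": "JSON",
    ".xml": "XML",
}


def content_type_for(request_target):
    """Classify a request target (path plus optional query) by file extension."""
    path = urlsplit(request_target).path
    extension = posixpath.splitext(path)[1].lower()
    if extension == "":
        # Assumption: extensionless paths such as /blog/post are HTML pages.
        return "HTML"
    return EXTENSION_TYPES.get(extension, "Other")


def requests_by_type(request_targets):
    """Count requests per content-type category."""
    return Counter(content_type_for(target) for target in request_targets)


sample_targets = ["/blog/post", "/feed.xml?page=2", "/api/items.json", "/app.js", "/logo.png"]
type_counts = requests_by_type(sample_targets)
```

CSS, JavaScript, and font files fall into the "Other" bucket here, mirroring the grouping described in the article.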

These path-level patterns help identify specific areas of elevated AI interest, potential infrastructure load concentrations, or inefficient bot behavior targeting resources that provide limited value. When combined with grounding and citation insights, path requests help distinguish between content that receives frequent bot access and content that ultimately contributes to AI-generated answers and visibility.

The implementation reflects patterns Microsoft established through prior Clarity enhancements addressing AI's impact on web analytics. The platform introduced AI channel groups on August 29, 2025, enabling tracking of traffic from ChatGPT, Claude, Gemini, Copilot, and Perplexity as distinct sources. That feature addressed the downstream portion of the AI content lifecycle by measuring referral traffic after AI systems directed users to source websites.

The Bot Activity dashboard extends this framework earlier in the lifecycle to show which AI systems access content regardless of whether they generate user referrals. This distinction matters because AI systems may crawl and index content for retrieval augmented generation or other purposes without necessarily citing sources or directing traffic to original publishers.

The differentiation addresses what industry observers characterize as a fundamental challenge in the AI content economy. Publishers invest resources creating content that AI systems consume for training, indexing, and response generation. Infrastructure costs rise to serve bot traffic, yet the relationship between bot access and eventual value through citations, referrals, or other forms of attribution remains unclear without comprehensive measurement systems.

Microsoft positions the Bot Activity dashboard as addressing this visibility gap. The data displayed represents real server-side logs collected through supported CDN and server integrations. The insights reflect automated access to content by AI systems and other verified bots. However, Microsoft emphasizes that bot activity represents requests made to sites and does not indicate that content was retrieved, grounded, cited, or surfaced in AI-generated responses.

These metrics reflect observed bot behavior and may not translate into traffic, attribution, or downstream outcomes. This caveat distinguishes between measuring bot presence, which the dashboard quantifies, and measuring bot impact, which requires correlation with other signals including grounding data, citation tracking, and referral analytics.

The broader industry context includes persistent tensions between content creators and AI companies over data access. Research published December 31, 2025, showed that news publishers who blocked AI crawlers experienced 23% traffic declines compared to those maintaining open access. This counterintuitive finding suggests complex relationships between bot access policies and eventual traffic outcomes.

Cloudflare documented in July 2024 that AI bots accessed approximately 39% of the top one million internet properties using its services in June 2024. Only 2.98% of these properties had implemented measures to block or challenge such requests, indicating what Cloudflare characterized as a potential gap in awareness or action against AI bot activity.

The measurement challenges extend beyond simple traffic counting to encompass attribution complexities. Traditional analytics assumes that page views correspond to human consumption that might generate advertising impressions, conversions, or other monetizable outcomes. Bot traffic consumes resources without generating these traditional value indicators, creating what some publishers describe as parasitic relationships where AI companies extract content value without proportional compensation.

Microsoft's Bot Activity dashboard does not resolve these economic tensions. The system provides visibility into which AI operators access content and how frequently, but organizations must determine independently whether observed bot behavior justifies infrastructure investments or warrants implementation of blocking mechanisms through robots.txt files or other access control systems.

The technical architecture mirrors infrastructure patterns Microsoft deployed for prior Clarity enhancements. The platform added Google Ads integration on January 13, 2025, connecting campaign performance data with behavioral tracking capabilities. That integration enabled organizations to view detailed session recordings of user navigation after ad clicks alongside heatmaps visualizing interaction patterns.

Server-side log processing powers the Bot Activity dashboard similarly to how session recording captures client-side behavioral data. The CDN integration requirement reflects technical necessities for accessing request logs that typically remain invisible to client-side analytics implementations. JavaScript-based tracking systems that power most web analytics platforms cannot observe bot behavior that does not execute JavaScript or interact with client-side tracking code.

The WordPress plugin exception reflects Microsoft's strategy of reducing implementation barriers for sites using popular content management systems. WordPress powers approximately 43% of websites globally according to statistics Microsoft cited when announcing simplified WordPress setup on July 30, 2025. Automatic integration for this user base eliminates configuration complexity that otherwise requires coordination between marketing and engineering teams.

The cost considerations Microsoft highlights reflect realities of server-side data processing at scale. CDN providers typically charge based on request volume, bandwidth consumption, or compute time allocated to log processing and forwarding. Organizations with high traffic volumes face proportionally higher infrastructure costs when enabling features requiring real-time log collection and processing.

Microsoft's documentation emphasizes that costs are incurred through infrastructure providers rather than through Clarity itself. This clarification distinguishes between Clarity's free analytics offering, which the company has consistently maintained without traffic limitations since its 2018 launch, and infrastructure costs that providers bill for services Clarity depends upon but does not directly control.

The primary metric reflecting bot activity's share of total requests provides context that pure volume statistics lack. A site receiving 10,000 bot requests daily faces different implications depending on whether total traffic volume measures 50,000 requests or 10 million requests. The proportional view enables meaningful comparisons across sites with vastly different scale.
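The arithmetic behind that comparison is straightforward. The sketch below assumes the share is simply bot requests divided by total requests, expressed as a percentage; the function name `request_share_percent` is hypothetical and not part of any Clarity API.

```python
def request_share_percent(bot_requests, total_requests):
    """Return bot requests as a percentage of all requests (0.0 when there is no traffic)."""
    if total_requests == 0:
        return 0.0
    return 100 * bot_requests / total_requests


# The same 10,000 daily bot requests on two sites of very different size.
small_site_share = request_share_percent(10_000, 50_000)      # 20.0
large_site_share = request_share_percent(10_000, 10_000_000)  # 0.1
```

On the smaller site, bots account for one in five requests; on the larger site, they account for one in a thousand.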

High bot activity proportions signal potential infrastructure risks including degraded performance for human visitors, increased hosting costs, or capacity constraints during traffic spikes. These operational concerns exist independently of questions about whether bot activity produces downstream value through citations or referrals.

Microsoft's documentation suggests using Bot Activity insights alongside other analytics to evaluate comprehensive patterns. Organizations can compare bot access volume with eventual citation counts to assess return on infrastructure investment. Sites with high bot activity but low citation rates might conclude that AI systems extract content without providing attribution benefits that could drive traffic or brand visibility.

Conversely, sites with high bot activity that correlates with strong citation rates and referral traffic might justify infrastructure investments in serving bot requests as worthwhile costs for maintaining AI platform visibility. The Bot Activity dashboard provides the upstream component of this analysis framework without prescribing specific business decisions.
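One way to frame that comparison is a per-path ratio of citations to bot requests. This is a hypothetical sketch: the input dictionaries, the function name, and the "citations per 1,000 bot requests" measure are all assumptions for illustration, and the article does not describe how Clarity exposes citation data.

```python
def citations_per_thousand_requests(bot_requests, citations):
    """Map each path to its citations per 1,000 bot requests.

    bot_requests: dict of path -> number of bot requests
    citations:    dict of path -> number of observed citations
    Paths with zero bot requests are skipped to avoid division by zero.
    """
    ratios = {}
    for path, request_count in bot_requests.items():
        if request_count == 0:
            continue
        ratios[path] = 1000 * citations.get(path, 0) / request_count
    return ratios


# Made-up numbers purely to show the calculation.
ratios = citations_per_thousand_requests(
    bot_requests={"/guide": 2000, "/pricing": 500},
    citations={"/guide": 10},
)
```

A path with heavy bot access and a ratio near zero would be a candidate for the "extractive" pattern the article describes, though any threshold is a business judgment.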

The competitive context includes alternative approaches to AI crawler management. Cloudflare expanded its 402 payment protocol for AI crawler communication on August 28, 2025, enabling customizable HTTP 402 responses for content creators seeking crawler monetization. That framework allows sites to charge AI companies for content access rather than simply allowing or blocking automated traffic.

Microsoft's Bot Activity dashboard does not include monetization functionality. The system provides visibility and measurement capabilities that organizations might use to inform access policies, but actual enforcement mechanisms remain separate from the analytics infrastructure. Organizations seeking to block specific crawlers must implement robots.txt directives or web application firewall rules independently of Clarity's measurement systems.
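For sites that do choose to restrict a crawler, a robots.txt policy can be checked locally before deployment. The sketch below uses Python's standard `urllib.robotparser`; the policy text and the `example.com` URL are illustrative assumptions. Note that robots.txt is advisory, so it only affects crawlers that choose to honor it.

```python
from urllib.robotparser import RobotFileParser

# Example policy (assumed): block OpenAI's GPTBot everywhere, allow everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if the given robots.txt text permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


gptbot_allowed = is_allowed(ROBOTS_TXT, "GPTBot", "https://example.com/article")
browser_allowed = is_allowed(ROBOTS_TXT, "Mozilla/5.0", "https://example.com/article")
```

With this policy, `gptbot_allowed` is `False` and `browser_allowed` is `True`. Enforcement against crawlers that ignore the file would require firewall rules or similar controls, as the article notes.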

The December 2025 research showing AI agents masquerading as humans to bypass website defenses complicates bot detection strategies. DataDome researchers documented how xAI's Grok agent triggered 16 requests from 12 IP addresses using spoofed user agents when asked to fetch a single webpage. This evasive behavior challenges identification systems that rely on user agent strings or other easily manipulated request characteristics.

Microsoft's approach through Clarity emphasizes identifying verified bots through analysis of multiple signals rather than relying exclusively on self-reported bot identities. The machine learning models Cloudflare described in similar contexts analyze behavior patterns, request frequencies, and other characteristics that distinguish automated systems from human browsers regardless of claimed identity.

The Bot Activity dashboard represents Microsoft's latest expansion of Clarity's analytical capabilities within what has become an increasingly competitive web analytics landscape. The company has systematically added features addressing specific industry challenges including AI channel group tracking for referral traffic, Google Ads integration for campaign analysis, and WordPress simplification for reduced implementation barriers.

Each enhancement targets distinct aspects of the modern web analytics environment. The AI channel groups addressed downstream traffic measurement after AI platforms direct users to websites. The Bot Activity dashboard addresses upstream measurement before AI systems make decisions about whether to cite, reference, or generate traffic to source content. Together, these capabilities provide visibility across the full AI content lifecycle from initial bot access through eventual user referrals.

The technical implementation timeline remains unclear from available documentation. Microsoft's January 21 announcement provides setup instructions and feature descriptions but does not specify when the Bot Activity dashboard became generally available or whether it remains in beta testing. The documentation language suggesting users "can now" access features implies general availability, though Microsoft often deploys features gradually across its user base rather than enabling universal access simultaneously.

Organizations considering Bot Activity implementation face several evaluation criteria. Sites with high traffic volumes and substantial infrastructure costs have stronger incentives to understand bot behavior patterns than smaller sites where bot traffic represents negligible resource consumption. Publishers and content creators whose business models depend on traffic monetization need visibility into whether bot activity correlates with eventual referrals or operates purely extractively.

E-commerce sites, SaaS platforms, and application providers may find bot activity metrics less immediately relevant than publishers because their monetization models depend less directly on content consumption patterns. However, infrastructure planning benefits from understanding total request volumes regardless of business model, particularly for organizations operating at scale where bot traffic consumes meaningful proportions of server capacity.

The timing coincides with what Microsoft's December 2025 research characterized as the "Agentic Web" era, where content increasingly reaches audiences through AI intermediaries rather than direct human navigation. This structural shift affects how publishers and brands approach content distribution, measurement, and monetization strategies.

Traditional web analytics developed around assumptions that page views corresponded to human readers who might see advertisements, complete transactions, or engage with content in monetizable ways. The rise of bot traffic that consumes content for indexing, training, or retrieval purposes without generating these traditional value indicators requires new measurement frameworks and business model adaptations.

Microsoft positions Clarity's Bot Activity dashboard as addressing these measurement needs through visibility into which AI systems access content, how frequently they make requests, and which pages or resources attract the most automated attention. The system does not solve underlying business model challenges but provides data organizations can use to make informed decisions about bot management policies and infrastructure investments.

Timeline

- June 29, 2024: Cloudflare introduced a feature to block AI scrapers, empowering publishers to protect content from being used to train large language models without permission.

- July 3, 2024: Cloudflare revealed AI bots accessed approximately 39% of top one million internet properties using their services in June 2024.

- August 3, 2024: Study showed 35.7% of the top 1,000 websites block OpenAI's GPTBot, representing a seven-fold increase from August 2023.

- August 29, 2025: Microsoft Clarity introduced AI channel groups for traffic analytics, enabling tracking of traffic from ChatGPT, Claude, Gemini, Copilot, and Perplexity.

- August 29, 2025: Cloudflare announced AI Crawl Control expansion enabling customizable HTTP 402 responses for AI crawler monetization.

- September 9, 2025: Decodo released data showing TikTok emerged as most scraped website with 321% traffic growth as video platforms represent 38% of all scraping activity.

- October 24, 2025: Perplexity denied training AI models as Cloudflare documented stealth crawlers, showing 20-25 million daily requests from declared crawler and 3-6 million from undeclared crawler.

- December 6, 2025: DataDome researcher documented Grok masquerading as humans, triggering 16 requests from 12 IPs using spoofed user agents for single webpage fetch.

- December 18, 2025: Microsoft Clarity published research examining how AI assistants reshape website traffic patterns, showing traffic from AI platforms exploded 155% over eight months.

- December 31, 2025: Research showed news publishers who blocked AI crawlers experienced 23% traffic declines compared to those maintaining open access.

- January 21, 2026: Microsoft Clarity documentation published detailing Bot Activity dashboard capabilities for tracking automated systems accessing website content.

Summary

Who: Microsoft Clarity announced the Bot Activity dashboard for website operators, publishers, brands, marketing professionals, and organizations seeking visibility into automated systems accessing their content. The feature targets users needing infrastructure planning insights and bot management decision support.

What: The Bot Activity dashboard provides visibility into how AI systems and automated agents access website content through metrics including bot operator identification, AI request share percentages, bot activity purpose categorization, and path-level request tracking. The system operates through server-side log collection via supported CDN integrations and displays data representing the earliest observable signal in the AI content lifecycle.

When: Microsoft Learn published documentation on January 21, 2026, detailing the Bot Activity dashboard capabilities and setup procedures. The documentation does not specify exact general availability timing but uses language suggesting current accessibility for users with compatible infrastructure.

Where: The dashboard operates within Microsoft Clarity's AI Visibility section, accessible through Settings under AI Visibility for users who connect supported server or CDN integrations. WordPress projects receive automatic integration through the latest Clarity WordPress plugin without manual configuration requirements.

Why: Bot activity has evolved from a routine pre-search operation into the earliest observable signal in the AI content lifecycle. Sustained high-volume bot activity introduces infrastructure overhead, degrades performance, and creates operational risks, especially when there is limited visibility into whether that activity produces downstream value through citations, referrals, or other attribution that might justify infrastructure costs. The dashboard helps organizations understand the scale and sources of automated access, identify content that attracts heavy bot attention, distinguish productive from extractive bot activity, and make informed decisions about bot management, performance planning, and content accessibility.