
Microsoft last week launched a new robots.txt tester tool. It lets webmasters analyse their robots.txt file and highlights issues that would prevent URLs from being crawled by Bing and other search engine robots.

robots.txt is a file at the root of a domain or subdomain that tells robots (also called crawlers or spiders) which sections of a website should not be processed or scanned.
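To make this concrete, here is a minimal sketch using Python's standard `urllib.robotparser` module. The robots.txt content, the `example.com` URLs and the `otherbot` crawler name are made up for illustration; none of them come from Microsoft's announcement.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: bingbot
Disallow: /drafts/
"""

parser = RobotFileParser()
parser.parse(SAMPLE_ROBOTS_TXT.splitlines())


def is_allowed(user_agent, url):
    """Return True if the sample rules let `user_agent` fetch `url`."""
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for agent in ("bingbot", "otherbot"):
        for path in ("/drafts/post.html", "/private/report.html"):
            url = "https://example.com" + path
            print(agent, path, is_allowed(agent, url))
```

A crawler that has its own `User-agent` group follows only that group, so in this sample `bingbot` is kept out of `/drafts/` but is not bound by the `/private/` rule that applies to every other crawler.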

Microsoft says that the growing number of search engines and parameters has forced webmasters to hunt for their robots.txt file among the millions of folders on their hosting servers, edit it without guidance, and finally scratch their heads when the unwanted crawler still persists.

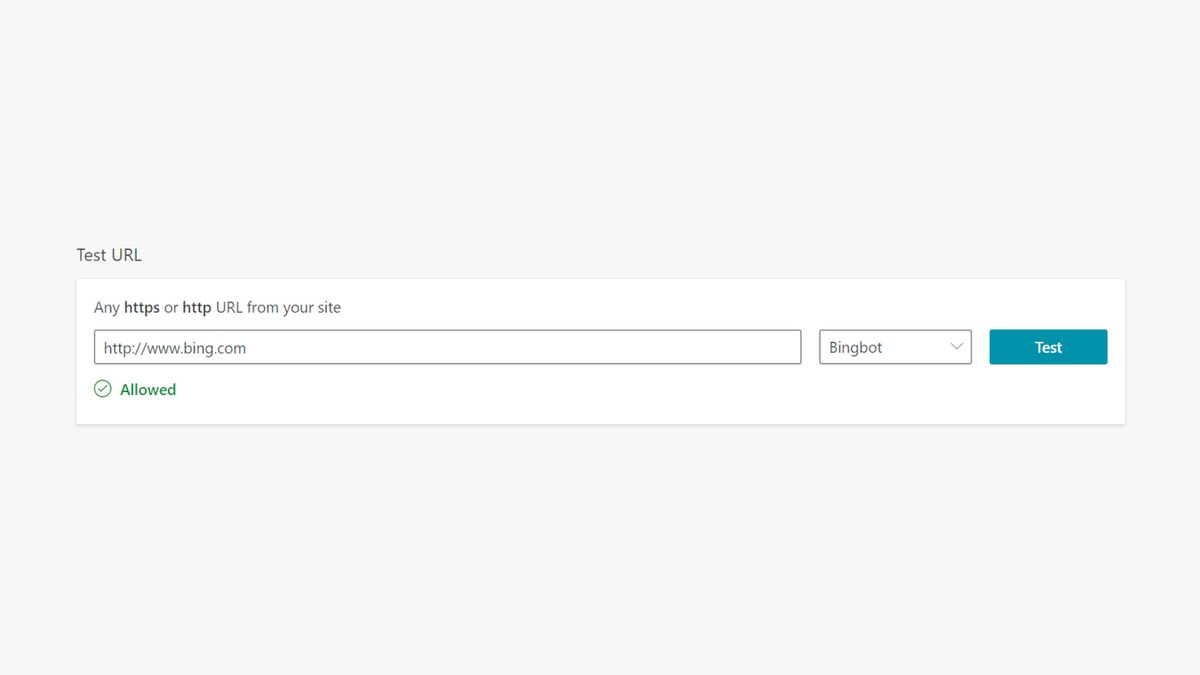

With the new robots.txt tester, webmasters can submit a URL; the tool checks the robots.txt file and reports whether the URL is allowed or blocked.
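The kind of check described here can be sketched in a few lines of Python. This is not how Microsoft's tool is implemented; it is a simplified illustration that assumes plain prefix matching (no `*` or `$` wildcards), exact user-agent names, and the "longest matching rule wins, Allow wins ties" precedence from the Robots Exclusion Protocol (RFC 9309). It also ignores query strings. The function names, sample rules and URLs are hypothetical.

```python
from urllib.parse import urlparse


def parse_groups(robots_txt):
    """Map each user-agent token to a list of (line_no, directive, path)."""
    groups = {}
    current_agents = []
    in_rules = False  # True once the current group has seen a rule line
    for line_no, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            if in_rules:  # a User-agent line after rules starts a new group
                current_agents, in_rules = [], False
            current_agents.append(value.lower())
            groups.setdefault(value.lower(), [])
        elif field in ("allow", "disallow"):
            in_rules = True
            for agent in current_agents:
                groups[agent].append((line_no, field, value))
    return groups


def check_url(robots_txt, url, user_agent):
    """Return (verdict, responsible_rule) for `url` and `user_agent`."""
    groups = parse_groups(robots_txt)
    # Use the crawler's own group if present, otherwise the "*" group.
    rules = groups.get(user_agent.lower(), groups.get("*", []))
    path = urlparse(url).path or "/"
    best = None
    for line_no, directive, rule_path in rules:
        if not rule_path or not path.startswith(rule_path):
            continue  # an empty rule value matches nothing
        key = (len(rule_path), directive == "allow")
        if best is None or key > best[0]:
            best = (key, line_no, directive, rule_path)
    if best is None:
        return ("allowed", None)  # no rule matched, so crawling is allowed
    _, line_no, directive, rule_path = best
    verdict = "allowed" if directive == "allow" else "blocked"
    return (verdict, f"line {line_no}: {directive}: {rule_path}")


# Hypothetical rules, for illustration only.
ROBOTS = """\
User-agent: *
Disallow: /admin/
Allow: /admin/help/
"""

if __name__ == "__main__":
    for page in ("/admin/settings", "/admin/help/faq", "/blog/"):
        print(page, check_url(ROBOTS, "https://example.com" + page, "bingbot"))
```

Returning the line number of the responsible rule alongside the verdict mirrors the idea of highlighting the directive that causes a block, which is what makes such a check useful for debugging rather than just a yes/no answer.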