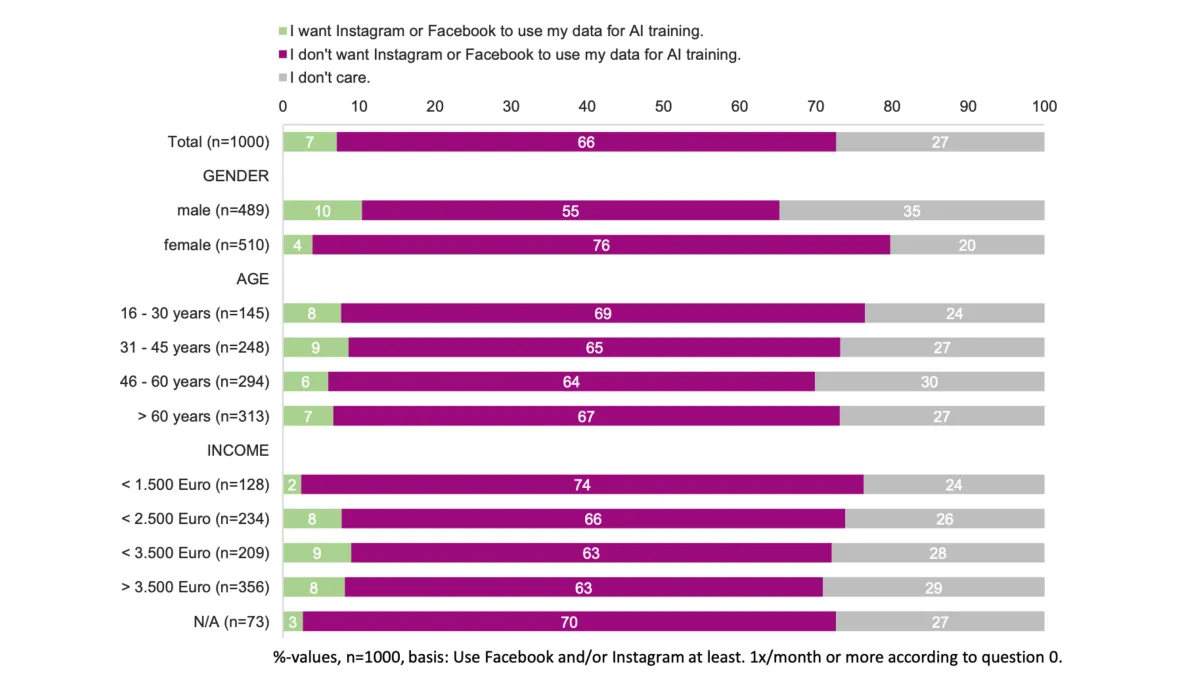

Meta's assertion of "legitimate interest" for using European personal data in AI training faces significant challenges following a new survey commissioned by privacy advocacy group noyb. The study, conducted by the Gallup Institute among 1,000 Meta users in Germany during June 2025, found only 7% actually want their personal data used for artificial intelligence training purposes.

The findings emerge as Meta continues its controversial approach to AI training data collection, launched on May 27, 2025, which bypasses traditional consent requirements under Article 6(1)(a) of the General Data Protection Regulation (GDPR). Instead, the company claims Article 6(1)(f) GDPR allows processing based on "legitimate interest" that supposedly outweighs users' fundamental privacy rights.

According to noyb, the survey reveals critical flaws in Meta's legal foundation. While 73% of respondents heard about Meta's AI training plans, 27% remained completely unaware of the change. This awareness gap translates to approximately 68 million Europeans who never realized Meta began feeding their data into AI systems, despite widespread media coverage of the controversial initiative.

Subscribe to the PPC Land newsletter ✉️ for similar stories like this one. Receive the news every day in your inbox. Free of ads. 10 USD per year.

Notification failures raise GDPR compliance questions

The survey exposes significant problems with Meta's notification strategy, which forms a cornerstone of the company's legitimate interest argument. Among Instagram users, 60% cannot remember seeing the in-app notification that Meta claims informed them about AI training. The notification appeared buried within a stream containing hundreds of routine alerts about account follows and content interactions.

Facebook users fared even worse, with 61% unable to recall receiving the relevant email notification. Meta used the subject line "Learn how we'll use your information as we improve AI at Meta" for these emails. Email clients typically display only the first few words of subject lines, making it unlikely users would open such messages.

The notification disparities become more pronounced across age groups. Only 21% of respondents aged 16-30 remember Meta informing them about the change, while 48% of users over 60 recall the notifications. These patterns suggest Meta's communication strategy failed to reach younger users, who make up a significant portion of its user base.

Max Schrems, chairman of noyb, characterized Meta's approach as deliberately inadequate. "Meta probably knows that no-one wants to provide the data from their social media accounts just so that Meta gets a competitive advantage over other AI companies that do not have access to such data," Schrems stated. "Instead of asking for consent and get 'no' as an answer, they just decided that their right to profits overrides the privacy rights of at least 274 million EU users."

Gender disparities highlight consent concerns

The research identified notable gender differences in willingness to share personal data for AI training. While 10% of men expressed support for Meta using their data, only 4% of women wanted this processing to occur. Meanwhile, 76% of female participants actively oppose AI training with their information, compared to 55% of men.

These findings complicate Meta's legitimate interest claims under GDPR Article 6(1)(f), which requires balancing controller interests against data subjects' reasonable expectations and fundamental rights. The overwhelming opposition, particularly among women, suggests users do not reasonably expect their social media activity to train commercial AI systems.

Kleanthi Sardeli, Data Protection Lawyer at noyb, emphasized the legal implications. "There is no evidence that Meta fulfilled the legal criteria of users being informed and them having reasonable expectations that Meta would use years of their social media activity for AI training," Sardeli noted.

Technical implementation challenges persist

Meta's notification problems extend beyond user awareness to technical implementation issues. The company previously argued it cannot distinguish between EU and non-EU users due to interconnected data points across its platforms. This limitation raises questions about Meta's ability to properly implement user objections or separate special category data from regular personal information.

The processing of special category data under Article 9 GDPR presents particular complexity, as this sensitive information typically requires explicit consent rather than legitimate interest justification. Meta's broad approach to data collection may inadvertently capture protected categories including health information, political opinions, and religious beliefs shared through social media posts.

Regulatory response remains fragmented

Despite the survey findings, European data protection authorities have largely remained silent on Meta's approach. While the company claims to have "engaged" with EU regulators, noyb suggests this engagement has not produced regulatory approval for the AI training program.

Several authorities have instead placed responsibility on individual users to opt out of Meta's AI training rather than requiring the company to seek affirmative consent. This approach frustrates privacy advocates who believe regulatory authorities should take more proactive enforcement action.

The Irish Data Protection Commission, which serves as Meta's lead supervisory authority under GDPR's one-stop-shop mechanism, has announced its intention to monitor implementation rather than initiate immediate enforcement proceedings. This position aligns with a May 23, 2025 ruling by the Cologne Higher Regional Court, which found Meta's AI training plans lawful under both GDPR and Digital Markets Act provisions.

Mounting legal challenges threaten significant damages

Beyond stopping data processing, noyb has indicated it may pursue class action lawsuits if Meta continues its current approach. Under GDPR provisions, affected individuals can claim non-material damages typically ranging from hundreds to thousands of euros per person.

With approximately 274 million monthly active Meta users in Europe, individual damage claims could create substantial financial exposure. If non-material damages reached €500 per user and litigation proved successful, total damages could theoretically approach €137 billion.
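The arithmetic behind that figure is simple to reproduce. The following Python sketch multiplies the two inputs given above; the function name `estimate_total_damages` is hypothetical, and the €500 per-user amount is the illustrative assumption used in this article, not a court-awarded sum.

```python
def estimate_total_damages(users: int, damages_per_user_eur: int) -> int:
    """Return the theoretical total exposure in euros (users x per-user damages)."""
    return users * damages_per_user_eur


# Inputs taken from the article; the per-user amount is an assumption.
META_EU_USERS = 274_000_000
ASSUMED_DAMAGES_EUR = 500

total = estimate_total_damages(META_EU_USERS, ASSUMED_DAMAGES_EUR)
print(f"Theoretical exposure: €{total / 1e9:.0f} billion")
```

The result scales linearly with either input, so a lower per-user award or a smaller group of claimants reduces the total proportionally.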

The survey was conducted as computer-assisted web interviews through Gallup's online panel during June 2025. The sample comprised 1,000 participants aged 16 and older from across Germany and was designed to be representative across demographic groups.
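For context on what a sample of 1,000 can support, the sketch below computes the standard 95% margin of error for a survey proportion. It assumes simple random sampling, which an online panel only approximates, and the function name `margin_of_error` is illustrative; none of this comes from the published methodology.

```python
import math


def margin_of_error(p: float, n: int, z: float = 1.96) -> float:
    """Half-width of the normal-approximation confidence interval for a proportion.

    p: observed proportion (0..1), n: sample size, z: critical value (1.96 for 95%).
    Assumes simple random sampling (an assumption, not the survey's stated design).
    """
    return z * math.sqrt(p * (1 - p) / n)


n = 1000
worst_case = margin_of_error(0.5, n)          # widest interval occurs at p = 0.5
for_seven_percent = margin_of_error(0.07, n)  # the 7% headline figure

print(f"Worst case: +/- {worst_case * 100:.1f} percentage points")
print(f"At 7%:      +/- {for_seven_percent * 100:.1f} percentage points")
```

Under these assumptions the full-sample results carry an uncertainty of roughly three percentage points at most. Subgroup figures, such as those by age or gender, rest on fewer respondents and therefore have wider margins.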

Industry implications for AI development

The survey results arrive amid broader debates about data sourcing for AI training systems. Multiple technology companies have adopted similar approaches to Meta, claiming legitimate interest for processing personal data from social platforms, search engines, and other digital services.

Privacy advocates argue these practices collectively threaten fundamental privacy principles established under GDPR. The regulation's emphasis on user control and data minimization conflicts with AI companies' desire for comprehensive training datasets containing personal information.

Recent regulatory guidance from the Dutch Data Protection Authority suggests most generative AI models currently fall short of GDPR compliance requirements. The authority's consultation document establishes five key preconditions for lawful AI development, including requirements that training data must be lawfully obtained and proper systems implemented to facilitate data subject rights.

Timeline

- May 14, 2025: noyb sends formal cease and desist letter to Meta threatening class action lawsuits

- May 23, 2025: Cologne Higher Regional Court rejects injunction against Meta's AI training plans

- May 25, 2025: Dutch Data Protection Authority publishes GDPR preconditions for generative AI development

- May 27, 2025: Meta begins using EU personal data for AI training

- June 2025: Gallup Institute conducts survey among 1,000 German Meta users

- July 4, 2025: German court rules AI training exemption saves Meta from DMA data combination penalties

- August 7, 2025: noyb releases survey findings showing only 7% support for AI training

Key terms explained

General Data Protection Regulation (GDPR): The European Union's comprehensive data protection framework that took effect in May 2018, establishing strict rules for how organizations collect, process, and store personal data. GDPR requires companies to demonstrate a lawful basis for data processing, with consent being just one of six possible legal grounds. The regulation grants individuals extensive rights over their personal information and imposes significant penalties for violations, including fines of up to €20 million or 4% of global annual turnover, whichever is higher.

Legitimate Interest: A legal basis for processing personal data under GDPR Article 6(1)(f) that allows companies to process information without consent when they can demonstrate a legitimate interest in doing so. Organizations must conduct a balancing test showing that their interests are not overridden by individuals' privacy rights and reasonable expectations. This basis cannot override fundamental rights and requires careful assessment of necessity, proportionality, and potential impact on data subjects.

AI Training: The process of feeding large datasets into machine learning algorithms to develop artificial intelligence systems capable of generating text, images, or other content. Training requires massive amounts of data to teach AI models patterns, relationships, and contextual understanding. Companies increasingly use personal information from social media, websites, and digital platforms to create more sophisticated AI capabilities, raising significant privacy and consent concerns.

Consent: Under GDPR, consent must be a "freely given, specific, informed and unambiguous" indication of a data subject's agreement to personal data processing. Valid consent requires clear affirmative action, such as ticking an unticked box or clicking a button, and must be as easy to withdraw as to give. Consent cannot be obtained through pre-ticked boxes, bundled agreements, or situations where individuals face negative consequences for refusing.

Personal Data: Any information relating to an identified or identifiable natural person, including names, email addresses, IP addresses, location data, online identifiers, and behavioral information. GDPR's definition is intentionally broad, covering both direct identifiers and data that could identify someone when combined with other information. This includes social media posts, photos, comments, likes, and interaction patterns that Meta collects across its platforms.

Data Protection Authority (DPA): Independent public bodies responsible for monitoring and enforcing GDPR compliance within their jurisdictions. Each EU member state has at least one DPA with powers to investigate complaints, conduct audits, issue binding decisions, and impose administrative fines. The Irish DPA serves as Meta's lead supervisory authority under GDPR's one-stop-shop mechanism, coordinating enforcement across all EU countries where Meta operates.

noyb (None of Your Business): European privacy advocacy organization founded by Max Schrems that challenges tech companies' data processing practices through strategic litigation and regulatory complaints. The group has filed hundreds of GDPR cases against major platforms and secured EU-wide authorization to launch collective redress actions for data protection violations. noyb operates as a non-profit organization funded by individual and institutional members.

Article 6(1)(f): The specific GDPR provision allowing data processing based on legitimate interests of the controller or third parties, except where fundamental rights and freedoms of the data subject override those interests. This legal basis requires a three-part assessment: a legitimate interest test, a necessity test, and a balancing test weighing company interests against individual privacy rights. Processing must be proportionate and individuals must have reasonable expectations about the data use.

Notification Requirements: GDPR obligations requiring organizations to provide clear, transparent information about data processing activities to individuals. Companies must inform data subjects about processing purposes, legal basis, data categories, retention periods, and individual rights through privacy notices and direct communications. Adequate notification is essential for legitimate interest claims, as individuals cannot have reasonable expectations without proper information.

Class Action Lawsuits: Legal mechanisms allowing groups of individuals with similar claims to pursue collective litigation against defendants. Under European collective redress frameworks, qualified organizations like noyb can represent affected users in data protection cases seeking injunctive relief and compensation. Unlike the US class action system, European systems typically restrict collective actions to non-profit organizations and require opt-in participation from affected individuals.

Summary

Who: Privacy advocacy group noyb commissioned the Gallup Institute to survey 1,000 Meta users in Germany about their attitudes toward AI training data usage.

What: The survey revealed that only 7% of users want Meta to use their personal data for AI training, while 66% actively oppose such processing and 27% remain indifferent.

When: The study was conducted in June 2025, approximately one month after Meta began using European personal data for AI training on May 27, 2025.

Where: The research focused on Germany but has implications for Meta's 274 million users across the European Union who are subject to the same AI training policies.

Why: The survey aimed to test Meta's claim of "legitimate interest" under GDPR Article 6(1)(f), which requires balancing company interests against users' reasonable expectations and fundamental rights to privacy.