OpenAI CEO Sam Altman deflected repeated questions about the company's financial commitments during a November 1, 2025 podcast appearance, responding to concerns about $1.4 trillion in compute spending with dismissive remarks about selling shares rather than providing substantive explanations of the business model.

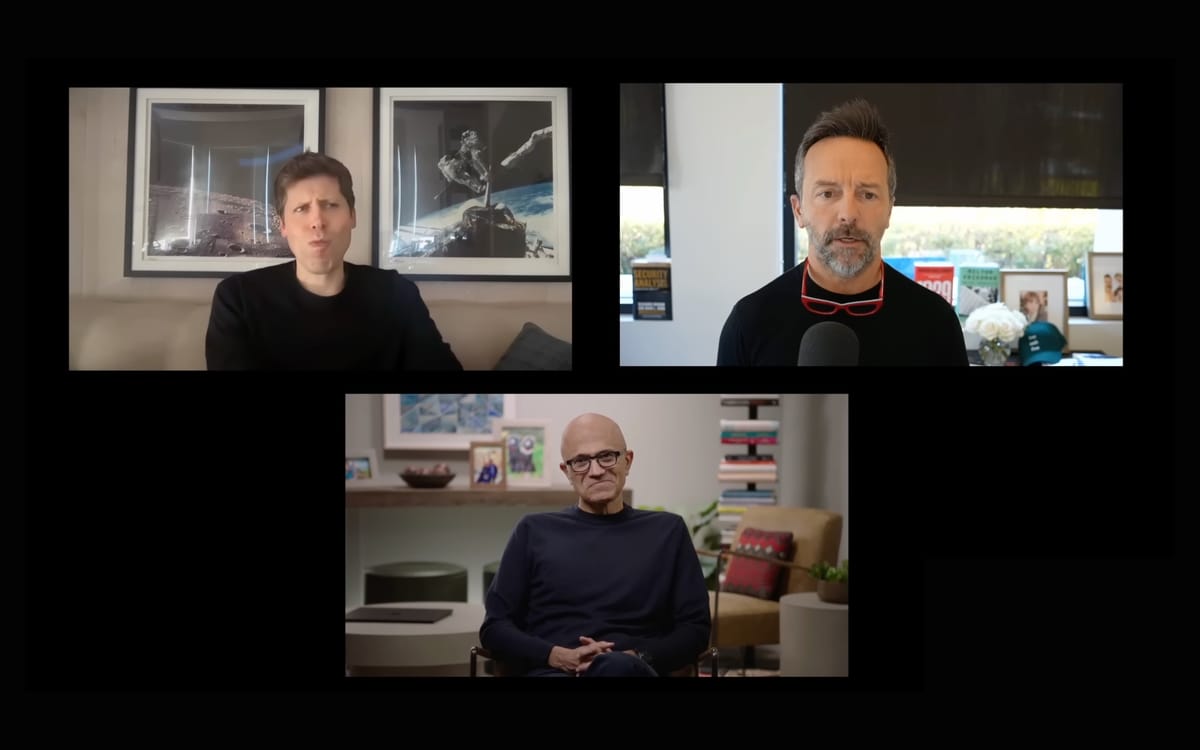

The defensive posture emerged during a discussion on the BG2 podcast hosted by investor Brad Gerstner, who asked how a company reporting $13 billion in 2025 revenues could sustain $1.4 trillion in compute commitments over the next four to five years. The contrast between reported revenues and spending obligations has generated widespread concern across technology and investment communities.

"First of all, we're doing well more revenue than that. Second of all, Brad, if you want to sell your shares, I'll find you a buyer," Altman responded. The comment dismissed the question's substance while implying criticism stemmed from shareholders seeking exits rather than legitimate concerns about financial sustainability.

Altman continued with notable hostility toward those raising these issues. "I just, enough. Like, you know, people are, I think there's a lot of people who would love to buy OpenAI shares. I don't think you, including myself, including myself, people who talk with a lot of, like, breathless concern about our compute stuff or whatever, that would be thrilled to buy shares."

Revenue projections without details

The OpenAI CEO said the company plans for revenue to "grow steeply" but offered no specific figures or timelines that would let outsiders check financial projections against committed expenditures. The vagueness persists even though OpenAI has restructured from a nonprofit into a public benefit corporation in which a nonprofit holds a stake valued at $130 billion.

"We do plan for revenue to grow steeply. Revenue is growing steeply," Altman stated. He pointed to multiple revenue streams including ChatGPT's consumer subscription business, ambitions to become an important AI cloud provider, a consumer device business, and AI-powered scientific discovery capabilities.

However, the November 1 discussion provided no financial projections, growth rate targets, or timeline milestones that would demonstrate how these revenue streams support the spending commitments announced earlier in the week. Altman's October 29 livestream detailed massive compute commitments including $500 billion to Nvidia, $300 billion to AMD and Oracle, and $250 billion to Microsoft Azure.

Microsoft CEO Satya Nadella, who joined the November 1 podcast, offered more specific validation of OpenAI's business performance. "I would say at some level there has not been a single business plan that I've seen from OpenAI that they have put in and not beaten it," Nadella stated. He described the execution as "unbelievable" across usage growth and business metrics.

This represented the strongest financial endorsement during the discussion, yet it came from OpenAI's largest investor and strategic partner rather than from OpenAI's leadership providing transparent financial disclosure. Microsoft holds approximately 27% of OpenAI on a fully diluted basis following investments totaling $13.5 billion across multiple rounds.

Compute constraints as revenue limiter

Altman suggested that compute limitations, rather than demand, currently restrict OpenAI's revenue potential. Greg Brockman, OpenAI's President, stated in an October interview that if the company could increase compute capacity tenfold, revenues might not increase tenfold but would grow substantially.

"It's really wild when I just look at how much we are held back," Altman said on the November 1 podcast. "We've scaled our compute probably 10x over the past year, but if we had 10x more compute, I don't know if we'd have 10x more revenue, but I don't think it'd be that far."

This framing positions compute investments as directly translating to revenue expansion, though the mechanism remains unclear. The explanation assumes unlimited demand at current pricing, continued market share dominance despite increasing competition, and sustained unit economics as the company scales infrastructure spending.

The compute constraint narrative also conflicts with broader industry patterns. Microsoft and Google both reported cloud growth limitations due to insufficient GPU availability, yet neither suggested revenue would scale linearly with compute additions. Demand elasticity, competitive dynamics, and pricing pressures typically constrain revenue growth even when technical capacity expands.

Business model questions persist

Altman's reluctance to address financial sustainability questions directly stands in contrast to typical venture-backed company communications during major capital commitments. The $1.4 trillion figure represents roughly 108 times the reported $13 billion in 2025 revenues, a ratio that would concern most financial analysts evaluating business viability.

Technology infrastructure buildouts typically maintain ratios between capital expenditures and revenues within manageable multiples. Cloud infrastructure providers generally spend 15-30% of revenues on capital expenditures. OpenAI's announced commitments equal roughly 10,800% of current revenues in total, and still more than 2,000% of current annual revenues per year when spread over five years.
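The arithmetic behind these ratios can be checked with a short sketch. It uses only the two figures reported in the article. The even five-year split and the function name `commitment_ratios` are assumptions made for illustration, not a description of how OpenAI's commitments are actually scheduled.

```python
# Figures as reported in the article; the even five-year split is an assumption.
COMPUTE_COMMITMENTS_USD = 1.4e12   # $1.4 trillion in announced compute commitments
REVENUE_2025_USD = 13e9            # $13 billion in reported 2025 revenues
YEARS = 5                          # upper end of the "four to five years" window


def commitment_ratios(commitments, revenue, years):
    """Return (total multiple of revenue, annual multiple of revenue)."""
    total_multiple = commitments / revenue
    annual_multiple = total_multiple / years
    return total_multiple, annual_multiple


total, annual = commitment_ratios(COMPUTE_COMMITMENTS_USD, REVENUE_2025_USD, YEARS)
print(f"Total commitments: {total:.1f}x revenue ({total:.0%})")
print(f"Per year over {YEARS} years: {annual:.1f}x revenue ({annual:.0%})")
```

Under these assumptions the total comes to about 107.7 times 2025 revenue, and the annualized figure to about 21.5 times, both far above the 15-30% range cited for cloud infrastructure providers.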

The company could plausibly argue it expects exponential revenue growth that makes current ratios misleading. However, Altman declined to provide the specific projections that would support this defense, instead attacking questioners' motivations.

"We might screw it up. Like, this is the bet that we're making and we're taking a risk along with that," Altman acknowledged. "A certain risk is if we don't have the compute, we will not be able to generate the revenue or make the models at this kind of scale."

This admission suggests OpenAI operates under a theory that compute access directly determines revenue potential, positioning infrastructure spending as a prerequisite for revenue growth rather than following it. The model breaks from traditional technology scaling where companies demonstrate unit economics at smaller scales before expanding infrastructure.

Defensive posture raises concerns

Altman's combative responses to financial questions marked a departure from his typically composed public communications style. The podcast included multiple moments where the OpenAI CEO dismissed legitimate questions about business fundamentals with personal attacks or diversions.

When Gerstner noted some critics question the sustainability of the compute commitments, Altman responded: "We could sell, you know, your shares or anybody else's to some of the people who are making the most noise on Twitter, whatever, about this very quickly."

The suggestion that critics should sell their stakes if concerned about financial sustainability represents an unusual deflection from a CEO managing a company undergoing major structural changes. Public company CEOs typically address investor concerns with detailed financial explanations rather than suggesting critics exit their positions.

Altman later acknowledged that one attraction of operating as a public company would be enabling critics to short the stock. "There are not many times that I want to be a public company, but one of the rare times it's appealing is when those people are writing these ridiculous 'OpenAI is about to go out of business' and, you know, whatever. I would love to tell them they could just short the stock and I would love to see them get burned on that."

This fantasy about watching critics lose money betting against OpenAI reveals a defensive mindset focused on proving skeptics wrong rather than transparently addressing their concerns through substantive financial disclosure.

Partnership dynamics and information asymmetry

The November 1 discussion highlighted information asymmetries between OpenAI's communications and those from its partner Microsoft. Nadella provided specific validation of OpenAI's business performance, noting the company consistently exceeds its business plans and demonstrates "unbelievable execution."

Microsoft's CEO also offered concrete details about the partnership's financial structure. The companies maintain a revenue sharing arrangement running until 2032 or until artificial general intelligence verification, whichever comes first. OpenAI retains exclusivity for distributing leading models on Azure through 2032, though the company can distribute open source models, agents, and other products on alternative platforms.

These specifics from Nadella contrasted with Altman's vague assertions about revenue growth and compute requirements. The dynamic suggested Microsoft possesses detailed financial information about OpenAI's performance that remains undisclosed to the broader public or potential investors beyond the strategic partner.

This information asymmetry becomes particularly relevant as OpenAI considers potential public offerings. Reuters reported on October 31 that OpenAI may pursue a public listing in late 2026 or 2027, though Altman disputed the timeline specificity. "We don't have anything that specific," he said, while acknowledging a public offering will likely happen eventually.

Valuation disconnects and market signals

The conversation included discussion of OpenAI's valuation trajectory. Gerstner suggested the company could achieve a $1 trillion valuation if it reaches $100 billion in revenues and pursues a public offering at a 10x revenue multiple, comparable to Facebook's initial public offering multiple.
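Gerstner's framework reduces to simple arithmetic, sketched below. The revenue and multiple inputs come from the discussion as reported. The time horizons and the helper names `implied_valuation` and `required_cagr` are hypothetical, since the source gives no timeline for reaching $100 billion in revenues.

```python
# Inputs from the podcast discussion as reported; the time horizons are hypothetical.
CURRENT_REVENUE_USD = 13e9     # reported 2025 revenues
TARGET_REVENUE_USD = 100e9     # revenue level in Gerstner's scenario
REVENUE_MULTIPLE = 10          # 10x revenue multiple in Gerstner's scenario


def implied_valuation(revenue, multiple):
    """Valuation implied by a simple revenue multiple."""
    return revenue * multiple


def required_cagr(start, end, years):
    """Compound annual growth rate needed to grow from start to end in `years` years."""
    return (end / start) ** (1 / years) - 1


valuation = implied_valuation(TARGET_REVENUE_USD, REVENUE_MULTIPLE)
print(f"Implied valuation: ${valuation / 1e12:.1f} trillion")
print(f"Revenue growth needed: {TARGET_REVENUE_USD / CURRENT_REVENUE_USD:.1f}x")

for years in (2, 3, 5):  # hypothetical horizons, not stated in the source
    rate = required_cagr(CURRENT_REVENUE_USD, TARGET_REVENUE_USD, years)
    print(f"  over {years} years: {rate:.0%} per year")
```

The sketch shows what the scenario takes for granted: revenue must grow nearly eightfold from the reported 2025 level before the 10x multiple yields $1 trillion, and the annual growth rate this requires depends heavily on the assumed horizon.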

Altman did not dispute this valuation framework, instead noting "there's a lot of people who would love to buy OpenAI shares" including himself. The comment implies confidence in current valuations despite the opacity around financial fundamentals supporting those prices.

However, private market valuations often disconnect from operational realities when information asymmetries prevent sophisticated analysis. Investors lacking access to detailed financial projections, unit economics, and strategic plans must rely on qualitative assessments of technology capabilities and market positioning.

OpenAI's restructuring from a nonprofit to a public benefit corporation with profit-seeking investors complicates these dynamics further. The structure now pairs a nonprofit holding a stake valued at $130 billion with a conventional corporate entity, and it includes clauses tied to artificial general intelligence that alter partnership terms and ownership stakes.

These structural complexities create ambiguity about how value flows through the organization and who captures returns from different revenue streams. Altman's reluctance to clarify these arrangements through detailed financial disclosure leaves investors and analysts speculating about fundamental business mechanics.

Compute economics and scaling laws

Altman and Nadella discussed compute economics at length, though in terms that obscured rather than clarified the financial model. Both emphasized that intelligence capability scales logarithmically with compute investment, suggesting diminishing returns as spending increases.

"Unfortunately, it's something closer to log of intelligence equals log of compute," Nadella stated. This relationship implies massive compute additions generate modest intelligence improvements, raising questions about return on investment for the announced spending commitments.

The logarithmic scaling law suggests OpenAI must increase compute spending by factors of 10 to achieve incremental intelligence improvements. If intelligence improvements drive revenue growth, the implied economics become challenging as costs scale faster than capabilities.
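The quoted relation can be read in two ways, and the difference matters for the economics. The derivation below is an illustrative sketch only. The symbols I (capability), C (compute), and the constants a, b, α, and k are assumptions introduced here; none of them appear in the podcast.

```latex
% Reading 1: capability is logarithmic in compute (the interpretation used above)
I = a + b \log_{10} C
\quad\Longrightarrow\quad
I(10\,C) - I(C) = b

% Reading 2: the quote taken literally, as a log-log (power-law) relation
\log I = \alpha \log C + k
\quad\Longrightarrow\quad
I = e^{k}\, C^{\alpha}
\quad\Longrightarrow\quad
\frac{I(10\,C)}{I(C)} = 10^{\alpha}
```

Under the first reading, every tenfold increase in compute adds the same fixed increment b, which matches the diminishing-returns interpretation. Under the second, a tenfold increase multiplies capability by 10^α, so returns diminish only when α is below 1. The discussion as reported does not specify which form, or what exponent, the executives had in mind.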

Altman acknowledged this dynamic while expressing hope for breakthroughs that improve scaling efficiency. "We may figure out better scaling laws and we may figure out how to beat this," he said. The conditional framing indicates current economics depend on future discoveries rather than demonstrated capabilities.

Both executives also discussed potential compute oversupply as efficiency improvements accelerate. Nadella referenced software optimizations at OpenAI that improved inference performance dramatically on existing hardware. "The software improvements are much more exponential than that," he said, referring to hardware advancement rates.

If software optimization continues improving at exponential rates, the $1.4 trillion compute buildout could become partially obsolete before completion. Altman acknowledged this risk: "I have some fear that it's just like, man, we keep going with these breakthroughs and everybody can run like a personal AGI on their laptop and we just did an insane thing here."

This admission suggests OpenAI's leadership recognizes the infrastructure spending commitments carry substantial risk of becoming stranded assets if efficiency improvements accelerate faster than anticipated.

Advertising industry parallels

OpenAI's opacity around financial fundamentals parallels broader industry trends toward reduced transparency in technology platforms. Microsoft's decision to sunset its Xandr demand-side platform by February 28, 2026 eliminated one of programmatic advertising's most transparent options.

AppNexus, which became Xandr through acquisition, provided unprecedented visibility into fee structures and money flows throughout advertising supply chains. The platform enabled buyers to see exactly what fees were charged at each supply chain step, a level of transparency rare in the industry.

Microsoft's strategic shift toward "conversational, personalized, and agentic" advertising experiences reflects movement away from transparent, manually-controlled platforms toward AI-optimized systems that obscure underlying mechanics. Organizations pursuing this direction prioritize outcome optimization over granular visibility into how systems achieve those outcomes.

This pattern extends beyond advertising to artificial intelligence development, where companies increasingly treat fundamental business operations as proprietary information protected from external scrutiny. The approach benefits incumbents with established market positions while disadvantaging newcomers who cannot evaluate competitive dynamics or unit economics.

Altman's defensive responses to financial questions fit within this broader pattern of reducing transparency as AI capabilities become central to business models. The stance suggests OpenAI views detailed financial disclosure as competitively disadvantageous rather than as a mechanism for building stakeholder trust.

Market implications and precedents

Technology companies historically maintained greater financial transparency during major capital expansion cycles. Amazon disclosed detailed metrics during its retail buildout. Google provided granular advertising data during its infrastructure expansion. Facebook offered specific user growth and engagement statistics during its scaling phase.

These precedents established norms around financial disclosure that enabled investors, analysts, and competitors to evaluate business model sustainability and market dynamics. The transparency fostered more efficient capital allocation as information flowed relatively freely through technology ecosystems.

OpenAI's approach marks a departure from these norms, with Altman treating basic financial questions as inappropriate or hostile rather than as legitimate stakeholder inquiries. The stance creates information asymmetries that benefit insiders with access to detailed financial data while disadvantaging external analysts attempting to evaluate the business.

This dynamic becomes particularly problematic as OpenAI pursues its restructuring from a nonprofit to a public benefit corporation with traditional investors expecting financial returns. The hybrid structure creates complexity around how value flows through the organization while Altman's communication approach adds opacity through deflection rather than disclosure.

Private markets may tolerate this approach given OpenAI's strong market position in frontier AI capabilities. However, public markets typically demand greater transparency around capital allocation, revenue projections, and unit economics. The disconnect between Altman's communication style and public market expectations suggests potential friction if OpenAI pursues a public offering.

Future disclosure requirements

OpenAI's path forward requires navigating disclosure expectations from multiple stakeholder groups. The $130 billion nonprofit established during restructuring theoretically requires transparency about how AI development serves humanitarian goals. California's Attorney General approved the structure but maintains oversight authority.

Public benefit corporation status creates additional reporting obligations around social impact alongside financial performance. These requirements theoretically mandate greater transparency than traditional corporate structures, though enforcement mechanisms remain unclear.

If OpenAI proceeds toward a public offering, Securities and Exchange Commission regulations mandate detailed financial disclosure including audited financial statements, risk factors, and a discussion of capital resources and commitments. The requirements would force transparency around exactly the questions Altman deflected during the November 1 podcast.

Until then, OpenAI appears positioned to maintain its current approach of providing minimal financial detail while emphasizing qualitative assessments of technology capabilities and market positioning. Altman's defensive responses suggest the company views detailed financial transparency as disadvantageous despite the information asymmetries this creates.

The stance raises questions about whether OpenAI's business fundamentals support its spending commitments or whether the company operates on assumptions about future breakthroughs that remain unproven. Without transparent disclosure, external analysts cannot distinguish between sustainable scaling and speculative infrastructure buildouts.

Timeline

- 2019: Microsoft begins investing in OpenAI with initial $1 billion commitment establishing strategic partnership

- December 2021: Microsoft announces agreement to acquire Xandr from AT&T, later cited as an example of reduced transparency in tech platforms

- 2022: Microsoft completes Xandr acquisition; OpenAI partnership deepens with additional funding rounds

- May 14, 2025: Microsoft announces Xandr DSP sunset effective February 2026, eliminating transparent programmatic platform

- October 28, 2025: OpenAI completes restructuring creating $130 billion nonprofit alongside public benefit corporation structure

- October 29, 2025: Altman announces during livestream massive compute commitments including $500 billion to Nvidia, $300 billion to AMD and Oracle, and $250 billion to Microsoft Azure

- October 31, 2025: Reuters reports OpenAI may pursue public offering in late 2026 or 2027, though Altman disputes timeline specificity

- November 1, 2025: Altman deflects financial sustainability questions during BG2 podcast, suggesting critics sell shares rather than providing substantive answers about compute spending commitments

- February 28, 2026: Scheduled discontinuation of Microsoft Invest (formerly Xandr), completing shift away from transparent ad tech platforms

Summary

Who: OpenAI CEO Sam Altman deflected questions about financial sustainability during a November 1, 2025 podcast appearance on BG2 hosted by investor Brad Gerstner. Microsoft CEO Satya Nadella participated in the discussion, providing the most substantive financial validation of OpenAI's business performance.

What: Altman responded dismissively to questions about how OpenAI can sustain $1.4 trillion in compute spending commitments against $13 billion in reported 2025 revenues, suggesting critics sell their shares rather than providing detailed explanations of the business model. The defensive posture marked a departure from typical CEO communications during major capital commitments, with Altman attacking questioners' motivations instead of addressing concerns about capital expenditure ratios exceeding 10,000% of current revenues.

When: The discussion occurred on November 1, 2025, days after Altman announced during an October 29 livestream the massive compute commitments including $500 billion to Nvidia, $300 billion to AMD and Oracle, and $250 billion to Microsoft Azure over the next four to five years.

Where: The podcast conversation took place following widespread concern across technology and investment communities about the ratio between OpenAI's spending commitments and reported revenues. The company operates as a restructured public benefit corporation with a $130 billion nonprofit stakeholder following California Attorney General approval of its conversion from pure nonprofit status.

Why: Altman's deflective responses highlight broader industry trends toward reduced transparency as AI capabilities become central to business models, paralleling Microsoft's decision to sunset the transparent Xandr DSP platform in favor of opaque AI-optimized systems. The stance suggests OpenAI views detailed financial disclosure as competitively disadvantageous, creating information asymmetries that benefit insiders while disadvantaging external analysts attempting to evaluate business sustainability. This approach contrasts with historical technology company norms of maintaining financial transparency during major capital expansion cycles, raising questions about whether OpenAI's fundamentals support its spending commitments or whether the company operates on unproven assumptions about future breakthroughs.