Justin Brewer filed a class action lawsuit against Otter.ai, Inc. on August 15, 2025, alleging the company violated federal and California privacy laws through its AI-powered meeting assistant. According to court documents filed in the Northern District of California, the case centers on Otter Notetaker's practice of recording virtual meeting participants without obtaining consent from all parties involved in the conversations.

The lawsuit alleges Otter intercepted communications during Google Meet, Zoom, and Microsoft Teams meetings while using recorded data to train its automatic speech recognition and machine learning models. Filed as Case No. 5:25-cv-06911-EKL, the complaint seeks damages exceeding $5 million and class certification for potentially thousands of affected individuals.

Subscribe PPC Land newsletter ✉️ for similar stories like this one. Receive the news every day in your inbox. Free of ads. 10 USD per year.

Brewer participated in a Zoom meeting on February 24, 2025, where another participant used Otter Notetaker to transcribe the conversation, according to court filings. The plaintiff was neither an Otter accountholder nor aware that the company would obtain and retain his conversational data for machine learning purposes.

Technical implementation enables unauthorized access

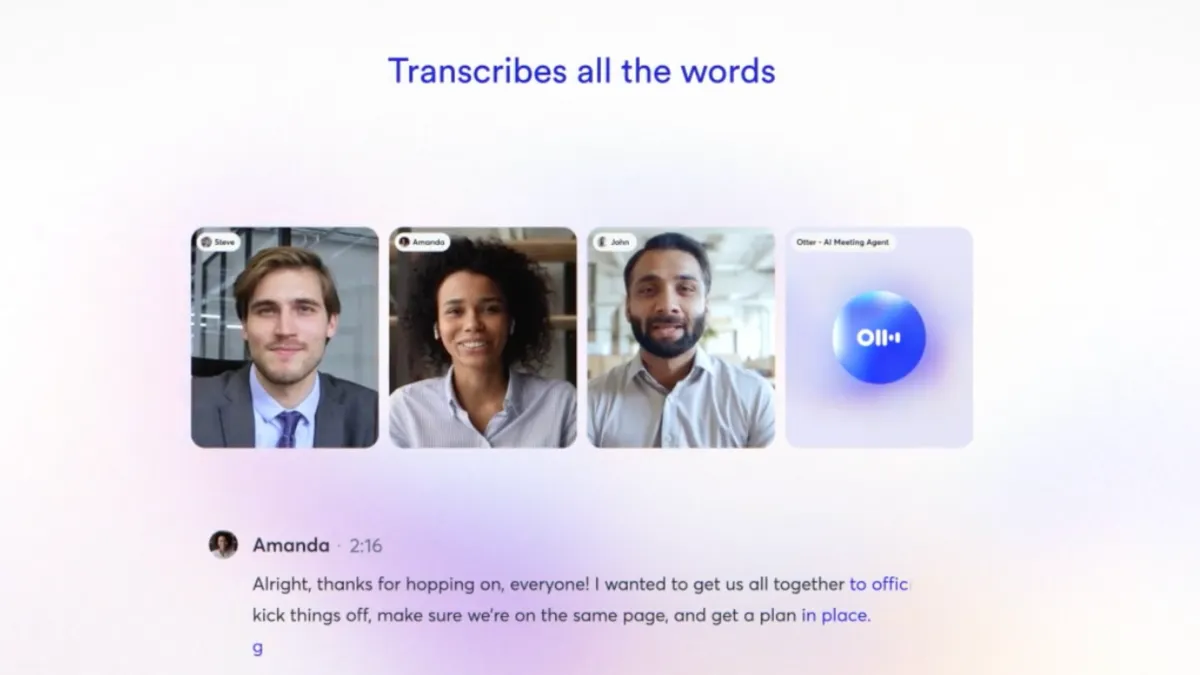

Otter Notetaker joins virtual meetings as a separate participant, transmitting audio data directly to the company's servers for real-time processing and transcription. According to the complaint, the service operates as a tool used by Otter itself rather than solely by accountholders to record others.

The lawsuit describes Otter Notetaker as "a separate and distinct third-party entity from its accountholders and other parties to the conversation—to record and transcribe conversations to which it is not a party." Court documents indicate the service asks meeting hosts for consent when the host lacks an Otter account but does not seek permission from other participants.

Default account settings disable pre-meeting notifications that would inform participants about recording activities, according to the filing. Otter accountholders must manually activate email notifications for pre-scheduled meetings. Screenshots included in the complaint show the "Send pre-recording emails" setting turned off by default.

Privacy policy shifts responsibility to users

Otter's privacy policy acknowledges using recordings and transcriptions to train artificial intelligence models, according to quotes included in the court filing. The company states: "We train our proprietary artificial intelligence on de-identified audio recordings. We also train our technology on transcriptions to provide more accurate services, which may contain Personal Information."

However, the privacy policy places responsibility on accountholders rather than obtaining direct consent from recorded participants. According to court documents, Otter instructs users to "please make sure [they] have the necessary permissions from . . . co-workers, friends or other third parties before sharing Personal Information" when making audio recordings.

The complaint challenges Otter's de-identification claims, noting the company provides no public description of this process. Scientific research cited in court documents suggests de-identification procedures remain unreliable for conversational data, with machine learning classifiers successfully executing membership inference attacks against de-identified clinical notes.

Company growth accelerates amid privacy concerns

Otter achieved significant business milestones while implementing these practices. The company surpassed $100 million in annual recurring revenue in March 2025, according to court filings citing company announcements. Otter has more than 25 million users worldwide, and web traffic data cited in the filing suggests roughly 50% of them are in the United States, so the alleged privacy violations could affect millions of individuals.

The lawsuit contrasts Otter's approach with competitor Read.ai, which permits any conversation participant to stop recording during meetings regardless of their account status. According to court documents, this functionality difference demonstrates Otter's intent to record conversations without informed participant consent.

Otter retains personal information "for as long as necessary to fulfill the purposes set out in this Policy, or for as long as we are required to do so by law," according to privacy policy language quoted in the filing. This indefinite retention period compounds privacy concerns raised by the alleged lack of proper consent procedures.

Legal claims span federal and state privacy laws

The complaint brings seven counts against Otter spanning federal wiretapping statutes and California privacy protections. Claims include violations of the Electronic Communications Privacy Act, Computer Fraud and Abuse Act, California Invasion of Privacy Act, and common law torts for intrusion upon seclusion and conversion.

Under the Electronic Communications Privacy Act, the lawsuit alleges Otter intentionally intercepted oral communications during virtual meetings without authorization from participants. Court documents argue meeting participants did not consent to interception as they lacked awareness of Otter's role as an "active third-party listener and autonomous data harvesting agent."

California privacy law claims focus on unauthorized reading and learning of communication contents while transmitted over electronic systems. The complaint alleges Otter "willfully and intentionally intercepted these communications without consent from all parties to the communications" in violation of California Penal Code sections 631 and 632.

Computer access violations center on Otter's alleged unauthorized access to participant devices and protected computers. According to the filing, the company "intentionally accessed Plaintiff Brewer's and Class members' protected computers without their authorization or consent" through the Notetaker service.

Proposed class action seeks broad relief

The lawsuit proposes two class definitions covering nationwide and California-specific claims. The nationwide class includes individuals whose conversations were "read and learned by Otter using the Otter Notetaker or OtterPilot software" from August 15, 2023, through the present, excluding accountholders and hosts who authorized the service.

California subclass claims extend back to August 15, 2021, covering residents who participated in meetings hosted in California or while located in the state. Both classes exclude Otter employees, officers, directors, and their subsidiaries along with class counsel and judicial officers.
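The two class periods described above can be expressed as a simple date check. The following is a minimal sketch, not the complaint's own test: the function name, its parameters, and the idea of reducing the California criteria to a single boolean are illustrative assumptions, and only the two start dates and the accountholder/host exclusion come from the filing as reported here.

```python
from datetime import date

# Class period start dates as reported from the complaint.
NATIONWIDE_START = date(2023, 8, 15)
CALIFORNIA_START = date(2021, 8, 15)


def proposed_classes(
    meeting_date: date,
    is_accountholder_or_authorizing_host: bool,
    has_california_connection: bool,
) -> list[str]:
    """Return the proposed classes a participant might fall into.

    Hypothetical helper: `has_california_connection` collapses the
    subclass criteria (California resident, meeting hosted in or joined
    from California) into one flag, which is a simplification.
    """
    # Accountholders and hosts who authorized the service are excluded.
    if is_accountholder_or_authorizing_host:
        return []

    classes = []
    if meeting_date >= NATIONWIDE_START:
        classes.append("nationwide")
    if has_california_connection and meeting_date >= CALIFORNIA_START:
        classes.append("california")
    return classes


# Brewer's meeting date, treated as a non-accountholder with no California link.
print(proposed_classes(date(2025, 2, 24), False, False))
```

Under these assumptions, a 2022 meeting would fall only within the California subclass window, because the nationwide period starts two years later.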

Relief sought includes statutory damages under federal wiretapping laws, injunctive relief preventing future unauthorized recording, and disgorgement of profits derived from using participant data to train AI models. The complaint requests actual and punitive damages, attorney fees, and destruction of unlawfully obtained information.

California law provides damages of $5,000 per violation or three times actual damages under the Invasion of Privacy Act, according to court filings. Computer access violations carry additional penalties including compensatory damages and injunctive relief.

Industry implications for meeting technology

The case highlights growing tensions between AI development needs and privacy rights in virtual meeting environments. As companies increasingly rely on AI-powered tools for business operations, the lawsuit raises questions about consent mechanisms and data use transparency in enterprise software.

The legal challenge comes amid broader scrutiny of AI companies' data collection practices. PPC Land previously reported on Oracle's $115 million privacy settlement addressing unauthorized data collection through advertising technologies, demonstrating how privacy litigation increasingly targets AI and data-driven business models.

Similar consent issues affect other meeting technologies as remote work adoption accelerates demand for transcription and AI-powered meeting assistance. The outcome could establish precedents for how meeting technology providers must obtain and document participant consent for recording and AI training purposes.

Broader privacy law enforcement trends

The Otter lawsuit represents part of expanding privacy litigation targeting tech companies' data practices. Apple recently agreed to pay $95 million to settle claims over unauthorized Siri recordings between 2014 and 2024, while PayPal's Honey browser extension faces allegations of diverting affiliate commissions through cookie manipulation.

Federal courts continue examining AI companies' use of copyrighted materials for model training. Recent rulings found mixed results on fair use defenses, with judges distinguishing between legitimate training uses and unauthorized content acquisition methods.

The Otter case differs by focusing on real-time privacy violations during active communications rather than historical data use. This distinction may influence how courts evaluate consent requirements for AI systems that process live conversations versus static training datasets.

Marketing professionals utilizing AI-powered meeting tools should review vendor consent mechanisms and data processing disclosures. Recent Law Commission analysis highlighted liability gaps in AI systems, emphasizing the need for clear accountability frameworks as AI technologies demonstrate increasing autonomy.

Timeline of Events

- July 2024: Oracle agrees to $115 million privacy settlement for unauthorized data collection practices

- December 29, 2024: PayPal's Honey faces class action lawsuit over affiliate commission diversion

- January 3, 2025: Apple settles $95 million class action over unauthorized Siri recordings

- February 24, 2025: Justin Brewer participates in Zoom meeting where Otter Notetaker records conversation without his consent

- March 25, 2025: Otter.ai announces surpassing $100 million annual recurring revenue milestone

- May 11, 2025: US Copyright Office releases AI training report addressing fair use questions

- June 23, 2025: Federal judge rules AI training constitutes fair use while allowing piracy claims to proceed

- August 15, 2025: Class action lawsuit filed against Otter.ai in Northern District of California

Summary

Who: Justin Brewer filed a class action lawsuit against Otter.ai, Inc. on behalf of meeting participants whose conversations were recorded without consent. The case is filed in the US District Court for the Northern District of California.

What: The lawsuit alleges Otter's AI-powered meeting assistant violated federal and California privacy laws by recording virtual meeting participants without obtaining consent from all parties and using the recordings to train machine learning models.

When: The complaint was filed on August 15, 2025. The proposed nationwide class covers alleged violations from August 15, 2023, through the present, and the California subclass reaches back to August 15, 2021. Brewer's recorded meeting occurred on February 24, 2025.

Where: The case is filed in the Northern District of California federal court, where Otter maintains its principal place of business in Mountain View. The alleged violations occurred during virtual meetings on platforms including Google Meet, Zoom, and Microsoft Teams.

Why: The lawsuit aims to prevent unauthorized recording of meeting participants and recover damages for privacy violations. Plaintiffs seek to establish consent requirements for AI-powered meeting tools and obtain compensation for the unauthorized use of conversational data to train commercial AI systems.

PPC Land explains

Class Action Lawsuit

A class action lawsuit allows one or more plaintiffs to file and prosecute a lawsuit on behalf of a larger group, or "class," of people who have similar claims against the same defendant. In this case, Justin Brewer represents potentially thousands of meeting participants whose conversations were allegedly recorded without consent by Otter's AI system. Class actions provide an efficient mechanism for addressing widespread harm where individual claims might be too small to pursue separately, while ensuring consistent legal outcomes across similarly situated parties.

Otter Notetaker

Otter Notetaker is an AI-powered meeting assistant that joins virtual meetings as a participant to provide real-time transcription services. The software integrates with popular platforms including Google Meet, Zoom, and Microsoft Teams, automatically recording and transcribing conversations for Otter accountholders. According to the lawsuit, the service operates as a separate third-party entity rather than merely a tool controlled by meeting participants, raising questions about consent and privacy when non-accountholders participate in recorded meetings.

Consent

Legal consent refers to a party's voluntary agreement to an activity, and it is central to laws governing the recording of communications. The lawsuit alleges Otter failed to obtain proper consent from meeting participants before recording their conversations and using the data for AI training. California requires all-party consent for recording confidential communications, while the federal Wiretap Act generally permits recording when at least one party consents, unless the interception is made for a criminal or tortious purpose. Violations can carry significant statutory penalties and potential criminal liability.

Privacy Laws

Privacy laws encompass federal and state regulations protecting individuals' personal information and communications from unauthorized collection and use. The lawsuit cites multiple privacy statutes including the Electronic Communications Privacy Act, California Invasion of Privacy Act, and Computer Fraud and Abuse Act. These laws establish requirements for consent, disclosure, and data handling while providing civil and criminal penalties for violations, reflecting growing legislative recognition of privacy as a fundamental right in digital environments.

Machine Learning Models

Machine learning models are algorithmic systems that improve performance through training on large datasets, enabling computers to recognize patterns and make predictions without explicit programming. Otter uses recorded conversations and transcriptions to train its automatic speech recognition and language processing capabilities, enhancing transcription accuracy and functionality. The lawsuit challenges this practice when training data includes conversations from participants who never consented to their communications being used for commercial AI development.

Virtual Meetings

Virtual meetings conducted through platforms like Zoom, Google Meet, and Microsoft Teams have become essential business tools, particularly since remote work adoption accelerated. These digital environments create new privacy challenges as participants may not understand how third-party applications access their communications. The lawsuit highlights how AI-powered meeting tools can intercept conversations in ways that traditional recording methods cannot, raising questions about participant awareness and control over their data.

Automatic Speech Recognition (ASR)

Automatic Speech Recognition technology converts spoken language into written text using artificial intelligence algorithms trained on vast audio datasets. Otter's ASR system powers its real-time transcription capabilities, requiring continuous improvement through exposure to diverse speech patterns, accents, and vocabulary. The lawsuit alleges participants never consented to their conversations being used to enhance these commercial AI systems, particularly when the training process may compromise speaker anonymity despite claimed de-identification efforts.

Electronic Communications Privacy Act (ECPA)

The Electronic Communications Privacy Act of 1986 prohibits intentional interception of wire, oral, and electronic communications without proper authorization, establishing federal criminal and civil penalties for violations. The lawsuit alleges Otter violated ECPA by intercepting meeting participants' oral communications without consent while using the intercepted content for commercial purposes. ECPA provides statutory damages and attorney fees for successful plaintiffs, making it a powerful tool for addressing unauthorized surveillance and data collection practices.

California Invasion of Privacy Act (CIPA)

California's Invasion of Privacy Act provides some of the strongest privacy protections in the United States, requiring all-party consent for recording confidential communications. The statute imposes criminal penalties and civil damages of $5,000 per violation or three times actual damages, whichever is greater. The lawsuit alleges Otter violated CIPA by recording California residents' conversations without obtaining consent from all participants, potentially exposing the company to substantial statutory damages given the scale of its operations.
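The "whichever is greater" rule is easy to misread, so a short worked example may help. This is a minimal sketch of the arithmetic only: the function name is hypothetical, and multiplying the $5,000 figure by a violation count is an assumption about how per-violation damages would aggregate, not something the filing spells out.

```python
STATUTORY_AMOUNT = 5_000  # dollars per violation, as described above
ACTUAL_MULTIPLIER = 3     # three times actual damages


def cipa_damages_estimate(actual_damages: float, violations: int = 1) -> float:
    """Greater of $5,000 per violation or three times actual damages.

    Hypothetical helper for illustration. The `violations` multiplier
    is an assumption about aggregation.
    """
    if actual_damages < 0 or violations < 1:
        raise ValueError("actual_damages must be >= 0 and violations >= 1")
    return max(STATUTORY_AMOUNT * violations, ACTUAL_MULTIPLIER * actual_damages)


# With no provable actual damages, the statutory floor applies.
print(cipa_damages_estimate(0))      # 5000
# Trebled actual damages win once they exceed the statutory amount.
print(cipa_damages_estimate(2_000))  # 6000
```

Because the statutory amount applies even when actual damages are zero, exposure in a class action scales mainly with the number of violations rather than with provable individual harm.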

De-identification

De-identification refers to the process of removing or obscuring personally identifiable information from datasets to protect individual privacy while preserving data utility for analysis or training. Otter claims to de-identify audio recordings used for AI training, but the lawsuit challenges both the effectiveness and transparency of this process. Recent research demonstrates that sophisticated de-identification procedures remain vulnerable to re-identification attacks, particularly for conversational data containing unique speech patterns and contextual information that may enable speaker identification.