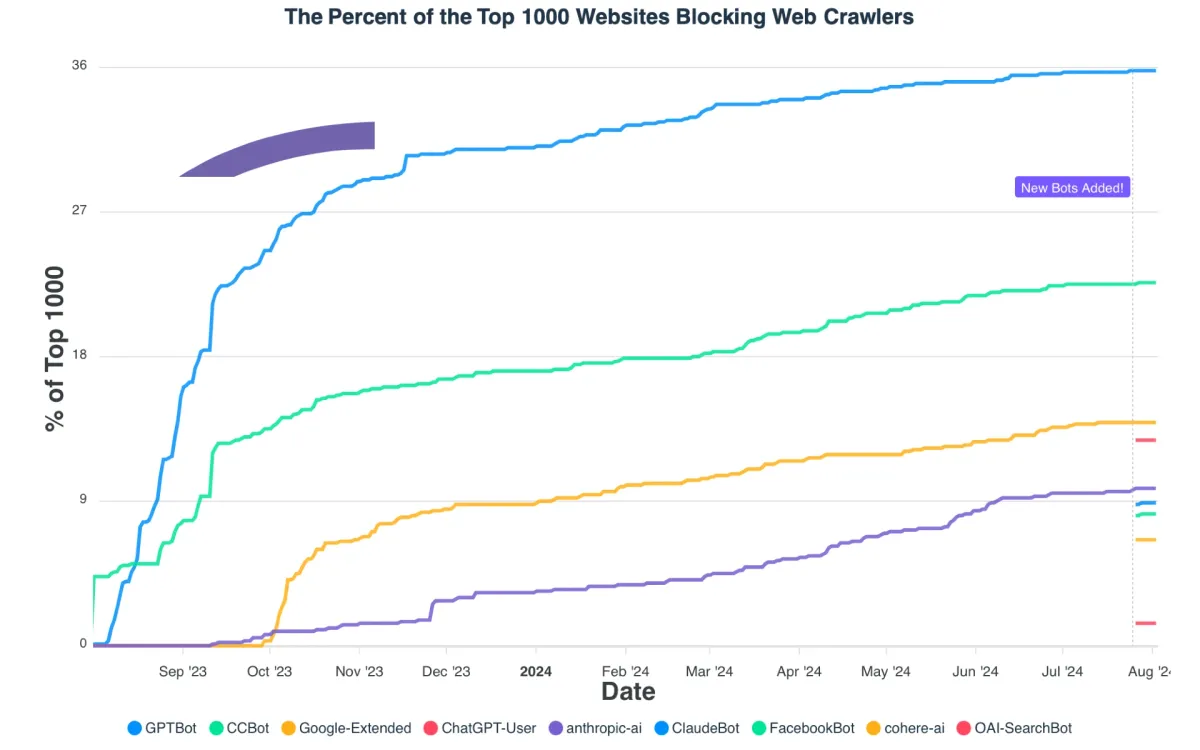

On August 3, 2024, new data was released showing that over 35% of the world's top 1000 websites are now blocking OpenAI's GPTBot web crawler, marking a significant increase in efforts to restrict AI companies from scraping online content. The study, conducted by AI detection company Originality.AI, reveals a complex landscape of how major websites are responding to the rise of large language models and AI-powered search engines.

The research, which analyzed the robots.txt files of the top 1000 global websites, found that GPTBot is now blocked by 35.7% of sites, up from just 5% when it was first introduced in August 2023. This represents a seven-fold increase in blocking over the past year, reflecting growing concerns about AI companies using scraped web content to train their models.

According to the data, other AI-related web crawlers are also facing increasing restrictions, though at lower rates than GPTBot. The Common Crawl Bot (CCBot) is blocked by 22.1% of top sites, Google-Extended by 13.6%, and ChatGPT-User by 12.7%. Newer entries like anthropic-ai, ClaudeBot, and OAI-SearchBot are blocked at rates between 1-10%.

The surge in blocking began shortly after OpenAI announced GPTBot on August 7, 2023. Within two weeks, major sites like Amazon, Quora, The New York Times, and CNN had implemented blocks. The trend has accelerated over the past year, with blocking rates climbing steadily each month.

PPC LandLuís Daniel Rijo

PPC LandLuís Daniel Rijo

Notably, many large media and news publishers are now blocking GPTBot, including The New York Times, The Guardian, CNN, USA Today, Reuters, The Washington Post, NPR, CBS, NBC, Bloomberg, and CNBC. This suggests particular concern in the journalism industry about AI systems potentially replicating their content.

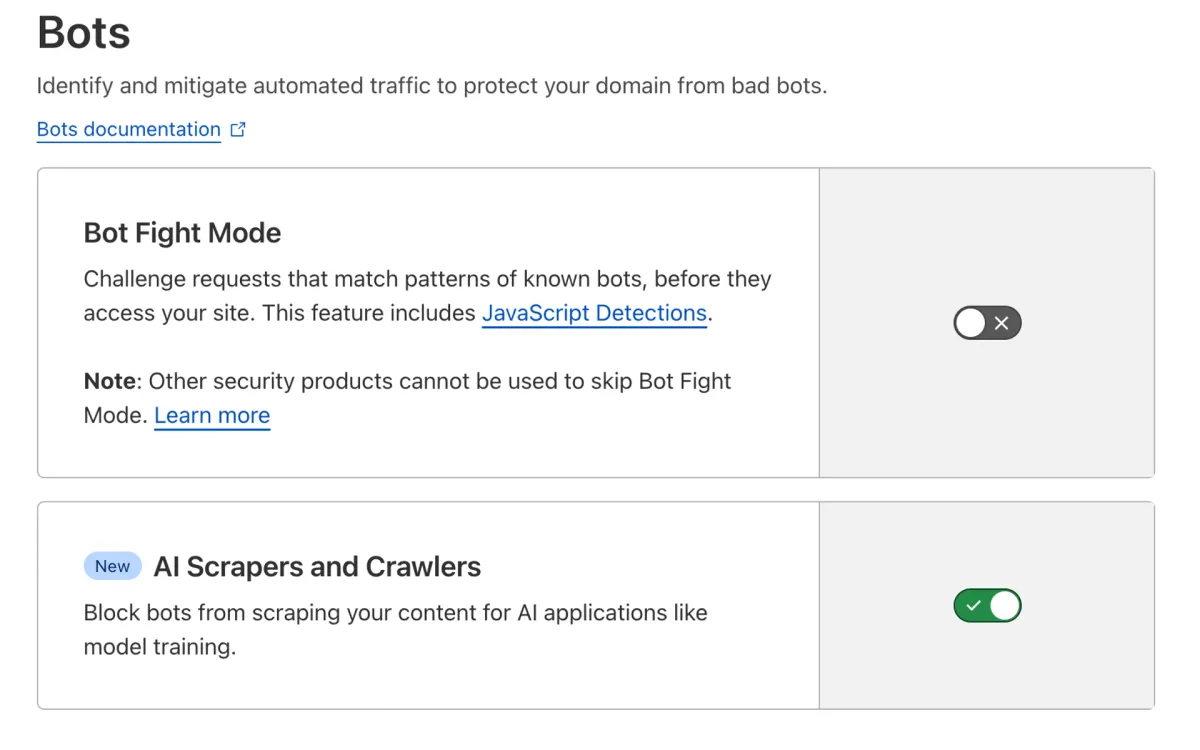

The rise in crawler blocking comes amid broader debates about AI companies' use of online data. In July 2024, just two weeks ago, OpenAI announced a new AI-powered search engine called SearchGPT. They also introduced a new crawler called OAI-SearchBot, which they claim is only used to surface websites in search results, not to train AI models.

PPC LandLuís Daniel Rijo

PPC LandLuís Daniel Rijo

However, despite these assurances, 14 major publishers including The New York Times, Wired, The New Yorker, and Vogue immediately blocked OAI-SearchBot, effectively excluding themselves from appearing in SearchGPT results. This swift reaction indicates ongoing mistrust between content publishers and AI companies.

The motivations for blocking AI crawlers are multifaceted. Some companies cite concerns about copyright infringement and the use of their content to train AI models that could then compete with them. Others worry about the potential for AI systems to replicate or closely mimic their content, potentially reducing traffic to their own sites.

There are also broader philosophical and ethical concerns about consent and compensation when it comes to using web content for AI training. Many argue that websites should have more control over how their data is used, especially by for-profit AI companies.

However, blocking crawlers is not without potential downsides for websites. As AI-powered search and discovery tools become more prevalent, sites that block these crawlers may find themselves less visible or accessible through these new channels. This creates a dilemma for web publishers trying to balance protection of their content with maintaining discoverability.

The legal landscape surrounding web scraping and AI training data remains unsettled. While a 2019 case between LinkedIn and HiQ Labs upheld the general legality of scraping publicly available websites, ongoing lawsuits against OpenAI and other AI companies are challenging aspects of this practice.

The Originality.AI study also revealed interesting patterns in how different types of websites approach crawler blocking. E-commerce sites, for instance, show varied responses, with some like Amazon implementing blocks while others remain open. Educational and research institutions generally allow most crawlers, while news and media sites tend to be more restrictive.

This trend of increased crawler blocking represents a significant shift in the relationship between web publishers and AI companies. It highlights growing tensions over data ownership, privacy, and the future of content creation in an AI-driven world.

As AI technologies continue to advance rapidly, these issues are likely to remain at the forefront of discussions about internet governance, digital rights, and the economics of online content. The actions of major websites in restricting AI crawlers could potentially shape the development trajectory of large language models and other AI systems that rely on web data for training.

For internet users, the implications of this trend are not yet clear. In the short term, it may lead to some inconsistencies in how well AI systems can provide information from certain sources. In the longer term, it could influence the types of AI services that develop and how they operate.

As this situation continues to evolve, it will be crucial for policymakers, tech companies, and content creators to engage in ongoing dialogue about balancing innovation with privacy and property rights in the digital age. The current wave of crawler blocking may be just the opening move in a longer process of negotiation and adaptation between web publishers and AI developers.

Key facts

35.7% of top 1000 websites now block OpenAI's GPTBot, up from 5% in August 2023

CCBot is blocked by 22.1%, Google-Extended by 13.6%, ChatGPT-User by 12.7%

Major news publishers including NYT, CNN, Reuters are blocking multiple AI crawlers

14 leading publishers blocked OpenAI's new SearchGPT crawler immediately upon its release

Legal and ethical debates continue over web scraping for AI training data

Websites face a dilemma between protecting content and maintaining visibility in AI-driven search

The trend highlights growing tensions between web publishers and AI companies over data use