On November 7, 2024, the World Wide Web Consortium (W3C) Media Working Group published the First Public Working Draft of the Audio Session API. According to the official W3C documentation, the API lets web applications control how their audio is rendered and how it interacts with audio from other applications on the same device.

The specification's editors, Youenn Fablet of Apple and Alastor Wu of Mozilla, wrote it to address a gap in how web pages manage audio. According to the working draft, the primary motivation is that people increasingly consume media through web platforms, which have become a primary channel for accessing audio and video content.

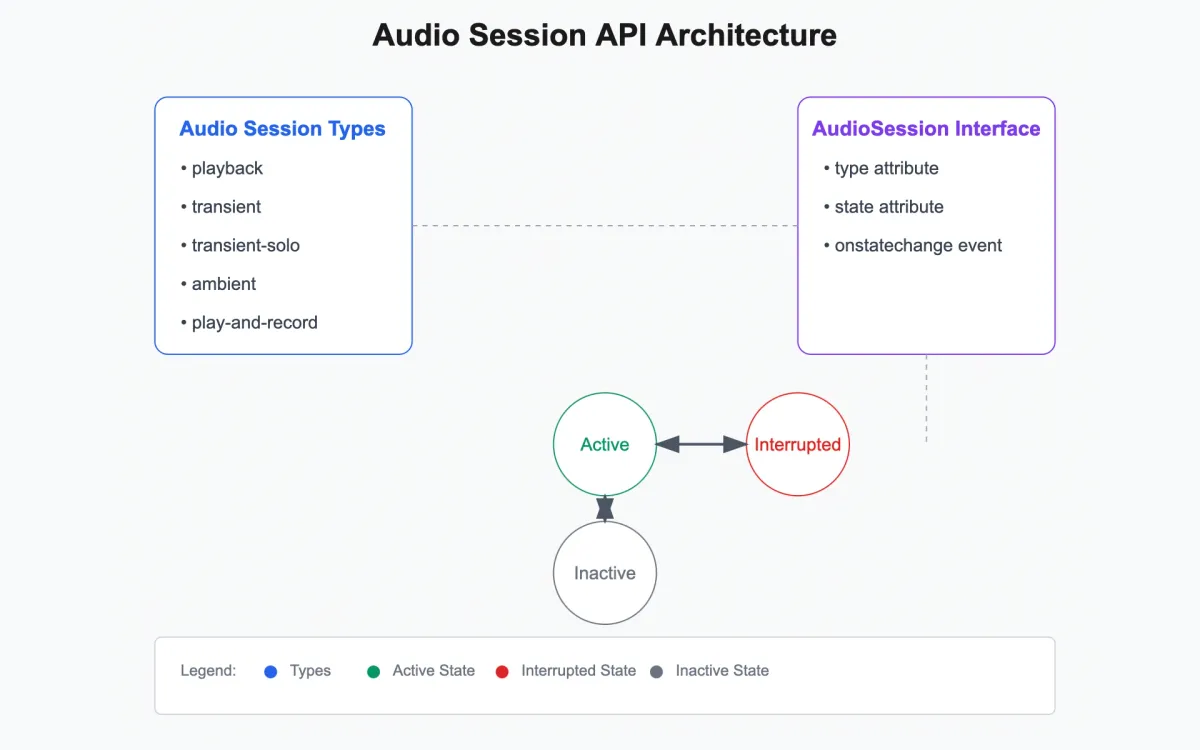

The API's central feature is the audio session type. According to the technical specification, a web application can declare its session as playback, transient, transient-solo, ambient, or play-and-record, or leave it on the default, auto. Each type serves specific use cases, from video conferencing to notification sounds.

The document states that the API provides granular control over audio behavior, allowing developers to manage how their web applications' audio interacts with other applications. This includes capabilities for audio mixing and exclusive playback, depending on the specific context and requirements.

Key Technical Implementation Details

The specification outlines the technical architecture through the AudioSession interface, which provides three primary components:

- A type attribute that defines the audio session category

- A state attribute that reflects the current audio session state

- An event handler for state changes
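To make the shape of these three members concrete, here is a minimal TypeScript sketch. The type names (`SessionType`, `SessionState`, `AudioSessionLike`) and the `describeSession` helper are hypothetical names chosen for illustration, and marking `state` as read-only is an assumption based on the description of it as reflecting the current state. The object at the end is a hand-built stand-in, not a real browser session.

```typescript
// Local names for this sketch only; the string values follow the types and
// states listed in the article.
type SessionType =
  | "auto"
  | "playback"
  | "transient"
  | "transient-solo"
  | "ambient"
  | "play-and-record";
type SessionState = "active" | "interrupted" | "inactive";

interface AudioSessionLike {
  type: SessionType; // set by the page to choose a category
  readonly state: SessionState; // assumed read-only: reported by the user agent
  onstatechange: ((event: unknown) => void) | null; // state-change handler
}

// Hypothetical helper: summarise a session for logging.
function describeSession(session: AudioSessionLike): string {
  return `type=${session.type}, state=${session.state}`;
}

// A hand-built stand-in so the sketch runs outside a browser.
const fakeSession: AudioSessionLike = {
  type: "playback",
  state: "inactive",
  onstatechange: null,
};
console.log(describeSession(fakeSession));
```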

Technical Deep Dive

Audio Session Types and States

The API introduces six distinct audio session types:

- Playback: Designed for video or music playback

- Transient: Intended for notification sounds

- Transient-solo: Optimized for priority audio like navigation instructions

- Ambient: Created for mixable audio content

- Play-and-record: Developed for recording and conferencing

- Auto: The default type, where the user agent selects optimal settings
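One way to read the list above is as a lookup from what a page is doing to the session type it would request. The sketch below assumes a set of use-case labels (`music`, `notification`, and so on) that are invented for illustration and do not come from the specification. Only the six type strings are taken from the list.

```typescript
// The six type strings from the list above.
const SESSION_TYPES = [
  "auto",
  "playback",
  "transient",
  "transient-solo",
  "ambient",
  "play-and-record",
] as const;
type KnownSessionType = (typeof SESSION_TYPES)[number];

// Hypothetical use-case labels, invented for this sketch.
type UseCase =
  | "music"
  | "notification"
  | "navigation"
  | "background-loop"
  | "video-call";

const TYPE_FOR_USE_CASE: Record<UseCase, KnownSessionType> = {
  music: "playback",
  notification: "transient",
  navigation: "transient-solo",
  "background-loop": "ambient",
  "video-call": "play-and-record",
};

// Fall back to "auto" when the page has no specific need, leaving the
// choice to the user agent.
function sessionTypeFor(useCase?: UseCase): KnownSessionType {
  return useCase ? TYPE_FOR_USE_CASE[useCase] : "auto";
}

console.log(sessionTypeFor("navigation"), sessionTypeFor());
```

Keeping the mapping in one table makes it easy to review which parts of an application ask for which audio behavior.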

State Management System

According to the technical documentation, the API defines three fundamental states:

- Active: Indicating ongoing sound playback or microphone recording

- Interrupted: Representing a paused state that can resume

- Inactive: Signifying no audio activity
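A page would typically react to these states rather than set them. The following sketch shows one possible reaction: pause on an interruption and resume only if the session becomes active again after having been interrupted. The `PlayerLike` interface and `createStateHandler` function are hypothetical; in a real page the handler would presumably be called from the session's state-change event handler with the current state.

```typescript
type ReportedState = "active" | "interrupted" | "inactive";

// Hypothetical minimal player; a real page would wrap a media element.
interface PlayerLike {
  pause(): void;
  resume(): void;
}

function createStateHandler(
  player: PlayerLike,
): (state: ReportedState) => void {
  // Remember whether we paused because of an interruption.
  let resumeWhenActive = false;
  return (state) => {
    switch (state) {
      case "interrupted":
        resumeWhenActive = true;
        player.pause();
        break;
      case "active":
        if (resumeWhenActive) {
          resumeWhenActive = false;
          player.resume();
        }
        break;
      case "inactive":
        // No audio activity: nothing to resume later.
        resumeWhenActive = false;
        break;
    }
  };
}

// Simulate a sequence of state reports with a recording fake player.
const calls: string[] = [];
const handleState = createStateHandler({
  pause: () => {
    calls.push("pause");
  },
  resume: () => {
    calls.push("resume");
  },
});
const reports: ReportedState[] = ["active", "interrupted", "active"];
reports.forEach((state) => handleState(state));
console.log(calls); // calls now holds "pause" then "resume"
```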

Implementation Framework

The specification exposes the API through the Navigator interface, which gives developers access to the default audio session that the user agent uses when media elements start or stop playing.
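Because this is an early draft, a page cannot assume the session object exists. The sketch below shows a defensive lookup. The property name `audioSession` is an assumption here, since the article only says the session is reached through the Navigator interface, and `SessionHandle`, `getAudioSession`, and `requestSessionType` are hypothetical helper names. The functions accept a navigator-like object as a parameter so they can be exercised outside a browser.

```typescript
// Minimal view of the session object used by this sketch.
interface SessionHandle {
  type: string;
  readonly state: string;
}

// The global navigator, if the environment has one.
const defaultNavigator: unknown = (
  globalThis as unknown as { navigator?: unknown }
).navigator;

// Return the session if the (assumed) `audioSession` property exists.
function getAudioSession(nav: unknown = defaultNavigator): SessionHandle | null {
  if (typeof nav !== "object" || nav === null) return null;
  const candidate = (nav as { audioSession?: unknown }).audioSession;
  if (typeof candidate !== "object" || candidate === null) return null;
  return candidate as SessionHandle;
}

// Request a session type; report whether the API was available.
function requestSessionType(
  type: string,
  nav: unknown = defaultNavigator,
): boolean {
  const session = getAudioSession(nav);
  if (session === null) return false;
  session.type = type;
  return true;
}

// Exercise the helpers with a stand-in navigator object.
const standInNavigator = { audioSession: { type: "auto", state: "inactive" } };
console.log(requestSessionType("playback", standInNavigator));
console.log(requestSessionType("playback", {}));
```

Calling `requestSessionType("playback")` with no second argument would consult the real global navigator, and the boolean result lets the page fall back to its existing behavior where the API is missing.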

Historical Context

The development of the Audio Session API represents a significant milestone in web standards evolution. The Media Working Group, operating under the W3C Patent Policy, has developed this specification to address long-standing challenges in web audio management.

Development Timeline and Process

The working group, chaired by Marcos Caceres and Chris Needham with François Daoust as Staff Contact, has been chartered until May 31, 2025. This timeline provides context for the development and implementation process of the API.

Industry Standards and Compliance

The specification uses RFC 2119 keywords such as "MUST," "SHOULD," and "MAY" to state its requirements precisely. This shared vocabulary helps implementers interpret the requirements consistently across platforms and browsers.

The Future of Web Audio Management

The Audio Session API represents a significant advancement in web audio technology. Its introduction addresses crucial needs in the web development community:

Integration Capabilities

The API is designed to integrate with the audio handling of the underlying platform, addressing a gap in current web audio management.

Developer Control

It gives developers more direct control over audio behavior than the web platform has previously offered, allowing them to create more sophisticated audio experiences on the web.

Platform Compatibility

The specification aims for consistent behavior across different platforms and devices, promoting standardization in web audio management. As a First Public Working Draft, it is still subject to change.

Key Facts

- Released: November 7, 2024

- Status: First Public Working Draft

- Developed by: W3C Media Working Group

- Lead Editors: Youenn Fablet (Apple), Alastor Wu (Mozilla)

- Charter End Date: May 31, 2025

- Access: Exposed through the Navigator interface

- Key Features: 6 audio session types, 3 session states

- Primary Purpose: Enhanced control over web audio rendering and interaction

- Target Users: Web developers working with audio applications

- Compliance: RFC 2119 terminology standards

- Patent Policy: Governed by the W3C Patent Policy