Experienced software developers are not embracing the "vibe coding" approach despite widespread availability of AI agents that promise autonomous code generation, research from UC San Diego and Cornell University revealed on January 5, 2026. The study challenges assumptions about how professionals actually use AI coding tools in production environments.

Pedro Domingos, professor of computer science at the University of Washington and author of "The Master Algorithm," sparked renewed debate on January 5, 2026, when he posted on X: "Bad news: AI coding tools don't work for business logic or with existing code." His statement generated significant pushback from developers, with responses ranging from claims of "skill issue" to detailed counterexamples of successful AI agent deployments.

The research paper "Professional Software Developers Don't Vibe, They Control: AI Agent Use for Coding in 2025," released January 5, offers empirical evidence supporting Domingos's skepticism while revealing nuanced patterns in how skilled developers actually employ these tools. Researchers conducted 13 field observations and surveyed 99 experienced developers with three or more years of professional experience to understand current AI agent usage patterns.

"I've been a software developer and data analyst for 20 years and there is no way I'll EVER go back to coding by hand. That ship has sailed and good riddance to it," one survey respondent stated. The same developer immediately qualified: "One word of warning to the young ones getting into the business – it's still really important to know what you're doing. The agents are great for a non-technical person to create a demo, but going beyond that into anything close to production-ready work requires a lot of supervision."

The findings run counter to "vibe coding," a term coined by AI researcher Andrej Karpathy in February 2025 for an approach in which developers "fully give in to the vibes" and "forget that the code even exists." Instead, experienced programmers employ strategic planning, iterative validation, and careful oversight of agent behavior.

All 13 observed participants controlled software design when implementing new features, either creating design plans themselves or thoroughly revising agent-generated plans. Nine participants carefully reviewed every agentic code change, while four working on unfamiliar tasks still monitored program outputs closely without necessarily reading generated code line-by-line.

"I like to keep talking with the AI just because it keeps everything in the chat context," one participant explained. "Because if I change something in the editor, I actually need to tell it that I did change something in the editor while it wasn't looking." This systematic approach reflects experienced developers' insistence on maintaining awareness of all code modifications.

Survey respondents reported modifying agent-generated code about half the time on average, with only six of 99 saying they "always" modified AI output. The most common answer was "about half the time" (37 respondents), followed by "seldom" (28) and "usually" (24).

Prompting strategies emerged as critical for successful agent use. Participants incorporated extensive context in prompts, including UI elements, technical terms, domain-specific objects, reference files, specific libraries, expected behaviors, new features, planning steps, files to modify, and request purposes. One participant's prompt included seven different context types when implementing a full-stack feature for user score annotations.

The largest plans developed by participants contained more than 70 steps, but experienced developers never instructed agents to work on more than five or six steps simultaneously. On average, participants asked agents to handle only 2.1 steps at a time before validating results and continuing.
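
The small-batch pattern described above can be sketched in a few lines of Python. This is an illustrative sketch only, not tooling from the study: `Plan`, `run_plan`, `run_agent`, and `validate` are hypothetical names, and the default batch size of two simply mirrors the reported average of 2.1 steps per request.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterator, List


@dataclass
class Plan:
    """Hypothetical ordered plan; each step is a short natural-language instruction."""
    steps: List[str]
    completed: List[str] = field(default_factory=list)


def batches(steps: List[str], size: int) -> Iterator[List[str]]:
    """Yield consecutive slices of at most `size` steps."""
    for start in range(0, len(steps), size):
        yield steps[start:start + size]


def run_plan(
    plan: Plan,
    run_agent: Callable[[List[str]], None],  # hypothetical: sends one small batch to an agent
    validate: Callable[[List[str]], bool],   # hypothetical: developer review or test run
    batch_size: int = 2,
) -> bool:
    """Delegate a few steps at a time; stop at the first batch that fails validation."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for batch in batches(plan.steps, batch_size):
        run_agent(batch)
        if not validate(batch):
            # Control returns to the developer before anything else is delegated.
            return False
        plan.completed.extend(batch)
    return True
```

Here `validate` stands in for whatever check the developer trusts, such as reading the diff or running the test suite. A 70-step plan with `batch_size=2` becomes 35 separate hand-offs, each followed by a review point, rather than one long autonomous run.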

"My prompts are always very specific, with as much info as possible for example - filenames, function names, variable names, error messages etc.," one respondent explained. "I focus on ensuring prompt has clear statements on what I [want] the LLM to look at and what I want it to do. Small and specific modifications."

The research identified clear patterns in task suitability. Experienced developers found agents appropriate for accelerating straightforward, repetitive, and scaffolding tasks. Thirty-five survey respondents mentioned productivity acceleration, 33 cited suitability for small or simple tasks, and 28 praised agents for following well-defined plans.

"Once the plan exists, the agent can work really cool and I'm just wondering how he does such great things!" one developer noted. Respondents were equally clear about the precondition, however: "IF given clear instructions they do my work."

Agents proved suitable for writing tests (19 respondents versus 2 negative responses), general refactoring (18 versus 3), documentation (20 versus 0), and simple debugging (12 versus 3). Backend and frontend developers both reported agent utility in their domains, with 14 to 1 and 10 to 3 positive-to-negative response ratios respectively.

But as task complexity increased, agent suitability decreased sharply. Three respondents found agents suitable for complex tasks versus 16 who found them unsuitable. Business logic and domain knowledge requirements created particular challenges, with 15 respondents reporting agents unsuitable for such work versus only two reporting suitability.

"Bad news guys wrap it up," one sarcastic X response to Domingos's post suggested, while another countered: "But did you try opus 4.5?" The exchanges highlighted division within the developer community about current AI capabilities.

Twelve survey respondents emphasized that agents cannot replace human expertise or decision making. "You need to master certain skills and judgment to verify whether the generated code is correct," one stated. Another explained: "I do everything with assistance but never let the agent be completely autonomous—I am always reading the output and steering."

No observed participant and no survey respondent indicated that agents could operate completely autonomously. The research found universal agreement that human oversight remains essential, particularly for production code and systems with real-world impact.

"I never finished the task because my use case is complicated and [the agent] performed poorly throughout most of it," one developer reported. Another noted: "If the function is complex (requires several dependencies), agent tends to hallucinate."

However, one participant used an agent to complete a React migration in three days, work that would otherwise have required two weeks: "And at the end of three days, I don't think we've caught a single bug or design mistake ever since then." This suggests that some seemingly complex tasks remain suitable for agents when they involve well-understood frameworks and clear transformation patterns.

Software quality attributes drove experienced developers' decision-making when using agents. Sixty-seven survey respondents mentioned caring about software quality attributes when working with agents, versus 37 mentioning non-quality attributes such as productivity enhancement. The most frequently mentioned qualities were correctness (20 mentions) and readability (18 mentions), followed by modularity, performance, deployability, reliability, and maintainability.

"Most AI agents produce messy and unnecessarily long code when not given enough instructions," one developer noted. Another stated: "Almost everything it performs great on. It just does not one shot things."

Integration with existing code emerged as a significant challenge. Seventeen respondents reported difficulties with legacy code and code integration versus only three reporting success. "Often wrote custom code for pre-existing functions in core libraries," one developer explained. Another found the agent "has difficult[y] ensuring features integrate within the entire architecture end to end."

Planning and design with agents divided respondents. Thirteen found agents suitable for high-level architecture planning, while 23 avoided agents for this purpose. "With architecture in general I use AI much less, other than being helpful for research," one developer stated. Another countered: "For a new problem, I just engage with some LLM, get ideas."

The divide appeared to stem from different usage patterns. Developers who avoided agents for planning cited concerns about business logic, domain knowledge requirements, and the importance of architectural decisions. Those who embraced agent-assisted planning emphasized using AI as a collaborative brainstorming tool rather than an autonomous decision maker.

"I think it saves me time in the long run, and it also saves me from re-explaining everything later," one developer said of editing code manually instead of iterating with the agent. The remark reflects experienced developers' cost-benefit judgment about when agent interaction adds value and when direct manual coding is more efficient.

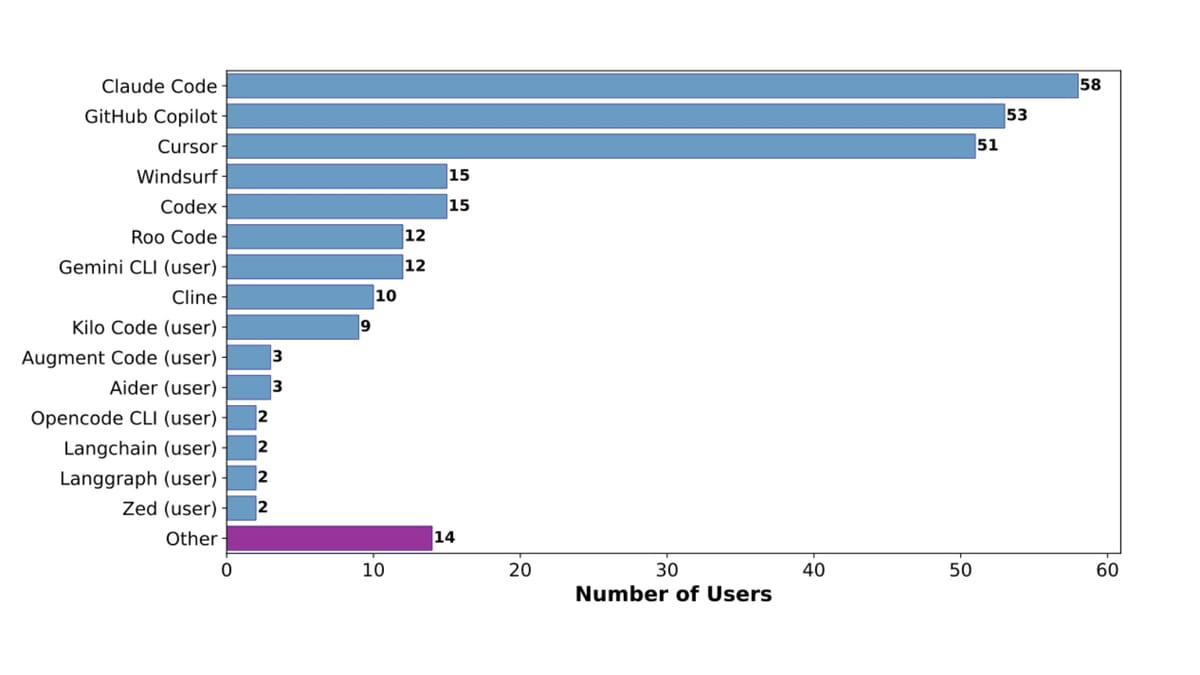

Tool selection patterns showed Claude Code (58 out of 99 respondents), GitHub Copilot (53), and Cursor (51) as the most widely adopted platforms. Twenty-nine respondents reported using multiple agents to parallelize implementation tasks or complement different agent capabilities, managing parallel work through traditional version control systems like Git.

Developers demonstrated sophisticated prompting techniques, including screenshots, file references, examples, step-by-step thinking, and external information supplied through Model Context Protocol (MCP) servers. Some used external agents solely to improve prompt quality. Eighteen respondents configured user rules to enforce project specifications, provide language-agnostic guidelines, or correct agent behavior based on prior interactions.

"Always treat AI as a smart kid that knows nothing about you and outside world," one developer advised. "So you must write the context, write strict rules (do's and don't), write the guides, and finally write the task."

Three participants maintained external plan files with context across sessions, allowing complex project coordination while keeping agents focused on manageable subtasks. The practice reflects experienced developers' systematic approach to agent management rather than conversational iteration.
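
One way to picture such a plan file is a Markdown checklist that persists between agent sessions. The sketch below assumes a hypothetical `PLAN.md` convention of one `- [ ]` or `- [x]` checkbox per step; the helper names are likewise hypothetical, and the paper does not describe the participants' actual file formats.

```python
import re
from pathlib import Path
from typing import List, Tuple

# Hypothetical plan-file convention: one Markdown checkbox per step,
# e.g. "- [ ] Add score column" (pending) or "- [x] Add score column" (done).
CHECKBOX = re.compile(r"^- \[( |x)\] (.+)$")


def read_plan(path: Path) -> List[Tuple[bool, str]]:
    """Return (done, step) pairs for every checkbox line in the plan file."""
    steps = []
    for line in path.read_text(encoding="utf-8").splitlines():
        match = CHECKBOX.match(line.strip())
        if match:
            steps.append((match.group(1) == "x", match.group(2)))
    return steps


def next_steps(path: Path, limit: int = 2) -> List[str]:
    """Pick the next few unfinished steps to paste into a fresh agent session."""
    pending = [step for done, step in read_plan(path) if not done]
    return pending[:limit]


def mark_done(path: Path, step: str) -> None:
    """Tick off a step once the developer has validated it.

    Only the matching checkbox line is rewritten (without its indentation);
    all other lines are kept verbatim.
    """
    lines = path.read_text(encoding="utf-8").splitlines()
    updated = [
        f"- [x] {step}" if line.strip() == f"- [ ] {step}" else line
        for line in lines
    ]
    path.write_text("\n".join(updated) + "\n", encoding="utf-8")
```

Because the file, not the chat history, carries the context, a new session can start from `next_steps()` and stay focused on a small subtask while the overall plan remains under the developer's control.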

Verification strategies varied but remained ubiquitous. Participants checked UI functionality, ran manual tests, verified plans line-by-line against requirements, reviewed code structure and syntax, inspected developer tools, tested in terminals, examined linter feedback, and executed test suites. Five participants reported increased willingness to conduct systematic testing while using agents, with one noting higher test coverage than before "because it's part of the workflow now."

However, automated testing for UI interactions, database creation, and remote applications remained challenging. "I definitely think [agents] are a productivity booster," one developer summarized. "But I make sure to read every line of code before accepting."

Debugging with agents received mixed reviews. While 12 respondents found general debugging suitable versus 8 unsuitable, opinions on debugging complexity varied significantly. "Good at loop iteration on running - fixing tests," one noted positively. Another reported: "No LLMs [debugged] well."

System-level debugging generated stronger skepticism. Some developers would not "waste [their] time" with agents for such tasks, while others still trusted agents despite recognizing hallucination potential. The divergence suggests debugging success depends heavily on problem characteristics and developer expertise in recognizing when to employ or abandon AI assistance.

Performance-critical, deployment, high-stakes, and security-critical scenarios received consistent negative ratings. Nine respondents found agents unsuitable for performance optimization versus three who found them suitable. Eight avoided agents for CI and deployment infrastructure. Eight avoided agents for high-stakes or privacy-sensitive tasks, and five specifically mentioned security-critical code as unsuitable for agent assistance.

"Tasks such as high stakes decision making, sensitive data handling without safeguard etc" should avoid agents, one developer stated. Another explained avoiding agents when "implementing critical components that need to be performant or secure."

Despite limitations and careful control, 77 out of 99 survey respondents rated their enjoyment of working with agents as either "pleased" or "extremely pleased" compared to working without agents, on a six-point scale. Only five respondents reported displeasure.

"It felt like driving a F1 car. While it also felt like getting stuck in traffic jam a lot, I still felt optimistic about it," one developer described. Another stated: "I've had more fun working in this codebase, especially since I started using Cursor, than I've had writing code in a long time."

Four observed participants and 13 survey respondents expressed belief that AI-assisted coding workflows would become ubiquitous. "I don't want to go back to writing code manually. Now it seems like such a waste," one declared. Another urged: "Get into agentic AI as soon as possible otherwise you will be fall behind soon."

Yet positive sentiment coexisted with insistence on control. "I think AI agents are amazing as long as you are the driver & reviewing its work," one respondent summarized. "AI agents become problematic once you're not making them adhere to engineering principles that have been established for decades."

Developers viewed agents as collaborators rather than replacements. Twelve survey respondents mentioned using agents as "rubber duckies" for thinking through problems. Thirty-four reported brainstorming and abstract thinking with agents while maintaining strategic control.

"It's like full time rubber ducking," one developer explained. Another described agents as "like asking a co-developer for questions and insights—without the fear of being judged."

The research contradicts social media narratives suggesting some developers successfully employ dozens of autonomous agents simultaneously on massive software projects. While 31 survey respondents reported using multiple agents, coordination remained manual and agents worked on distinct parallelizable tasks rather than autonomously coordinating complex interdependencies.

Software engineering expertise emerged as critical for effective agent use. Sixty-five survey respondents cited existing development expertise as essential for working successfully with agents. Skills in code comprehension, debugging, architecture clarity, and translating product specifications to code all proved necessary.

"My observation so far is spending time to understand code already generated helps massively," one developer noted. Another emphasized: "First understanding coding and then using AI."

The findings align with a July 2025 study showing that experienced open source maintainers were 19% slower when allowed to use AI, despite estimating that they were 20% faster. Separately, an agentic system deployed in an issue tracker saw only 8% of its invocations result in merged pull requests.

Implications extend beyond individual productivity to questions about junior developer training and skill development. "Agents are gonna do a faster and better job than I would ever do in terms of creating websites," one participant acknowledged while recognizing this capability could undermine skill acquisition for those without existing expertise.

"[Agents] can also be used as a crutch to have people who don't know what they're doing generate something that seemingly works, but it is kind of a nightmare to maintain anything," another warned.

The research population's demographics skewed heavily male (97% of survey respondents, 92% of observation participants), slightly higher than the 91% global average for professional developers. This gender imbalance likely introduces selection bias toward certain usage patterns and perspectives.

Survey recruitment through GitHub may have introduced bias toward developers active on public repositories and disproportionately involved in AI/ML projects. Observation sessions lasted only 45 minutes per participant, preventing longitudinal analysis of agent use patterns or observation of complete development cycles.

The findings reflect AI capabilities as of mid-to-late 2025; the study was conducted between August and October. Continued improvements in language model capabilities, tool design, and developer prompting expertise may alter these patterns. However, the research establishes a baseline for comparing future developments and understanding current limitations.

Marketing professionals face parallel questions about AI automation and skill preservation. As platforms deploy AI agents for campaign management and content generation, maintaining strategic expertise, creative judgment, and analytical capabilities becomes critical for long-term career viability and campaign effectiveness.

The tension between productivity acceleration and competency maintenance that programming demonstrates applies broadly across professional domains adopting AI assistance. Success requires neither rejection nor uncritical embrace of AI tools, but rather thoughtful integration that preserves human expertise while capturing automation benefits.

"I like coding alongside agents. Not vibe coding. But working with," one developer stated, capturing the consensus among experienced professionals. The future of professional software development appears to involve human-AI collaboration under careful human control rather than delegation to autonomous agents.

Subscribe PPC Land newsletter ✉️ for similar stories like this one

Timeline

- January 5, 2026: Pedro Domingos posts on X that "AI coding tools don't work for business logic or with existing code," sparking debate

- January 5, 2026: Research paper "Professional Software Developers Don't Vibe, They Control" released showing experienced developers maintain strict control over AI agents

- August-October 2025: UC San Diego and Cornell University researchers conducted 13 field observations and surveyed 99 experienced developers

- July 2025: Study shows experienced open source maintainers 19% slower when using AI despite believing they were faster

- March 2025: Anthropic launched commercial version of Claude Code

- 2021: GitHub Copilot introduced as first major AI coding assistant

Summary

Who: Experienced software developers with 3+ years of professional experience, researchers from UC San Diego and Cornell University, and Pedro Domingos from University of Washington

What: Research revealed experienced developers maintain strict control over AI coding agents through strategic planning and validation rather than adopting "vibe coding" approaches that delegate design and implementation decisions to AI

When: Study conducted August-October 2025, paper released January 5, 2026, coinciding with renewed debate sparked by Domingos's January 5 post questioning AI coding tool effectiveness

Where: Field observations conducted remotely via Zoom, survey distributed to GitHub users globally with responses from North America, Europe, Asia, South America, Africa, and Oceania

Why: Experienced developers prioritize software quality attributes, including correctness, readability, and maintainability, and recognize that AI agents suit straightforward tasks but not complex logic, business requirements, or autonomous operation without human oversight