Google has introduced multimodal search capabilities to its experimental AI Mode, allowing users to search with images in addition to text. The announcement, made on April 7, 2025, builds on the launch of AI Mode in March, when the feature was initially made available to Google One AI Premium subscribers.

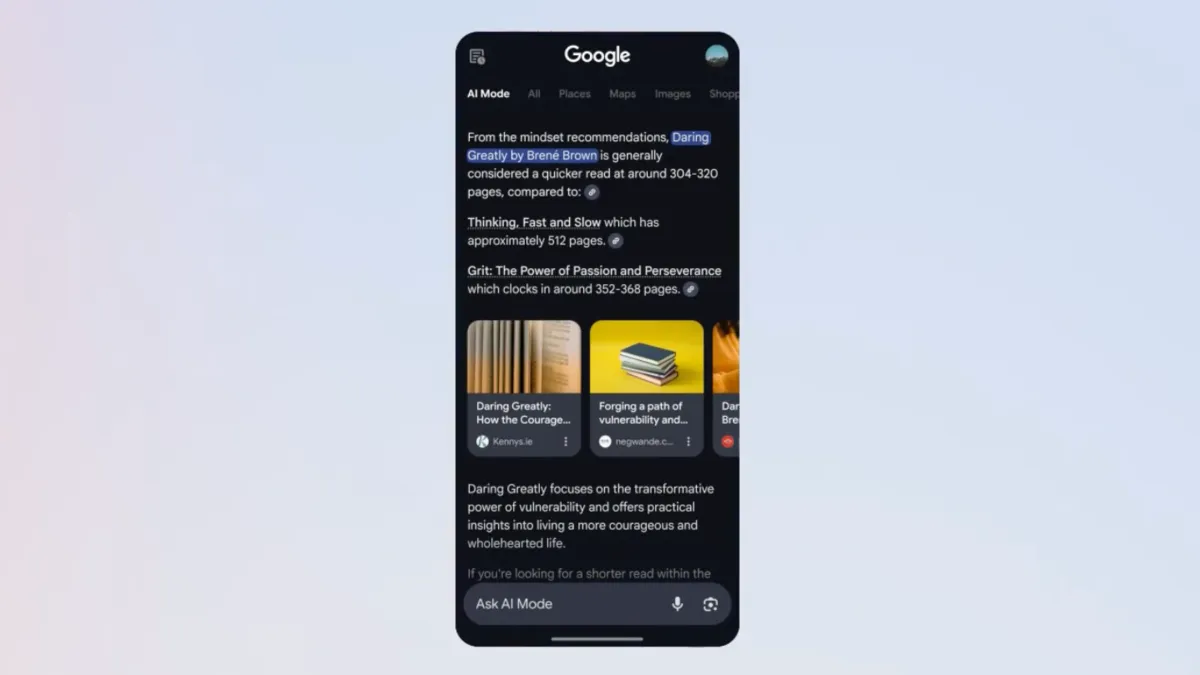

Google has been rapidly advancing its search capabilities in recent months. According to Robby Stein, VP of Product at Google Search, the company has expanded AI Mode's functionality to include multimodal understanding, enabling users to "snap a photo or upload an image, ask a question about it and get a rich, comprehensive response with links to dive deeper."

The technology combines the company's existing visual search capabilities in Google Lens with a custom version of its Gemini AI model. This integration enables AI Mode to understand complete scenes in images, including contextual relationships between objects and their specific characteristics such as materials, colors, shapes, and arrangements.

Multimodal AI represents a significant technological advancement in artificial intelligence capabilities. According to IBM's technical definition published in July 2024, "Multimodal AI refers to machine learning models capable of processing and integrating information from multiple modalities or types of data. These modalities can include text, images, audio, video and other forms of sensory input."

The key technical innovation behind AI Mode's multimodal capabilities is what Google calls its "query fan-out technique." This approach issues multiple queries about both the image as a whole and specific objects within it, drawing on more comprehensive information than a traditional search does. Google describes the resulting responses as "incredibly nuanced and contextually relevant."
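To make the fan-out idea concrete, here is a minimal sketch in Python. It is not Google's implementation, which has not been published. The `DetectedObject` class, the `fan_out_queries` and `run_fan_out` functions, the `fake_search` backend, and the sample book titles are all hypothetical names chosen for illustration. The sketch only shows the general shape: one query about the whole image, one per recognized object, and a merged result list.

```python
from dataclasses import dataclass


@dataclass
class DetectedObject:
    """One object recognized in the image (hypothetical structure)."""
    label: str


def fan_out_queries(scene_description, objects, question):
    """Build one query about the whole image plus one query per detected object."""
    queries = [f"{question} {scene_description}"]
    for obj in objects:
        queries.append(f"{question} {obj.label}")
    return queries


def run_fan_out(queries, search):
    """Run every query through `search` and merge the results,
    dropping duplicates while keeping first-seen order."""
    seen = set()
    merged = []
    for query in queries:
        for result in search(query):
            if result not in seen:
                seen.add(result)
                merged.append(result)
    return merged


def fake_search(query):
    """Stand-in search backend (assumption): one placeholder result per query."""
    return [f"result for: {query}"]


# Illustrative input: a bookshelf photo with two recognized titles.
books = [DetectedObject("Dune"), DetectedObject("Neuromancer")]
queries = fan_out_queries(
    "bookshelf with science fiction novels",
    books,
    "similar highly rated books to",
)
results = run_fan_out(queries, fake_search)
```

In this sketch a shelf with two recognized books produces three queries: one for the scene and one for each title. A real system would presumably also rank and summarize the merged results, which is outside the scope of this example.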

Robby Stein explained that AI Mode "builds on our years of work on visual search and takes it a step further. With Gemini's multimodal capabilities, AI Mode can understand the entire scene in an image, including the context of how objects relate to one another and their unique materials, colors, shapes and arrangements."

AI Mode's development and user adoption

Google initially revealed AI Mode on March 5, 2025, as an experimental feature primarily targeting complex, exploratory queries. Early data shows that AI Mode queries are typically twice as long as traditional search queries, with users leveraging the system for open-ended questions and complicated tasks.

Stein noted in March that AI Mode "is particularly helpful for questions that need further exploration, comparisons and reasoning. You can ask nuanced questions that might have previously taken multiple searches — like exploring a new concept or comparing detailed options — and get a helpful AI-powered response with links to learn more."

The technology has now been made available to "millions more Labs users in the U.S.," expanding beyond the initial audience of Google One AI Premium subscribers. To access the feature, users need to sign up through Google's Labs program, which serves as the company's platform for experimental features.

The development of AI Mode and its multimodal capabilities represents Google's response to the increasing sophistication of user queries and expectations. "Since launching AI Mode to Google One AI Premium subscribers, we've heard incredibly positive feedback from early users about its clean design, fast response time and ability to understand complex and nuanced questions," Stein stated in the April announcement.

Technical foundations of multimodal AI

The multimodal capabilities in Google's AI Mode are built on significant advances in artificial intelligence research. According to IBM's documentation, multimodal AI works by integrating and processing diverse types of data, utilizing data fusion techniques that can be categorized as early, mid, or late fusion depending on when in the process different modalities are combined.
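The difference between early and late fusion can be illustrated with a toy Python sketch. This is a simplified illustration under stated assumptions, not how Gemini or AI Mode works internally: the feature vectors are made-up numbers, and `linear_score` is a hypothetical stand-in for a trained model. Mid fusion, which combines intermediate representations partway through the model, is not shown.

```python
def linear_score(features, weights):
    """Weighted sum of a feature vector; stands in for a trained model."""
    return sum(f * w for f, w in zip(features, weights))


def early_fusion(text_features, image_features, joint_weights):
    """Early fusion: concatenate the raw features, then apply one joint model."""
    combined = text_features + image_features
    return linear_score(combined, joint_weights)


def late_fusion(text_features, image_features, text_weights, image_weights):
    """Late fusion: score each modality separately, then average the scores."""
    text_score = linear_score(text_features, text_weights)
    image_score = linear_score(image_features, image_weights)
    return (text_score + image_score) / 2


# Made-up feature vectors for one text query and one image.
text_vec = [1.0, 0.0]
image_vec = [0.5, 0.5]

early = early_fusion(text_vec, image_vec, [1.0, 1.0, 1.0, 1.0])
late = late_fusion(text_vec, image_vec, [1.0, 1.0], [1.0, 1.0])
```

The design trade-off is where the modalities meet: early fusion lets a single model see all features at once, while late fusion keeps per-modality models independent and only combines their outputs.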

A 2022 research paper from Carnegie Mellon University identified three key characteristics of multimodal AI: heterogeneity, connections, and interactions. Heterogeneity refers to the diverse qualities and structures of different data types, connections relate to complementary information shared between modalities, and interactions describe how different modalities affect each other when combined.

Google's implementation in AI Mode demonstrates several of these characteristics. By recognizing objects in images and issuing queries about both individual elements and the overall scene, the system establishes connections between visual and textual data. The query fan-out technique represents a form of interaction between these modalities, where information from one helps inform the interpretation of another.

Stein provided a concrete example in his announcement: "In this example, AI Mode grasps the subtleties in the image, precisely identifies each book on the shelf and issues queries to learn about the books and similar recommendations that are highly rated."

Implications for marketers and content creators

The introduction of multimodal capabilities in Google's AI Mode has significant implications for digital marketing and content creation strategies. As search evolves beyond text, organizations will need to consider how their visual content can be optimized for AI-powered search systems.

This development suggests that the future of search will increasingly involve complex, multimodal interactions. Rather than simple keyword matching, search engines are moving toward understanding content at a deeper semantic level across different media types.

For marketers, this presents both challenges and opportunities. Visual content will need to be more carefully considered for its searchability, potentially requiring more descriptive file names, comprehensive alt text, and contextual placement that helps AI systems understand relationships between images and surrounding content.
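As a starting point, a page's images can be audited for the two most mechanical items above, alt text and file names. The Python sketch below uses only the standard library. The `ImageAudit` class, the `audit_images` function, and the `GENERIC_NAME` pattern are hypothetical helpers, and the checks are editorial heuristics assumed for illustration, not documented Google ranking criteria. An empty `alt` attribute can be legitimate for purely decorative images, so flagged items need human review.

```python
from html.parser import HTMLParser
import os
import re

# Heuristic (assumption): camera or export default names such as "image-1" or "DSC_0042".
GENERIC_NAME = re.compile(r"^(img|image|dsc|photo|screenshot)[-_ ]?\d*$", re.IGNORECASE)


class ImageAudit(HTMLParser):
    """Collects (src, issue) pairs for every <img> tag in a document."""

    def __init__(self):
        super().__init__()
        self.issues = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src") or ""
        alt = (attributes.get("alt") or "").strip()
        # File name without directory or extension, e.g. "image-1".
        stem = os.path.splitext(os.path.basename(src))[0]
        if not alt:
            self.issues.append((src, "missing alt text"))
        if GENERIC_NAME.match(stem):
            self.issues.append((src, "generic file name"))


def audit_images(html):
    """Return a list of (src, issue) pairs found in the given HTML string."""
    parser = ImageAudit()
    parser.feed(html)
    parser.close()
    return parser.issues


sample = (
    '<p><img src="image-1.png">'
    '<img src="oak-bookshelf-with-novels.jpg" '
    'alt="Oak bookshelf filled with science fiction novels"></p>'
)
issues = audit_images(sample)
```

In the sample, the first image is flagged twice (no alt text and a generic file name), while the second passes both checks.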

Content creators may need to develop new skills in producing materials that work effectively across modalities, ensuring that text and images complement each other in ways that multimodal AI can interpret and leverage.

Timeline

- March 5, 2025: Google introduces AI Mode as an experimental feature in Labs for Google One AI Premium subscribers

- March 2025: Google expands AI Overviews to teen users and removes sign-in requirement

- March 2025: Google implements Gemini 2.0 for AI Overviews in the U.S., focused on coding, advanced math, and multimodal queries

- April 7, 2025: Google announces multimodal search capabilities in AI Mode, allowing users to search with images

- April 2025: Google expands access to "millions more Labs users in the U.S."