Google unveiled significant enhancements to its shopping experience on May 20, 2025, introducing AI Mode with virtual try-on technology that works with users' personal photographs. The announcement represents a substantial expansion of the company's artificial intelligence capabilities in e-commerce.


According to Lilian Rincon, Vice President of Product Management at Google, the new AI Mode experience addresses fundamental shopping challenges: "Shopping comes with lots of questions: How do I choose a pair of hiking boots? Will these pants look good on me? If I buy this today, will the price just drop tomorrow?" These features are designed to provide answers through advanced AI integration.

The Shopping Graph now contains more than 50 billion product listings from retailers worldwide, including both global brands and local businesses. Each listing includes comprehensive details such as reviews, prices, color options and availability information. Google refreshes more than 2 billion of these product listings every hour to ensure accuracy and timeliness.
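To put those figures in perspective, here is a rough back-of-the-envelope calculation. It treats both published numbers as exact and assumes refreshes are spread evenly across all listings; Google has stated neither, and both figures are given as "more than," so the results are indicative only.

```python
# Back-of-the-envelope arithmetic on the published Shopping Graph figures.
# Assumptions (not from Google): both figures are exact, and refreshes are
# spread evenly over all listings.
TOTAL_LISTINGS = 50_000_000_000
REFRESHED_PER_HOUR = 2_000_000_000

refreshes_per_second = REFRESHED_PER_HOUR / 3600
hours_for_full_pass = TOTAL_LISTINGS / REFRESHED_PER_HOUR
share_refreshed_per_hour = REFRESHED_PER_HOUR / TOTAL_LISTINGS

print(f"{refreshes_per_second:,.0f} refreshes per second")
print(f"{hours_for_full_pass:.0f} hours to touch every listing once")
print(f"{share_refreshed_per_hour:.0%} of listings refreshed each hour")
```

Under those assumptions, the stated rate works out to roughly 555,556 refreshes per second, or about 4% of the catalog per hour, which would take about 25 hours to touch every listing once.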

Related Stories

May 22, 2024: Google to release new AI Tools for retailers - Google introduced Virtual Try-On Technology for Shopping Ads, expanding functionality to apparel ads for men's and women's tops

October 19, 2024: Google Shopping's AI makeover promises personalized, efficient experience - Google announced AI-powered Shopping platform transformation with virtual try-on options powered by generative AI

November 26, 2024: Google enhances Holiday Shopping experience with AI-powered Lens and Maps features - Google expanded AI shopping capabilities with in-store product recognition and local inventory search

April 28, 2025: Google launches AI-powered search filters for Merchant Center - Google introduced natural language search functionality for product data management, indicating broader AI integration across shopping tools

Enhanced browsing through AI Mode

The new AI Mode shopping experience combines Gemini artificial intelligence capabilities with Google's Shopping Graph infrastructure. When users express interest in products like "a cute travel bag," the system recognizes the request for visual inspiration and generates a browsable panel of personalized images and product listings.

The system performs what Google terms "query fan-out" - running multiple simultaneous searches to understand specific requirements. For example, when a user searches for bags suitable for Portland, Oregon in May, AI Mode analyzes weather patterns and travel needs to suggest waterproof options with accessible pockets. The right-hand panel updates dynamically as users refine their searches, displaying relevant products and images while helping them discover new brands.
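Google has not published how query fan-out is implemented. As a purely illustrative sketch of the general idea, the Python below runs several sub-queries concurrently and ranks products by how many sub-queries they match. The catalog, the `search` function and the ranking rule are all hypothetical stand-ins, not Google's method or API.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical stand-in catalog; a real system would query a product index.
CATALOG = [
    {"name": "Waterproof duffel", "tags": {"travel bag", "waterproof"}},
    {"name": "Canvas tote", "tags": {"travel bag"}},
    {"name": "Rain-ready backpack", "tags": {"waterproof", "accessible pockets"}},
    {"name": "Leather wallet", "tags": {"accessories"}},
]


def search(sub_query: str) -> list[str]:
    """Hypothetical search: return names of products tagged with sub_query."""
    return [p["name"] for p in CATALOG if sub_query in p["tags"]]


def fan_out(sub_queries: list[str]) -> list[str]:
    """Run every sub-query concurrently, then rank products by hit count."""
    with ThreadPoolExecutor() as pool:
        result_lists = list(pool.map(search, sub_queries))
    hits: dict[str, int] = {}
    for names in result_lists:
        for name in names:
            hits[name] = hits.get(name, 0) + 1
    # Products matching more sub-queries come first; ties sort by name.
    return sorted(hits, key=lambda name: (-hits[name], name))


# Sub-queries a system might derive from "travel bag for Portland in May".
ranked = fan_out(["travel bag", "waterproof", "accessible pockets"])
print(ranked)
# ['Rain-ready backpack', 'Waterproof duffel', 'Canvas tote']
```

The point of the sketch is only the shape of the technique: one broad request becomes several narrower searches whose results are merged into a single ranked list.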

According to the company, these shopping features will roll out to AI Mode in the United States over the coming months, though specific timeline details were not provided.

Agentic checkout system implementation

Google introduced what it calls "agentic checkout" functionality, allowing automated purchasing when prices meet user-specified criteria. Users can tap "track price" on any product listing and set preferences for size, color and maximum price point. The system monitors for price drops and sends notifications when conditions are met.
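The matching logic behind such a price tracker can be sketched in a few lines of Python. This is a minimal illustration, assuming the preferences named in the announcement (size, color and maximum price); the class names, the `in_stock` field and the `should_notify` function are hypothetical and do not reflect Google's implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrackingPreference:
    """What the shopper asked to be notified about."""
    size: str
    color: str
    max_price: float


@dataclass(frozen=True)
class Offer:
    """A current listing for the tracked product (hypothetical shape)."""
    size: str
    color: str
    price: float
    in_stock: bool = True  # assumption: out-of-stock offers never notify


def should_notify(pref: TrackingPreference, offer: Offer) -> bool:
    """Return True when the offer satisfies every tracked condition."""
    return (
        offer.in_stock
        and offer.size == pref.size
        and offer.color.lower() == pref.color.lower()
        and offer.price <= pref.max_price
    )


pref = TrackingPreference(size="M", color="green", max_price=40.0)
offers = [
    Offer(size="M", color="Green", price=45.0),   # too expensive
    Offer(size="L", color="green", price=35.0),   # wrong size
    Offer(size="M", color="green", price=38.5),   # matches
]
matches = [offer for offer in offers if should_notify(pref, offer)]
print(matches)
```

In this sketch a notification fires only when every condition holds at once, which mirrors the article's description of alerts being sent "when conditions are met."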

When users receive price drop notifications and confirm purchase intent, they can tap "buy for me" to complete transactions. Google handles the entire checkout process automatically, adding items to merchant shopping carts and completing purchases using Google Pay credentials stored in user accounts.

This agentic checkout feature will be deployed gradually across product listings in the United States during the coming months, according to Google's announcement.

Virtual try-on technology advancement

The virtual try-on capability represents a technical breakthrough in fashion e-commerce. According to Google's documentation, users can now "virtually try billions of apparel listings on yourself, just by uploading a photo." The technology works at unprecedented scale across Google's Shopping Graph inventory.

The system employs a custom image generation model specifically designed for fashion applications. This model understands human body mechanics and clothing characteristics, including "how different materials fold, stretch and drape on different bodies." The technology preserves these physical properties when applying clothing to poses captured in user photographs.

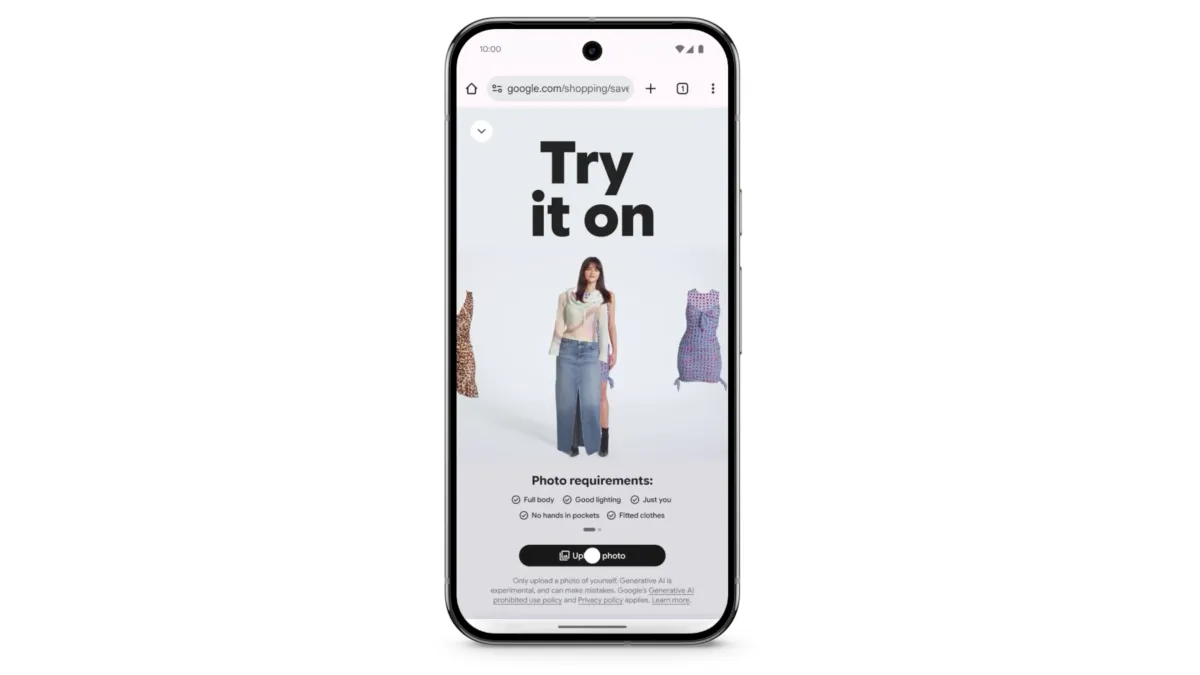

The virtual try-on experiment launched in Search Labs on May 20, 2025, with availability for shirts, pants, skirts and dresses. Users access the feature by selecting the "try it on" icon on product listings, then uploading full-length photographs of themselves. The system generates realistic visualizations showing how garments would appear on the user's body.

According to Google's implementation guide, optimal results require "a full-body shot with good lighting and fitted clothing." Users can save generated looks or share them with others before making purchase decisions.

Technical infrastructure and scale

Google's virtual try-on system operates on what the company describes as "state-of-the-art technology" that is "the first of its kind working at this scale." The underlying architecture processes billions of clothing items from the Shopping Graph database, applying them to user-submitted photographs through advanced computer vision and machine learning techniques.

The fashion-specific image generation model represents significant technical advancement in understanding clothing physics and human anatomy. The system maintains fabric authenticity when rendering clothing on different body types and poses, preserving material characteristics such as drape, stretch and fold patterns.

Processing occurs rapidly, with the system generating try-on visualizations "within moments" of photo upload, according to Google's technical specifications. The scale of operation extends across the entire Shopping Graph inventory, encompassing apparel from global retailers and independent merchants.

Implementation timeline and availability

Google announced a phased rollout schedule for the new features. Virtual try-on functionality became available in Search Labs on May 20, 2025, marking the beginning of public access. AI Mode shopping features and agentic checkout capabilities will deploy "in the coming months" across the United States market.

The company has not specified exact dates for feature availability or expansion to international markets. The Search Labs environment serves as the testing ground for virtual try-on technology before broader deployment across Google's shopping platform.

Users must opt into the Search Labs "try on" experiment to access virtual fitting room capabilities. The feature currently supports shirts, pants, skirts and dresses, with potential expansion to other clothing categories based on user adoption and feedback.

Why this matters

These developments carry significant implications for digital marketing professionals. The integration of AI-powered shopping experiences fundamentally changes how consumers interact with product listings and make purchase decisions. Marketing teams must adapt strategies to accommodate new consumer behaviors enabled by virtual try-on technology and automated purchasing systems.

The agentic checkout system introduces price monitoring and automated purchasing capabilities that could impact traditional conversion funnels. Marketing professionals need to understand how price tracking notifications and automated purchases affect customer journey analytics and attribution models.

Virtual try-on technology reduces barriers to online apparel purchases by addressing fit and appearance concerns. This capability may increase conversion rates for fashion retailers while changing how consumers evaluate clothing options online. Marketing campaigns may need to emphasize the virtual try-on experience as a key differentiator.

The Shopping Graph's expansion to more than 50 billion product listings creates new opportunities for product visibility and discovery. Retailers and marketers must optimize product data to ensure compatibility with AI Mode's query fan-out capabilities and visual search functionalities.

Competitive landscape impact

Google's announcements position the company more aggressively in e-commerce technology competition. The combination of AI Mode browsing, virtual try-on capabilities and automated purchasing creates a comprehensive shopping ecosystem that challenges existing e-commerce platforms.

The technical sophistication of the virtual try-on system, particularly its ability to process billions of items at scale, establishes new benchmarks for fashion technology. Competitors will need to develop comparable capabilities to maintain market position in AI-driven shopping experiences.

The integration of Gemini AI models with shopping functionality demonstrates Google's strategy of leveraging artificial intelligence across multiple product categories. This approach creates synergies between search, AI and e-commerce that may be difficult for competitors to replicate.

Industry data and trends

According to Google, the Shopping Graph draws product information from retailers ranging from major corporations to local businesses. Refreshing more than 2 billion product listings every hour is intended to keep pricing and availability data accurate and timely.

The virtual try-on technology builds upon Google's existing computer vision capabilities, extending them to fashion applications with specialized understanding of clothing physics and human anatomy. The scale of implementation across billions of apparel items represents a significant technical achievement in AI-powered retail technology.

Search Labs serves as Google's testing environment for experimental features before public release. The virtual try-on experiment provides valuable user feedback and usage data to inform broader deployment strategies across Google's shopping platform.


Technical specifications and requirements

The virtual try-on system requires full-length photographs with adequate lighting and fitted clothing for optimal results. Users must upload images showing their complete body to enable accurate garment visualization and fitting simulation.

The underlying AI model processes multiple data points including body measurements, pose detection and clothing characteristics to generate realistic try-on experiences. The system maintains clothing authenticity while adapting to different body types and positions captured in user photographs.

Processing occurs through Google's cloud infrastructure, with results delivered rapidly to maintain user engagement. The system integrates with existing Google Pay functionality for seamless checkout experiences when users decide to purchase items after virtual try-on sessions.

Timeline

May 20, 2025: Google announces AI Mode shopping experience with virtual try-on technology using personal photos

May 20, 2025: Virtual try-on experiment launches in Search Labs for U.S. users

Coming months: AI Mode shopping features to roll out across United States

Coming months: Agentic checkout functionality to deploy for U.S. product listings