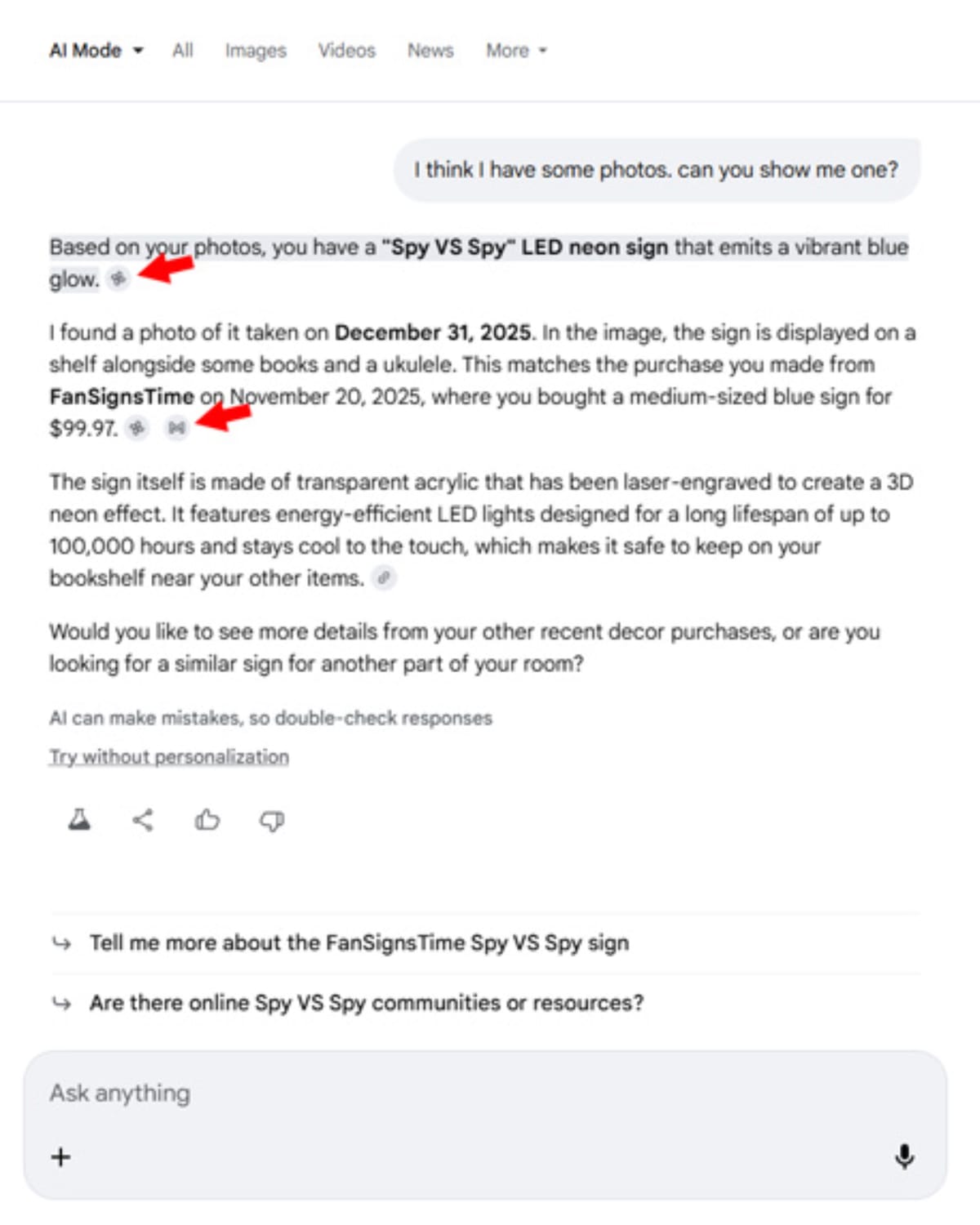

When Google's AI assistant taps into personal emails, photographs, or search history to craft responses, small icons now reveal exactly which apps contributed information. The visual attribution system, deployed across Google's Personal Intelligence feature, displays Gmail, Google Photos, YouTube, and Search icons directly beside AI-generated text that draws from connected applications.

SEO consultant Glenn Gabe documented the implementation on January 28, 2026, publishing examples showing how icons appear alongside responses in AI Mode. "You can tap those for citations and then click through to the information/photos," according to Gabe's analysis. The icons function as interactive elements - users can select them to view source citations and navigate to the underlying content that informed the AI's response.

BTW, I also cover the new icons in AI Mode when Personal Intelligence is used. You can tap those for citations and then click through to the information/photos.

— Glenn Gabe (@glenngabe) January 28, 2026

The visual attribution mechanism represents Google's approach to transparency as Personal Intelligence enables unprecedented access to user data across multiple services. Personal Intelligence, which launched for Gemini app subscribers on January 14, 2026, connects Gmail, Google Photos, YouTube, and Google Search services to deliver what Google characterizes as uniquely tailored AI responses.

How the icon system works

Each icon corresponds to a specific Google service that contributed data to generate the response. A Gmail icon signals that Gemini analyzed email content. Google Photos icons indicate the system referenced images or videos from the user's library. Search icons show when the AI incorporated information from saved search activity or browsing history. YouTube icons reveal when the system drew from viewing preferences or subscribed channels.

The icons appear in two contexts. Within AI Mode in Google Search, they display next to personalized recommendations and answers. In the standalone Gemini app, they show up when responses utilize connected services to provide context-aware assistance.

According to Google's implementation details, tapping an icon reveals the specific content that informed that portion of the response. This differs from traditional citation systems that simply link to external sources. The icons create direct pathways to the user's own data - the emails, photographs, search queries, or videos that the AI analyzed to construct its answer.

The visual design uses recognizable product icons rather than text labels. Gmail's red envelope, Photos' multicolor pinwheel, YouTube's red play button, and Search's magnifying glass appear as small badges adjacent to relevant text passages. The approach maintains consistency with Google's broader design language while serving a functional transparency purpose.

Connected Apps infrastructure

Personal Intelligence operates through what Google terms Connected Apps - a system enabling Gemini to access user data across services when explicitly enabled. According to Google's privacy documentation published for the feature, eligible users can connect their data in Google Photos, YouTube, and Google Workspace services including Gmail, Calendar, Drive, Docs, Sheets, Slides, Keep, Tasks, Chat, and Meet.

When users connect these applications, data sharing extends beyond simple keyword matching. The system analyzes emails, files, events, photos, videos, and location information to create personal insights about relationships, interests, and activities. "Data shared may relate to topics you find sensitive, like race, religion, and health, or confidential info," according to Google's Connected Apps documentation.

The infrastructure processes this information to generate recommendations, create tailored itineraries, and complete tasks based on understanding derived from multiple sources. Google emphasized in its January 14 announcement that the system reasons across sources rather than simply retrieving individual pieces of information.

An example from Google's launch materials illustrates this capability. A user asking "Recommend tires for my car" receives suggestions that consider the vehicle's make and model from Gmail records, driving patterns inferred from Google Photos location data, and current tire prices from Search. The response includes the license plate number extracted from a photograph to facilitate the auto shop visit.

The technical architecture relies on what Google describes as processing summaries and excerpts rather than training AI models directly on complete Gmail inboxes or Photos libraries. When users interact with Gemini, the system creates condensed versions of relevant content. "To make our responses relevant, helpful, and high quality, we train our generative AI models off of these summaries, excerpts, generated media, and inferences," according to the privacy notice.

This processing methodology means individual emails and files remain in their respective applications. The AI references them when generating responses, and the raw message text is not added to the training data for the underlying language model. The summaries, excerpts, and inferences generated from that content, however, are used for training.

Privacy mechanics and human review

The Connected Apps system includes human review components that examine a subset of processed data. According to Google's documentation, trained reviewers including service providers assess interactions to debug services, maintain safety, and improve response quality. The review encompasses "interactions, summaries, excerpts and inferences from your relevant emails and files" along with "summaries, inferences, and generated media based on your Google Photos library."

Before reviewers examine interactions personalized using Connected Apps, Google implements steps to disconnect data from user accounts and reduce personal information "unless it's to address abuse or harm." If users submit feedback or if abuse concerns arise, reviewers may access portions of Gmail inboxes or Photos library media.

The data usage extends to training generative AI models. Google confirmed that Gemini Apps activity, including data from connected apps, contributes to service improvements "including by training generative AI models" when the Keep Activity setting remains enabled. The training incorporates user prompts, AI responses, and information about how models generated those responses.

This approach follows Google's August 2025 personalization rollout, which automatically enabled a "Personal Context" feature that analyzes conversations to extract preferences and behavioral patterns. That feature was activated by default without explicit consent, and users had to disable it manually through settings menus.

Personal Intelligence rollout details

Google announced Personal Intelligence availability for Gemini AI Pro and AI Ultra subscribers in the United States starting January 14, 2026. The beta feature requires users to explicitly enable connections through Personal Intelligence settings rather than activating automatically. Users select which specific applications to connect, maintaining granular control over data sharing permissions.

The system builds on earlier personalization features introduced in August 2025 that enabled Gemini to reference conversation history for targeted responses. Personal Intelligence extends those capabilities by accessing data stored in other Google services rather than limiting analysis to chat transcripts.

Google Photos integration requires additional configuration steps. Users must enable Face Groups within Photos and select a face group representing themselves before Personal Intelligence can access photograph data. This requirement reflects the sensitive nature of facial recognition data and location information embedded in photo metadata.

Josh Woodward, Vice President at Google Labs for Gemini & AI Studio, emphasized the reasoning capabilities enabled by cross-source analysis in the January 14 announcement. "Standing in line at the shop, I realized I didn't know the tire size," Woodward explained. "I asked Gemini. These days any chatbot can find these tire specs, but Gemini went further. It suggested different options: one for daily driving and another for all-weather conditions, referencing our family road trips to Oklahoma found in Google Photos."

The integration represents what Google frames as a shift from information retrieval to agentic task completion. Rather than presenting search results that users must evaluate and synthesize, Personal Intelligence delivers actionable recommendations derived from comprehensive understanding of individual circumstances.

Implications for tracking and personalization

The icon implementation addresses concerns about transparency in AI systems that draw from personal data sources. It does not, however, ease the accuracy challenges facing marketing professionals who track brand visibility across AI platforms: personalization features cause identical queries to generate different responses for different users.

SEO professional Lily Ray documented in November 2025 how ChatGPT and similar systems rewrite queries based on user location, interests, and previous interactions. This personalization mechanism creates fundamental measurement problems for tracking tools monitoring brand mentions across AI platforms. The same query produces substantially different results depending on stored information about each user.

Google's implementation extends this personalization challenge. Personal Intelligence doesn't just adjust responses based on abstract user profiles - it incorporates actual content from emails, photographs, and browsing history. Two users asking identical questions will receive dramatically different answers if their connected data differs.

The visibility of source icons provides insight into which services contributed to specific recommendations. However, it doesn't resolve the underlying measurement problem. Tracking tools cannot replicate the personalized data environment that shapes responses for individual users. Brand visibility measurements conducted by tools will diverge from what actual users experience when Personal Intelligence applies their connected data.

This dynamic affects content optimization strategies. Analysis published by PPC Land indicates that AI search interfaces may capture more visitors than traditional search for certain topics by early 2028. The timeline could accelerate if Google implements AI Mode as the default search experience. Publishers adapting successfully focus on creating content that AI systems cannot easily replicate - in-depth analysis, unique perspectives, and first-person experiences.

Competitive positioning and privacy tensions

Personal Intelligence arrives as Google responds to competitive pressures from AI-powered tools gaining adoption in professional and consumer environments. The company extended AI Mode to Workspace accounts in July 2025, bringing conversational search interfaces to millions of business users. The Workspace integration provides Google with valuable data about professional AI search usage patterns.

The rollout timing coincides with broader developments in Google's AI-enhanced search offerings including Canvas functionality for study planning, Search Live with video input for real-time visual analysis, and enhanced Chrome desktop integration through Google Lens. These developments demonstrate systematic efforts to embed artificial intelligence throughout the search experience rather than treating AI Mode as an isolated feature.

The data access model contrasts with Google's public statements about content creator relationships. Google executives contradicted independent research in August 2025 when they claimed AI features increase website referrals while studies documented substantial traffic declines for publishers. "Our AI responses feature prominent links, visible citation of sources, and in-line attribution," according to Liz Reid, VP and Head of Google Search.

The icon system extends this attribution approach to personal data rather than web sources. Instead of crediting external publishers, the icons reveal when Google services themselves provided the information. This creates a fundamentally different relationship between the AI, the user, and content sources. Personal Intelligence doesn't primarily synthesize web content - it synthesizes the user's own data stored across Google's ecosystem.

Privacy advocates have raised concerns about the data collection implications. The automatic activation of personalization features in August 2025 established a pattern of opt-out rather than opt-in mechanisms. While Personal Intelligence requires explicit enablement, the underlying infrastructure builds on systems that default to maximum data access unless users actively restrict permissions.

The geographic restrictions limiting Personal Intelligence to United States users reflect regulatory environments in other jurisdictions. European Union, United Kingdom, and Swiss users cannot access the feature, likely due to stricter data protection requirements under GDPR and related frameworks. Age restrictions prevent users under 18 from utilizing Personal Intelligence regardless of geographic location.

Technical architecture and implementation

The system architecture relies on Gemini's advanced reasoning capabilities combined with Google Search's comprehensive web index. Personal Intelligence processes queries through what Google terms a "query fan-out" technique - breaking down inquiries into multiple subtopics while simultaneously searching across connected data sources.

This approach differs substantially from traditional search algorithms that rank individual pages based on relevance signals. Personal Intelligence synthesizes information from multiple applications to construct responses that address complex, multi-part questions without requiring users to formulate separate searches for each component.
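The fan-out idea described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Google's implementation: the `SOURCES` data, the keyword-based `fan_out` splitter, and the `search_source` and `answer` helpers are all hypothetical stand-ins. The sketch splits one question into sub-queries, runs each sub-query against every connected source in parallel, and merges the hits while keeping track of which source each one came from.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical in-memory stand-ins for a user's connected data sources.
SOURCES = {
    "gmail": ["Service receipt for the family minivan", "Flight confirmation"],
    "photos": ["Road trip photo album, Oklahoma", "Photo of license plate"],
    "search": ["all-weather tire prices", "tire size lookup"],
}


def fan_out(query):
    """Naive decomposition: every word longer than three letters is a sub-query."""
    words = [w.strip("?.,!").lower() for w in query.split()]
    return [w for w in words if len(w) > 3]


def search_source(source, term):
    """Return (source, item) pairs for items in one source that mention the term."""
    return [(source, item) for item in SOURCES[source] if term in item.lower()]


def answer(query):
    """Run every sub-query against every source concurrently, then merge the hits."""
    jobs = [(source, term) for term in fan_out(query) for source in SOURCES]
    with ThreadPoolExecutor() as pool:
        results = list(pool.map(lambda job: search_source(*job), jobs))
    merged = []
    for hits in results:
        for hit in hits:
            if hit not in merged:  # drop duplicates found by several sub-queries
                merged.append(hit)
    return merged


for source, item in answer("tire options for road trip"):
    print(f"{source}: {item}")
```

A production system would presumably use a language model rather than keyword splitting to derive sub-queries; the point here is only the shape of the pipeline, where one question becomes many lookups whose results keep a source label.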

The implementation uses server-side processing rather than on-device analysis. When users submit queries, the request travels to Google's infrastructure where Gemini models access connected services through established APIs. The responses return with metadata indicating which services contributed information, enabling the client application to render appropriate icons.
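As a rough illustration of that last step, the sketch below shows how a client might turn per-passage source metadata into icon badges. The response shape is an assumption made for the example: Google has not published the format, and the `Segment` class, the `ICONS` table, the `render` function, and the sample text are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical mapping from a service identifier to the badge a client would draw.
ICONS = {
    "gmail": "Gmail envelope",
    "photos": "Photos pinwheel",
    "youtube": "YouTube play button",
    "search": "Search magnifying glass",
}


@dataclass
class Segment:
    """One passage of a response plus the services that informed it (assumed shape)."""
    text: str
    sources: list = field(default_factory=list)


def render(segments):
    """Append a badge for each known contributing service to each passage."""
    lines = []
    for seg in segments:
        badges = " ".join(f"[{ICONS[s]}]" for s in seg.sources if s in ICONS)
        lines.append(f"{seg.text} {badges}".rstrip())
    return lines


response = [
    Segment("Two tire options fit your vehicle.", ["gmail", "photos"]),
    Segment("Prices vary by retailer."),
]
for line in render(response):
    print(line)
```

Keeping the source list attached to each passage, rather than to the response as a whole, is what would let icons sit beside the specific text they explain.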

The technical specifications reveal that Personal Intelligence utilizes customized versions of Google's Gemini models specifically optimized for personal data integration. These models differ from the general-purpose Gemini versions available through standard interfaces. The customization focuses on reasoning across heterogeneous data types - text from emails, images from Photos, metadata from Search - to construct coherent responses.

Market implications and future developments

The icon implementation represents an initial transparency mechanism that Google may expand as Personal Intelligence evolves. The company has not disclosed plans for additional attribution features, but the infrastructure supports more detailed source tracking. Future iterations could potentially identify specific emails, photographs, or search queries that informed responses rather than simply indicating which applications contributed.

The subscription requirement limits Personal Intelligence to Google AI Pro and AI Ultra subscribers. Pricing for these plans varies, but the restriction excludes free Gemini users from accessing Connected Apps functionality. This tiering strategy positions advanced personalization as a premium feature rather than a baseline capability.

Industry observers note that Personal Intelligence adoption patterns will likely vary significantly across user demographics. Users comfortable with extensive data sharing may rapidly enable all available connections to maximize AI capabilities. Privacy-conscious users may selectively enable specific services or avoid the feature entirely despite subscription access.

The technical infrastructure supporting Personal Intelligence creates possibilities for expanded agentic capabilities. Google announced experiments with agentic features in AI Mode during November 2025, including functionality that enables users to complete tasks like restaurant reservations directly through search. Personal Intelligence provides the contextual understanding necessary for such agents to operate effectively - knowing user preferences, calendar availability, location history, and communication patterns.

The data retention implications remain significant. According to Google's documentation, disconnecting an app or deleting data in a connected application does not automatically delete corresponding data from Gemini Apps Activity. That information can still contribute to product improvement, including training generative AI models. To prevent continued use, users must separately delete their Gemini Apps activity; content that trained reviewers have already examined is an exception.
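The separation between app connections and stored activity can be modeled with a toy example. This is a simplified sketch of the behavior described in the documentation, not a representation of Google's systems: the `GeminiAccount` class and its methods are hypothetical, and the reviewer exception is left out.

```python
class GeminiAccount:
    """Toy model: connections and activity history are stored independently."""

    def __init__(self):
        self.connected_apps = set()
        self.activity = []  # stands in for Gemini Apps Activity

    def connect(self, app):
        self.connected_apps.add(app)

    def disconnect(self, app):
        # Disconnecting only stops future access; stored activity is untouched.
        self.connected_apps.discard(app)

    def ask(self, prompt, app):
        if app not in self.connected_apps:
            raise PermissionError(f"{app} is not connected")
        self.activity.append({"prompt": prompt, "source": app})

    def delete_activity(self):
        # A separate, explicit step is needed to clear the history.
        self.activity.clear()


account = GeminiAccount()
account.connect("gmail")
account.ask("What size tires does my car need?", "gmail")
account.disconnect("gmail")
print(len(account.activity))  # the earlier interaction is still stored
account.delete_activity()
print(len(account.activity))
```

The design point is that two independent actions are required: revoking a connection and clearing the activity it already produced.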

The icon system emerged without prior public disclosure during development phases. Google did not announce plans to implement visual source attribution when launching Personal Intelligence on January 14. The feature appeared in production implementations shortly after, with industry observers documenting it through direct usage rather than official communications.

This implementation pattern reflects Google's iterative approach to AI features. The company frequently deploys capabilities in limited rollouts, gathers usage data, and adjusts functionality based on observed behavior. Personal Intelligence represents a substantial expansion of Gemini's access to user data, and the icon system provides a visible mechanism for users to understand when and how that access occurs during interactions.

The visibility of data sources through icons may influence user trust in AI recommendations. When users can see that suggestions draw from their actual emails and photographs rather than generic web information, responses may carry greater perceived relevance and credibility. Conversely, explicit disclosure of data access may heighten privacy concerns for users who prefer not to consider how extensively AI systems analyze their personal information.

Timeline

- August 13, 2025 - Google Gemini introduces personalization features enabling the AI to reference past conversations for targeted responses

- August 17, 2025 - Google Gemini automatically enables Personal Context feature analyzing user conversations to extract preferences and behavioral patterns

- October 2, 2025 - Gemini replaces Google Assistant on smart home devices with enhanced AI capabilities

- November 9, 2025 - LLM tracking tools face accuracy crisis as personalization features create variation in AI responses

- November 18, 2025 - Google launches Gemini 3 with generative UI for dynamic search experiences

- January 14, 2026 - Google launches Personal Intelligence beta connecting Gmail, Google Photos, YouTube, and Search for personalized Gemini responses

- January 28, 2026 - Glenn Gabe documents app icons appearing in AI Mode showing which Google services contributed to personalized responses

- February 5, 2026 - Search Engine Roundtable reports on icon feature for Personal Intelligence citations

Summary

Who: Google deployed visual attribution icons for its Personal Intelligence feature. The icons display which connected applications - Gmail, Google Photos, YouTube, or Search - contributed information to AI-generated responses in Gemini and AI Mode.

What: Small, tappable icons representing Google services now appear beside AI responses that draw from personal data. Users can select icons to view citations and click through to source content. The system reveals when Gemini analyzes emails, photographs, search history, or video preferences to construct personalized recommendations and answers.

When: SEO consultant Glenn Gabe documented the implementation on January 28, 2026, following Google's January 14, 2026 launch of Personal Intelligence beta for Gemini AI Pro and AI Ultra subscribers in the United States.

Where: Icons appear in AI Mode within Google Search and in the standalone Gemini app when Personal Intelligence draws from connected services to generate responses. The feature currently reaches only U.S. subscribers with explicit Connected Apps permissions enabled.

Why: The icon system provides transparency about which Google services the AI accesses when generating personalized responses, addressing privacy concerns about extensive data integration. The visual attribution mechanism distinguishes Personal Intelligence from generic AI responses by revealing the specific applications - containing users' emails, photos, and browsing history - that informed recommendations and task completion.