Researchers analyzing four million Claude AI conversations discovered a striking concentration pattern: only 5% of occupational tasks account for 59% of all artificial intelligence interactions, according to a research paper published October 29, 2025, on arXiv. The study, authored by Peeyush Agarwal, Harsh Agarwal, and Akshat Rana, provides the first systematic evidence linking real-world generative AI usage to comprehensive task characteristics across the economy.

The analysis used Anthropic's Economic Index dataset, which mapped millions of anonymized user interactions with the Claude AI assistant to standardized occupational tasks from the U.S. Department of Labor's O*NET database. Tasks requiring high creativity, complexity, and cognitive demand attracted the most AI engagement, while highly routine tasks saw minimal adoption. The findings challenge assumptions about which work activities benefit from AI augmentation.

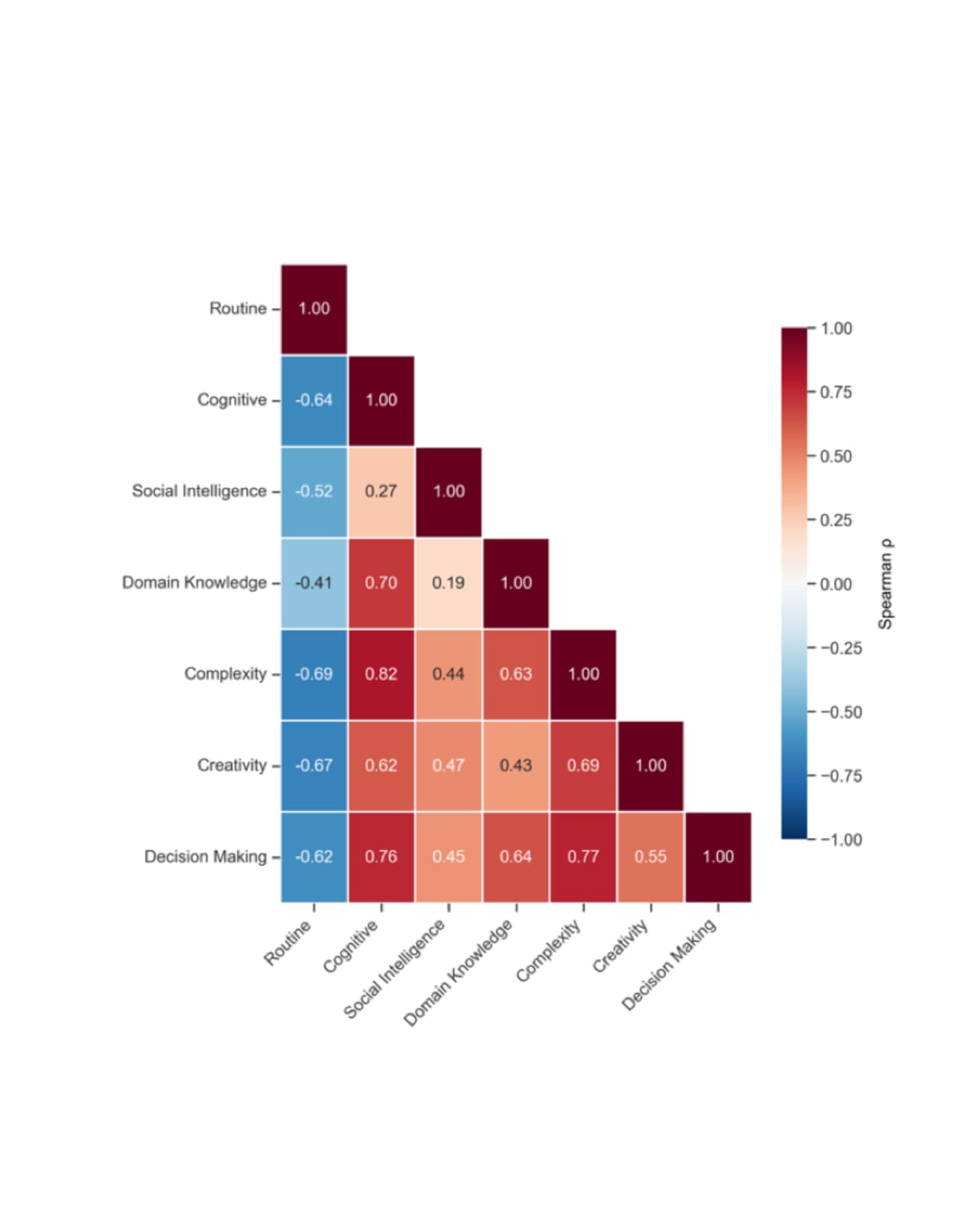

The researchers developed a scoring framework that evaluated each task across seven key dimensions: Routine, Cognitive, Social Intelligence, Creativity, Domain Knowledge, Complexity, and Decision Making. Each dimension comprised five specific parameters, creating 35 measurable characteristics that were scored using large language models. This methodology enabled quantitative analysis of what drives AI adoption at the task level rather than the occupation level.

Tasks demanding idea generation showed the strongest correlation with AI usage, with a Spearman correlation coefficient of 0.173. Information processing tasks followed at 0.157, while originality requirements reached 0.151. Conversely, tasks characterized by predictable outcomes correlated negatively at -0.135, with frequency of repetition at -0.131. The pattern suggests AI currently serves as a brainstorming and synthesis tool rather than an execution system for well-defined procedures.
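A Spearman coefficient is a Pearson correlation computed on ranks rather than raw values. The sketch below illustrates that calculation using only the standard library. The two input lists are hypothetical toy values, not figures from the paper, and the function names are invented for this example.

```python
from statistics import mean

def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover every value tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks

def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5

def spearman(x, y):
    """Spearman correlation: Pearson correlation of the rank vectors."""
    return pearson(average_ranks(x), average_ranks(y))

# Hypothetical toy data: a 1-10 parameter score and a usage share per task.
idea_generation = [2, 9, 4, 7, 5, 8]
usage_share = [0.001, 0.015, 0.002, 0.040, 0.003, 0.020]
print(round(spearman(idea_generation, usage_share), 3))
```

Because the coefficient depends only on rank order, it is not dominated by the handful of tasks with very large usage shares, which matters for a distribution as skewed as the one described here.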

The concentration of AI usage extends beyond individual task characteristics. The distribution of AI interactions across O*NET tasks proved sharply right-skewed, with the median task accounting for only 0.006% of AI conversations. Three-quarters of all tasks fell below 0.017% usage. This extreme "long tail" distribution indicates current AI deployment remains highly selective rather than representing broad-based application across work activities.
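A concentration figure of this kind can be computed by sorting tasks by usage and summing the share held by the top slice. The sketch below shows the arithmetic on a small hypothetical list of usage percentages. The numbers are invented for illustration and do not come from the Economic Index dataset.

```python
from statistics import mean, median

def top_share(usage, top_fraction):
    """Share of total usage captured by the most-used `top_fraction` of tasks."""
    ranked = sorted(usage, reverse=True)
    k = max(1, round(len(ranked) * top_fraction))  # always keep at least one task
    return sum(ranked[:k]) / sum(ranked)

# Hypothetical usage percentages for ten tasks (they sum to 100).
usage = [50, 20, 10, 5, 5, 3, 3, 2, 1, 1]

print(top_share(usage, 0.10))      # share held by the top 10% of tasks
print(median(usage), mean(usage))  # a mean well above the median signals right skew
```

In a right-skewed distribution the mean sits above the median because a few heavily used tasks pull the average up, which is the "long tail" shape the researchers describe.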

Three distinct task archetypes emerged from multivariate analysis using principal component analysis and K-means clustering. The researchers labeled these categories as "Dynamic Problem Solving," "Procedural & Analytical Work," and "Standardized Operational Tasks." Each archetype exhibited statistically distinct profiles across the seven characteristics and showed significantly different rates of AI adoption.
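The clustering step can be illustrated with a minimal K-means routine. This is a sketch under stated assumptions, not the authors' pipeline: it skips the principal component analysis step, uses only two hypothetical dimensions (routine and creativity scores), and seeds the centroids with the first k points for determinism, where a real analysis would normally use a library implementation with randomized restarts.

```python
from math import dist
from statistics import mean

def kmeans(points, k, iterations=50):
    """Lloyd's algorithm with centroids seeded from the first k points."""
    centroids = [tuple(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        labels = [min(range(k), key=lambda c: dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(tuple(mean(col) for col in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster in place
        if new_centroids == centroids:
            break  # converged: centroids no longer move
        centroids = new_centroids
    return labels, centroids

# Hypothetical (routine, creativity) scores for nine tasks.
points = [
    (3.0, 7.0), (6.0, 3.0), (7.5, 1.5),
    (3.5, 6.5), (5.5, 3.5), (7.0, 1.0),
    (2.5, 6.0), (6.0, 4.0), (7.0, 2.0),
]
labels, centroids = kmeans(points, k=3)
print(labels)
```

Each resulting cluster can then be profiled by averaging its members' scores, which is how archetype descriptions such as "lowest on routineness, highest on creativity" are typically derived.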

Dynamic Problem Solving, the largest group with 2,100 tasks, attracted the highest mean AI usage at 3.31%. These tasks scored lowest on routineness at 3.36 and highest on cognitive demands at 8.53, creativity at 6.43, complexity at 8.43, and decision making at 8.25. The archetype represents work requiring substantial intellectual flexibility and original thinking.

Procedural & Analytical Work, consisting of 1,017 tasks, showed moderate AI usage at 2.45%. These tasks demonstrated moderate routineness at 5.8, low social intelligence at 4.51, and low creativity at 3.39. The profile suggests structured analytical activities that follow established frameworks but require cognitive effort. Standardized Operational Tasks, the smallest group with 397 tasks, exhibited the lowest AI usage at 0.014%. These tasks scored highest on routineness at 7.08 and lowest across all other dimensions, including cognitive demands at 4.6, social intelligence at 3.1, and creativity at 1.53.

The analysis revealed a striking characteristic: social intelligence showed near-zero correlation with AI usage across all five of its constituent parameters. Tasks requiring interpersonal interaction, emotional management, collaboration, or social perceptiveness demonstrated no statistical relationship with AI adoption patterns. This finding positions social intelligence as a potentially durable human comparative advantage in an AI-augmented economy.

Within the Creativity dimension, significant variation appeared across parameters. Idea generation correlated much more strongly with AI usage than innovation requirements, suggesting current AI application focuses on divergent thinking phases rather than convergent implementation. Tasks requiring artistic or aesthetic components showed lower correlation than pure conceptual work.

High-usage tasks, defined as the top 10th percentile, exhibited a distinct signature compared to low-usage tasks in the bottom 10th percentile. High-usage tasks scored substantially higher on Cognitive (8.8 versus 6.8), Complexity (8.7 versus 6.5), Creativity (7.1 versus 2.8), and Decision Making (8.3 versus 6.6) dimensions. They scored significantly lower on Routine (2.9 versus 6.2). Social Intelligence showed minimal difference between groups at 6.1 versus 5.7.
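The decile comparison amounts to ranking tasks by usage, taking the top and bottom tenth, and averaging a characteristic within each group. The sketch below shows that procedure on synthetic data. The task records and the formula that generates them are hypothetical and chosen only so that the example runs.

```python
from statistics import mean

def decile_profiles(tasks, dimension):
    """Return (high, low): mean `dimension` score for the top and bottom 10% by usage."""
    ranked = sorted(tasks, key=lambda t: t["usage"])
    n = max(1, len(ranked) // 10)  # size of one decile, at least one task
    low, high = ranked[:n], ranked[-n:]
    return mean(t[dimension] for t in high), mean(t[dimension] for t in low)

# Hypothetical tasks: usage rises with creativity and falls with routineness.
tasks = [
    {"usage": i + 1, "creativity": 2 + 0.3 * i, "routine": 8 - 0.25 * i}
    for i in range(20)
]

for dimension in ("creativity", "routine"):
    high, low = decile_profiles(tasks, dimension)
    print(f"{dimension}: high-usage {high:.2f} versus low-usage {low:.2f}")
```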

The researchers acknowledged several limitations. The usage data derives from a single AI model family, Claude, whose user base may not represent the entire workforce. The LLM-based scoring method for task characteristics, while systematically applied, serves as a proxy for human judgment and may carry inherent biases. The analysis provides a cross-sectional snapshot rather than capturing dynamic evolution of AI use as technology advances and adoption patterns change.

Anthropic released its Economic Index on February 10, 2025, revealing that approximately 36% of occupations use AI for at least a quarter of their associated tasks, while only 4% of occupations utilize AI across three-quarters of their tasks. Computer-related tasks saw the largest AI usage at 37.2% of all queries, followed by writing tasks in educational and communication contexts at 10.3%.

The concentration pattern has significant implications for marketing professionals. Tasks involving content generation, strategic planning, and campaign conceptualization align with the high-usage archetype characteristics. Routine execution tasks like standard reporting or repetitive media buying operations show characteristics associated with low AI adoption.

Research from IAB Europe in September 2025 found that 85% of companies already deploy AI-based tools for marketing purposes, with targeting and content generation leading adoption at 64% and 61% respectively. However, the concentrated usage patterns revealed in the arXiv paper suggest substantial variation in which specific marketing tasks benefit from AI assistance.

The findings challenge assumptions about AI replacing routine work. While earlier automation technologies primarily targeted repetitive tasks, current generative AI adoption shows opposite patterns. Tasks with high routineness and predictable outcomes correlate negatively with usage, while cognitively demanding creative work correlates positively.

Marketing measurement challenges documented throughout 2025 may explain some adoption patterns. Research released December 2, 2025, by Funnel and Ravn Research found that 86% of in-house marketers struggle to determine the impact of each marketing channel on overall performance despite unprecedented access to analytics tools. AI tools that synthesize information and generate insights address these information processing challenges that characterize high-usage tasks.

The paper identifies cognitive offloading as the central psychological dimension of current AI adoption. Individuals leverage AI to overcome initial high-friction stages of knowledge work, particularly brainstorming, outlining, and synthesizing information. The pattern appears across all task categories but manifests most strongly in complex cognitive work.

Domain knowledge requirements showed a weak positive correlation with AI usage at 0.084, suggesting specialized expertise neither strongly attracts nor repels AI adoption. Tasks requiring specialized knowledge still see substantial AI application when they involve high cognitive demands and complexity. This pattern challenges assumptions that expert domains would resist AI integration.

The researchers scored tasks using Gemini 2.5 Pro with temperature set to zero, providing each O*NET task description and requesting ratings on a 1-10 scale for each specific parameter. The seven primary characteristic scores represent the arithmetic mean of their five constituent parameter scores. This approach aligns with the emerging "LLM-as-a-Judge" paradigm, where language models serve as scalable alternatives to human experts for complex evaluation tasks.
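The aggregation described here is a plain average: five parameter ratings on a 1-10 scale collapse into one characteristic score. The sketch below shows that step only. The parameter names are placeholders (the article names some Creativity parameters but not the full set), the ratings are hypothetical, and the call to the scoring model is deliberately left out.

```python
from statistics import mean

def characteristic_score(parameter_scores):
    """Average five 1-10 parameter ratings into one characteristic score."""
    if len(parameter_scores) != 5:
        raise ValueError("each characteristic is built from exactly five parameters")
    for name, score in parameter_scores.items():
        if not 1 <= score <= 10:
            raise ValueError(f"{name} is outside the 1-10 scale: {score}")
    return mean(parameter_scores.values())

# Hypothetical ratings for one task; the parameter names are placeholders.
creativity = {
    "idea_generation": 8,
    "originality": 7,
    "innovation": 5,
    "aesthetic_component": 4,
    "placeholder_parameter": 6,
}
print(characteristic_score(creativity))
```

Repeating this for each of the seven characteristics yields the seven-number profile per task that the correlation and clustering analyses operate on.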

Principal component analysis revealed that the first two components explained 82.3% of variance across the seven characteristics. The inter-correlation matrix showed that Cognitive, Complexity, and Decision Making characteristics correlated strongly and positively with each other, all above 0.75, while correlating strongly negatively with Routine, all below -0.61. Social Intelligence exhibited weaker correlations with all other characteristics.
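"Explained variance" is the share of total variance carried by each principal component, that is, each eigenvalue of the covariance matrix divided by the sum of all eigenvalues. The sketch below works this out for just two variables, where the eigenvalues of the 2x2 covariance matrix have a closed form. It is a simplification: the paper's analysis covers seven characteristics and would need a linear algebra library, and the scores used here are hypothetical.

```python
from statistics import mean

def covariance(x, y):
    """Sample covariance of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / (len(x) - 1)

def explained_variance_2d(x, y):
    """Explained-variance ratios of the two principal components of (x, y)."""
    a, b, c = covariance(x, x), covariance(x, y), covariance(y, y)
    # Eigenvalues of [[a, b], [b, c]] are centre +/- spread.
    centre = (a + c) / 2
    spread = (((a - c) / 2) ** 2 + b ** 2) ** 0.5
    total = a + c  # the trace equals the sum of the eigenvalues
    return (centre + spread) / total, (centre - spread) / total

# Hypothetical cognitive and routine scores that move in opposite directions.
cognitive = [8.5, 7.9, 6.0, 4.6, 8.8, 5.2]
routine = [3.4, 3.9, 5.8, 7.1, 2.9, 6.5]
first, second = explained_variance_2d(cognitive, routine)
print(round(first, 3), round(second, 3))
```

When two variables are strongly correlated, positively or negatively, the first ratio approaches 1, which is why a small number of components can summarize several tightly linked characteristics.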

The study relied on Anthropic's Clio system, which enables privacy-preserving analysis of user conversations. The research analyzed approximately one million conversations on Claude.ai Free and Pro plans during December 2024 and January 2025. The methodology maintains enterprise-grade confidentiality standards while extracting aggregate patterns.

Enterprise AI adoption faces persistent scaling problems despite widespread deployment. McKinsey released research on November 9, 2025, revealing that while 88% of respondents report regular AI use in at least one business function, only approximately one-third report their companies have begun scaling AI programs across the enterprise. The concentrated usage patterns revealed in the arXiv paper provide a potential explanation: most tasks may not yet exhibit characteristics that attract heavy AI adoption.

Marketing organizations face decisions about which specific activities warrant AI investment. The research framework provides evidence-based guidance. Tasks combining high creativity requirements with substantial cognitive complexity but low routineness represent the highest-probability AI adoption candidates. Standard operational activities may require different automation approaches than conversational AI interfaces.

The paper introduces three key contributions to understanding AI's impact on work. First, systematic evidence linking real-world AI usage patterns to intrinsic task characteristics. Second, a comprehensive multi-dimensional framework for characterizing occupational tasks that captures the multifaceted nature of modern AI capabilities. Third, distinct task archetypes revealing deeper patterns in AI adoption than individual characteristics alone.

Future implications span multiple domains. Labor markets may experience fundamental transformation as knowledge work shifts from information processing toward task delegation, decision making, and quality evaluation. Educational priorities require reorientation toward critical thinking, task delegation capabilities, and analytical skills to distinguish between valuable AI contributions and potential errors.

Business strategy implications suggest organizations should systematically analyze jobs requiring high cognitive complexity and creativity for AI-powered product development. The data shows people readily offload these tasks, which is a clear market signal for accelerating adoption. Organizations should also investigate low adoption rates for routine tasks to determine whether these have been optimized by other technologies or whether organizational barriers prevent utilization.

Policy considerations focus on facilitating labor market transitions by equipping workers with skills to operate within high-complementarity archetypes. Policymakers might consider financial incentives to accelerate workforce adaptation to AI-augmented work environments. Tax rebates or exemptions on AI skills training for companies could facilitate smoother labor market transitions.

The researchers acknowledge that O*NET task descriptions, while comprehensive, may not capture all nuances of modern work and may group distinct activities together. The cross-sectional analysis provides a snapshot in time rather than capturing dynamic evolution. Because the usage data comes from a single AI model family, its user base may not represent the entire workforce.

Claude Code demonstrations have revealed significant productivity gains for specific engineering tasks. A Google principal engineer acknowledged on January 3, 2026, that Claude Code reproduced complex distributed systems architecture in one hour that her team spent a full year building. Such cases exemplify the extreme concentration patterns identified in the research, where specific high-complexity tasks attract disproportionate AI usage.

The concentrated adoption pattern raises questions about return on investment for AI infrastructure. If only 5% of tasks drive 59% of usage, organizations may achieve most benefits by identifying and optimizing these high-value applications rather than pursuing universal deployment across all activities.

Marketing applications that align with high-usage characteristics include strategic brief development, creative concept generation, multi-channel campaign planning, competitive analysis synthesis, and performance report narrative creation. Activities aligned with low-usage characteristics include media buying execution, standard performance reporting, routine asset trafficking, and established workflow procedures.

The durable human value emerges not in direct competition with AI's cognitive capabilities, but in complementary domains. While the data demonstrates widespread willingness to delegate complex cognitive tasks to AI systems, human advantage lies in oversight and contextual application of AI outputs. Social intelligence maintains its distinct position, remaining statistically decoupled from AI adoption patterns, which suggests interpersonal capabilities continue to represent a human comparative advantage.

The implications extend to marketing technology development. Platforms focusing on information synthesis, conceptual exploration, and strategic analysis align with high-adoption task characteristics. Tools emphasizing process automation for well-defined workflows may require different technological approaches than conversational AI interfaces optimized for exploratory work.

Timeline

- October 22, 2024: Anthropic releases Claude 3.5 Sonnet with computer use capabilities

- December 2024-January 2025: Research team analyzes approximately one million conversations on Claude.ai Free and Pro plans

- February 10, 2025: Anthropic launches Economic Index revealing 36% of occupations use AI for at least a quarter of associated tasks

- March 2025: Anthropic launches Claude Code commercial version

- May 1, 2025: Anthropic launches Claude integration tool with Research capability

- August 26, 2025: Anthropic launches Claude for Chrome extension research preview

- September 2025: IAB Europe reveals 85% AI adoption across digital advertising companies

- October 26, 2025: Paper submitted to arXiv (version 1)

- October 29, 2025: Revised paper published on arXiv (version 2) showing 5% of tasks account for 59% of AI interactions

- November 9, 2025: McKinsey research shows only one-third of companies scaling AI programs beyond pilots

- December 2, 2025: Funnel research reveals 86% of marketers struggle to determine channel impact despite data abundance

- January 3, 2026: Google engineer publicly acknowledges Claude Code reproduced year-long project in one hour

Summary

Who: Researchers Peeyush Agarwal from Netaji Subhas University of Technology, Harsh Agarwal from Adobe Inc., and Akshat Rana from Netaji Subhas University of Technology conducted the analysis, utilizing Anthropic's Economic Index dataset of Claude AI interactions.

What: The research paper reveals extreme concentration in AI usage patterns, with just 5% of occupational tasks accounting for 59% of all interactions with Claude AI, demonstrating that tasks requiring high creativity, complexity, and cognitive demand but low routineness attract the most engagement while highly routine work remains largely untouched.

When: The paper was published on arXiv on October 29, 2025 (version 2), analyzing conversation data from December 2024 and January 2025, following initial submission on October 26, 2025.

Where: The study analyzed approximately one million conversations on Claude.ai Free and Pro plans, mapping them to O*NET occupational task categories representing the U.S. workforce, though implications extend globally to knowledge work across all markets.

Why: The research addresses a critical gap in understanding which intrinsic task characteristics drive users' decisions to delegate work to AI systems, moving beyond occupation-level analysis to task-level insights that inform workforce development, business strategy, and policy decisions as generative AI transforms the nature of knowledge work.