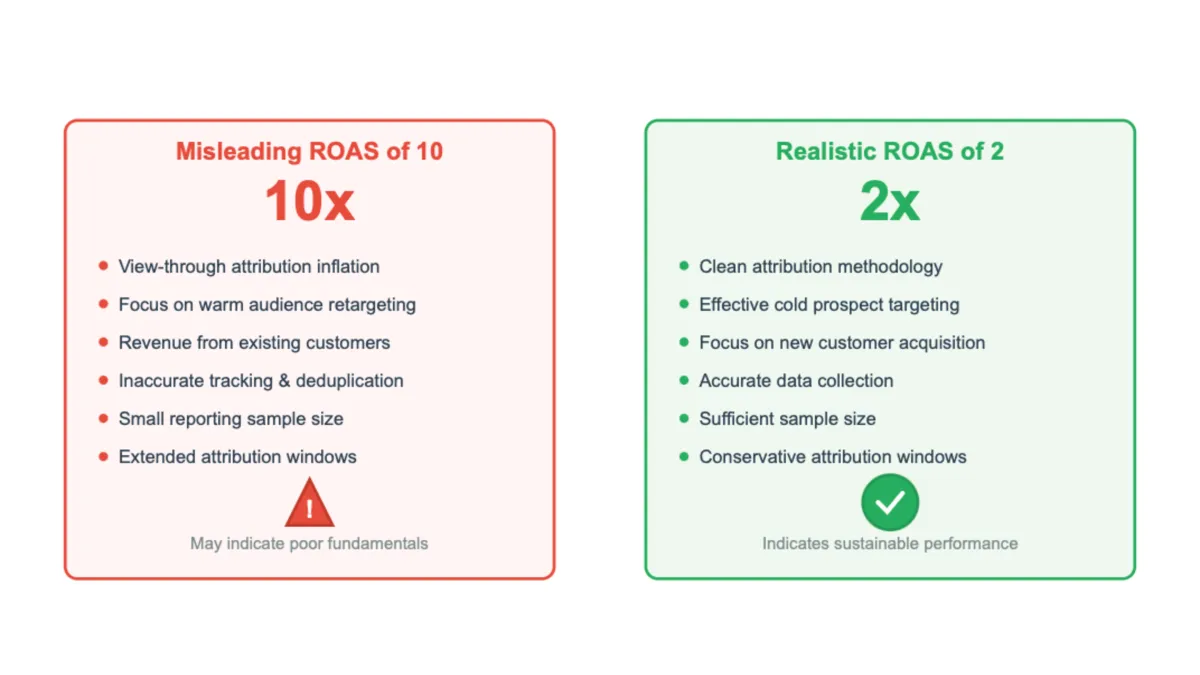

Digital marketing professionals are raising concerns about inflated Return on Ad Spend (ROAS) metrics that may be misleading advertisers about their campaign performance. According to a recent industry discussion, marketers showing ROAS figures of 10 or higher may actually be performing worse than those reporting more modest returns of 2.

The debate emerged from observations by Bram Van der Hallen, a digital marketing specialist at Edge.be, who highlighted systematic issues with how ROAS is calculated and reported across Meta's advertising platform. "That one marketer showing you a Meta Ads ROAS of 2 is probably doing a better job than that other marketer showing you a ROAS of 10," Van der Hallen stated in his analysis.

Subscribe to the PPC Land newsletter ✉️ for similar stories like this one. Receive the news every day in your inbox. Free of ads. 10 USD per year.

Summary

Who: Digital marketing professionals including Bram Van der Hallen from Edge.be, Daniel Lewis (Performance Marketing Strategist), and Alexandra Fusco from ThoughtMetric, along with other industry experts discussing Meta advertising measurement challenges.

What: Industry professionals warn that inflated ROAS metrics mislead advertisers about campaign performance, with figures of 10+ often indicating worse actual performance than modest returns of 2 due to attribution problems, audience targeting issues, and data collection inaccuracies.

When: The discussion emerged from recent industry observations about systematic ROAS measurement problems across Meta's advertising platform.

Where: The conversation took place within the digital marketing community, specifically focusing on Meta (Facebook/Instagram) advertising platform performance measurement.

Why: Attribution methodology flaws, view-through crediting, retargeting focus, existing customer revenue attribution, tracking inaccuracies, and small sample sizes combine to artificially inflate ROAS metrics, leading to poor strategic decisions and unrealistic performance expectations.


The discussion centers on attribution methodology flaws that artificially inflate performance metrics. View-through attribution represents one of the primary culprits behind these distortions. This measurement approach credits conversions to ads that users viewed but didn't click, often overestimating the actual impact of advertising campaigns.

Marketing professionals have identified several technical factors contributing to ROAS inflation. Audience targeting strategies frequently focus on users already familiar with brands through retargeting campaigns, creating artificially high conversion rates. These warm audiences naturally convert at higher rates, making campaigns appear more successful than they would be with cold prospect targeting.

Purchase value attribution presents another significant challenge. Many high-ROAS campaigns derive their revenue primarily from existing customers rather than new acquisitions. This skews performance metrics because existing customers typically require less convincing to make purchases, regardless of advertising exposure.
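The new-versus-returning split can be made concrete with a minimal Python sketch. It assumes you can join each platform-attributed purchase to your own order history to flag first-time buyers. The `AttributedPurchase` record and the `roas` and `roas_breakdown` helpers are hypothetical names used for illustration only, not part of any Meta API.

```python
from dataclasses import dataclass


@dataclass
class AttributedPurchase:
    value: float            # purchase value the platform credited to the campaign
    is_new_customer: bool   # assumption: flagged from your own order history


def roas(revenue: float, spend: float) -> float:
    """ROAS = attributed revenue / ad spend."""
    if spend <= 0:
        raise ValueError("spend must be positive")
    return revenue / spend


def roas_breakdown(purchases: list[AttributedPurchase], spend: float) -> dict[str, float]:
    """Compare the reported ROAS with ROAS counting first-time buyers only."""
    total = sum(p.value for p in purchases)
    new_only = sum(p.value for p in purchases if p.is_new_customer)
    return {
        "reported_roas": roas(total, spend),
        "new_customer_roas": roas(new_only, spend),
        # fraction of attributed revenue that came from returning customers
        "returning_share": (total - new_only) / total if total else 0.0,
    }
```

For example, with 1,000 in spend and ten attributed purchases of 1,000 each, only two of them from first-time buyers, `roas_breakdown` reports a ROAS of 10.0 but a new-customer ROAS of 2.0. The figures are made up; the point is that the same campaign can produce both numbers depending on whose purchases are counted.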

Data collection accuracy remains problematic across the industry. Tracking implementations often lack proper event deduplication, leading to multiple conversion credits for single purchases. Small reporting sample sizes further compound these issues by creating statistical noise that can dramatically skew performance indicators.
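A minimal sketch of event deduplication follows. It assumes every copy of a purchase (for example, a browser pixel event and a server-side event for the same order) carries the same event ID. Keying on event name plus event ID resembles the approach Meta documents for deduplicating Pixel and Conversions API events, but the `TrackedEvent` shape and the function names here are hypothetical.

```python
from typing import NamedTuple


class TrackedEvent(NamedTuple):
    source: str      # e.g. "browser" or "server" (hypothetical labels)
    event_name: str  # e.g. "Purchase"
    event_id: str    # ID shared by every copy of the same real-world event
    value: float


def deduplicate(events: list[TrackedEvent]) -> list[TrackedEvent]:
    """Keep only the first event seen for each (event_name, event_id) pair."""
    seen: set[tuple[str, str]] = set()
    unique: list[TrackedEvent] = []
    for event in events:
        key = (event.event_name, event.event_id)
        if key not in seen:
            seen.add(key)
            unique.append(event)
    return unique


def purchase_revenue(events: list[TrackedEvent]) -> float:
    """Sum the value of all Purchase events in the list."""
    return sum(e.value for e in events if e.event_name == "Purchase")
```

Feeding in two copies of one 50.00 order plus a separate 30.00 order gives 130.0 of purchase revenue before deduplication and 80.0 after, which is the kind of overcount the paragraph above describes.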

Daniel Lewis, a performance marketing strategist, supported these observations during the discussion. When asked about optimization strategies, he questioned whether advertisers should be "optimizing towards 7DC instead of 7DC+1D-view, to get rid of 1 day view conversions," meaning a 7-day click window on its own rather than 7-day click plus 1-day view. This technical consideration reflects the complexity marketers face when attempting to isolate genuine advertising impact from coincidental conversions.

The attribution window selection significantly impacts reported performance. Meta's default attribution setting of 7-day click plus 1-day view-through often includes conversions that would have occurred without advertising exposure. Lewis noted concerns about "spending money on view-through conversions which is wasted spend from existing customers."
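The effect of dropping view-through credit can be sketched in Python under simplifying assumptions: each conversion carries a list of timestamped ad touches, and a conversion counts if any click falls within `click_days` or any view within `view_days` before it. All class and function names are hypothetical, and the sketch does not reproduce Meta's actual attribution logic, such as how credit is resolved when several ads were involved.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Touch:
    kind: str      # "click" or "view" (hypothetical labels)
    at: datetime   # when the user clicked or viewed the ad


@dataclass
class Conversion:
    at: datetime   # when the purchase happened
    value: float   # purchase value
    touches: list[Touch] = field(default_factory=list)


def is_attributed(conv: Conversion, click_days: int, view_days: int) -> bool:
    """True if any click or view falls inside its window before the conversion."""
    for touch in conv.touches:
        lag = conv.at - touch.at
        if lag < timedelta(0):
            continue  # the touch came after the purchase, so it cannot get credit
        if touch.kind == "click" and lag <= timedelta(days=click_days):
            return True
        # view_days=0 switches view-through credit off entirely
        if touch.kind == "view" and view_days > 0 and lag <= timedelta(days=view_days):
            return True
    return False


def window_roas(conversions: list[Conversion], spend: float,
                click_days: int = 7, view_days: int = 1) -> float:
    """ROAS counting only conversions inside the chosen attribution window."""
    if spend <= 0:
        raise ValueError("spend must be positive")
    revenue = sum(c.value for c in conversions if is_attributed(c, click_days, view_days))
    return revenue / spend
```

Comparing `window_roas(conversions, spend)` with `window_roas(conversions, spend, view_days=0)` approximates the 7DC+1D-view versus 7DC comparison Lewis raised: any revenue that disappears in the second call was credited only through a view.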

Alexandra Fusco, Director of Marketing at ThoughtMetric, emphasized the importance of moving beyond platform-reported numbers. "Inflated ROAS is one of the biggest traps in performance marketing," Fusco explained. "We've seen how much clarity improves when you move beyond platform-reported numbers and start looking at clean data that actually reflects customer behavior."

The technical challenges extend beyond simple measurement errors. Attribution models fundamentally struggle to account for customer journey complexity in multi-touchpoint environments. Users may interact with brands through various channels before converting, making it difficult to assign accurate credit to specific advertising touchpoints.

Industry professionals stress that realistic reporting prevents poor decision-making based on false performance indicators. Van der Hallen warned that unrealistic expectations stemming from inflated metrics can lead to budget misallocation and strategic errors. "Realistic reports will prevent you from making bad decisions based on false numbers and unrealistic expectations," he stated.

The scaling challenge represents a particularly important consideration for advertisers. While achieving high ROAS with retargeting campaigns targeting warm audiences remains relatively straightforward, maintaining performance when expanding to cold prospect audiences proves significantly more difficult. This scaling limitation often reveals the true effectiveness of advertising strategies.

Budget allocation decisions become critical when working with accurate attribution data. Advertisers must balance the efficiency of retargeting campaigns against the growth potential of prospecting efforts. Understanding these dynamics requires sophisticated attribution modeling that goes beyond simple last-click or view-through crediting.
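Blended ROAS is total attributed revenue divided by total spend, so it works out to a spend-weighted average of each campaign's own ROAS. The sketch below uses hypothetical campaign names and figures, and its `reallocate` helper assumes each campaign's ROAS stays constant as budget moves between them, which real campaigns rarely do.

```python
def blended_roas(campaigns: dict[str, tuple[float, float]]) -> float:
    """Total attributed revenue / total spend across campaigns.

    `campaigns` maps a (hypothetical) campaign name to (spend, attributed_revenue).
    """
    total_spend = sum(spend for spend, _ in campaigns.values())
    total_revenue = sum(revenue for _, revenue in campaigns.values())
    if total_spend <= 0:
        raise ValueError("total spend must be positive")
    return total_revenue / total_spend


def reallocate(campaigns: dict[str, tuple[float, float]],
               new_spend: dict[str, float]) -> dict[str, tuple[float, float]]:
    """Move budget while holding each campaign's ROAS fixed (a strong simplifying assumption)."""
    result: dict[str, tuple[float, float]] = {}
    for name, (spend, revenue) in campaigns.items():
        campaign_roas = revenue / spend
        result[name] = (new_spend[name], new_spend[name] * campaign_roas)
    return result
```

With 1,000 each on retargeting at a ROAS of 8 and prospecting at a ROAS of 2, blended ROAS is 5.0. Moving 500 from retargeting to prospecting drops it to 3.5, even though the account is now spending more on reaching new audiences.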

The conversation highlighted the need for marketers to develop deeper attribution literacy. Many professionals lack a comprehensive understanding of how different attribution models affect reported performance. This knowledge gap can lead to overconfidence in campaign performance and subsequent scaling failures.

Industry experts recommend focusing on incrementality testing to measure true advertising impact. These methodologies compare performance between exposed and unexposed user groups, providing more accurate assessments of advertising effectiveness than standard attribution approaches.
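The arithmetic behind a simple holdout test can be sketched as follows. It assumes users were randomly split into an exposed (test) group and a held-out (control) group and that an average order value is known; the function and parameter names are hypothetical, and a real test would also need a significance check, which this sketch omits.

```python
def incrementality_summary(
    test_conversions: int,
    test_users: int,
    control_conversions: int,
    control_users: int,
    spend: float,
    avg_order_value: float,
) -> dict[str, float]:
    """Estimate incremental conversions, lift, and incremental ROAS (iROAS)."""
    if test_users <= 0 or control_users <= 0 or spend <= 0:
        raise ValueError("group sizes and spend must be positive")
    # Conversions the test group would likely have produced anyway,
    # scaled from the control group's conversion rate.
    baseline = control_conversions * test_users / control_users
    incremental = test_conversions - baseline
    return {
        "incremental_conversions": incremental,
        "lift": incremental / baseline if baseline else float("inf"),
        "iroas": incremental * avg_order_value / spend,
        # Hypothetical upper bound: credit every test-group conversion to the ads.
        "attributed_roas": test_conversions * avg_order_value / spend,
    }
```

With 10,000 users per group, 300 test conversions against 200 in control, 2,500 in spend and an average order value of 50, the sketch gives 100 incremental conversions, a 50% lift and an iROAS of 2.0, while crediting all 300 conversions would suggest a ROAS of 6.0.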

The discussion reflects broader challenges within the digital advertising ecosystem. Platform-provided metrics often prioritize advertiser satisfaction over measurement accuracy, creating systematic biases toward positive performance reporting. Advertisers must develop independent measurement capabilities to navigate these limitations effectively.

Marketing attribution technology has evolved significantly, but fundamental challenges persist. Multi-touch attribution models attempt to address some limitations but introduce their own complexities and potential biases. The industry continues to seek more accurate measurement methodologies.

Professional marketers increasingly recognize that moderate performance metrics often indicate more sustainable and scalable strategies than exceptional figures. This perspective shift reflects growing sophistication in attribution understanding and campaign optimization approaches.

Key Terms

Return on Ad Spend (ROAS): ROAS measures the revenue generated for every dollar spent on advertising. Calculated by dividing the revenue attributed to advertising by advertising costs, this metric serves as a primary performance indicator for digital campaigns. However, ROAS calculations can be misleading when attribution models incorrectly assign conversion credit, making campaigns appear more profitable than they actually are.
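Written as a formula, with the 2-versus-10 comparison from the discussion expressed for an illustrative spend of 1,000 (the spend figure is an assumption, not from the source):

```latex
\mathrm{ROAS} = \frac{R_{\text{attributed}}}{S_{\text{ad}}}, \qquad
\frac{2{,}000}{1{,}000} = 2, \qquad
\frac{10{,}000}{1{,}000} = 10
```

Because the numerator is whatever revenue the attribution model credits, changing the model changes ROAS even when spend and actual sales stay the same.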

View-Through Attribution: This measurement methodology credits conversions to advertisements that users viewed but did not click. The system assumes that exposure alone influenced the purchase decision, even without direct interaction. While view-through attribution captures some genuine advertising impact, it often overestimates campaign effectiveness by crediting coincidental conversions that would have occurred regardless of ad exposure.

Attribution Window: The attribution window defines the timeframe during which conversions receive credit after ad exposure or interaction. Meta's default setting uses a 7-day click window combined with a 1-day view window, meaning conversions within these periods get attributed to advertising. Longer attribution windows typically inflate performance metrics by capturing more conversions, while shorter windows provide more conservative measurements.

Multi-Touch Attribution: This advanced attribution methodology distributes conversion credit across multiple advertising touchpoints throughout the customer journey. Rather than assigning full credit to the last interaction, multi-touch models recognize that customers often engage with various advertisements before converting. These models attempt to provide more accurate performance measurement but require sophisticated implementation and interpretation.
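Linear attribution is one of the simplest multi-touch rules: each touchpoint in a converting journey receives an equal share of the revenue. The sketch below contrasts it with last-touch crediting. The touchpoint labels and function names are hypothetical, and production multi-touch models (time-decay, position-based or data-driven) weight touchpoints differently.

```python
from collections import defaultdict

# (ordered touchpoints, revenue of the conversion)
Journey = tuple[list[str], float]


def last_touch_credit(journeys: list[Journey]) -> dict[str, float]:
    """Give all of a conversion's revenue to its final touchpoint."""
    credit: dict[str, float] = defaultdict(float)
    for touchpoints, revenue in journeys:
        if touchpoints:
            credit[touchpoints[-1]] += revenue
    return dict(credit)


def linear_credit(journeys: list[Journey]) -> dict[str, float]:
    """Split each conversion's revenue equally across its touchpoints."""
    credit: dict[str, float] = defaultdict(float)
    for touchpoints, revenue in journeys:
        if not touchpoints:
            continue
        share = revenue / len(touchpoints)
        for touchpoint in touchpoints:
            credit[touchpoint] += share
    return dict(credit)
```

For the journeys `(["meta_ad", "search", "email"], 90.0)` and `(["meta_ad"], 30.0)`, last-touch gives `email` 90.0 and `meta_ad` 30.0, while linear gives `meta_ad` 60.0 and `search` and `email` 30.0 each. Both distribute the same 120.0 in total; only the split changes.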

Event Deduplication: Event deduplication prevents multiple tracking systems from crediting the same conversion multiple times. Without proper deduplication, a single purchase might be counted by Facebook Pixel, Google Analytics, and other tracking platforms simultaneously, artificially inflating conversion numbers. This technical implementation ensures accurate measurement across integrated marketing technology stacks.

Incrementality Testing: Incrementality testing measures the true causal impact of advertising by comparing performance between exposed and unexposed user groups. This methodology uses control groups to isolate advertising effects from organic conversions that would occur without marketing intervention. Incrementality testing provides more accurate performance assessment than standard attribution models, though it requires careful experimental design and statistical analysis.

Cold Prospect Targeting: Cold prospect targeting focuses advertising efforts on users who have no prior brand awareness or engagement history. These audiences represent new customer acquisition opportunities but typically convert at lower rates and require higher advertising investment. Cold targeting performance often reveals the true scalability potential of advertising strategies beyond retargeting existing audiences.

Warm Audience Retargeting: Retargeting campaigns focus on users who have previously interacted with a brand through website visits, social media engagement, or past purchases. These warm audiences naturally convert at higher rates because they already demonstrate interest in or familiarity with the brand. While retargeting generates efficient performance metrics, over-reliance on these audiences can limit growth potential and artificially inflate overall campaign performance.

Statistical Noise: Statistical noise refers to random variation in data that can obscure true performance trends, a problem that is particularly acute with small sample sizes. In advertising measurement, noise can make campaigns appear highly successful or unsuccessful based on limited data rather than genuine performance patterns. Larger sample sizes and longer observation periods help reduce the impact of noise on decision-making.
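One way to see how sample size drives noise is a confidence interval on the conversion rate. The sketch below uses the Wilson score interval at roughly 95% confidence (z = 1.96); the function name is hypothetical and the figures in the usage note are made up for illustration.

```python
import math


def wilson_interval(conversions: int, visitors: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a conversion rate (z = 1.96 is roughly 95% confidence)."""
    if visitors <= 0 or not 0 <= conversions <= visitors:
        raise ValueError("need visitors > 0 and 0 <= conversions <= visitors")
    p = conversions / visitors
    z2 = z * z
    denom = 1 + z2 / visitors
    centre = (p + z2 / (2 * visitors)) / denom
    half_width = z * math.sqrt(p * (1 - p) / visitors + z2 / (4 * visitors ** 2)) / denom
    return centre - half_width, centre + half_width
```

Both 3 conversions from 100 visitors and 300 from 10,000 give a 3% conversion rate, but the interval for the small sample spans roughly 1% to 8.5%, so a ROAS computed from it (with spend and order value held fixed) could plausibly be anywhere from about a third of the point estimate to nearly three times it. The larger sample narrows the interval to about 2.7% to 3.4%.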

Customer Journey Complexity: Modern customer journeys involve multiple touchpoints across various channels and devices before conversion occurs. This complexity makes it challenging to accurately assign credit to specific advertising interactions. Customers might see display ads, search for brands, visit websites multiple times, and engage with social media before purchasing, creating attribution challenges that simple measurement models struggle to address effectively.

Timeline

- May 2025: European digital advertising reaches €118.9 billion with measurement challenges persisting across platforms

- March 2025: Google reintroduces last-touch attribution models amid platform competition concerns

- March 2025: Attribution research reveals fundamental challenges with 54% of advertisers using flawed last-touch models

- October 2024: IAB introduces privacy-first attribution protocol addressing measurement transparency

- August 2024: Amazon DSP expands conversion modeling for anonymous inventory challenges