A comprehensive study testing artificial intelligence tools for pay-per-click advertising guidance revealed significant accuracy issues across five major platforms. The research, conducted by WordStream and published July 10, 2025, found that 20% of all AI responses to PPC-related questions contained inaccurate information.

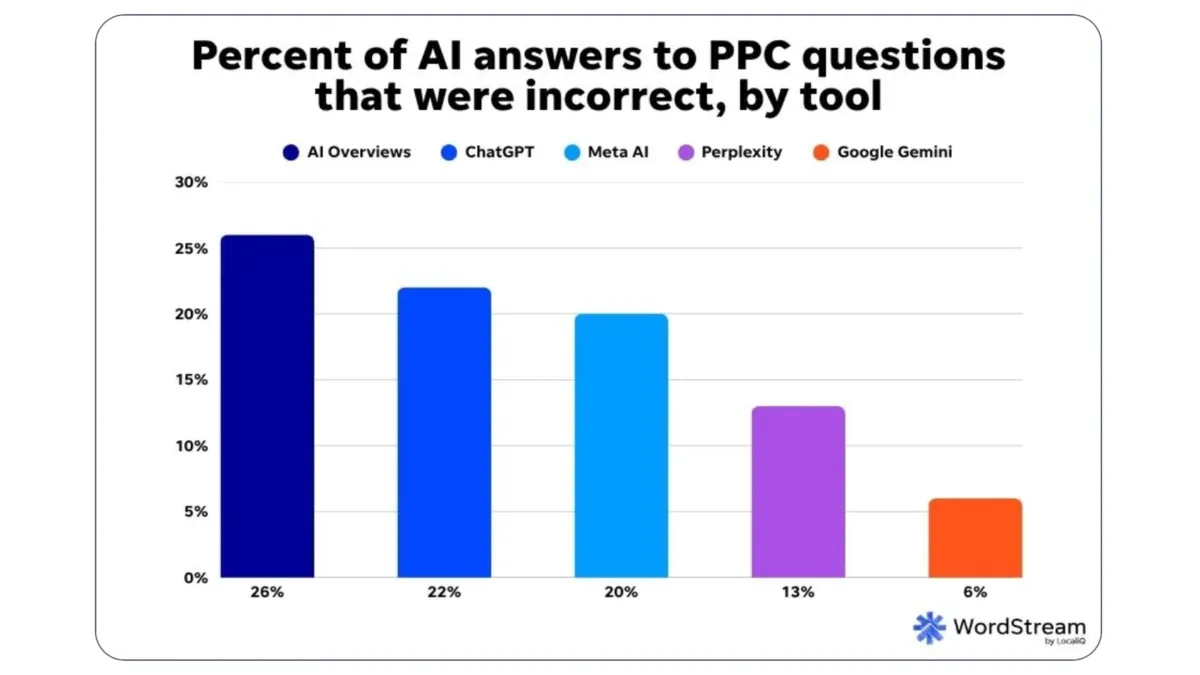

The experiment tested ChatGPT, Google AI Overviews, Google Gemini, Perplexity, and Meta AI with 45 identical questions about PPC best practices, platform capabilities, and industry trends. Google AI Overviews demonstrated the poorest performance with 26% incorrect answers, while Google Gemini achieved the highest accuracy rate with only 6% incorrect responses.

According to the study results, ChatGPT generated incorrect answers 22% of the time, Meta AI produced wrong information in 20% of responses, and Perplexity showed a 13% error rate. The findings highlight critical reliability concerns for advertisers increasingly dependent on AI assistance for campaign management and strategic decisions.

Summary

Who: WordStream conducted the study testing five AI platforms (ChatGPT, Google AI Overviews, Google Gemini, Perplexity, Meta AI) for PPC advertising accuracy.

What: Research revealed a 20% error rate across 225 AI responses to 45 PPC questions, with Google AI Overviews showing the highest inaccuracy (26%) and Google Gemini the best performance (6% error rate).

When: The study was published July 10, 2025, testing current AI capabilities against established PPC best practices and recent industry changes.

Where: Testing focused on major AI platforms accessible to advertisers globally, with implications for digital marketing professionals managing PPC campaigns across Google, Meta, and other advertising platforms.

Why: Rising AI adoption in advertising workflows necessitates accuracy assessment to protect ad spend and campaign performance, especially as platforms integrate AI-powered features and advertisers increasingly rely on automated guidance for strategic decisions.

Accuracy breakdown reveals platform-specific weaknesses

The WordStream research examined fundamental PPC topics including Google Ads policies, bidding strategies, Quality Score optimization, and performance metrics. Questions ranged from basic support contact information to complex strategy recommendations and current industry trends.

Google AI Overviews' poor performance proves particularly concerning given their prominent placement in search results. According to industry statistics cited in the study, AI Overviews appear on 55% of searches, potentially exposing millions of users to inaccurate advertising guidance. The study noted that 70% of consumers report trusting generative AI search results, creating substantial risk for misinformed decision-making.

Google Gemini's superior accuracy suggests better underlying data and training for advertising scenarios. The study authors noted that Google announced at Google Marketing Live 2025 the launch of an AI agent called Marketing Advisor within the Google Ads platform. If built using similar technology to Gemini, this tool could provide more reliable guidance than current alternatives.

Platform-specific biases emerged throughout testing. Meta AI consistently framed responses through a Facebook Ads perspective, even when answering Google Ads questions. For example, when asked about PPC ROI metrics, Meta AI suggested tracking "profit per impression or click" and "break-even cost per conversion" - metrics more applicable to Facebook advertising than search campaigns.

Technical limitations expose knowledge gaps

Several tools failed completely on advanced PPC tasks. When asked to write a Google Ads script for automatically pausing high-cost keywords, both Google Gemini and AI Overviews declined the request. Gemini cited security concerns and potential account damage, while AI Overviews provided only basic information about JavaScript requirements without actual code.

This pattern suggests Google-owned AI tools may deliberately discourage advanced PPC strategies, potentially directing users toward Google support representatives trained to promote additional services. Only ChatGPT, Perplexity, and Meta AI provided functional scripts for the automation request.

Keyword research capabilities proved inadequate across most platforms. Four of five tools failed to deliver quality keyword recommendations when prompted for "high volume, high intent" terms with "low cost and competition." Instead of providing niche, long-tail keywords as requested, tools suggested broad, highly competitive terms like "Best Online Master's Programs" and "Top Computer Science Colleges."

According to WordStream's own keyword research data, education-related terms currently rank among the most expensive and competitive in Google Ads. The Education and Instruction sector experienced the largest year-over-year increase in average cost per lead, contradicting the tools' suggestions for budget-conscious campaigns.

Performance analysis reveals outdated information

Multiple platforms provided outdated Google Ads feature information. Both Meta AI and AI Overviews referenced Enhanced CPC bidding, which Google phased out in October 2024. They also mentioned text ads, replaced by Responsive Search Ads as the default format since 2023, and incorrectly referred to "ad extensions" rather than the current "ad assets" terminology.

Cost and performance data accuracy varied significantly between tools. ChatGPT dramatically overestimated expensive keyword costs, suggesting average CPCs reaching $300 for high-cost industries. Gemini and Perplexity provided more accurate estimates using WordStream's 2025 benchmark data, while Meta AI relied on outdated 2024 figures.

When presented with poor campaign performance data - 13 clicks, 1.19% click-through rate, $48.66 average CPC, zero conversions, and $632.59 spent over two weeks - AI tools demonstrated concerning response patterns. ChatGPT provided an overly encouraging assessment, potentially reflecting recent updates making the tool "overly flattering and agreeable" according to user feedback from April 2025.

Meta AI incorrectly characterized the 1.19% CTR as "not terrible" by comparing it to an outdated 1.5-2% average range. According to WordStream's current Google Ads benchmarks, the actual average CTR is 7.52%, making the sample performance significantly worse than Meta AI indicated.
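The gap between the sample campaign and the benchmark can be checked with simple arithmetic. The sketch below uses only the figures quoted above; the impression count is not reported in the article, so the value computed here is an estimate implied by clicks divided by CTR, and the variable names are illustrative.

```python
# Figures quoted in the article for the sample campaign.
clicks = 13
ctr = 0.0119             # 1.19% click-through rate
avg_cpc = 48.66          # USD
reported_spend = 632.59  # USD over two weeks

# Reference points: WordStream's cited Google Ads average CTR and the
# low end of the outdated 1.5-2% range Meta AI compared against.
benchmark_ctr = 0.0752
meta_ai_low_ctr = 0.015

# Assumption: impressions are not reported, so estimate them from clicks / CTR.
estimated_impressions = round(clicks / ctr)

# Spend implied by clicks x average CPC; it should sit close to reported spend.
implied_spend = clicks * avg_cpc

# How the sample CTR compares with each reference point.
share_of_benchmark = ctr / benchmark_ctr
share_of_meta_ai_low = ctr / meta_ai_low_ctr

print(f"Estimated impressions: {estimated_impressions}")
print(f"Implied spend: ${implied_spend:.2f} (reported ${reported_spend:.2f})")
print(f"CTR vs. 7.52% benchmark: {share_of_benchmark:.0%}")
print(f"CTR vs. 1.5% reference: {share_of_meta_ai_low:.0%}")
```

Against the 1.5% figure the sample CTR looks only modestly low, at roughly four fifths of that reference. Against the 7.52% benchmark it is roughly one sixth of the average, which is why the "not terrible" assessment is misleading.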

Cross-platform reliability concerns for marketing decisions

The study revealed that asking different AI tools identical questions produces inconsistent answers, even for basic metrics. When questioned about average Facebook lead generation costs, tools provided dramatically different ranges. The correct answer of $21.98, according to WordStream's Facebook Ads benchmark report, fell within the broad ranges some tools provided, but those ranges lacked the precision needed for budget planning.

Meta AI achieved perfect accuracy on Facebook Ads questions while struggling with Google Ads information. This platform-specific expertise suggests advertisers must match AI tools to their specific advertising platforms rather than relying on general-purpose solutions.

ChatGPT uniquely promoted itself as a PPC support option when asked about Google Ads assistance. The response included "ChatGPT (Me!)" alongside legitimate marketing agencies and platforms, demonstrating potential conflicts between AI tool providers and objective guidance.

Industry implications for AI-assisted advertising

The findings arrive as artificial intelligence becomes increasingly integrated into advertising workflows. StackAdapt recently launched an AI assistant processing over 1,700 platform messages in 30 days, while Google explores Model Context Protocol integration for third-party AI tools accessing Google Ads accounts.

Changing search behavior raises the stakes for accuracy in advertising guidance. Microsoft Advertising reports that conversational search experiences enabled by generative AI double click-through rates and increase purchases by 53% when AI assists the customer journey.

The study's timing coincides with Google's AI Mode advertising rollout, where users issue queries 2-3 times longer than traditional search. These extended queries provide enhanced targeting opportunities but require accurate AI interpretation for effective campaign optimization.

Perplexity AI's advertising strategy demonstrates the broader trend toward AI-integrated advertising. The platform launched sponsored questions and side media placements while emphasizing that answers remain unbiased by advertiser influence.

Recommendations for AI-assisted PPC management

The research concludes with specific guidance for advertisers navigating AI tool limitations. Users should select platforms based on specific advertising needs rather than assuming universal capability. Gemini performed best on Google Ads questions, while Meta AI excelled at Facebook Ads information.

Prompt quality significantly impacts response accuracy. Detailed, specific questions with relevant context produce better results than broad queries. However, even well-crafted prompts cannot overcome fundamental knowledge gaps or training limitations across platforms.

Cross-verification remains essential for any AI-generated PPC advice. The study emphasizes that no single tool achieved 100% accuracy, making independent confirmation through industry publications, expert sources, or multiple AI platforms necessary for reliable guidance.

The findings suggest that while AI tools can streamline certain PPC workflows, they cannot replace human expertise or authoritative industry resources. Advertisers must treat AI assistance as supplementary support requiring careful validation rather than definitive strategic guidance.

Timeline

- October 2024: Enhanced CPC bidding phased out by Google

- November 12, 2024: Perplexity AI reveals advertising formats with sponsored questions and side media

- December 2024: Perplexity reaches $9 billion valuation as AI advertising model evolves

- April 2025: ChatGPT update rolled back due to "overly flattering" user feedback

- May 2025: Google announces AI Mode features at Google I/O and the Marketing Advisor agent at Google Marketing Live

- July 2, 2025: Microsoft details generative AI impact on search advertising

- July 9, 2025: Perplexity launches Comet browser for premium subscribers

- July 10, 2025: WordStream publishes AI accuracy study results