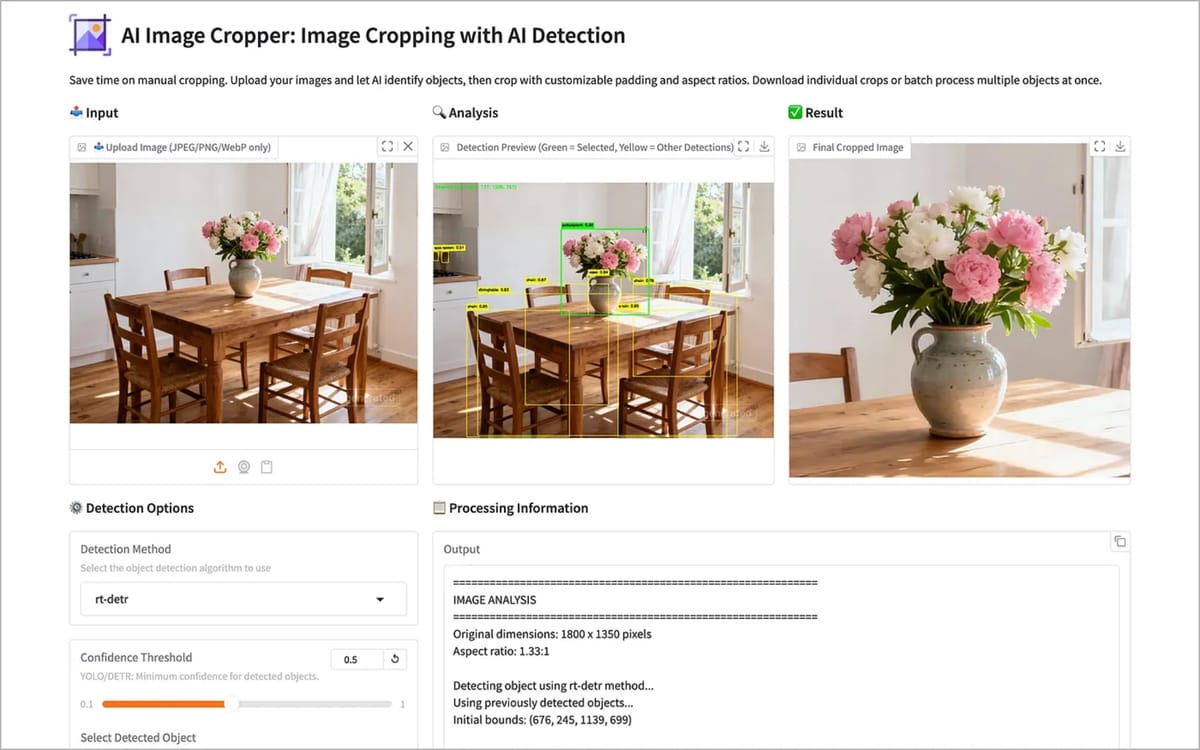

Technology consultant Gary Stafford published documentation on November 14, 2025, detailing an open-source Python tool that uses deep learning models to automatically identify, crop, and extract objects from advertising images. The AI Image Cropper project addresses time-consuming manual workflows advertisers face when processing large image volumes for campaigns across multiple platforms.

The tool supports both interactive web-based workflows through a user interface and automated batch processing via command-line operations for unattended image processing at scale. Stafford's implementation integrates three state-of-the-art object detection models: YOLO (You Only Look Once), DETR (DEtection TRansformer), and RT-DETR (Real-Time DEtection TRansformer).

Technical foundation and detection methods

Traditional computer vision approaches relied on hand-crafted algorithms analyzing edges, gradients, textures, and color distributions. These classical techniques, including contour detection, Canny edge detection, GrabCut segmentation, and saliency detection, dominated image processing from the 1980s through the early 2010s. While computationally efficient and requiring no training data, they struggle with complex backgrounds, partial occlusion, varying lighting conditions, and objects that blend into their surroundings.

Modern deep learning models address these limitations through learned features. YOLO, first published in June 2015 by researchers at the University of Washington and the Allen Institute for AI, fundamentally changed object detection by framing it as a single regression problem rather than repurposing image classifiers. Previous detectors like R-CNN ran a convolutional network separately on roughly 2,000 region proposals per image. YOLO's breakthrough was to process the entire image in one pass, predicting all bounding boxes and class probabilities simultaneously.
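To make "a single regression problem" concrete: in the original 2015 YOLO model, the image is divided into an S × S grid. Each cell predicts B boxes (four coordinates plus one confidence score each) and C class probabilities, so a single forward pass yields one fixed-size output tensor. With the paper's PASCAL VOC settings (S = 7, B = 2, C = 20), the size works out as follows. Later YOLO versions use different output layouts, so these numbers apply only to the original model.

```latex
\text{output size} = S \times S \times (5B + C) = 7 \times 7 \times (5 \cdot 2 + 20) = 7 \times 7 \times 30
```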

The YOLO family has evolved dramatically since 2015, with Joseph Redmon leading development through YOLOv3 in 2018. After Redmon stepped away from computer vision research in 2020, the community continued development with YOLOv4, which adopted a CSPDarknet backbone and introduced Mosaic data augmentation. Subsequent versions added anchor-free detection, transformer components, and improved training strategies. The current implementation uses YOLOv12x, a 119 MB PyTorch model file.

DETR introduced a radically different approach in May 2020 through the paper "End-to-End Object Detection with Transformers." Before DETR, object detectors relied on complex, hand-crafted pipelines with region proposals, anchor boxes, and non-maximum suppression. DETR simplified this by treating object detection as a direct set prediction problem, predicting all objects simultaneously rather than sequentially processing region proposals. By adapting transformers initially designed for natural language processing tasks to vision, DETR demonstrated that self-attention mechanisms used for language understanding could capture spatial relationships among objects in images.
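For context on what DETR removed, consider how conventional detectors handle overlap. They emit many overlapping candidate boxes and then prune duplicates with non-maximum suppression (NMS), which measures overlap as intersection over union (IoU). The following is a minimal, generic greedy NMS in Python. It is not code from the AI Image Cropper project, and the box format and the 0.5 threshold are illustrative assumptions.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: keep the highest-scoring box, drop boxes that overlap it too much, repeat."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep


# Two near-duplicate predictions for one object, plus one separate object.
boxes = [(10, 10, 110, 110), (12, 12, 112, 112), (200, 50, 300, 150)]
scores = [0.90, 0.75, 0.80]
print(nms(boxes, scores))  # [0, 2]
```

DETR's set-prediction training assigns each ground-truth object to exactly one prediction. That is why the model can skip this post-processing step entirely.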

RT-DETR represents Baidu's 2023 solution to making transformer-based detectors fast enough for real-time use. While DETR introduced an elegant approach, its slow inference speed made it impractical for applications like video processing or autonomous vehicles. RT-DETR addresses this with an efficient hybrid encoder that dramatically reduces computational costs while maintaining accuracy, and IoU-aware query selection that helps the model focus on high-confidence detections.

Performance characteristics and testing

Stafford conducted performance testing across three image collections: 13 sample images included with the project, 26 Pexels images of dogs, and 250 images from the Roboflow Vehicles-OpenImages computer vision dataset. Testing used an M4 Max processor with 36 GB of memory.

For 13 sample images, YOLO processed all images in 13.2 seconds total (1.02 seconds per image), detecting 73 total objects. DETR required 43.9 seconds (3.38 seconds per image) detecting 71 objects. RT-DETR completed processing in 41.1 seconds (3.16 seconds per image) detecting 77 objects.

The 26-image dog dataset showed YOLO processing in 24.6 seconds (0.95 seconds per image) detecting 28 objects. DETR required 91.4 seconds (3.52 seconds per image) detecting 29 objects. RT-DETR processed in 84.7 seconds (3.26 seconds per image) detecting 31 objects.

For the 250-image dataset, YOLO completed processing in 266.3 seconds (1.07 seconds per image) detecting 630 objects. DETR required 836.4 seconds (3.35 seconds per image) detecting 574 objects. RT-DETR processed in 812.4 seconds (3.25 seconds per image) detecting 652 objects.
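The per-image figures are total time divided by image count. The short script below reproduces them from the reported totals and also prints each model's total time relative to YOLO. In these runs, DETR and RT-DETR took roughly three to four times as long as YOLO. The data structure and function name are illustrative only.

```python
# Reported totals per collection: (image count, {model: (total seconds, objects detected)}).
RESULTS = {
    "13 sample images": (13, {"YOLO": (13.2, 73), "DETR": (43.9, 71), "RT-DETR": (41.1, 77)}),
    "26 Pexels dog images": (26, {"YOLO": (24.6, 28), "DETR": (91.4, 29), "RT-DETR": (84.7, 31)}),
    "250 Roboflow vehicle images": (250, {"YOLO": (266.3, 630), "DETR": (836.4, 574), "RT-DETR": (812.4, 652)}),
}


def per_image_seconds(total_seconds: float, n_images: int) -> float:
    """Average wall-clock time per image, rounded to two decimals as in the write-up."""
    return round(total_seconds / n_images, 2)


for collection, (n_images, models) in RESULTS.items():
    yolo_total = models["YOLO"][0]
    for model, (total, objects) in models.items():
        avg = per_image_seconds(total, n_images)
        relative = total / yolo_total  # how many times longer than YOLO on the same images
        print(f"{collection:28} {model:8} {avg:5.2f} s/img {objects:4d} objects {relative:.1f}x YOLO time")
```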

Testing with an NVIDIA GeForce RTX 4080 SUPER with 16 GB of memory showed dramatic performance improvements for GPU-accelerated processing compared to CPU-only operations.

Practical applications for marketing

The tool addresses four primary use cases for marketing professionals. E-commerce product photography workflows can extract individual products from multi-item photos for catalog listings. Social media content creation enables automatic cropping of portrait images to multiple aspect ratios (Instagram 1:1, Stories 9:16, Posts 4:5) without manual intervention. Dataset preparation for machine learning projects allows extraction of labeled objects from scene images for training computer vision models. Document processing applications can detect and extract specific elements, including signatures, logos, and stamps, from scanned documents.
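Producing the 1:1, 9:16, and 4:5 variants from a single detection mostly comes down to two steps: grow the bounding box to the target ratio, then keep it inside the frame. The sketch below is a generic approach written for illustration. The function name, the box convention, and the centering strategy are assumptions, not the project's code.

```python
from typing import Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2); x2 and y2 are exclusive


def expand_to_aspect(box: Box, ratio_w: int, ratio_h: int, img_w: int, img_h: int) -> Box:
    """Grow a detection box to ratio_w:ratio_h, centered on the object, then shift it
    inside the image. The box shrinks (and may clip the object) only if the image is too small."""
    x1, y1, x2, y2 = box
    w, h = x2 - x1, y2 - y1
    target = ratio_w / ratio_h
    # Widen or heighten the box; never cut into the detected object at this step.
    if w / h < target:
        w = round(h * target)
    else:
        h = round(w / target)
    # Cap at the image size while preserving the ratio.
    if w > img_w:
        w, h = img_w, round(img_w / target)
    if h > img_h:
        h, w = img_h, round(img_h * target)
    # Center on the original box, then clamp so the crop stays within the image.
    cx, cy = (x1 + x2) / 2, (y1 + y2) / 2
    nx1 = int(min(max(cx - w / 2, 0), img_w - w))
    ny1 = int(min(max(cy - h / 2, 0), img_h - h))
    return (nx1, ny1, nx1 + w, ny1 + h)


# A standing person detected in a 1080x1350 portrait photo.
person = (400, 200, 700, 1000)
print(expand_to_aspect(person, 1, 1, 1080, 1350))   # (150, 200, 950, 1000)
print(expand_to_aspect(person, 9, 16, 1080, 1350))  # (325, 200, 775, 1000)
print(expand_to_aspect(person, 4, 5, 1080, 1350))   # (230, 200, 870, 1000)
```

Growing the box rather than shrinking it keeps the whole subject in frame. The trade-off is that extra background is included when the object's shape differs strongly from the target ratio.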

Amazon has expanded AI-powered creative tools throughout 2024 and 2025, introducing Image Generator and Video Generator for advertisers. According to Amazon Ads findings, brands using Image Generator between October 2023 and June 2024 saw almost 5% more sales per advertiser on average.

Meta introduced Creative breakdown functionality on July 11, 2025, enabling performance analysis by individual creative elements including Flexible format components and AI-generated images. The update addressed advertiser demands for transparency in AI-driven optimization processes. In April 2025, Meta reported 30% more advertisers using AI creative tools in Q1, while Advantage+ sales campaigns showed an average 22% boost in return on ad spend.

Google launched Asset Studio on September 10, 2025, consolidating creative production with Imagen 4 integration for advanced image creation and Veo technology for video generation. The platform enables advertisers to generate, edit, and scale advertising assets using artificial intelligence without external design tools.

Object detection and dataset training

The COCO (Common Objects in Context) dataset is widely used for training and evaluating deep learning models in object detection, including YOLO. COCO contains 330,000 images, of which 200,000 have annotations for object detection, segmentation, and captioning. The dataset comprises 80 object categories, including everyday objects like cars, bicycles, and animals, as well as more specific categories such as umbrellas, handbags, and sports equipment.

For specialized applications that require detecting objects outside these categories, models would need fine-tuning on domain-specific datasets. This limitation affects advertisers working with industry-specific products or branded items not represented in standard training datasets.

The tool's architecture provides seven detection algorithms:

- Contour: boundary detection using pixel intensity gradients
- Saliency: attention-based highlighting of visually distinctive regions
- Edge detection: the Canny algorithm, which detects sharp intensity transitions
- GrabCut: a graph-cut algorithm that separates foreground objects from the background
- YOLO: a CNN-based real-time detector trained on the 80 COCO object classes
- DETR: a transformer-based detector using self-attention, which excels with overlapping objects
- RT-DETR: bridges the gap between transformer intelligence and YOLO-level speed
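The classical methods in this list work directly on pixel values rather than learned features. To illustrate the gradient-based idea behind the contour and edge methods, the dependency-free sketch below computes a 3×3 Sobel gradient magnitude and returns the bounding box of all strong-gradient pixels. It is a simplified stand-in, not the project's implementation. Real Canny adds Gaussian smoothing, non-maximum suppression, and hysteresis thresholding, and a production tool would more likely call an optimized library such as OpenCV. The function name and the threshold of 100 are arbitrary choices for this example.

```python
import math
from typing import List, Optional, Tuple


def gradient_bbox(gray: List[List[float]], threshold: float = 100.0) -> Optional[Tuple[int, int, int, int]]:
    """Bounding box (x_min, y_min, x_max, y_max, inclusive) of pixels whose Sobel
    gradient magnitude exceeds `threshold`, or None if no edges are found."""
    h, w = len(gray), len(gray[0])
    xs: List[int] = []
    ys: List[int] = []
    for y in range(1, h - 1):  # skip the 1-pixel border the 3x3 kernel cannot cover
        for x in range(1, w - 1):
            tl, tc, tr = gray[y - 1][x - 1], gray[y - 1][x], gray[y - 1][x + 1]
            ml, mr = gray[y][x - 1], gray[y][x + 1]
            bl, bc, br = gray[y + 1][x - 1], gray[y + 1][x], gray[y + 1][x + 1]
            gx = (tr + 2 * mr + br) - (tl + 2 * ml + bl)  # horizontal intensity change
            gy = (bl + 2 * bc + br) - (tl + 2 * tc + tr)  # vertical intensity change
            if math.hypot(gx, gy) > threshold:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return (min(xs), min(ys), max(xs), max(ys))


# A 10x10 dark image with a bright square covering rows 3-6 and columns 2-5.
img = [[255 if 3 <= y <= 6 and 2 <= x <= 5 else 0 for x in range(10)] for y in range(10)]
print(gradient_bbox(img))  # (1, 2, 6, 7)
```

The box extends one pixel beyond the square on each side because the gradient is nonzero on both sides of an edge. For the same reason, any textured or cluttered background produces stray strong gradients that inflate the box, which is the weakness the learned detectors address.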

Automation and batch processing capabilities

The batch processing system is optimized for high throughput through model caching, where YOLO loads once and persists across all images. Efficient memory management processes one image at a time to avoid memory bloat. Error resilience ensures failures on individual objects don't stop the batch job. Quality control provides configurable confidence thresholds to filter low-quality detections. Automatic file naming includes object label and confidence score for easy filtering.
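The filtering, error isolation, and naming behavior described above can be sketched as a per-image loop. Everything here is hypothetical and for illustration only: the `Detection` type, the function names, and the exact filename pattern are assumptions. The actual detection and image-saving steps are hidden behind a `save_crop` callback.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Detection:
    label: str
    confidence: float
    box: Tuple[int, int, int, int]  # (x1, y1, x2, y2)


def crop_filename(stem: str, index: int, det: Detection) -> str:
    """Hypothetical naming scheme: image stem, detection index, label, confidence."""
    return f"{stem}_{index}_{det.label}_{det.confidence:.2f}.jpg"


def process_detections(
    stem: str,
    detections: List[Detection],
    save_crop: Callable[[Detection, str], None],
    min_confidence: float = 0.5,
    wanted_label: Optional[str] = None,
) -> Tuple[List[str], List[Tuple[str, str]]]:
    """Filter by confidence and label, save each crop, and record failures
    instead of aborting the whole batch."""
    kept = [
        d for d in detections
        if d.confidence >= min_confidence and (wanted_label is None or d.label == wanted_label)
    ]
    saved: List[str] = []
    failed: List[Tuple[str, str]] = []
    for index, det in enumerate(kept):
        name = crop_filename(stem, index, det)
        try:
            save_crop(det, name)
        except Exception as exc:  # one bad object should not stop the batch
            failed.append((name, str(exc)))
        else:
            saved.append(name)
    return saved, failed


dets = [
    Detection("person", 0.91, (0, 0, 50, 100)),
    Detection("person", 0.32, (60, 0, 90, 80)),    # below threshold, skipped
    Detection("dog", 0.77, (100, 40, 160, 100)),   # wrong label, skipped
    Detection("person", 0.64, (-5, 0, 40, 90)),    # will fail in the fake saver
]


def fake_save(det: Detection, name: str) -> None:
    if det.box[0] < 0:
        raise ValueError("box outside image")


saved, failed = process_detections("family", dets, fake_save, min_confidence=0.5, wanted_label="person")
print(saved)   # ['family_0_person_0.91.jpg']
print(failed)  # [('family_1_person_0.64.jpg', 'box outside image')]
```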

For instance, processing a family photo to extract all detected person objects using YOLO with 10% padding saves results to a single directory. The output logs show successful cropping of 6 objects with individual files named by detection index, object label, and confidence score. Each cropped family member becomes a separate file for further use in advertising campaigns or social media content.

In an e-commerce product extraction example, DETR extracts all chair objects from a directory of home furnishings images using a 1:1 aspect ratio, a 70% confidence threshold, and 10% padding. The resulting individually cropped chairs from six different images are ready for catalog listings without manual intervention.

Machine learning practitioners preparing image training datasets can isolate subjects from their environments. A hypothetical dataset of dogs from stock photography sources can be processed to extract all dogs from images with 80% confidence threshold and 10% padding, producing individually cropped subjects ready for association with accompanying metadata.

Commercial alternatives and market context

Commercial smart cropping tools fall into three categories. Consumer tools like Canva, Fotor, and Google Photos offer free or low-cost AI cropping with automatic focal point detection, optimized for social media and personal use. Professional tools such as Luminar Neo and Neurapix (a Lightroom plugin) provide advanced content-aware cropping for photographers. Enterprise solutions like Cloudinary deliver API-based cropping at scale for high-volume applications, while background-removal services including Remove.bg and Clipping Magic double as smart croppers by automatically focusing on subjects.

Adobe Photoshop combines subject detection through Select Subject with the Crop tool and optionally Content-Aware Crop, allowing intelligent reframing while ensuring the subject remains prominent and the background is automatically extended or filled for a cohesive result using Generative AI. This workflow serves banner creation, portrait editing, and dynamic reframing tasks where background synthesis preserves composition. Automating subject detection and cropping in Photoshop is possible and increasingly effective due to AI advancements, but the process has strengths and limitations depending on requirements and image complexity.

Adobe expanded GenStudio on October 28, 2025, introducing proprietary AI model customization through Adobe Firefly Foundry alongside integrations with major advertising platforms including Amazon Ads, Google Marketing Platform, Innovid, LinkedIn, and TikTok. The announcements arrived at Adobe MAX, with the company reporting that 99% of Fortune 100 companies have used AI in an Adobe application.

MNTN launched QuickFrame AI in October 2025, enabling studio-quality ad creation in 12 minutes combining Google Veo, ElevenLabs, and Stability AI. The platform integrates multiple generative AI technologies into a single creative workspace for enterprise advertisers.

Industry automation trends

The AI Image Cropper release coincides with broader automation trends across advertising technology. Amazon announced Ads Agent on November 11, 2025, at its annual unBoxed conference. The artificial intelligence agent automates campaign management tasks across Amazon Marketing Cloud and Multimedia Solutions with Amazon DSP, representing a technical advancement in how advertisers interact with Amazon's advertising platforms.

LiveRamp introduced agentic AI tools on October 1, 2025, including AI-Powered Segmentation enabling marketers to create audience segments using natural language prompts. The feature processes first-party, second-party, and third-party data sources to generate precise segments. Marketers can explore, build, and activate segments within minutes through conversational interfaces.

McKinsey identified AI agents reshaping the advertising landscape with $1.1 billion in equity investment in 2024. The July 27, 2025 report revealed that agentic AI represents artificial intelligence systems that operate autonomously to plan and execute complex workflows without constant human supervision. Unlike traditional AI that responds to specific prompts, agentic AI creates virtual coworkers capable of managing entire marketing campaigns, from audience analysis to budget optimization.

The Ad Context Protocol launched on October 15, 2025, seeking to create an open-source specification for the use of Model Context Protocol for advertising use cases. The protocol attempts to establish infrastructure allowing AI agents to execute advertising tasks across fragmented platforms. Scope3, Yahoo, PubMatic, The Weather Company, and numerous other vendors back the initiative.

IAB Tech Lab released its Agentic RTB Framework version 1.0 for public comment on November 13, 2025, introducing standardized specifications for deploying containerized agents within real-time bidding infrastructure. The framework enters a public comment period extending through January 15, 2026.

Configuration and implementation details

The tool uses the uv package manager for Python dependency management. Installation requires cloning the GitHub repository, running uv sync to install dependencies, and sourcing the virtual environment. The web interface launches at http://127.0.0.1:7860 with an intuitive drag-and-drop interface. For batch processing thousands of images, the command-line interface provides comprehensive automation options.

All defaults are centralized in a configuration file for easy tuning. Default confidence thresholds vary by detection method: 0.7 for DETR, 0.5 for YOLO, and 0.5 for RT-DETR. Default padding adds 8% breathing room around detected objects. Batch processing saves to a "cropped_images" directory with 95% JPEG quality.
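A centralized defaults object of the kind described might look like the sketch below. The values (0.7/0.5/0.5 confidence thresholds, 8% padding, `cropped_images`, JPEG quality 95) come from the documentation. The class, field, and function names are assumptions, as is the reading of "padding" as a fraction of the box's width and height added on each side.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass(frozen=True)
class CropDefaults:
    # Values as described for the project; names are hypothetical.
    confidence: Dict[str, float] = field(
        default_factory=lambda: {"detr": 0.7, "yolo": 0.5, "rt-detr": 0.5}
    )
    padding: float = 0.08  # assumed: fraction of box width/height added on each side
    output_dir: str = "cropped_images"
    jpeg_quality: int = 95


def pad_box(box: Tuple[int, int, int, int], padding: float, img_w: int, img_h: int) -> Tuple[int, int, int, int]:
    """Expand (x1, y1, x2, y2) by padding x box size on each side, clamped to the image."""
    x1, y1, x2, y2 = box
    dx = round((x2 - x1) * padding)
    dy = round((y2 - y1) * padding)
    return (max(0, x1 - dx), max(0, y1 - dy), min(img_w, x2 + dx), min(img_h, y2 + dy))


defaults = CropDefaults()
print(pad_box((100, 100, 300, 400), defaults.padding, 1000, 1000))  # (84, 76, 316, 424)
print(pad_box((0, 10, 50, 60), 0.10, 100, 100))                    # (0, 5, 55, 65): clamped at the left edge
```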

Model caching occurs in two locations: the HuggingFace cache directory and within the project's models subdirectory. First calls to detection methods experience delays during initial model downloads, but subsequent calls utilize cached models for faster processing.

The Gradio web UI provides real-time object detection with live preview, interactive object selection through dropdown menus for multiple detections, and one-click batch cropping of all detected objects. Detailed image analysis includes original dimensions, aspect ratio, detection method results, initial bounds, all detected objects with confidence scores, selected object details, bounds with padding, and adjusted bounds for custom aspect ratios.

Technical requirements and limitations

The tool requires Python and supports common image formats including JPG, JPEG, PNG, and WEBP.

The tool demonstrates how modern object detection models like YOLO and DETR can be packaged into practical, production-ready tools. By offering both interactive and command-line interfaces, it serves use cases ranging from exploratory work in the Gradio UI to fully automated batch processing pipelines. Multiple detection methods provide flexibility for different image types: YOLO offers the best speed-accuracy trade-off for most use cases, while DETR excels at complex scenes with overlapping objects. Batch processing, meanwhile, enables automation without human intervention.

Timeline

- June 2015: Joseph Redmon and colleagues publish YOLO, fundamentally changing object detection by treating it as a single regression problem

- May 2020: DETR (DEtection TRansformer) introduced in paper "End-to-End Object Detection with Transformers"

- 2023: Baidu releases RT-DETR (Real-Time DEtection TRansformer) addressing transformer-based detector speed limitations

- October 15, 2024: Amazon unveils AI Creative Studio and Audio Generator at unBoxed 2024 event

- July 11, 2025: Meta unveils Creative breakdown for Flexible formats and AI-generated image ads

- July 27, 2025: McKinsey identifies AI agents reshaping advertising landscape with $1.1 billion in equity investment

- September 10, 2025: Google launches Asset Studio for advertising creative production with Imagen 4 integration

- October 1, 2025: LiveRamp introduces agentic AI tools for marketing automation

- October 15, 2025: Ad Context Protocol launches for advertising automation

- October 28, 2025: Adobe expands GenStudio with custom AI models and ad platform integrations

- October 30, 2025: MNTN launches QuickFrame AI to create studio-quality ads in 12 minutes

- November 11, 2025: Amazon launches AI agent for automated campaign management

- November 13, 2025: IAB Tech Lab releases Agentic RTB Framework version 1.0 for public comment

- November 14, 2025: Gary Stafford publishes documentation for AI Image Cropper open-source tool combining YOLO, DETR, and RT-DETR

Summary

Who: Technology consultant Gary Stafford developed and published documentation for an open-source Python tool that automates image cropping for advertisers, content creators, e-commerce businesses, and machine learning practitioners. The tool integrates research from the University of Washington, Allen Institute for AI, Facebook AI Research, Baidu, and Peking University through their published object detection models.

What: The AI Image Cropper project provides a Python-based tool leveraging YOLO, DETR, and RT-DETR object detection models to automatically identify, crop, and extract objects from images. The tool supports both interactive web-based workflows through a Gradio user interface and automated batch processing via command-line operations for processing thousands of images without human intervention. It offers seven detection algorithms, aspect ratio controls, configurable padding, and confidence tuning.

When: Stafford published the documentation on November 14, 2025, coinciding with broader industry adoption of AI-powered creative automation tools. Major platforms including Amazon, Meta, Google, Adobe, and MNTN have launched AI creative tools throughout 2024 and 2025. The timing reflects accelerating adoption of artificial intelligence for advertising content production.

Where: The open-source code is available on GitHub under the repository name "ai-image-cropper." The tool operates locally on user hardware, processing images in a designated working directory. The web interface launches at http://127.0.0.1:7860. Models are cached locally in the HuggingFace cache directory and within the project's models subdirectory. The tool has been tested on M4 Max processors and NVIDIA GeForce RTX 4080 SUPER GPUs.

Why: Manual image cropping is time-consuming and error-prone, especially when dealing with hundreds or thousands of images for advertising campaigns. Traditional computer vision approaches struggle with complex backgrounds, partial occlusion, and varying lighting conditions. Modern deep learning models address these limitations through learned features. The automation enables advertisers to process large image volumes for e-commerce catalogs, social media content across multiple aspect ratios, machine learning dataset preparation, and document processing workflows without manual intervention. The development addresses mounting pressure on advertisers to deliver continuous streams of fresh, personalized creative across multiple distribution channels while traditional production models have become too slow and costly.