Traffic to the internet's most established websites fell more than 11% over the past five years, revealing the structural pressures traditional publishers face as artificial intelligence reshapes how people find information online, according to data from Similarweb analyzed by Axios and published January 3, 2026.

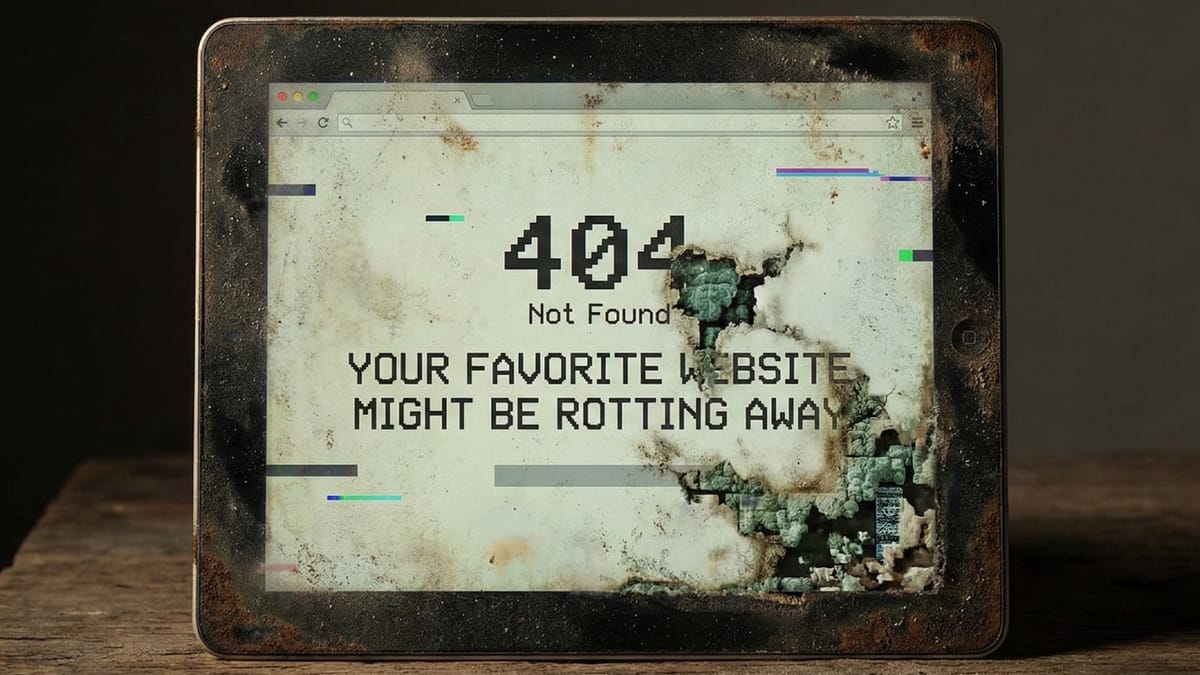

The decline affects older websites that built their businesses during the search-dominated era of the internet. These sites don't simply disappear when traffic drops. They persist on the open web for years, accumulating broken links and outdated information that clutters search results while providing diminishing value to both human readers and AI systems training on web content.

Internet usage continues growing globally, but the traffic patterns tell a story of redistribution rather than expansion. Overall traffic to the top 1,000 websites remained relatively stable at approximately 300 billion average monthly web visits over the past five years. The aggregate even showed modest growth of 1.8% between November 2024 and November 2025.

Remove newer websites from that calculation, however, and a different picture emerges. Sites that existed five years ago experienced a 1.6% traffic decline over the past year when measured on the same basis. The figure excludes websites that disappeared entirely during the measurement period.

A quarter of web pages have vanished

Research from Pew Research Center documented the scale of content decay across the internet. The study found that 25% of all webpages that existed at some point between 2013 and 2023 are no longer accessible. The disappearance rate varies significantly by content age, with pages created in 2013 showing 38% inaccessibility by October 2023, while pages from 2023 showed only 8% inaccessibility.

News organizations face particular vulnerability to link decay. According to the Pew findings, 23% of news webpages contain at least one broken link. Government websites demonstrate similar problems, with 21% of government pages containing broken links.

Inaccessibility generally rises with page age, though not in a straight line. Pages created in 2014 showed 35% inaccessibility, falling gradually to 31% for 2015 content, 30% for 2016 material, and 26% for 2017 pages. The rate rose again for 2018 (31%) and 2019 (32%) before falling to 27% for 2020 content.

Content from more recent years showed better preservation rates. Pages from 2021 demonstrated 22% inaccessibility, 2022 showed 15% inaccessibility, and 2023 content reached only 8% inaccessibility by the October 2023 measurement date.

The slow degradation of older websites creates significant challenges for both users seeking information and AI systems attempting to gather training data. Search engines return results pointing to pages that no longer exist or redirect elsewhere. Users encounter frustrating dead ends. AI training systems ingest outdated or incorrect information that undermines model quality.

Publishers struggle while some sites neglect maintenance

Some publishers have invested resources in maintaining and updating their web properties despite traffic challenges. These efforts include refreshing content, fixing broken links, updating outdated information, and ensuring technical infrastructure remains functional. The investments represent significant ongoing costs without corresponding revenue increases.

Many websites, however, take a different approach. According to the Axios analysis, numerous older sites remain online but receive minimal maintenance. These properties continue consuming server resources and domain registrations while providing increasingly limited value to visitors. The neglected sites accumulate technical debt, outdated content, and broken functionality that degrades user experience.

The maintenance challenge extends beyond individual pages to entire site architectures. Older content management systems fall behind on security updates. Third-party services integrated into pages disappear or change APIs. Images hosted on external services become unavailable. Embedded content from social media platforms breaks as those platforms evolve their embedding systems.

Publishing industry advocates have spent years arguing that AI firms scraping publisher content would eventually eliminate incentives for creating new material. The concern centers on a potential feedback loop where AI systems reduce publisher traffic, publishers reduce content creation, and AI systems then lack fresh training data beyond increasingly outdated material.

Data from multiple sources supports the traffic reduction thesis. Analysis from Ahrefs found that the presence of AI Overviews correlated with a 34.5% lower average click-through rate for the top-ranking page. The analysis compared click-through rates for informational queries that triggered AI summaries against similar queries that did not.
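Because the Ahrefs figure is a relative change in click-through rate (CTR), it scales whatever baseline a page already had rather than subtracting 34.5 percentage points. A worked sketch, assuming CTR is defined as clicks divided by impressions and using a purely hypothetical 5% baseline:

$$
\mathrm{CTR} = \frac{\text{clicks}}{\text{impressions}}, \qquad
\Delta_{\mathrm{rel}} = \frac{\mathrm{CTR}_{\text{no AIO}} - \mathrm{CTR}_{\text{AIO}}}{\mathrm{CTR}_{\text{no AIO}}} = 0.345
$$

$$
\mathrm{CTR}_{\text{AIO}} = (1 - 0.345)\,\mathrm{CTR}_{\text{no AIO}} = 0.655 \times 5\% \approx 3.3\%
$$

Under that assumption, a top-ranking page that would have drawn 5 clicks per 100 impressions draws roughly 3.3 when an AI Overview is present.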

Additional research showed AI chatbot referral traffic to top media and news websites running approximately 96% lower than traditional Google search referrals. The gap reflects a fundamental difference between AI-mediated discovery, which answers questions in place, and link-based search, which sends users to source pages.

OpenAI leads compensation attempts

The recognition of potential content exhaustion has prompted some AI companies to establish direct publisher relationships. OpenAI has emerged as the most active participant in these arrangements, according to industry reporting. The company has signed multiple agreements compensating publishers for content access.

The deals vary in structure and terms. Some include direct licensing fees paid upfront or on recurring schedules. Others incorporate revenue-sharing components where publishers receive payments based on how frequently AI systems reference their content. The specific terms typically remain confidential under non-disclosure provisions.

Publishers have advocated for establishing a "two-sided web marketplace" where AI companies pay for content on a per-usage basis. The concept mirrors dynamics from the search era when publishers received traffic and advertising revenue from search engine referrals. The proposed framework would create direct payment flows from AI companies to content creators.

The compensation arrangements remain limited in scale relative to the publishing industry's size. Dozens of publishers worldwide have signed deals with various AI companies, but thousands of publishers exist without such agreements. The payments represent small fractions of what those publishers previously earned from search traffic.

Implementation challenges prevent rapid scaling of compensation models. Measuring content usage in AI systems proves technically complex. Determining fair payment rates lacks industry standards. Negotiating individual deals with numerous publishers requires substantial administrative resources. Smaller publishers lack negotiating leverage to secure favorable terms.

Some publishers have taken defensive measures by blocking AI crawlers from accessing their content. Research findings suggest this strategy often backfires. Studies documented that publishers blocking AI crawlers experienced 23% total traffic losses and 14% human traffic declines compared to publishers allowing AI access.
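Blocking is usually declared in a site's robots.txt file, which compliant crawlers consult before fetching pages. A minimal sketch, assuming a hypothetical robots.txt that disallows OpenAI's GPTBot site-wide while allowing all other agents; it uses Python's standard-library `urllib.robotparser` to show how a compliant crawler would read those rules. The `is_allowed` helper and the example.com URL are illustrative, not part of any publisher's setup.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block OpenAI's GPTBot everywhere, allow all other agents.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if robots_txt permits user_agent to fetch url (hypothetical helper)."""
    parser = RobotFileParser()
    # parse() takes an iterable of lines, so no network request is made here.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for agent in ("GPTBot", "Googlebot"):
        # GPTBot matches its own group and is disallowed; Googlebot falls back to "*".
        print(agent, is_allowed(agent, "https://example.com/news/story.html"))
```

robots.txt is a voluntary convention: it only keeps out crawlers that choose to honor it. A real check against a live site would call `RobotFileParser.set_url()` and `read()` instead of parsing an inline string.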

Traffic patterns shift toward AI experiences

The traffic redistribution reflects changing user behaviors around information discovery. Traditional search workflows involved submitting queries, reviewing multiple result listings, clicking through to various websites, and synthesizing information across sources. This multi-step process generated substantial traffic to publisher sites.

AI-powered search experiences compress these workflows into single interactions. Users receive synthesized answers within search interfaces, often eliminating the need to visit external websites. The compression improves user convenience while reducing publisher traffic and revenue opportunities.

Google's Liz Reid has defended the company's AI search features by arguing they generate "higher quality" clicks than traditional search. The executive defined quality clicks as those where users don't immediately return to search results, suggesting genuine interest in destination content.

The quality argument provides limited comfort to publishers experiencing absolute traffic declines. Higher engagement per visit doesn't offset revenue losses when overall visit volume drops substantially. Publishers require specific traffic volumes to support their business models regardless of per-visit quality metrics.

Google has claimed that websites experiencing traffic losses had other factors contributing to declines beyond AI features. The company pointed to methodology concerns in third-party studies and suggested some publishers would have lost traffic regardless of AI implementation.

Publisher groups have disputed these characterizations. Industry organizations representing news outlets have described AI features as systematic appropriation of publishers' work, arguing that the features use content without generating corresponding traffic and revenue.

Google has expanded access to AI-powered search experiences to provide seamless transitions from traditional search results into conversational interfaces. The expansion further reduces friction in AI-mediated discovery while potentially decreasing publisher referrals. Users can now engage with AI features more directly within search interfaces where publisher links appear less prominently.

The measurement gap

Tracking traffic sources has become more complicated in the AI era. Google has added some attribution and measurement capabilities in response to publisher concerns, but these additions don't fully solve the problem. AI systems often synthesize information from multiple sources without clearly attributing specific claims to individual publishers. Users may consume information through AI interfaces without visiting source websites, creating information flows that bypass traditional analytics entirely.
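For the visits that do arrive, analytics tools can only separate AI-driven traffic if the referrer reveals where it came from. A minimal sketch of that bucketing, assuming a hand-maintained, non-exhaustive list of AI assistant hostnames; the host lists, channel names, and `classify_referrer` function are hypothetical and do not reflect how any specific analytics product works.

```python
from typing import Iterable, Optional
from urllib.parse import urlparse

# Illustrative, non-exhaustive host lists (assumptions, not an official registry).
AI_REFERRER_HOSTS = {
    "chatgpt.com",
    "chat.openai.com",
    "perplexity.ai",
    "gemini.google.com",
    "copilot.microsoft.com",
    "claude.ai",
}
SEARCH_REFERRER_HOSTS = {"google.com", "bing.com", "duckduckgo.com"}


def _matches(host: str, domains: Iterable[str]) -> bool:
    # Match a listed domain exactly or any of its subdomains (e.g. www.perplexity.ai).
    return any(host == d or host.endswith("." + d) for d in domains)


def classify_referrer(referrer: Optional[str]) -> str:
    """Bucket a visit's referrer URL into a coarse channel (hypothetical scheme)."""
    if not referrer:
        # No referrer at all: AI app visits that strip it are indistinguishable here.
        return "direct/unknown"
    host = (urlparse(referrer).hostname or "").lower()
    # Check AI hosts first so gemini.google.com is not counted as Google search.
    if _matches(host, AI_REFERRER_HOSTS):
        return "ai"
    if _matches(host, SEARCH_REFERRER_HOSTS):
        return "search"
    return "other"


if __name__ == "__main__":
    for ref in ("https://chatgpt.com/", "https://www.google.com/search?q=x", None):
        print(ref, "->", classify_referrer(ref))
```

The ordering of the checks is the main design choice: AI hosts are tested before search hosts because some assistants live on search-engine domains. Anything without a referrer falls into "direct/unknown", which is exactly the blind spot the paragraph above describes.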

Industry data has begun to quantify the acceleration of traffic declines. Teads, a major advertising technology platform working with thousands of premium publishers, reported 10-15% pageview declines during the third quarter of 2025. CEO David Kostman attributed the losses to "AI summaries and changes in discovery" during the company's November 6, 2025 earnings call.

The Teads disclosure provided one of the first quantified assessments from an advertising platform with direct visibility into publisher traffic patterns. Because the company serves advertisements across numerous publisher websites, it can observe aggregate traffic trends that individual publishers cannot.

Traffic diversification has become essential for publishers seeking to reduce dependence on search referrals. Marketing teams increasingly explore alternative channels including paid social media, native advertising, email marketing, and direct audience relationships. The diversification efforts attempt to build revenue streams less vulnerable to algorithmic changes at large platforms.

Some publishers have achieved traffic recoveries after algorithm updates, demonstrating that algorithmic changes remain significant factors alongside AI feature implementation.

The interplay between AI features and traditional search algorithms creates complex causation questions when publishers analyze traffic changes. Isolating AI impact from broader algorithmic shifts requires sophisticated analytical approaches that many publishers lack resources to implement.

Looking forward

The "web rot" phenomenon described in the Axios analysis represents more than temporary disruption. The combination of declining traffic to older sites, inadequate maintenance incentives, and AI-mediated discovery creates structural changes in how the internet functions as an information ecosystem.

Publishers face stark choices about resource allocation. Maintaining extensive archives of older content requires ongoing investment with diminishing returns. Some publishers have begun removing or consolidating older material to focus resources on current content more likely to generate traffic. These decisions further reduce the pool of accessible historical information.

The question of who pays for content creation and maintenance in an AI-mediated web remains unresolved. Current compensation arrangements cover small fractions of the publishing industry. Scaling these systems requires technical infrastructure, industry standards, and business models that don't yet exist at necessary levels.

The deterioration of older websites affects more than commercial publishers. Government information, academic content, civic resources, and cultural materials all face similar preservation challenges. The decay extends across the web's institutional memory, not just commercial media properties.

Link rot compounds as time passes. Each broken link makes content less valuable and harder to verify. Users lose trust in online information when sources frequently prove inaccessible. AI training systems struggle to evaluate source quality when original materials have disappeared.

The publishing industry's push for a two-sided marketplace faces scaling challenges that prevent rapid implementation. While OpenAI and a few other companies have established pilot programs, these efforts remain limited compared to the scope of content being used for AI training. The gap between current compensation levels and publishers' revenue losses continues widening.

The five-year traffic decline documented by Similarweb represents only the beginning of these structural shifts. AI capabilities continue advancing rapidly. User adoption of AI-powered discovery tools accelerates. Publishers continue adapting strategies, but the fundamental tension between AI efficiency and publisher business models persists without clear resolution paths.

Timeline

- 2013-2023: Pew Research Center documents that 25% of web pages from this period became inaccessible

- November 2020-November 2025: Overall traffic to top 1,000 websites remains stable at ~300 billion monthly visits

- August 2024: Over 35% of top 1,000 websites block OpenAI's GPTBot

- April 2025: Ahrefs study finds AI Overviews correlate with 34.5% lower click-through rates for top-ranking pages

- May 2025: Brazilian publishers demand action as AI Overviews cut traffic by 34%

- July 2025: Google disputes Pew study showing AI Overviews reduce clicks by half

- August 2025: Microsoft Clarity introduces AI channel groups for tracking traffic

- October 2025: Google's Liz Reid defends AI search features on Bold Names podcast

- November 2025: Teads reports 10-15% pageview decline in Q3 2025

- November 2024-November 2025: Traffic to sites existing five years prior declined 1.6%

- December 2025: Research shows blocking AI crawlers reduced publisher traffic by 23%

- January 3, 2026: Axios publishes analysis of five-year traffic decline to established websites

Summary

Who: Traditional website publishers, content creators, news organizations, and AI companies including OpenAI compete for user attention and navigate changing internet economics documented by Similarweb and analyzed by Axios reporter Sara Fischer.

What: Traffic to established websites that existed five years ago declined more than 11% during that period while overall traffic to the top 1,000 websites remained stable, creating "web rot" where older sites persist with broken links and outdated content. Research found 25% of web pages from 2013-2023 became inaccessible, with 23% of news pages and 21% of government pages containing broken links.

When: The five-year traffic decline was measured from 2020 through November 2025, with the Axios analysis published January 3, 2026. Pew Research Center documented web page inaccessibility through October 2023, while Teads reported accelerating pageview declines in the third quarter of 2025.

Where: The traffic patterns affect the global web ecosystem, with particular impact on English-language publishers in the United States and other developed markets where AI-powered search features have launched first. The phenomenon extends across news sites, government pages, and general web content.

Why: This matters for the marketing community because it represents a fundamental restructuring of how information discovery works online. Publishers whose top-ranking pages see 34.5% lower click-through rates when AI Overviews appear face existential revenue challenges, while older content accumulates broken links and becomes unreliable for both human readers and AI training systems. The lack of scaled compensation models means publishers continue losing revenue faster than new monetization approaches can replace it. Marketing strategies built around search traffic and content marketing must adapt to AI-mediated discovery that reduces website visits, while the web's infrastructure slowly degrades through inadequate maintenance of older properties.