Facebook announced comprehensive measures on July 14, 2025, targeting accounts that repeatedly share unoriginal content without proper attribution or meaningful enhancements. The initiative builds on existing efforts that resulted in action against 500,000 accounts engaged in spammy behavior during the first half of 2025.

According to the announcement, the social media platform removed approximately 10 million profiles impersonating large content producers. Accounts that repeatedly share unoriginal content face reduced distribution across all their content and loss of access to Facebook monetization programs for specified periods. The enforcement targets creators who "reuse or repurpose another creator's content repeatedly without crediting them, taking advantage of their creativity and hard work."

Platform executives emphasized the distinction between acceptable content sharing and problematic behavior. Facebook encourages creators to reshare content with commentary through reaction videos or participate in trends while adding unique perspectives. The policy targets repeated reposting without permission or meaningful enhancements, a practice that dilutes the feed experience and hinders emerging voices.

Subscribe to the PPC Land newsletter ✉️ for stories like this one. Receive the news every day in your inbox. Free of ads. 10 USD per year.

Summary

Who: Facebook announced enforcement measures affecting content creators who repeatedly share unoriginal material without proper attribution, while protecting authentic creators from content exploitation.

What: Comprehensive measures including reduced content distribution, monetization program restrictions, automatic duplicate video detection, and attribution link testing for creators violating unoriginal content policies.

When: Announced July 14, 2025, with a gradual rollout planned over the coming months, following action against 500,000 accounts during the first half of 2025.

Where: Enforcement applies across Facebook platform properties including Feed, monetization programs, and content recommendation systems globally.

Why: The platform aims to protect authentic creators from exploitation by both human and AI-generated content farms, improve feed quality, and reduce spam content, including AI slop. It also seeks to maintain advertiser confidence and support sustainable creator economy growth as AI-generated content proliferates.

Technical implementation details

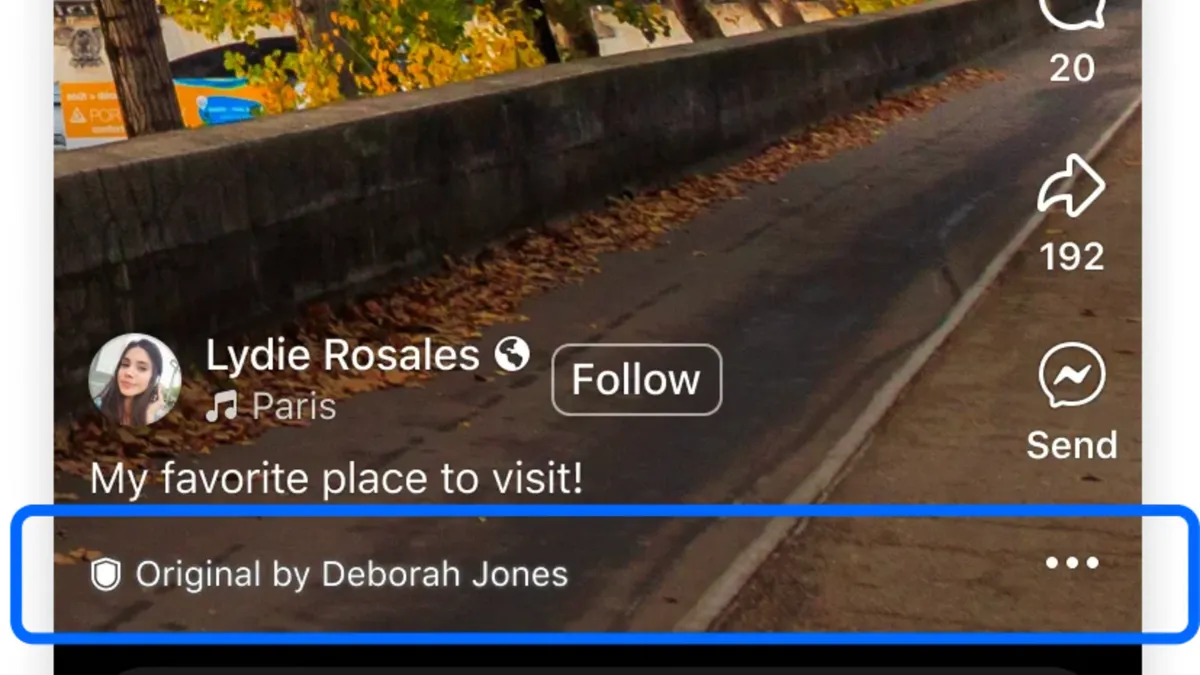

The platform's systems now detect duplicate videos automatically, reducing distribution of copies to prioritize original creators' visibility. Facebook tests attribution links on duplicate videos that direct viewers to original content sources. These technical measures supplement existing manual review processes for policy violations.

Content recommendation algorithms receive updates to better identify mass-produced material. The system evaluates video fingerprints, metadata patterns, and engagement metrics to distinguish between legitimate content sharing and repetitive posting behavior. Machine learning models trained on violation patterns can identify accounts exhibiting suspicious upload frequencies or content similarity scores.
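Facebook has not published how its detection works, so the following is only a minimal sketch of the general idea of comparing video fingerprints and attributing a near-duplicate to the earliest upload. It assumes each video has already been reduced to a 64-bit perceptual hash; the `Upload` type, the `find_likely_copies` function, and the 0.9 threshold are hypothetical names and values chosen for illustration, not part of any Facebook system.

```python
from dataclasses import dataclass


@dataclass
class Upload:
    account: str
    fingerprint: int    # hypothetical 64-bit perceptual hash of the video
    uploaded_at: float  # upload time, seconds since epoch


def hamming_distance(a: int, b: int) -> int:
    """Count the bits that differ between two fingerprints."""
    return bin(a ^ b).count("1")


def similarity(a: int, b: int, bits: int = 64) -> float:
    """Map Hamming distance to a score from 0.0 to 1.0 (1.0 means identical)."""
    return 1.0 - hamming_distance(a, b) / bits


def find_likely_copies(uploads, threshold=0.9):
    """Return (copy, original) pairs: a later upload from a different
    account whose fingerprint closely matches an earlier upload."""
    ordered = sorted(uploads, key=lambda u: u.uploaded_at)
    pairs = []
    for i, later in enumerate(ordered):
        for earlier in ordered[:i]:
            same_owner = earlier.account == later.account
            score = similarity(earlier.fingerprint, later.fingerprint)
            if not same_owner and score >= threshold:
                pairs.append((later, earlier))
                break  # credit only the earliest matching upload
    return pairs


uploads = [
    Upload("original_creator", 0xFFFF0000FFFF0000, 1.0),
    Upload("reposter", 0xFFFF0000FFFF0001, 2.0),
    Upload("unrelated", 0x0123456789ABCDEF, 3.0),
]
for copy, original in find_likely_copies(uploads):
    print(f"{copy.account} looks like a copy of {original.account}")
```

A production system would also weigh metadata, upload frequency, and engagement signals as described above; this sketch covers only the fingerprint comparison.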

Facebook provides post-level insights through Professional Dashboard to help creators understand distribution challenges. Creators can access risk assessments for content recommendation or monetization penalties through the Support home screen in their Page or professional profile main menu. These transparency tools address frequent creator concerns about content visibility and earning potential.

Context for marketing professionals

The announcement follows broader industry trends toward content authenticity and creator protection. YouTube recently clarified its "inauthentic content" policies following creator confusion about monetization requirements, while enhanced detection systems for unoriginal material took effect across major platforms.

Marketing professionals observe increasing platform investment in content quality controls as advertiser demand for brand-safe environments intensifies. Platform monetization programs fuel mass production of low-quality AI content, creating challenges for authentic creators seeking audience attention and revenue opportunities.

Facebook's approach contrasts with competitor strategies. Facebook's unified Content Monetization Program consolidates multiple earning streams into simplified systems, while new enforcement measures protect original content producers from exploitation. The dual approach addresses creator earning opportunities while maintaining content quality standards.

Enforcement mechanisms and creator guidelines

Facebook established specific best practices for content optimization. Pages and profiles receive maximum distribution when consisting primarily of original content filmed or created by account owners. Creators can share their own identical content across multiple Pages or profiles without penalties.

Meaningful enhancements require creative editing, voiceover, or commentary beyond simple clip compilation or watermark addition. Content must demonstrate clear value additions through storytelling techniques, educational elements, or entertainment perspectives. Authentic storytelling resonates with viewers and performs favorably in algorithm assessments.

The platform discourages visible third-party watermarks and content obviously recycled from other applications. High-quality captions that use minimal capital letters and five or fewer relevant hashtags achieve optimal performance. These technical specifications reflect algorithm preferences for clean, professional content presentation.
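The caption guidance can be expressed as a simple pre-publication check. The sketch below is an illustration only: the five-hashtag limit comes from the guidance above, while the 30% uppercase cutoff is an assumed stand-in for "minimal capital letters", and `check_caption` is a hypothetical helper, not a Facebook tool.

```python
import re

MAX_HASHTAGS = 5           # from the guidance: five or fewer hashtags
MAX_UPPERCASE_RATIO = 0.3  # assumed cutoff; the guidance only says "minimal"


def check_caption(caption: str) -> list[str]:
    """Return a list of guideline issues found in a caption (empty if none)."""
    issues = []

    hashtags = re.findall(r"#\w+", caption)
    if len(hashtags) > MAX_HASHTAGS:
        issues.append(f"{len(hashtags)} hashtags used; limit is {MAX_HASHTAGS}")

    letters = [c for c in caption if c.isalpha()]
    if letters:
        upper_ratio = sum(c.isupper() for c in letters) / len(letters)
        if upper_ratio > MAX_UPPERCASE_RATIO:
            issues.append("too many capital letters")

    return issues


print(check_caption("Trying a new sourdough recipe today #baking #bread"))
print(check_caption("WATCH THIS NOW #a #b #c #d #e #f"))
```

The first caption passes both checks, while the second is flagged for its hashtag count and its heavy use of capitals.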

Platform monetization access depends on policy compliance. Creators violating unoriginal content guidelines lose earning opportunities through Performance Bonus, In-stream ads, and Ads on Reels programs. Distribution reduction affects all content types including photos, videos, and text posts across Facebook properties.

Implementation timeline and creator resources

Facebook plans a gradual rollout over the coming months to ensure seamless creator transitions. The phased approach allows creators to adjust content strategies while maintaining existing audience relationships. Platform support resources include educational materials about policy changes and optimization techniques.

Professional Dashboard enhancements provide detailed analytics about content performance and policy compliance status. Creators can monitor distribution metrics, audience engagement patterns, and monetization eligibility through centralized interfaces. These insights help content producers understand algorithm preferences and optimization opportunities.

The platform's creator education initiatives include webinars, documentation updates, and community support forums. Facebook emphasizes ongoing commitment to supporting authentic creators while combating exploitation of creative work. Regular policy reviews address emerging challenges from technology advances and creator behavior patterns.

AI slop phenomenon and platform monetization challenges

Facebook's enforcement measures arrive amid broader industry concerns about what experts term "AI slop": low-quality artificial intelligence-generated content designed primarily to capture engagement. Platform monetization programs fuel mass production of such content across social media platforms, including Facebook's Creator Bonus Program, YouTube Partner Program, and other revenue-sharing systems.

The AI slop phenomenon gained widespread attention following a June 23, 2025 HBO Last Week Tonight segment highlighting how platform payment structures incentivize rapid content production using AI tools. Content creators discovered that generative AI enables hundreds of video uploads with minimal time investment, potentially scaling earnings significantly through engagement-based monetization models.

Facebook's unoriginal content policies directly address AI slop concerns by targeting mass-produced material without meaningful human enhancement. The platform's enforcement mechanisms evaluate content similarity patterns, upload frequencies, and engagement metrics to identify exploitation of monetization systems. These measures protect authentic creators from being overwhelmed by algorithmically generated competition.

YouTube's parallel content quality initiatives

YouTube has implemented similar measures targeting inauthentic content while maintaining creator monetization opportunities. YouTube improved detection systems for unoriginal content starting July 15, 2025, with enhanced identification of mass-produced and repetitious material that violates existing monetization policies.

The video platform clarified its approach to AI content following creator confusion about policy restrictions. YouTube emphasized that "authentic content creation using AI tools remains acceptable while mass-produced spam content continues to be prohibited." This distinction parallels Facebook's focus on meaningful enhancements versus repetitive posting.

YouTube introduced mandatory AI disclosure requirements effective May 21, 2025, for creators using artificial intelligence to generate realistic content. However, these disclosures don't restrict monetization eligibility, demonstrating platforms' nuanced approach to AI integration rather than blanket prohibitions.

Industry implications

The enforcement expansion demonstrates platform recognition of creator economy sustainability challenges amid the AI slop epidemic. Authentic content producers require protection from exploitation to maintain ecosystem health and advertiser confidence. Quality content standards support long-term platform viability in competitive social media markets while addressing AI-generated content concerns.

Content duplication issues extend beyond Facebook to industry-wide challenges involving both human and artificial intelligence content creation. Zero-click searches threaten content creator revenues as search platforms provide direct answers rather than directing users to original sources. These trends reshape content monetization strategies across digital platforms facing AI-generated content proliferation.

Marketing budgets increasingly prioritize platforms demonstrating commitment to content quality and creator protection against AI slop infiltration. Facebook's measures align with advertiser preferences for brand-safe environments while supporting sustainable creator ecosystems. The approach balances content abundance with quality control through technological solutions targeting both human and AI-generated policy violations.

Revenue sharing models face scrutiny as platforms implement stricter content standards addressing AI slop concerns. Facebook's $2 billion annual creator payments demonstrate significant financial stakes in policy enforcement effectiveness against artificial and human content exploitation. Successful implementation could influence competitor approaches to content authenticity and creator protection measures.

Platform competition intensifies around creator retention and authentic content promotion while managing AI slop challenges. Facebook's comprehensive approach combining enforcement, education, and monetization opportunities positions the platform favorably against competitors relying solely on algorithmic solutions or manual review processes for content quality control.

Key Terms Explained

Content Monetization Programs: These are platform-operated systems that enable creators to earn revenue from their content through various payment structures. Facebook's Content Monetization Program consolidates multiple earning streams including In-stream ads, Ads on Reels, and Performance Bonus into unified systems. These programs typically pay creators based on engagement metrics, view counts, or advertiser revenue sharing, creating direct financial incentives for content production that can sometimes encourage quantity over quality.

Algorithmic Content Distribution: This refers to automated systems that determine which content appears in user feeds based on machine learning models analyzing engagement patterns, user behavior, and content quality signals. Facebook's algorithm evaluates factors like video fingerprints, metadata patterns, and audience retention to decide content visibility. Understanding algorithmic preferences becomes crucial for creators seeking organic reach, as these systems can amplify authentic content while suppressing repetitive or low-quality material.

AI Slop: A term describing low-quality, often bizarre artificial intelligence-generated content designed primarily to capture engagement and exploit monetization systems. AI slop typically involves mass-produced videos, images, or text created with minimal human input, prioritizing algorithmic performance over viewer value. This phenomenon emerged as creators discovered they could use AI tools to generate hundreds of pieces of content with minimal time investment, potentially scaling earnings through engagement-based payment models.

Performance Bonus Systems: These are creator incentive programs that provide additional payments beyond standard advertising revenue sharing, typically based on content performance metrics like views, engagement rates, or audience growth. Facebook's Performance Bonus rewards creators for achieving specific milestones, encouraging consistent content production. However, these systems can inadvertently incentivize creators to prioritize viral content creation over quality, contributing to the proliferation of repetitive or exploitative content strategies.

Brand Safety Frameworks: Comprehensive policies and technological systems designed to ensure advertiser content appears alongside appropriate, high-quality material that aligns with brand values. These frameworks evaluate content for potential risks including misleading information, inappropriate imagery, or association with controversial topics. For platforms like Facebook, maintaining brand safety becomes critical for advertiser confidence and revenue sustainability, directly influencing content moderation policies and creator monetization eligibility.

Content Attribution Systems: These are technological solutions that identify original content sources and provide proper credit to creators when their material gets shared or repurposed. Facebook tests attribution links on duplicate videos that direct viewers to original content sources, helping protect creator intellectual property. Effective attribution systems balance content sharing benefits with creator protection, ensuring original producers receive recognition and potential traffic from viral distribution.

Creator Economy Sustainability: This concept encompasses the long-term viability of content creation as a profession, requiring platforms to balance creator earning opportunities with content quality standards. Sustainable creator economies protect authentic producers from exploitation while maintaining advertiser confidence and user experience quality. Facebook's approach addresses sustainability by removing exploitative accounts while preserving monetization opportunities for genuine creators, supporting ecosystem health over short-term engagement metrics.

Engagement Farming: A practice where creators produce content specifically designed to generate high interaction rates through controversial topics, emotional manipulation, or algorithm exploitation rather than providing genuine value. Engagement farming often involves repetitive content formats, clickbait techniques, or artificial controversy creation to boost metrics that determine monetization eligibility. Platforms combat this through sophisticated detection systems that evaluate content authenticity and meaningful audience engagement patterns.

Zero-Click Search Impact: This phenomenon occurs when search engines and AI systems provide direct answers to user queries without directing traffic to original content sources, potentially reducing website visits and advertising revenue for content creators. As search platforms increasingly display information directly rather than linking to sources, creators face reduced monetization opportunities from traditional web traffic. This trend forces content strategies to focus on direct audience relationships and platform-native monetization rather than external traffic generation.

Cross-Platform Content Syndication: The practice of sharing identical or similar content across multiple social media platforms to maximize reach and monetization opportunities. While platforms generally allow creators to share their original content across different channels, syndication becomes problematic when it involves unauthorized reproduction of others' work or mass distribution of low-quality material. Effective syndication strategies require understanding each platform's unique audience preferences and content format requirements while maintaining authenticity and value.

Timeline

- July 14, 2025: Facebook announces stronger measures against unoriginal content creators

- First half 2025: Platform takes action against 500,000 accounts for spammy behavior

- First half 2025: Facebook removes 10 million profiles impersonating content producers

- October 2024: Facebook launches unified Content Monetization Program consolidating earning streams

- July 2025: YouTube improves detection systems for unoriginal content with enhanced identification

- June 2025: Platform payments fuel AI slop flood across social media platforms, highlighting monetization-driven content quality concerns

- July 2025: YouTube clarifies monetization policies addressing AI content and mass production concerns

- May 2025: YouTube introduces mandatory AI disclosure requirements for synthetic content transparency