Google this week introduced a simplified removal process for non-consensual explicit images from Search, according to Phoebe Wong, Product Manager at Google. The February 10, 2026 announcement represents the company's latest effort to reduce barriers faced by victims of intimate image abuse, building on protections the search giant has developed over several years.

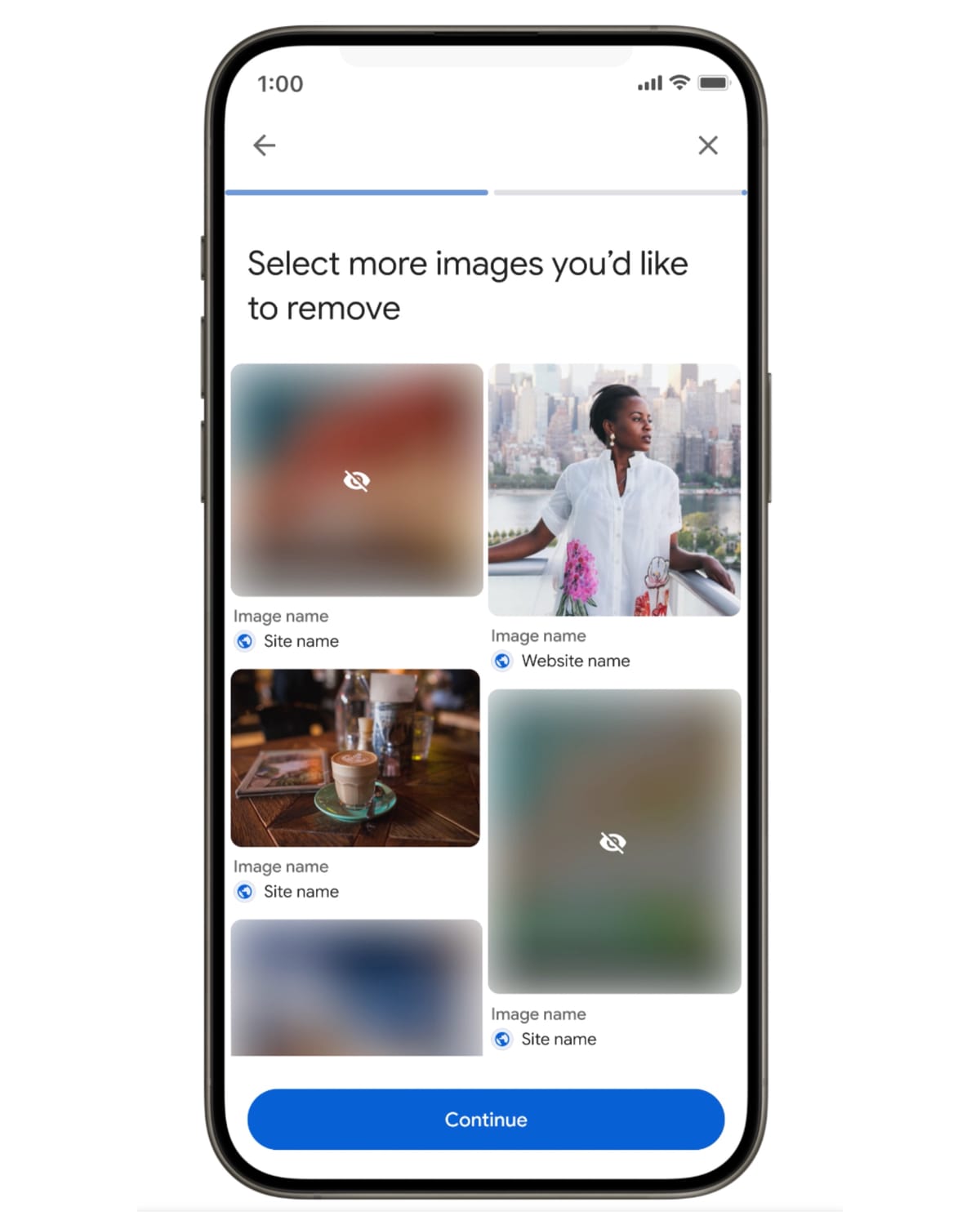

The new tool integrates directly into Search's image interface. Users can access the removal process by clicking the three-dot menu on an image, selecting "remove result," then choosing "It shows a sexual image of me." This streamlined approach removes the need to navigate the separate forms and help center documentation that the removal process previously required.

A significant change allows victims to submit multiple images at once rather than filing an individual request for each piece of content. The batch submission capability addresses a core challenge in content removal: the administrative burden of reporting numerous instances of the same violation across different search results and queries.

Proactive filtering extends protection beyond individual takedowns

The February 10 update introduces ongoing safeguards that function independently of individual removal requests. According to Wong, victims can opt into filters that "proactively filter out any additional explicit results that might appear in similar searches." This automated protection represents a shift from reactive content removal to preventive filtering.

The technical implementation of these filters builds on systems Google deployed for non-consensual deepfakes in July 2024. Under that earlier update, a successful removal request also triggered filtering of explicit results on similar searches about the affected person, and Google reported that related ranking changes had reduced exposure to explicit image results on those types of queries by over 70 percent. The September 2025 partnership with StopNCII introduced hash-based identification systems that enable detection without storing actual imagery.

Google's approach to non-consensual intimate imagery removal has evolved through multiple phases since the company first established removal request mechanisms. The search engine's help center documentation distinguishes between three categories eligible for removal: real intimate imagery regardless of whether it was originally consensual, fabricated sexual content created through photo editing or artificial intelligence, and search results that associate individuals with sexual material without justification.

Support resources and request tracking centralized in new hub

The removal workflow now includes immediate access to support organizations after submission. Wong stated that users "find links to expert organizations that provide emotional and legal support" directly following their removal request. This integration acknowledges that content removal represents only one component of addressing intimate image abuse.

Request tracking is consolidated in Google's "Results about you" hub, where victims can monitor the status of all their submissions in a single interface. Email notifications alert users when a request's status changes, so they do not need to keep checking the hub for updates. The centralized tracking is intended to give victims visibility into where each request stands in Google's review process.
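
The announcement does not describe how the hub is built. As an illustration only, the Python sketch below models the behavior described: one request can carry several image URLs, every request appears in a single overview, and a notification fires only when a request's status actually changes. The `Status` values, the class names, and the injected `notify` callback (standing in for an email sender) are all hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Dict, List


class Status(Enum):
    """Illustrative request states; Google's actual states are not public."""
    SUBMITTED = "submitted"
    IN_REVIEW = "in review"
    APPROVED = "approved"
    DENIED = "denied"


@dataclass
class RemovalRequest:
    request_id: str
    urls: List[str]  # a batch: several images in one request
    status: Status = Status.SUBMITTED


@dataclass
class RequestHub:
    notify: Callable[[str, Status], None]  # hypothetical stand-in for an email sender
    requests: Dict[str, RemovalRequest] = field(default_factory=dict)

    def submit(self, request: RemovalRequest) -> None:
        self.requests[request.request_id] = request

    def update_status(self, request_id: str, new_status: Status) -> None:
        req = self.requests[request_id]
        if req.status != new_status:  # notify only on an actual change
            req.status = new_status
            self.notify(request_id, new_status)

    def overview(self) -> Dict[str, Status]:
        """Single view of every request and its current status."""
        return {rid: r.status for rid, r in self.requests.items()}


sent = []
hub = RequestHub(notify=lambda rid, status: sent.append((rid, status)))
hub.submit(RemovalRequest("req-1", ["https://example.com/a.jpg", "https://example.com/b.jpg"]))
hub.update_status("req-1", Status.IN_REVIEW)
hub.update_status("req-1", Status.IN_REVIEW)  # unchanged, so no second notification
hub.update_status("req-1", Status.APPROVED)
print(len(sent))  # 2
print(hub.overview())
```

Injecting the notifier keeps the sketch testable; a production system would also need persistence, authentication, and retry logic that are omitted here.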

The February 10 announcement did not specify average review duration or approval rates for removal requests. Google retains discretion in determining whether submitted content violates its non-consensual explicit imagery policy. The company can apply either full removal, where the result disappears from search results entirely, or partial removal, where the content is hidden only from queries containing the individual's name.
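
Google has not published how partial removals are enforced. The minimal Python sketch below only illustrates the difference between the two scopes, assuming hypothetical `Removal` records with a `scope` of "full" or "partial" and a naive, case-insensitive substring check for the person's name; real query matching would be far more involved.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Removal:
    """Hypothetical record of an approved removal request."""
    url: str
    scope: str      # "full" or "partial" (illustrative labels, not Google's)
    name: str = ""  # requester's name; only consulted for partial removals


def visible_results(query: str, results: List[str], removals: List[Removal]) -> List[str]:
    """Return the results that remain visible for this query."""
    q = query.casefold()
    hidden = set()
    for r in removals:
        if r.scope == "full":
            hidden.add(r.url)  # hidden for every query
        elif r.scope == "partial" and r.name and r.name.casefold() in q:
            hidden.add(r.url)  # hidden only when the query names the person
    return [u for u in results if u not in hidden]


removals = [
    Removal("https://example.com/a", "full"),
    Removal("https://example.org/b", "partial", name="Jane Doe"),
]
results = ["https://example.com/a", "https://example.org/b", "https://example.net/c"]

print(visible_results("jane doe photos", results, removals))  # ['https://example.net/c']
print(visible_results("beach photos", results, removals))     # ['https://example.org/b', 'https://example.net/c']
```

The partially removed URL stays visible for the unrelated query, which is the practical difference between the two removal scopes.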

Technical implementation raises questions about scope and enforcement

The new removal process operates across Google Search's image results but may not extend to other Google properties or third-party platforms that index content. According to the announcement, the tool is "rolling out over the coming days in most countries" with plans to "expand it to more regions soon." The phased rollout suggests geographic variations in availability, though Google did not identify which countries receive initial access.

Platform liability for non-consensual intimate imagery has become a significant regulatory concern across multiple jurisdictions. A January 2025 German court ruling under the Digital Services Act established that Google can be held responsible for content appearing in search results once the company gains knowledge of violations. That decision applied specifically to trademark infringement but demonstrated broader platform accountability frameworks emerging in Europe.

The distinction between removal from search results and deletion from source websites remains critical. Google's tool removes links from its search index but does not delete content from the websites hosting the images. Victims seeking complete removal must contact individual website operators or pursue legal action against content hosts. This limitation appears throughout Google's content removal documentation for various policy violations.

Google's Child Sexual Abuse and Exploitation advertising policy, finalized October 22, 2025, reflects the company's most aggressive enforcement approach, with immediate account suspensions for violations. Non-consensual explicit imagery of adults receives different treatment, handled through removal processes rather than automatic enforcement actions against website operators or advertisers.

Privacy safeguards required for automated detection systems

The announcement did not explain how the proactive filtering system announced February 10 identifies matching images. Hash-based detection, as implemented through the StopNCII partnership, creates digital fingerprints that enable matching without Google having to retain copies of the actual images.
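
Neither Google nor StopNCII is described here as using any particular algorithm. To illustrate the general idea of matching fingerprints instead of images, the Python sketch below computes a simple "average hash", a deliberately crude perceptual hash chosen for readability; it is not the algorithm StopNCII or Google uses. Images are assumed to be small grayscale pixel grids (lists of 0-255 integers) whose dimensions are multiples of 8, and the 5-bit match threshold is arbitrary.

```python
from typing import List, Set

Image = List[List[int]]  # grayscale pixels (0-255), rows of equal length


def block_sums(img: Image, size: int = 8) -> List[int]:
    """Split the image into size x size equal blocks and return each block's pixel sum.

    Assumes height and width are multiples of `size`; comparing sums of
    equal-sized blocks is equivalent to comparing their averages.
    """
    bh, bw = len(img) // size, len(img[0]) // size
    sums = []
    for by in range(size):
        for bx in range(size):
            sums.append(sum(img[y][x]
                            for y in range(by * bh, (by + 1) * bh)
                            for x in range(bx * bw, (bx + 1) * bw)))
    return sums


def average_hash(img: Image, size: int = 8) -> int:
    """Fingerprint with one bit per block: 1 if the block is brighter than the image mean."""
    sums = block_sums(img, size)
    total, n = sum(sums), len(sums)
    bits = 0
    for s in sums:
        # block mean > image mean  <=>  s * n > total (exact integer comparison)
        bits = (bits << 1) | (1 if s * n > total else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two fingerprints."""
    return bin(a ^ b).count("1")


def matches(candidate: int, stored: Set[int], threshold: int = 5) -> bool:
    """True if the candidate fingerprint is within `threshold` bits of any stored one."""
    return any(hamming(candidate, h) <= threshold for h in stored)


img = [[x * 10 + y for x in range(16)] for y in range(16)]
brighter = [[p + 20 for p in row] for row in img]
inverted = [[255 - p for p in row] for row in img]

stored = {average_hash(img)}  # only the 64-bit fingerprint is kept, not the pixels
print(matches(average_hash(brighter), stored))  # True
print(matches(average_hash(inverted), stored))  # False
```

Only the integer fingerprint needs to be stored: a uniformly brightened copy still matches, while an unrelated (here, inverted) image does not.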

Privacy concerns around automated content analysis have intensified as detection capabilities expand. Google faces scrutiny over real-time bidding data practices that privacy organizations argue expose sensitive information to foreign adversaries. A January 16, 2025 complaint filed with the Federal Trade Commission alleged that Google's advertising system processes bid requests containing detailed personal information across 35.4 million websites.

The intersection of content removal systems and privacy protections creates technical challenges. Automated filters must distinguish between consensual and non-consensual intimate imagery, assess whether content features the requesting individual, and determine whether results violate policy standards, all while minimizing human review of sensitive material.

Google's approach to synthetic sexually explicit content established in May 2024 prohibits advertising that depicts nudity or sexual acts created using artificial intelligence or digital manipulation tools. The policy addresses deepfake pornography proliferation but operates through advertising restrictions rather than search result removal.

Implementation timeline and victim advocacy feedback

The February 10 announcement stated that Google "will continue to listen to feedback and work with experts to provide tools that protect people online." This commitment suggests ongoing development beyond the initial rollout. The company did not identify which advocacy organizations contributed to the tool's design or which experts provided input on victim needs.

Previous Google updates addressing non-consensual content followed extended consultation periods. The July 2024 deepfake protections resulted from "extensive collaboration with experts and feedback from victims," according to that announcement. The September 2025 StopNCII partnership emerged from advocacy by survivor groups and civil liberties organizations that emphasized the burden individual removal requests placed on victims.

The removal tool's effectiveness depends partly on victim awareness and accessibility. Google provides the interface in Search's image results but did not announce educational campaigns or outreach to inform potential users about the new capability. Victims must know to look for the three-dot menu on image results and understand which option addresses their specific situation.

Support organization accessibility varies significantly across regions. The announcement's reference to "expert organizations that provide emotional and legal support" does not specify whether Google verifies these resources or maintains updated contact information as organizations change availability. Legal support options differ dramatically between jurisdictions with varying laws addressing intimate image abuse.

Broader context of search content removal policies

Google maintains numerous content removal pathways addressing different policy violations and legal requirements. The company's Removals report in Search Console enables website owners to request temporary blocks on their own content. Personal information removal requests operate through separate systems that address doxxing, identity theft risks, and other privacy concerns.

Court-ordered content removal creates different obligations. An Indian court ruling in November 2025 required Google to de-index approximately 60 URLs related to criminal proceedings following the subject's acquittal. That case demonstrated judicial willingness to order search engine content filtering based on privacy claims, though implementation challenges emerged when website operators received removal notices without access to underlying court orders.

The technical architecture supporting multiple removal pathways must accommodate different legal standards, policy requirements, and user needs. Google's systems distinguish between copyright takedowns, defamation claims, privacy violations, and safety concerns - each with distinct review processes and enforcement mechanisms.

Platform content moderation at scale presents fundamental challenges. Google's 2024 Ads Safety Report revealed that the company suspended over 39.2 million advertiser accounts, removed over 5.1 billion advertisements, and restricted over 9.1 billion advertisements. These enforcement numbers demonstrate the volume of policy violations across Google's platforms.

Marketing and advertising implications of content policies

For marketing professionals, Google's content policies shape which products and services can be advertised and under what conditions. The company's sexual content advertising policy underwent significant changes in September 2025 when Google removed restrictions on mature cosmetic procedures, allowing healthcare providers to advertise surgical and non-surgical treatments previously classified alongside sexually suggestive content.

These policy adjustments reflect evolving societal attitudes and regulatory requirements. Google must balance user safety protections, advertiser access to audiences, publisher monetization needs, and compliance with varying international regulations. The February 10 non-consensual imagery tool operates independently of advertising policies but demonstrates similar tensions between openness and protection.

The distinction between organic search results and paid advertising creates different policy frameworks. While the new removal tool addresses search result visibility, advertising policies govern which products can be promoted through paid placements. Google's policies prohibit non-consensual intimate imagery in both contexts, but the enforcement mechanisms differ significantly.

Platform liability continues evolving as regulators examine technology companies' responsibilities for user-generated and third-party content. In Europe, the Digital Services Act imposes specific obligations on Very Large Online Platforms and Very Large Online Search Engines, a category that includes Google Search, requiring enhanced content moderation standards, transparency reporting, and external auditing.

The February 10 announcement did not address whether Google shares removal request data with law enforcement or other platforms. Cross-platform coordination would enhance protection by preventing removed content from appearing on other search engines or social media sites, but raises privacy concerns about sharing victim identities and case details.

Technical challenges in distinguishing consensual and non-consensual content

Automated systems would need to determine content consent status, a complex assessment that may require understanding context, relationship dynamics, and whether all depicted individuals approved publication. Google's announcement described filtering "additional explicit results that might appear in similar searches" but did not explain how its systems distinguish between different categories of intimate imagery.

The technical detection challenge intensifies with synthetic content. AI-generated deepfakes may depict individuals in fabricated sexual situations using their likeness without permission. Google's July 2024 update addressed this category specifically, noting the difficulty in "distinguishing between legitimate explicit content (such as an actor's consensual nude scenes) and non-consensual fake content."

Hash-based detection through the StopNCII partnership enables matching of known images across platforms. However, this approach requires victims to generate hashes from their own intimate imagery, a process that may feel invasive even though StopNCII states that the images themselves never leave the victim's device. Alternative detection methods using visual similarity algorithms risk over-blocking legitimate content or missing variations of the same image.
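
The trade-off between exact and similarity-based matching can be shown with a toy comparison. The Python sketch below uses raw pixel grids and a crude average hash rather than any production algorithm. It shows a cryptographic SHA-256 hash missing a copy brightened by a single level, while the perceptual hash both catches that copy and assigns an identical fingerprint to a different image that merely shares its coarse light-and-dark layout. The images are synthetic and chosen only to make each effect visible.

```python
import hashlib
from typing import List

Image = List[List[int]]  # grayscale pixels (0-255)


def exact_hash(img: Image) -> str:
    """Cryptographic hash of the raw pixel bytes: any single-pixel change alters it."""
    return hashlib.sha256(bytes(p for row in img for p in row)).hexdigest()


def average_hash(img: Image, size: int = 8) -> int:
    """Coarse perceptual hash: one bit per block, set if the block is brighter than the mean."""
    bh, bw = len(img) // size, len(img[0]) // size
    sums = [sum(img[y][x] for y in range(by * bh, (by + 1) * bh)
                for x in range(bx * bw, (bx + 1) * bw))
            for by in range(size) for bx in range(size)]
    total, n = sum(sums), len(sums)
    bits = 0
    for s in sums:
        bits = (bits << 1) | (1 if s * n > total else 0)
    return bits


original = [[x * 10 + y for x in range(16)] for y in range(16)]
# Same picture with every pixel one level brighter: a trivially altered copy.
altered = [[p + 1 for p in row] for row in original]
# A different picture (dark left half, bright right half, checkerboard texture)
# whose coarse brightness layout happens to match the original's.
lookalike = [[(200 if x >= 8 else 0) + 30 * ((x + y) % 2) for x in range(16)]
             for y in range(16)]

print(exact_hash(altered) == exact_hash(original))        # False: exact match misses the copy
print(average_hash(altered) == average_hash(original))    # True: perceptual hash catches it
print(average_hash(lookalike) == average_hash(original))  # True: but also flags unrelated content
```

Real perceptual hashes use many more features than this one, but the same tension between missed variations and false matches applies to any threshold a system chooses.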

The February 10 tool's proactive filtering represents an advancement beyond individual URL removal. Traditional content removal requires victims to identify each specific search result, submit the URL, and wait for Google's review. Automated filtering should theoretically detect new instances of the same content without additional victim reports.
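
Google has not said how its proactive filters work. The Python sketch below contrasts the two approaches under stated assumptions: each indexed image result carries a precomputed 64-bit perceptual fingerprint (the hypothetical `fingerprint` field), and any result within an arbitrary 5-bit Hamming distance of a removed image's fingerprint is hidden. URL-based removal misses a re-upload at a new address, while fingerprint filtering catches it without a new report.

```python
from dataclasses import dataclass
from typing import Iterable, List, Set


@dataclass(frozen=True)
class ImageResult:
    url: str
    fingerprint: int  # assumed 64-bit perceptual hash computed at indexing time


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two fingerprints."""
    return bin(a ^ b).count("1")


def filter_by_url(results: Iterable[ImageResult], removed_urls: Set[str]) -> List[ImageResult]:
    """Reactive removal: hides only URLs the victim has already reported."""
    return [r for r in results if r.url not in removed_urls]


def filter_by_fingerprint(results: Iterable[ImageResult], blocked: Set[int],
                          threshold: int = 5) -> List[ImageResult]:
    """Proactive filtering: hides any result close to a removed image's fingerprint."""
    return [r for r in results
            if all(hamming(r.fingerprint, b) > threshold for b in blocked)]


reported = ImageResult("https://example.com/a.jpg", 0xF0F0F0F0F0F0F0F0)
reupload = ImageResult("https://example.org/copy.jpg", 0xF0F0F0F0F0F0F0F3)  # 2 bits differ
unrelated = ImageResult("https://example.net/other.jpg", 0x0123456789ABCDEF)
new_results = [reupload, unrelated]

print([r.url for r in filter_by_url(new_results, {reported.url})])
# ['https://example.org/copy.jpg', 'https://example.net/other.jpg']
print([r.url for r in filter_by_fingerprint(new_results, {reported.fingerprint})])
# ['https://example.net/other.jpg']
```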

Implementation questions remain regarding filter accuracy and scope. Will the system detect cropped versions, color-adjusted copies, or images with added text overlays? How does Google prevent false positives that might remove legitimate news coverage or artistic content? The announcement provided no technical specifications about detection thresholds or review processes for automated removals.

Rollout timeline and regional availability

The phased implementation "over the coming days" suggests infrastructure deployment rather than immediate universal access. Google typically stages feature rollouts to monitor technical performance, gather user feedback, and address unforeseen issues before broader deployment. The announcement noted plans to "expand it to more regions soon" without specifying which countries receive initial access.

Regional variations in laws addressing non-consensual intimate imagery may influence rollout priorities. Some jurisdictions criminalize distribution of such content, while others lack specific legislation. Google's content policies generally apply globally but enforcement may vary based on local legal requirements and cultural contexts.

Availability in "most countries" implies that some territories are excluded at launch, though Google did not name them. Google's platform policies prohibit publisher products in Crimea, Cuba, the so-called Donetsk People's Republic and Luhansk People's Republic, Iran, North Korea, and Syria due to sanctions and export controls. Whether the removal tool faces similar geographic restrictions remains unclear.

Language support presents another potential limitation. If the interface operates only in English initially, non-English speakers may face accessibility barriers. The announcement did not specify whether the three-dot menu option "It shows a sexual image of me" appears in multiple languages or requires English language settings.

Victim burden reduction as policy objective

Google's characterization of the update as reducing "the burden that victims of non-consensual explicit imagery face" acknowledges that previous removal processes imposed significant costs on affected individuals. Requiring victims to repeatedly report content, track multiple submissions, and navigate complex help documentation created administrative obstacles that compounded psychological harm.

The batch submission capability directly addresses this burden by allowing multiple images in a single form. However, victims must still identify relevant search queries, locate offending images in results, and document each instance for submission. The proactive filtering aims to eliminate this ongoing monitoring requirement by automatically detecting similar content.

Support resource integration reflects understanding that victims need assistance beyond content removal. Legal options, counseling services, and advocacy organizations provide different forms of support that victims may not know how to access. Connecting individuals with these resources immediately after submission creates an intervention point when victims are actively seeking help.

The centralized tracking hub reduces uncertainty about request status. Previous systems required victims to remember which URLs they had submitted and when, check email for updates, and potentially re-submit requests if they received no response. Consolidated tracking with notifications provides transparency and reduces the need for victims to repeatedly engage with Google's systems.

Industry context and competitive approaches

Other platforms have implemented similar protections with varying approaches. StopNCII, which Google partnered with in September 2025, works with multiple technology companies, creating cross-platform detection capabilities. Victims who create hashes through StopNCII can potentially prevent content from appearing on participating platforms without filing separate removal requests with each company.

Social media platforms generally maintain more restrictive policies on intimate imagery than search engines, prohibiting both consensual and non-consensual content in most circumstances. Search engines face different challenges because they index third-party websites rather than hosting user-uploaded content directly. This creates tension between providing comprehensive search results and protecting individuals from harmful content visibility.

The advertising technology industry's approach to sensitive content shapes which websites can monetize through Google's ad networks. Google's Enabling dishonest behavior policy prohibits advertising products designed to enable surveillance without consent, though this addresses different conduct than non-consensual imagery distribution. Platform policies must address overlapping concerns about privacy violations, consent, and harmful content across multiple product areas.

Competition authorities increasingly scrutinize how major platforms implement content policies. The European Commission investigation announced November 13, 2025 examines allegations that Google demotes news publishers hosting sponsored content. While that case addresses spam rather than harmful content, it demonstrates regulatory interest in how Google's policies affect which content appears in search results and how enforcement decisions are made.

Measurement and accountability questions

Google's announcement did not include metrics on removal request volume, approval rates, or average resolution time. These transparency measures would help evaluate the tool's effectiveness and identify potential gaps in protection. Without baseline data, assessing whether the new process achieves its stated objective of reducing victim burden becomes challenging.

Previous Google announcements about content removal included specific metrics. The July 2024 deepfake update cited a reduction of over 70 percent in exposure to explicit image results for affected searches, and Google's 2024 Ads Safety Report disclosed suspensions of over 39.2 million advertiser accounts. The February 10 announcement's absence of quantitative data leaves open questions about scale and impact.

External oversight of removal decisions could enhance accountability. The Technical Committee established under the remedies in the U.S. search antitrust case demonstrates one model for independent monitoring of Google's practices. However, applying similar oversight to content removal raises privacy concerns about sharing case details with external parties.

Victim advocacy organizations may provide independent assessment of the tool's effectiveness based on feedback from individuals they support. These organizations' perspectives could identify gaps between Google's stated objectives and experienced outcomes, highlighting areas where the removal process falls short of victim needs.

The February 10 update represents an incremental advancement in Google's approach to non-consensual explicit imagery rather than a fundamental restructuring. The company maintains existing policies and review processes while simplifying access, enabling batch submissions, and adding proactive filtering. Whether these changes substantially reduce harm depends on implementation details not yet observable.

Timeline

- May 2024: Google updates advertising policies to address synthetic sexually explicit content created using AI

- July 31, 2024: Google announces systems to demote sites with high volumes of explicit deepfake removals, reducing exposure by over 70%

- January 16, 2025: Privacy organizations file FTC complaint over Google's real-time bidding data practices

- August 19, 2025: Google announces upcoming expansion of child protection policy beyond imagery to broader exploitation

- September 4, 2025: Google removes mature cosmetic procedure restrictions from sexual content advertising policy

- September 17, 2025: Google partners with StopNCII to use hash-based detection for non-consensual intimate imagery

- October 22, 2025: Google finalizes Child Sexual Abuse and Exploitation policy with immediate suspension enforcement

- November 10, 2025: Indian court orders Google to remove URLs from search results in defamation case

- February 10, 2026: Google introduces simplified removal process for non-consensual explicit images with batch submission and automated filtering

Summary

Who: Google introduced the new removal tool for victims of non-consensual explicit imagery; the update was announced by Product Manager Phoebe Wong. The tool affects individuals whose intimate images appear in Google Search results without their consent, along with advocacy organizations that provide support resources.

What: Google launched a simplified removal process allowing users to request removal of multiple non-consensual explicit images simultaneously through a three-dot menu in Search's image results. The system includes optional proactive filtering that automatically filters similar explicit results out of future searches, centralized request tracking in the "Results about you" hub, and immediate access to support organizations. The tool builds on previous systems including the July 2024 deepfake protections and September 2025 StopNCII partnership.

When: The announcement occurred February 10, 2026, with rollout beginning "over the coming days" in most countries. Google plans to expand availability to additional regions soon but did not specify the complete implementation timeline.

Where: The tool operates within Google Search's image results interface, with initial availability in "most countries" and expansion to more regions planned. The removal process affects content visibility in Google Search but does not delete images from source websites. Geographic variations may exist based on local regulations and infrastructure deployment.

Why: The update addresses the administrative burden victims face when reporting non-consensual intimate imagery by eliminating the need to submit individual requests for each image. Google aims to reduce victim harm by simplifying access, enabling batch submissions, providing ongoing automated protection, and connecting affected individuals with support resources. The tool represents the latest phase in Google's multi-year effort to combat non-consensual intimate imagery following advocacy from survivors and civil liberties organizations.