Google Ads introduced a streamlined approach to broad match keyword experiments on June 30, 2025, fundamentally changing how advertisers test keyword strategies. The new methodology eliminates the need for campaign duplication, instead dividing traffic and budget within existing campaigns between control and treatment groups.

According to the official Google Ads documentation, the new approach aims at "delivering faster results and reducing some of the common experimentation errors, by diverting traffic and budget within the existing campaign instead of creating a campaign copy for the experiment treatment arm."


Summary

Who: Google Ads platform serving advertisers using Search campaigns with Smart Bidding strategies

What: New Broad Match experiments methodology that tests keyword match types within single campaigns instead of creating campaign duplicates

When: Announced June 30, 2025, available immediately through Recommendations and Experiments pages

Where: Available globally through Google Ads interface in Recommendations and Experiments sections

Why: To deliver faster experimental results, reduce setup errors, and shorten learning periods by maintaining traffic within existing campaign structures rather than splitting across duplicate campaigns

The traditional broad match experimentation process required advertisers to create complete campaign copies. Search campaigns would be duplicated, with one version serving as the control group maintaining original keywords, while the duplicate campaign acted as the treatment arm with broad match versions added. This approach often created synchronization issues between campaigns and extended learning periods as traffic split between entirely separate campaign structures.

The updated system operates differently. Traffic and budget are split within a single existing Search campaign. The control group receives one share of the campaign's traffic and budget and serves only the original keywords. The treatment group receives the remaining share and serves both the original keywords and broad match versions of those same keywords.
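To make the single-campaign split concrete, here is a minimal sketch of how such an arrangement could be modeled. It is not Google Ads code: the `Keyword` type, the `assign_arm` and `keywords_for_arm` helpers, hashing an auction ID to pick an arm, and the `treatment_share` parameter are all hypothetical, chosen only to illustrate "one campaign, two arms, treatment adds broad versions". Google does not say in this announcement how it assigns traffic to each arm.

```python
import hashlib
from dataclasses import dataclass

# Hypothetical model of a single-campaign broad match experiment; not a Google Ads API.


@dataclass(frozen=True)
class Keyword:
    text: str
    match_type: str  # "EXACT", "PHRASE" or "BROAD"


def assign_arm(auction_id: str, treatment_share: float) -> str:
    """Deterministically bucket an auction into 'control' or 'treatment'.

    The top 53 bits of a SHA-256 digest give a stable value in [0, 1),
    so the same ID always lands in the same arm.
    """
    digest = hashlib.sha256(auction_id.encode("utf-8")).digest()
    bucket = (int.from_bytes(digest[:8], "big") >> 11) / 2**53
    return "treatment" if bucket < treatment_share else "control"


def keywords_for_arm(arm: str, original: list[Keyword]) -> list[Keyword]:
    """Control serves the original keywords; treatment also serves broad versions."""
    if arm == "control":
        return list(original)
    broad = [Keyword(k.text, "BROAD") for k in original]
    # dict.fromkeys removes duplicates while preserving order, so a keyword
    # present as both exact and phrase yields only one broad version.
    return list(dict.fromkeys(original + broad))
```

For example, `keywords_for_arm(assign_arm("auction-123", 0.5), [Keyword("running shoes", "EXACT")])` returns either the original list or the original plus `Keyword("running shoes", "BROAD")`, depending on the arm. Deterministic hashing is one common way to keep assignments stable in experiments; it stands in here for whatever mechanism Google actually uses.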

This architectural change delivers several technical advantages. Setup errors become less likely because both control and treatment arms exist within the same campaign structure. Synchronization issues between separate campaigns disappear, since there is no second campaign to keep in sync. Learning periods shorten because traffic remains consolidated within one campaign rather than distributed across multiple campaign copies.

Statistically significant results should also arrive sooner under this model. Traditional broad match experiments required extended periods to accumulate sufficient data across separate campaigns. The new approach keeps traffic concentrated in one campaign, enabling quicker conclusions about whether adding broad match versions outperforms the original exact and phrase match keywords alone.
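Google's announcement does not say which statistical test the Experiments platform applies. As an illustration of what "reaching significance" means, the sketch below uses a standard two-proportion z-test on conversion rates, swapped in here as a generic textbook method. The function name and the conversion and click counts in the usage note are hypothetical inputs, not Google data.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: both arms share one conversion rate.

    Arm A is the control and arm B the treatment; n_a and n_b are clicks (or
    any other trial count), conv_a and conv_b the conversions in each arm.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # No variation at all (0% or 100% conversion in both arms): nothing to test.
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

With the same underlying conversion rates, quadrupling the traffic in each arm (say from 5,000 to 20,000 clicks) doubles the z statistic and shrinks the p-value. That is the intuition behind the claim that keeping traffic concentrated, rather than diluted across duplicate campaigns, gets an experiment to a conclusion sooner.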

Implementation follows two distinct pathways. Advertisers can access the feature through the Recommendations page by selecting "View XX recommendations" under the "Add broad match keywords" recommendation card. From there, they select the three-dot icon beside their target campaign and choose "Apply as an experiment."

Alternatively, the Experiments page provides direct access. Users navigate to the "All Experiments" section and locate the recommended experiments area. The "Add Broad Match keywords" card contains a "Create Experiment" option that launches the setup process.

The setup process requires specific parameters. Advertisers must define start and end dates for the experiment duration. An experiment name helps organize multiple tests running simultaneously. Google Ads automatically handles traffic distribution between control and treatment groups once the experiment launches.
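The parameters above can be summarized as a small, validated record. This is a minimal sketch under stated assumptions: `BroadMatchExperimentSpec` and its field names are hypothetical and do not correspond to any Google Ads API type. The `auto_apply_if_favorable` flag mirrors the "Apply your experiment changes if results are favorable" option that advertisers can select during experiment creation.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class BroadMatchExperimentSpec:
    """Hypothetical container for broad match experiment settings; not a Google Ads API type."""

    name: str
    campaign: str  # the existing Search campaign the experiment runs inside
    start: date
    end: date
    auto_apply_if_favorable: bool = False

    def __post_init__(self) -> None:
        # Reject settings that could never produce a valid experiment.
        if not self.name.strip():
            raise ValueError("experiment name must not be empty")
        if self.end <= self.start:
            raise ValueError("end date must be after start date")

    @property
    def duration_days(self) -> int:
        """Number of days between the start and end dates."""
        return (self.end - self.start).days
```

Traffic distribution is deliberately absent from the record, since Google Ads handles the split between control and treatment automatically once the experiment launches.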

Marketing measurement capabilities have become increasingly sophisticated, with Google emphasizing that organizations conducting monthly experiments can achieve significant performance improvements over time.

Results appear under the "Experiments" tab with an expanded "Experiment summary" view available when selecting the specific experiment campaign. This centralized reporting eliminates the need to compare performance across separate campaigns as required in traditional broad match testing.

Advertisers have two options for applying experimental results. During experiment creation, they can select "Apply your experiment changes if results are favorable" to enable automatic implementation if performance metrics improve. Alternatively, manual application occurs after experiment completion by selecting "Apply" from the Experiments table.

The automated application feature represents a significant advancement in experiment management. Previously, advertisers needed to manually monitor experiment results and implement changes across multiple campaigns. The new system can automatically add broad match versions to campaigns when predetermined performance thresholds are met.
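Google does not define "favorable" in this announcement, so any concrete rule is an assumption. The sketch below shows one plausible shape for such a rule: apply only if the treatment arm converts more after adjusting for its traffic share, its cost per acquisition does not rise beyond a tolerance, and the difference is statistically significant. `ArmResult`, `should_auto_apply`, `alpha` and `max_cpa_increase` are all hypothetical names and thresholds.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ArmResult:
    """Hypothetical per-arm totals at the end of an experiment."""

    conversions: float
    cost: float
    traffic_share: float  # fraction of campaign traffic this arm received, e.g. 0.5

    @property
    def cpa(self) -> float:
        # Cost per acquisition; infinite when the arm has no conversions.
        return self.cost / self.conversions if self.conversions else float("inf")

    @property
    def scaled_conversions(self) -> float:
        # Normalize by traffic share so uneven splits compare fairly.
        return self.conversions / self.traffic_share


def should_auto_apply(control: ArmResult, treatment: ArmResult, p_value: float,
                      alpha: float = 0.05, max_cpa_increase: float = 0.0) -> bool:
    """Hypothetical 'apply if favorable' rule; Google's real criteria are not published here."""
    more_conversions = treatment.scaled_conversions > control.scaled_conversions
    cpa_ok = treatment.cpa <= control.cpa * (1 + max_cpa_increase)
    significant = p_value < alpha
    return more_conversions and cpa_ok and significant
```

Requiring all three conditions keeps the rule conservative: a cheaper but smaller treatment arm, or a larger arm that costs too much per conversion, is left for manual review.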

Technical requirements limit availability to specific campaign types. The feature supports only campaigns using Smart Bidding strategies. Portfolio bid strategies are explicitly not supported, restricting usage to campaigns with individual automated bidding approaches.

This limitation aligns with Google's broader push toward automated bidding strategies. Recent platform changes have emphasized conversion-based bidding, though some implementations have created controversy among advertisers who experienced unexpected broad match activations.

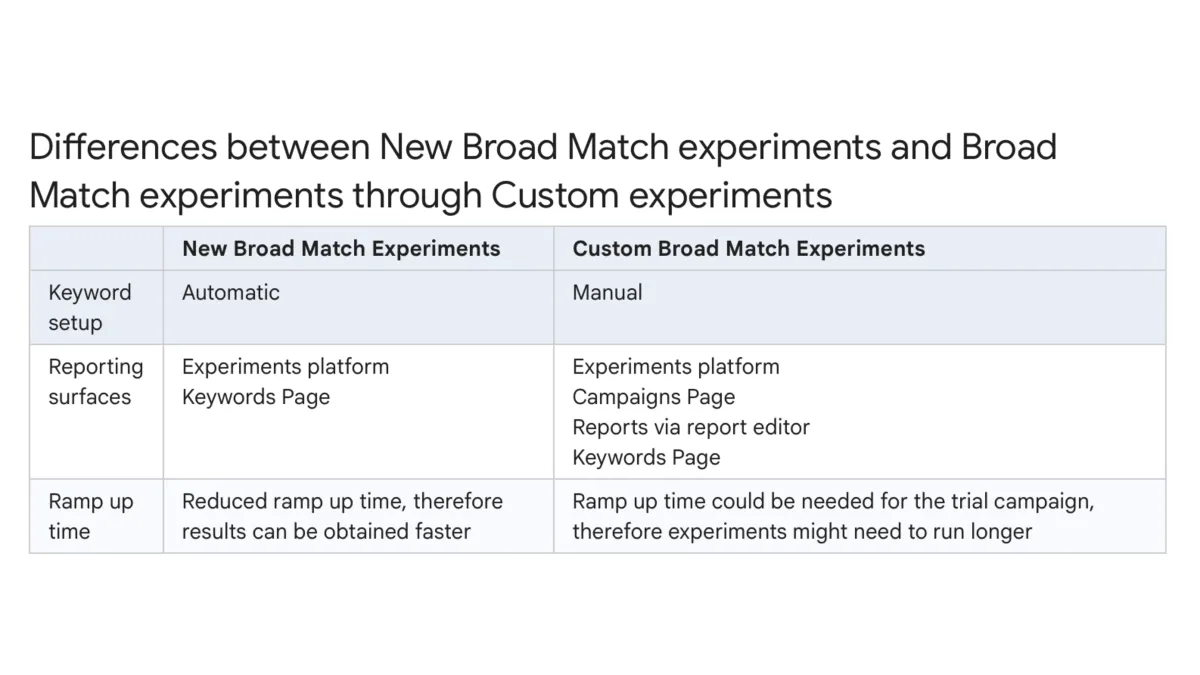

The reporting structure differs substantially from traditional Custom Experiments. New Broad Match Experiments present data through the Experiments platform and Keywords Page. Custom Broad Match Experiments utilize the Experiments platform, Campaigns Page, report editor outputs, and Keywords Page. This consolidated reporting reduces complexity for advertisers managing multiple testing initiatives.

Keyword setup automation distinguishes the new approach from manual Custom Experiment configurations. The automated system identifies appropriate keywords for broad match testing and implements the experimental structure without requiring manual keyword list management.

Ramp-up time improvements provide another operational advantage. Traditional Custom Experiments required separate campaigns to establish performance baselines, extending the period before meaningful data emerged. The new system reduces this ramp-up time by maintaining traffic within established campaign structures.

The announcement comes amid broader changes to Google Ads experimentation capabilities. Video experiments received similar streamlining in October 2024, while Performance Max campaigns gained in-campaign experimentation features in May 2024.

The technical infrastructure supporting these experiments represents Google's continued investment in advertising measurement capabilities. Minimum budgets for incrementality testing were recently lowered to $5,000 through a Bayesian methodology, making advanced measurement accessible to smaller advertisers.

Industry adoption of the new broad match experiments feature will likely accelerate given the reduced complexity and faster results. Traditional broad match testing often discouraged smaller advertisers due to setup complexity and extended testing periods. The streamlined approach lowers both barriers while still comparing control and treatment groups within a controlled experiment.

The feature availability through both Recommendations and Experiments pages ensures discovery by advertisers with different workflow preferences. Recommendations-driven users encounter the feature as part of Google's automated optimization suggestions. Experiment-focused advertisers can access it directly through dedicated testing interfaces.

Training implications for marketing teams should be minimal given the simplified setup process. Traditional broad match experiments required understanding of campaign duplication, traffic splitting across separate campaigns, and complex performance comparison methodologies. The new approach reduces these requirements to basic experiment parameter definition.

Results interpretation becomes more straightforward with consolidated reporting. Advertisers no longer need to calculate performance differences across separate campaigns or account for learning period variations between control and treatment campaign copies. Direct comparison within the same campaign structure provides clearer performance insights.

The automated application option particularly benefits agencies managing multiple client accounts. Manual experiment monitoring and implementation across numerous broad match tests created significant operational overhead. Automated application based on performance thresholds reduces this burden and applies broad match as soon as a test concludes favorably, rather than waiting for someone to review the results.

Google's documentation emphasizes that Custom Experiments remain available for advertisers requiring advanced experimentation setups. This maintains flexibility for complex testing scenarios while providing a simplified default option for standard broad match evaluation.

Timeline

- June 30, 2025: Google Ads announces new Broad Match experiments approach with single-campaign methodology

- May 25, 2025: Google reduces incrementality testing budget requirements to $5,000

- January 5, 2025: Google publishes comprehensive guide on building marketing experimentation capabilities

- December 26, 2024: Google investigates reports of automatic broad match activation during bidding strategy changes

- October 20, 2024: Google simplifies Video experiments for creative performance testing

- May 1, 2024: Google introduces in-campaign experimentation for Performance Max campaigns

- October 2023: Google launches Performance Max Uplift experiments

- October 2023: Google introduces Demand Gen experiments feature