LangChain has outlined a six-stage framework for building production-grade AI agents, aimed at the widespread failures enterprises encounter when deploying agents. The methodology treats Standard Operating Procedure (SOP) design as the foundation that must be in place before technical implementation begins, according to Armand Ruiz, IBM's VP of AI Platform, who highlighted the framework in a LinkedIn post.

The framework emerged from observations that most companies building AI agents skip essential planning phases. "Most companies building AI agents today skip the most important step: Designing the Standard Operating Procedure," Ruiz stated in his LinkedIn analysis. The post, which generated significant industry engagement, positioned the LangChain framework as a solution to prototype-to-production failures plaguing enterprise AI initiatives.

Subscribe the PPC Land newsletter ✉️ for similar stories like this one. Receive the news every day in your inbox. Free of ads. 10 USD per year.

Summary

Who: LangChain's framework development team; Armand Ruiz, IBM VP of AI Platform, who promoted the methodology; and enterprise teams, including Product Owners, Subject Matter Experts, Engineers, and Support staff, that implement structured agent development

What: Six-stage framework for AI agent development emphasizing Standard Operating Procedure design before technical implementation, addressing widespread enterprise failures in prototype-to-production transitions through systematic methodology

When: Framework highlighted in January 2025 LinkedIn post by Armand Ruiz, building on LangChain's ongoing development work and gaining prominence throughout 2025 as enterprises seek systematic approaches

Where: Enterprise environments across industries implementing AI agents, with particular relevance for business process automation, customer service, and workflow optimization requiring reliable, production-grade systems

Why: Most companies skip critical procedural design phases, leading to prototype failures; the framework offers a systematic methodology that responds to the 35% success rate leading agents achieve on multi-turn business tasks and to enterprise requirements for reliability, compliance, and scalable agent deployment


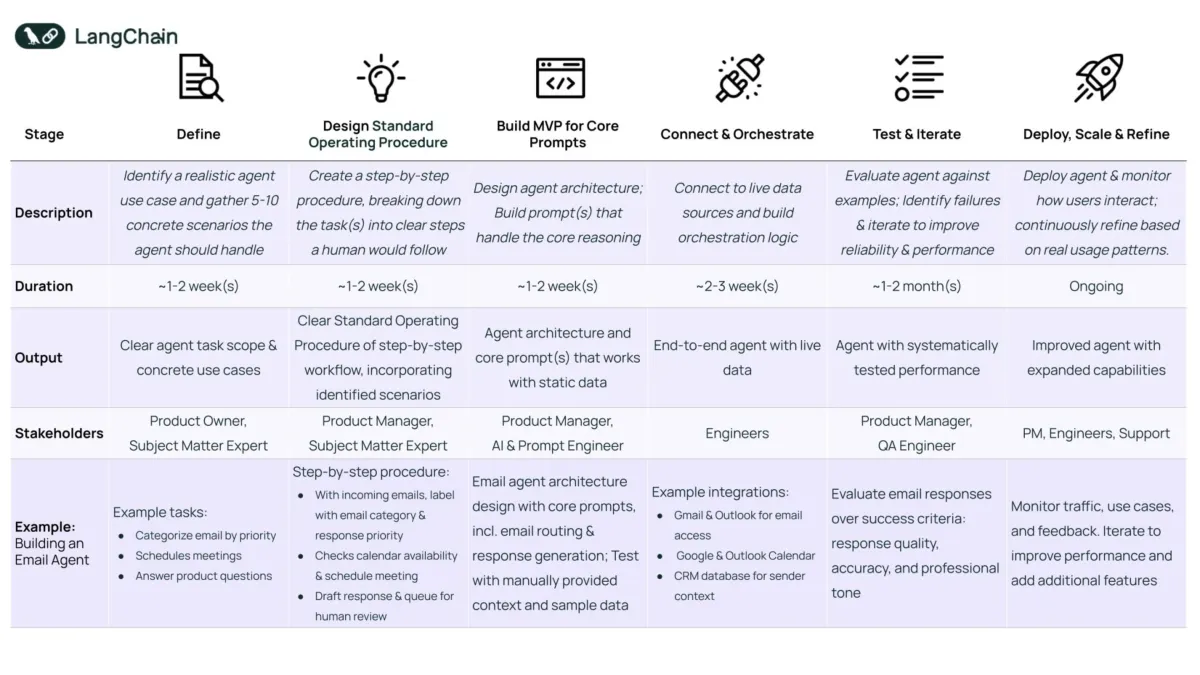

According to the framework documentation, the six stages run from initial definition through continuous refinement. Stage one requires identifying realistic agent use cases and gathering 5-10 concrete scenarios the agent should handle. It is estimated to take 1-2 weeks and involves Product Owners and Subject Matter Experts. This foundational phase produces a clear agent task scope and the concrete use cases that later phases build on.

Stage two focuses on designing Standard Operating Procedures through step-by-step workflow creation. The methodology breaks down tasks into clear steps that humans would follow, incorporating identified scenarios from the initial phase. Product Managers and Subject Matter Experts collaborate during this 1-2 week period to produce standardized procedures that form the backbone of agent behavior.

The third stage moves into technical implementation by building a minimum viable product (MVP) around the core prompts. Teams design the agent architecture and build prompts that handle core reasoning, validating them against static data. Product Managers collaborate with AI and Prompt Engineers during this 1-2 week phase to produce a working agent architecture with tested core prompts.
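To make stage three concrete, here is a minimal Python sketch (not LangChain's code) of validating a core routing prompt against static data before any live integration. The prompt wording, the `STATIC_EMAILS` fixtures, and the keyword-based `fake_model` stub, which stands in for a real LLM call, are all hypothetical.

```python
from string import Template

# Hypothetical routing prompt; the wording is illustrative, not taken from LangChain.
ROUTING_PROMPT = Template(
    "You are an email triage assistant.\n"
    "Classify the email into one of: $categories.\n"
    "Email subject: $subject\n"
    "Email body: $body\n"
    "Answer with the category name only."
)
CATEGORIES = ["meeting_request", "product_question", "other"]

# Static fixtures stand in for live data while the core prompt is being validated.
STATIC_EMAILS = [
    {"subject": "Can we meet Tuesday?", "body": "Are you free for 30 minutes?",
     "expected": "meeting_request"},
    {"subject": "Pricing question", "body": "Does the Pro plan include SSO?",
     "expected": "product_question"},
]


def build_prompt(email: dict) -> str:
    """Fill the prompt template with one email's fields."""
    return ROUTING_PROMPT.substitute(
        categories=", ".join(CATEGORIES), subject=email["subject"], body=email["body"]
    )


def fake_model(prompt: str) -> str:
    """Keyword stub standing in for a real LLM call (assumption)."""
    # Inspect only the email portion so category names in the instructions don't match.
    email_part = prompt.split("Email subject:", 1)[1].lower()
    if "meet" in email_part:
        return "meeting_request"
    if "plan" in email_part or "pricing" in email_part:
        return "product_question"
    return "other"


def run_static_suite(model=fake_model) -> float:
    """Share of static fixtures that the prompt plus model route to the expected category."""
    hits = sum(model(build_prompt(e)) == e["expected"] for e in STATIC_EMAILS)
    return hits / len(STATIC_EMAILS)


print(run_static_suite())  # 1.0 with the keyword stub
```

Because the fixtures are static, the same suite can be rerun after every prompt change. Testing a real LLM would only require swapping `fake_model` for an actual model client.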

Connection and orchestration comprise the fourth stage, requiring 2-3 weeks of engineering work to integrate live data sources and build orchestration logic. The framework emphasizes end-to-end agent functionality with real-time data access, moving beyond static implementations toward production-ready systems. Engineers focus on integration patterns with Gmail, Outlook, Google Calendar, and CRM databases for comprehensive business context.

Stage five implements systematic testing and iteration over 1-2 months, with Product Managers and QA Engineers evaluating agent performance against predefined success criteria. The methodology requires assessment of response quality, accuracy, and professional communication tone. This extended evaluation phase addresses reliability concerns that prevent enterprise adoption of agent technologies.
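Stage five's evaluation against predefined criteria can be sketched as a small harness. This Python example works under stated assumptions: the `Case` structure and the rule-based `quality`, `accuracy`, and `tone` graders are hypothetical stand-ins for whatever human review or model-based grading a team actually uses.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class Case:
    request: str               # incoming email the agent answered
    reply: str                 # agent output under test
    required_facts: list[str]  # facts the reply must mention to count as accurate


# Hypothetical graders: crude rules standing in for human review or an LLM judge.
def quality(case: Case) -> bool:
    return case.reply.lower().startswith(("hi", "hello", "dear")) and len(case.reply.split()) >= 5


def accuracy(case: Case) -> bool:
    return all(fact.lower() in case.reply.lower() for fact in case.required_facts)


def tone(case: Case) -> bool:
    return not any(word in case.reply.lower() for word in ("whatever", "lol", "asap!!"))


CRITERIA: dict[str, Callable[[Case], bool]] = {
    "quality": quality, "accuracy": accuracy, "tone": tone,
}


def evaluate(cases: list[Case]) -> dict[str, float]:
    """Pass rate per criterion, plus the share of cases that pass every criterion."""
    report = {name: sum(check(c) for c in cases) / len(cases) for name, check in CRITERIA.items()}
    report["all_criteria"] = sum(all(check(c) for check in CRITERIA.values()) for c in cases) / len(cases)
    return report


cases = [
    Case("Does Pro include SSO?", "Hi Sam, yes, the Pro plan includes SSO.", ["SSO"]),
    Case("Can we meet Tuesday?", "whatever works lol", ["Tuesday"]),
]
print(evaluate(cases))  # {'quality': 0.5, 'accuracy': 0.5, 'tone': 0.5, 'all_criteria': 0.5}
```

Reporting the share of cases that pass every criterion, not just per-criterion averages, prevents a strong score on one dimension from masking failures on another.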

The final stage enables ongoing deployment, scaling, and refinement based on real usage patterns. Product Managers, Engineers, and Support teams monitor traffic, use cases, and feedback to continuously improve performance and expand capabilities. The framework positions this as an ongoing process rather than a fixed endpoint, reflecting the adaptive nature of production AI systems.
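The six stages and their ordering can be captured as plain data. This Python sketch is a hypothetical illustration. The stage labels are paraphrased, and the durations and roles are taken from the stage descriptions above. The gating helpers `can_start` and `next_stage` are assumptions, included to show the framework's point that SOP design precedes technical work.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    number: int
    name: str                # short label paraphrased from the article
    duration: str            # estimate as stated in the framework description
    roles: tuple[str, ...]


STAGES = (
    Stage(1, "Define the job with examples", "1-2 weeks", ("Product Owner", "Subject Matter Expert")),
    Stage(2, "Design the SOP", "1-2 weeks", ("Product Manager", "Subject Matter Expert")),
    Stage(3, "Build an MVP of core prompts", "1-2 weeks", ("Product Manager", "AI/Prompt Engineer")),
    Stage(4, "Connect and orchestrate", "2-3 weeks", ("Engineer",)),
    Stage(5, "Test and iterate", "1-2 months", ("Product Manager", "QA Engineer")),
    Stage(6, "Deploy, scale, and refine", "ongoing", ("Product Manager", "Engineer", "Support")),
)


def scope_ready(scenarios: list[str]) -> bool:
    """Stage one is done once 5-10 concrete scenarios have been written down."""
    return 5 <= len(scenarios) <= 10


def can_start(stage_number: int, completed: set[int]) -> bool:
    """A stage unlocks only when every earlier stage is complete (SOP before prompts)."""
    return all(n in completed for n in range(1, stage_number))


def next_stage(completed: set[int]) -> Stage | None:
    """Return the first unfinished stage, or None when all six are marked complete."""
    return next((s for s in STAGES if s.number not in completed), None)


print(next_stage({1, 2}).name)  # Build an MVP of core prompts
print(can_start(3, {1}))        # False: the SOP (stage 2) is not finished yet
```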

The email agent example demonstrates practical framework application across all six stages. Initial tasks include categorizing emails by priority, scheduling meetings, and answering product questions. The SOP design phase creates structured procedures for incoming email labeling with category and response priority, calendar availability checking, and response drafting with human review queues.
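The email agent's SOP can be expressed as explicit, ordered steps. This minimal Python sketch makes several substitutions. Keyword rules replace the LLM classifier, a set of busy slots replaces a real calendar, and the `EmailAgent` class with its categories and priorities is hypothetical. The point is that each SOP step maps to a visible, testable branch and that drafts go to a human review queue instead of being sent.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Email:
    sender: str
    subject: str
    body: str


@dataclass
class EmailAgent:
    busy_slots: set[str]                                    # stand-in for a calendar
    review_queue: list[dict] = field(default_factory=list)  # drafts awaiting a human

    def label(self, email: Email) -> tuple[str, str]:
        """SOP step 1: assign a category and a response priority (keyword rules, not an LLM)."""
        text = f"{email.subject} {email.body}".lower()
        if "meet" in text:
            return "meeting_request", "high"
        if "?" in text:
            return "product_question", "medium"
        return "fyi", "low"

    def handle(self, email: Email, proposed_slot: str | None = None) -> tuple[str, str]:
        category, priority = self.label(email)
        if category == "meeting_request":
            # SOP step 2: check calendar availability before proposing a time.
            if proposed_slot is None:
                draft = "Which times work best for you?"
            elif proposed_slot in self.busy_slots:
                draft = f"{proposed_slot} is taken; could we find another time?"
            else:
                draft = f"{proposed_slot} works for me."
        elif category == "product_question":
            draft = "Thanks for your question; a specialist will confirm the details."
        else:
            return category, priority  # low-priority mail is labeled only, no draft
        # SOP step 3: queue the draft for human review instead of sending it.
        self.review_queue.append({"to": email.sender, "priority": priority, "draft": draft})
        return category, priority


agent = EmailAgent(busy_slots={"Tue 10:00"})
print(agent.handle(Email("ana@example.com", "Meet Tuesday?", "Can we meet at 10?"), "Tue 10:00"))
print(agent.handle(Email("news@example.com", "Newsletter", "Monthly update")))
print(len(agent.review_queue))  # 1: only the meeting request produced a draft
```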

Technical implementation involves email agent architecture design with core prompts for email routing and response generation. The orchestration phase integrates Gmail and Outlook for email access, Google and Outlook Calendar systems, and CRM databases for sender context. Testing evaluates email responses against quality, accuracy, and tone criteria before deployment with ongoing performance monitoring.
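The orchestration phase largely consists of feeding live data into the prompts built earlier. One way to keep that wiring testable is to hide each data source behind a small interface. The Python sketch below shows this approach as an assumption, not as the framework's prescribed design. `MailSource`, `Calendar`, `CRM`, and their methods are hypothetical. Actual Gmail, Outlook, calendar, and CRM clients have their own APIs and would need thin adapters that expose these methods.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Protocol


# Hypothetical interfaces; real mail, calendar, and CRM clients would be wrapped to match.
class MailSource(Protocol):
    def fetch_unread(self) -> list[dict]: ...


class Calendar(Protocol):
    def is_free(self, slot: str) -> bool: ...


class CRM(Protocol):
    def lookup(self, address: str) -> dict: ...


@dataclass
class FakeMail:
    messages: list[dict]

    def fetch_unread(self) -> list[dict]:
        return list(self.messages)


@dataclass
class FakeCalendar:
    busy: set[str]

    def is_free(self, slot: str) -> bool:
        return slot not in self.busy


@dataclass
class FakeCRM:
    records: dict[str, dict]

    def lookup(self, address: str) -> dict:
        return self.records.get(address, {"tier": "unknown"})


def build_context(mail: MailSource, calendar: Calendar, crm: CRM) -> list[dict]:
    """Assemble sender context and availability for each unread email before prompting."""
    return [
        {
            "sender": msg["from"],
            "profile": crm.lookup(msg["from"]),
            "slot_free": calendar.is_free(msg["slot"]) if "slot" in msg else None,
            "body": msg["body"],
        }
        for msg in mail.fetch_unread()
    ]


contexts = build_context(
    FakeMail([{"from": "ana@example.com", "body": "Tue 10:00?", "slot": "Tue 10:00"}]),
    FakeCalendar({"Tue 10:00"}),
    FakeCRM({"ana@example.com": {"tier": "enterprise"}}),
)
print(contexts[0]["profile"], contexts[0]["slot_free"])  # {'tier': 'enterprise'} False
```

With in-memory fakes, the same orchestration code can run in stage five's tests and against live connectors in production.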

Context engineering has emerged as the critical discipline separating successful AI agent implementations from disappointing failures, aligning with the framework's emphasis on structured information architecture. Industry practitioners identify information quality as the determining factor between successful implementations and prototype failures, supporting LangChain's methodical approach.

Enterprise adoption challenges validate the framework's structured methodology. Salesforce research published in June 2025 revealed that leading AI agents achieve only 35% success rates in multi-turn business scenarios, demonstrating significant gaps between current capabilities and enterprise requirements. The LangChain framework addresses these limitations through systematic workflow design and iterative improvement processes.

Agent engineering represents a new discipline that combines software engineering, prompting, product development, and machine learning, according to Ruiz's analysis. The framework codifies this interdisciplinary approach through structured phases that establish a proper foundation before technical complexity is added. Traditional approaches that prioritize prompt engineering or integration without procedural foundations typically fail to advance beyond the prototype stage.

The framework's emphasis on human-reviewable processes addresses enterprise security and compliance requirements. Each stage includes stakeholder definitions ranging from Product Owners and Subject Matter Experts to Engineers and Support teams, ensuring appropriate oversight throughout development cycles. This collaborative approach contrasts with developer-centric methodologies that may lack business context validation.

Production deployment patterns align with the framework's systematic approach. LangChain's State of AI Agents Report indicates that 51% of organizations currently deploy agents in production, with 78% maintaining active implementation plans. Mid-sized companies with 100-2,000 employees demonstrate 63% adoption rates, suggesting that structured methodologies enable successful scaling beyond prototype phases.

Technical architecture considerations support the framework's orchestration phase requirements. Google's September 2024 technical whitepaper outlined a three-layer architecture for AI agents, consisting of a model layer, an orchestration layer, and a tools layer. The document specifically highlighted integration patterns that use the LangChain and LangGraph frameworks for production-grade implementations.
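The three-layer split can be illustrated with a toy loop. This Python sketch is a hypothetical simplification, not code from the whitepaper or from LangChain or LangGraph. `stub_model` plays the model layer, the `TOOLS` registry is the tools layer, and `run` is the orchestration layer, which alternates between the two under a step limit.

```python
from __future__ import annotations

from typing import Callable

# Tools layer: named functions the agent may call (hypothetical tool).
BUSY = {"Tue 10:00"}
TOOLS: dict[str, Callable[[str], str]] = {
    "calendar_free": lambda slot: "busy" if slot in BUSY else "free",
}


# Model layer: a scripted stub; a real system would send the history to an LLM.
def stub_model(history: list[str]) -> dict:
    if not any(entry.startswith("tool:") for entry in history):
        return {"tool": "calendar_free", "input": "Tue 10:00"}
    return {"answer": f"That slot is {history[-1].removeprefix('tool:')}."}


# Orchestration layer: alternate model decisions and tool calls until an answer appears.
def run(task: str, model: Callable[[list[str]], dict] = stub_model, max_steps: int = 5) -> str:
    history = [f"user:{task}"]
    for _ in range(max_steps):
        decision = model(history)
        if "answer" in decision:
            return decision["answer"]
        result = TOOLS[decision["tool"]](decision["input"])
        history.append(f"tool:{result}")
    raise RuntimeError("step limit reached without an answer")


print(run("Am I free on Tuesday at 10?"))  # That slot is busy.
```

The step limit is a design choice: it bounds cost and prevents a model that never produces a final answer from looping indefinitely.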

The framework also addresses cost efficiency through its structured evaluation phase. Analysis indicates that systematic testing enables evidence-based model selection, with Google's Gemini-2.5-Flash and Gemini-2.5-Pro providing balanced cost-performance ratios for enterprise applications. The framework's evaluation methodology helps organizations avoid expensive models whose performance gains may not justify their cost.
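Once evaluation results exist, model selection reduces to choosing the cheapest candidate that clears a quality bar. The Python sketch below assumes a team already has its own pass rates and per-task costs. The `Candidate` type is hypothetical, and the model names and figures in the example are placeholders, not measurements of Gemini or any other model.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    pass_rate: float   # share of your own evaluation cases the model passed
    unit_cost: float   # your measured cost per 1,000 tasks, in any currency


def cheapest_adequate(candidates: list[Candidate], min_pass_rate: float) -> Candidate | None:
    """Lowest-cost model that clears the quality bar, or None if none does."""
    adequate = [c for c in candidates if c.pass_rate >= min_pass_rate]
    return min(adequate, key=lambda c: c.unit_cost, default=None)


# Placeholder names and numbers for illustration only; they are not benchmark results.
pool = [
    Candidate("small-model", 0.82, 1.0),
    Candidate("mid-model", 0.88, 3.0),
    Candidate("large-model", 0.91, 8.0),
]
print(cheapest_adequate(pool, 0.85).name)  # mid-model
print(cheapest_adequate(pool, 0.95))       # None
```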

Competitive differentiation emerges through the framework's emphasis on procedural design over technical features. While alternative frameworks like Microsoft's AutoGen focus on conversation-based coordination and CrewAI emphasizes role-based execution, LangChain's methodology prioritizes workflow clarity and business process integration. This approach addresses enterprise requirements for reliability and predictability in agent behavior.

Industry engagement with the framework reflects growing recognition of systematic development needs. The LinkedIn post generated substantial discussion among AI practitioners, with responses highlighting similar observations about prototype-to-production failures. The framework provides actionable methodology for organizations struggling to advance beyond experimental implementations.

Microsoft's declaration that agentic systems are making the traditional web obsolete supports the framework's vision of autonomous agent workflows. CEO Satya Nadella's announcement of a 30% AI code-generation milestone illustrates the shift toward agent-driven processes, reinforcing the case for LangChain's systematic approach to enterprise agent development.

The framework's six-stage methodology positions procedural design as the critical success factor for enterprise AI agent implementations. By emphasizing Standard Operating Procedure development before technical complexity, the approach addresses widespread failures in prototype-to-production transitions while providing actionable guidance for systematic agent development across enterprise environments.

Training and skill development implications extend beyond technical implementation to include process design capabilities. The framework requires teams to develop expertise in workflow analysis, procedural documentation, and iterative improvement methodologies alongside traditional programming and prompt engineering skills. This interdisciplinary requirement reflects the emerging field of agent engineering as distinct from conventional software development.

Future applications of the framework may extend beyond individual agent development to multi-agent orchestration. The systematic approach to procedural design and testing provides a foundation for complex collaborations in which multiple specialized agents coordinate to address enterprise challenges that require distributed expertise and coordinated execution across business functions.

Timeline

- October 2024: LangChain releases foundational framework documentation supporting six-stage methodology

- January 2025: IBM VP Armand Ruiz highlights framework in viral LinkedIn post addressing enterprise agent failures

- March 27, 2025: Zeta Global announces AI Agent Studio general availability implementing systematic agent development approaches

- May 21, 2025: Microsoft CEO Satya Nadella announces agentic web architecture validating systematic agent workflow vision

- June 10, 2025: Salesforce publishes CRMArena-Pro benchmark study demonstrating need for systematic development approaches

- June 22, 2025: Context engineering discipline gains prominence supporting framework's procedural emphasis

- July 2025: Framework gains industry adoption as enterprises seek systematic approaches to production agent deployment