Meta, the parent company of Facebook and Instagram, released a statement this week addressing two significant issues that occurred on its platforms following the attempted assassination of former President Donald Trump. The company explained why its AI chatbot, Meta AI, provided inconsistent responses about the incident and why a fact-checking label was incorrectly applied to an authentic photo of Trump after the event. This explanation comes amid growing concerns about the role of AI and content moderation in shaping public discourse around breaking news events.

According to Meta's statement, the first issue involved Meta AI's responses to questions about the assassination attempt. The company acknowledged that AI chatbots, including its own, often struggle to provide accurate information about breaking news or real-time events. This limitation stems from the nature of large language models, which are trained on historical data and cannot instantly incorporate new information. In the case of the Trump incident, Meta AI was initially programmed to provide generic responses rather than risk spreading misinformation during a time of confusion and conflicting reports.

However, this cautious approach led to complaints from users who found the AI refusing to discuss the event at all. Meta admitted that while it has since updated the AI's responses, it should have done so more quickly. The company also noted that in some instances, Meta AI provided incorrect information, including claims that the event didn't happen. These erroneous responses, known in the AI industry as "hallucinations," highlight an ongoing challenge in how AI systems handle real-time events.

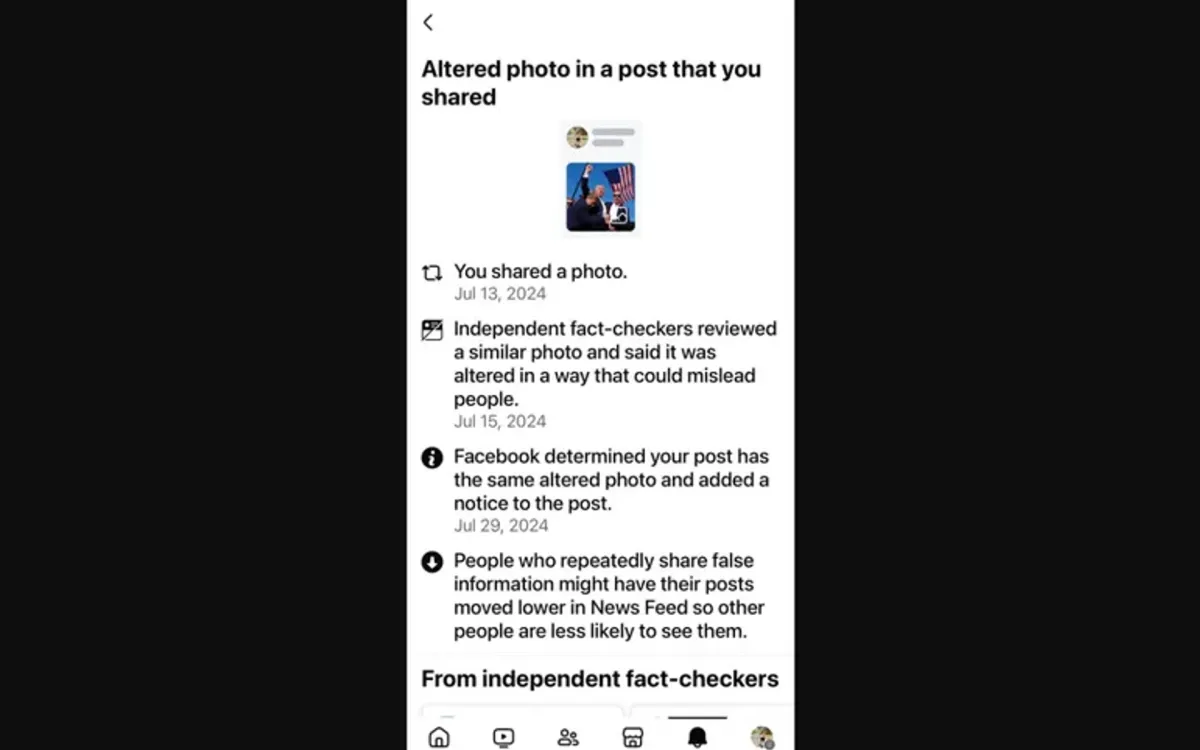

The second issue Meta addressed involved the circulation of a doctored photo of former President Trump with his fist raised, altered to make it appear as though Secret Service agents were smiling. Initially, Meta's fact-checking system correctly labeled this altered image. However, because the doctored photo and the original differed only subtly, the system erroneously applied the fact-check label to the authentic image as well. Meta stated that its teams worked quickly to correct this mistake once it was identified.
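Meta's statement does not describe how its image matching works, so the following is only a hypothetical illustration of how this kind of error can arise in general. It assumes a simple perceptual-hash approach (an "average hash" compared by Hamming distance), which is a common near-duplicate detection technique but not necessarily the one Meta uses. The tiny pixel grids, the function names, and the `max_distance` threshold are all invented for the example.

```python
from typing import List

Image = List[List[int]]  # grayscale pixel values, 0-255 (toy 4x4 grids here)


def average_hash(img: Image) -> List[int]:
    """Return one bit per pixel: 1 if the pixel is brighter than the image mean."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    return [1 if p > mean else 0 for p in pixels]


def hamming(a: List[int], b: List[int]) -> int:
    """Count the positions at which two equal-length hashes differ."""
    return sum(x != y for x, y in zip(a, b))


def matches_flagged(candidate: Image, flagged: Image, max_distance: int) -> bool:
    """Treat the candidate as a copy of the flagged image if the hashes are close."""
    return hamming(average_hash(candidate), average_hash(flagged)) <= max_distance


# Hypothetical "authentic" image.
authentic: Image = [
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [10, 50, 180, 200],
    [10, 10, 200, 200],
]

# Hypothetical "doctored" copy: identical except for a small local edit.
doctored: Image = [row[:] for row in authentic]
doctored[2][1] = 180
doctored[2][2] = 50

# Hypothetical unrelated image.
unrelated: Image = [
    [200, 10, 200, 10],
    [10, 200, 10, 200],
    [200, 10, 200, 10],
    [10, 200, 10, 200],
]

# A loose threshold catches re-uploads of the doctored image, but it also
# sweeps in the authentic original, which differs in only two hash bits.
authentic_flagged_loose = matches_flagged(authentic, doctored, max_distance=3)
authentic_flagged_strict = matches_flagged(authentic, doctored, max_distance=1)
unrelated_flagged_loose = matches_flagged(unrelated, doctored, max_distance=3)
```

In this toy setup the authentic image is flagged under the loose threshold and not under the strict one, while the unrelated image is not flagged in either case. The trade-off is that a stricter threshold would also miss lightly re-encoded copies of the doctored image, which is one reason such systems are typically paired with human review.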

These incidents underscore the complex challenges facing tech companies as they navigate the intersection of AI, content moderation, and breaking news events. The rapid spread of information on social media platforms during crises can often outpace the ability of both human moderators and AI systems to accurately verify and contextualize content. This gap can lead to the proliferation of misinformation or, in cases like this, the incorrect labeling of authentic content.

Meta's transparency in addressing these issues reflects the growing scrutiny tech companies face regarding their handling of sensitive political content and misinformation. The company emphasized its commitment to improving its systems and acknowledged the potential for these errors to create impressions of bias, even when none is intended.

The challenges Meta faced with its AI responses highlight broader industry-wide issues in the development and deployment of generative AI systems. As these technologies become more prevalent in daily online interactions, their limitations in handling real-time events and nuanced content become more apparent. The incident serves as a reminder of the current state of AI technology – while advanced, it is not infallible and requires careful oversight and rapid correction when errors occur.

Meta's experience also raises important questions about the role of AI in disseminating news and information during critical events. While AI can process and analyze vast amounts of data quickly, its inability to contextualize information in real time or understand the nuances of developing situations can lead to the spread of misinformation or confusion.

The fact-checking label issue points to the delicate balance platforms must strike between combating misinformation and avoiding the over-censorship of legitimate content. Automated systems, while efficient, can lack the nuanced understanding required to differentiate between similar but critically different pieces of content. This incident serves as a reminder of the ongoing need for human oversight in content moderation processes, particularly for high-stakes events such as political assassinations or assassination attempts.

Looking forward, Meta's statement indicates a commitment to addressing these issues and improving its AI and fact-checking systems. The company acknowledges that as AI features evolve and more people use them, continued refinement based on user feedback will be necessary. This approach aligns with the broader tech industry's recognition that AI systems, while powerful, require ongoing development and careful implementation, especially in sensitive areas like news dissemination and content moderation.

The incidents also highlight the need for public understanding of the limitations of current AI technologies. As users increasingly interact with AI-powered tools and chatbots, it's crucial to maintain a critical perspective and seek verification from multiple sources, especially for breaking news events.

In conclusion, Meta's response to the AI and fact-checking issues surrounding news of the Trump assassination attempt demonstrates the ongoing challenges of managing technology, information dissemination, and content moderation together. As AI continues to play a larger role in our digital interactions, incidents like these serve as important reminders of the need for transparency, rapid response to errors, and continued improvement of these systems.

Key facts from Meta's statement

- Meta AI initially provided generic responses about the Trump assassination attempt to avoid spreading misinformation.
- Some users reported Meta AI refusing to discuss the event entirely.
- In some cases, Meta AI provided incorrect information, including denying the event occurred.
- A fact-checking label was incorrectly applied to an authentic photo of Trump due to similarities with a doctored image.
- Meta acknowledged the need for quicker updates to AI responses during breaking news events.
- The company emphasized its commitment to improving AI and fact-checking systems.
- Meta recognized the potential for these errors to create impressions of bias, even when unintended.
- The incidents highlight industry-wide challenges in AI's handling of real-time events and nuanced content moderation.