Meta unveils advanced AI infrastructure with new custom chips

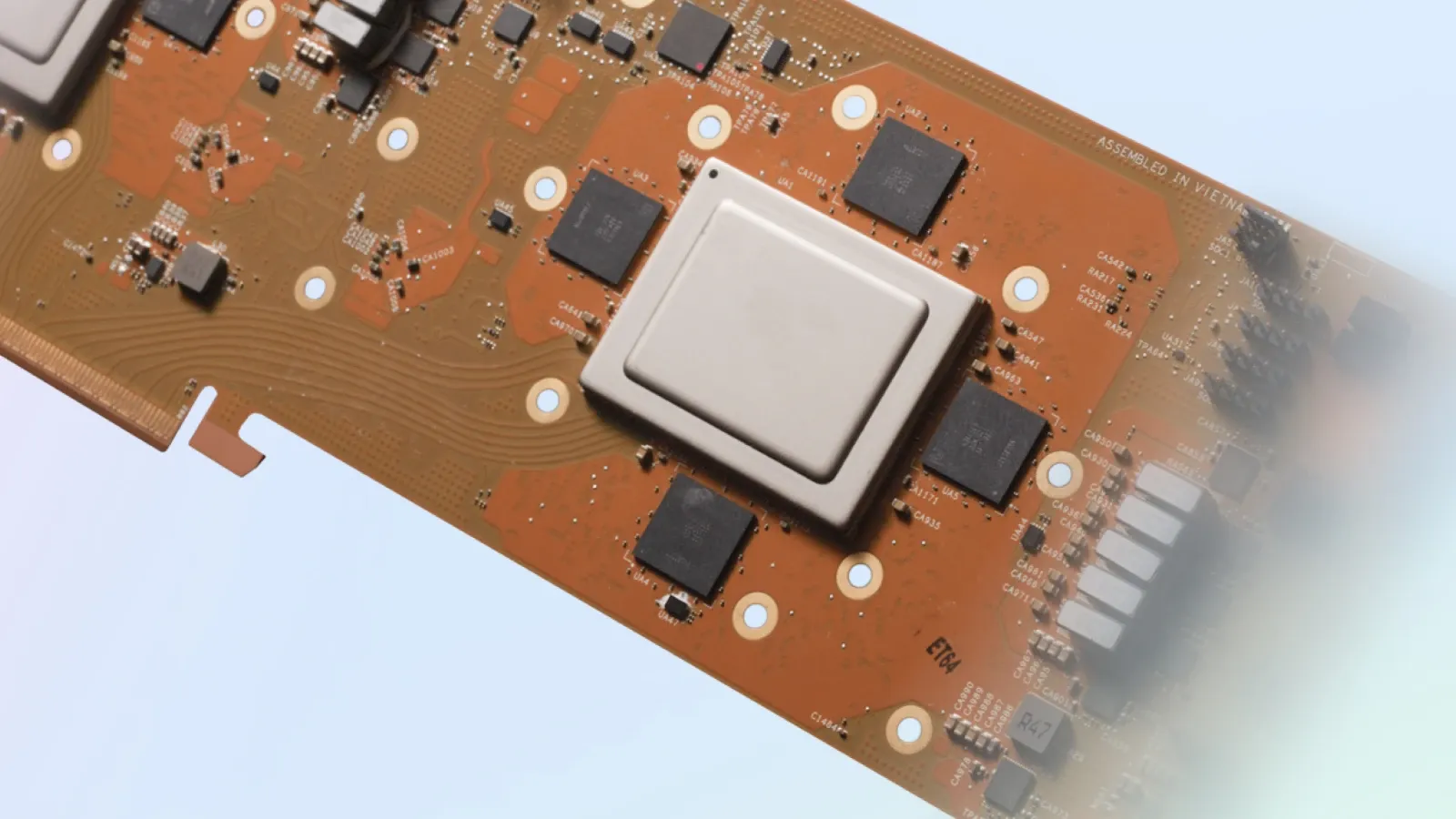

Meta this week announced a major upgrade to its artificial intelligence (AI) infrastructure: the next generation of its custom-designed Meta Training and Inference Accelerator (MTIA) chip, which the company expects to strengthen AI capabilities across its platforms.

- Enhanced Performance: The new MTIA chip outperforms its predecessor, enabling faster, more efficient processing of Meta's AI models.

- Focus on Recommendations: Designed for Meta's ranking and recommendation models, the chip powers the personalized suggestions that drive user experience on Facebook and Instagram.

- AI-Centric Investment: This new chip underscores Meta's commitment to building AI-powered infrastructure to support cutting-edge experiences, including future generative AI products.

Next-Generation Efficiency

The first-generation MTIA chip, introduced last year, set a baseline for in-house custom silicon development at Meta. The latest iteration goes further, doubling both compute and memory bandwidth so that AI-driven recommendations can be served more efficiently.

Real-World Results

Already deployed in Meta's data centers, the MTIA chip is running AI models in production. According to Meta, this brings:

- Scalability: More compute power for complex AI workloads.

- Flexibility: Supports both simple and complex ranking models.

- Superior Performance: Meta says the chip outperforms commercially available GPUs on the workloads it was designed for.

Meta's custom silicon investment isn't stopping here. The company is developing MTIA to support even more advanced AI use cases and is working on next-generation hardware systems to complement its in-house solutions.

MTIA chips and AI are crucial for Meta's success

MTIA chips and AI are not just about today's Meta; they are the infrastructure that will power the company's future. They let Meta stay ahead in personalizing experiences, drive innovation, and efficiently handle the massive scale of AI needed to deliver on its long-term vision.

1. Efficiency and Performance:

- Tailored Design: MTIA chips are custom-designed for Meta's specific AI needs, unlike general-purpose GPUs. This leads to superior efficiency in running ranking and recommendation algorithms, the backbone of Meta's platforms.

- Reduced Reliance: By developing in-house chips, Meta reduces dependence on external suppliers like Nvidia, potentially providing more control and adaptability for future needs.

- Cost Savings: While the upfront investment in custom silicon is high, better efficiency can translate into long-term operational savings on energy and hardware.

2. User Experience:

- Hyper-Personalization: AI powering ranking and recommendation systems is what makes Facebook and Instagram feeds feel distinctly tailored to each user. MTIA chips improve this core experience.

- Content Understanding: AI models help Meta understand text, images, and videos deeply, allowing for better content moderation, improved accessibility features, and smarter ad targeting.

- Responsiveness: Quicker AI processing supports real-time features, smoother transitions within the app, and faster loading times, all of which improve user satisfaction.

3. Competitive Edge:

- Innovation Driver: Custom AI infrastructure gives Meta an edge in bringing new AI-powered products and services to market ahead of competitors.

- Generative AI Race: With the rise of tools like ChatGPT, strong AI infrastructure is essential for Meta to compete in the world of generative AI experiences.

- Data Sovereignty: Reduced reliance on external vendors could align with Meta's long-term strategy of keeping more control over user data and how it is processed.

4. Scaling AI Ambitions:

- Beyond Recommendations: While MTIA currently focuses on recommendations, Meta's AI goals are far broader, spanning image and video understanding, language processing, and metaverse applications.

- Increased Demand: As Meta's user base grows and AI use cases expand, efficient infrastructure is a must to support the massive computational needs of advanced AI models.

- Research to Products: Meta invests heavily in fundamental AI research. Custom silicon is needed to move those research breakthroughs into real-world applications quickly and at scale.