Artificial intelligence has failed to convince most Americans that it will make them better at thinking, deciding, or connecting with other humans. New research released December 1, 2025, reveals persistent skepticism about AI's capacity to enhance core cognitive abilities and interpersonal relationships, even among the technology's heaviest users.

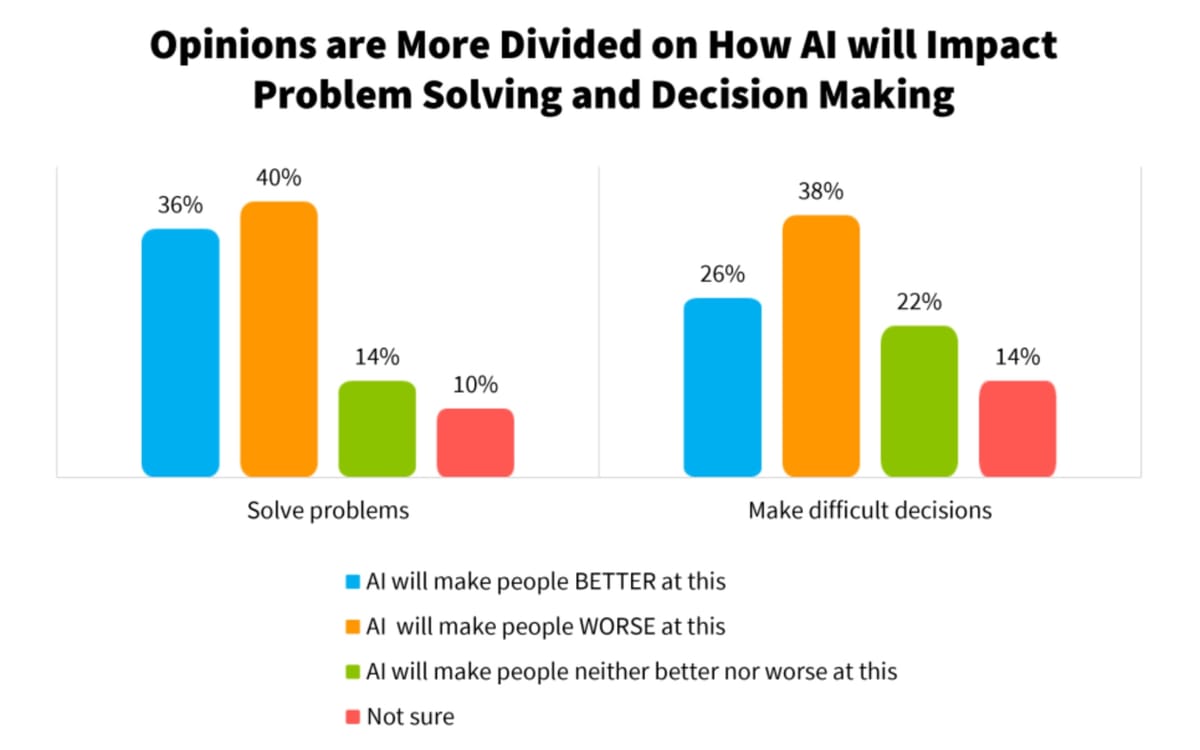

The survey data from SSRS and Project Liberty Institute shows that majorities of U.S. adults expect AI to have a negative impact on people's ability to think creatively and to form meaningful human relationships. Opinions on problem-solving and decision-making lean negative as well, though they are somewhat more balanced than the overwhelming skepticism directed toward creativity and relationships.

These findings align with earlier research from Project Liberty Institute, Elon University's Imagining the Digital Future Center, and Pew Research Center documenting generally negative public perceptions of AI's effects on uniquely human traits and cognitive skills.

Subscribe PPC Land newsletter ✉️ for similar stories like this one

Heavy users remain unconvinced

Four percent of U.S. adults report using AI tools "almost constantly," representing the technology's most dedicated user base. This group is more optimistic about AI's potential than the general population, yet enthusiasm has clear limits even among regular users. Fewer than half of daily users (46 percent) believe AI will make people better at solving problems. Only 39 percent think it will improve decision-making abilities.

The skepticism becomes more pronounced when examining AI's effect on human relationships. Among the 37 percent of U.S. adults who use AI daily, more than four times as many say the technology will make people worse at forming meaningful connections compared to those who expect improvements. Fifty-three percent anticipate deterioration in relationship-building abilities, while just 12 percent foresee enhancement.

These patterns create challenges for product developers attempting to build consumer AI applications. The data suggests even extensive hands-on experience with AI tools does not necessarily convert users into believers in the technology's capacity to augment fundamental human capabilities.

Youth skepticism poses marketing problem

Younger adults, typically the primary target audience for new AI-enabled tools, hold particularly negative views about artificial intelligence's impact. Their views are especially negative on AI's effect on creative thinking and on forming meaningful human relationships.

This skepticism among 18-to-29-year-olds carries strategic implications for advertising technology companies deploying agentic AI capabilities throughout 2025. While platforms have rushed to integrate autonomous AI systems into campaign management, the survey suggests that younger consumers, who represent crucial long-term adoption prospects, harbor serious doubts about AI's role in human-centric activities.

The findings challenge assumptions that digital natives will naturally embrace AI integration across all aspects of life. Instead, the data indicates this generation approaches the technology with discernment, weighing specific use cases against potential downsides.


Thoughtful trade-offs, not reactionary fear

In-depth interviews conducted with a subset of 18-to-29-year-old survey respondents reveal nuanced thinking rather than blanket resistance to artificial intelligence. According to the research, this generation is "thoughtfully weighing the pros and cons of AI across different settings, deliberating about which trade-offs they are comfortable making in their own lives."

Most young adults interviewed acknowledged benefits to AI-enabled tools and platforms, particularly for instrumental, fact-based tasks. However, they also recognized more subtle potential harms when AI replaces traditionally human-to-human interactions.

One 27-year-old respondent named Greg (names changed to protect privacy) articulated this balanced perspective: "What makes me excited about AI is that it's not done yet, it's a work in progress."

The interviews revealed AI has prompted many young adults to think more deliberately about unique value that human beings contribute to different interactions and settings. For some, exposure to AI-powered systems has made the "human element" in experiences like job applications, counseling, or artistic creation more apparent than before. This awareness has led them to reflect on whether that human dimension represents something worth preserving.

Several young adults raised concerns extending beyond specific contexts to broader behavioral changes. They mentioned general impatience with tasks and other people, unwillingness to learn through trial and error, and prioritizing ends over means as potential consequences of increasing AI reliance.

Sector-specific applications show promise

While overall sentiment trends negative, the research identifies contexts where respondents see more potential value. Instrumental, fact-based tasks represent the strongest use case for AI adoption in public perception. These applications require data processing, pattern recognition, and computational analysis rather than creativity, empathy, or interpersonal sensitivity.

This distinction matters for marketing professionals managing AI-powered campaign optimization where technical analysis supports rather than replaces human strategic judgment. The survey suggests consumers differentiate between AI handling mechanical tasks versus making decisions requiring uniquely human qualities.

The advertising industry has deployed AI extensively across technical infrastructure throughout 2025. Amazon launched AI agents for automated campaign management in November. StackAdapt introduced its Ivy assistant in July for processing natural language queries in programmatic advertising. These implementations focus primarily on data analysis and workflow automation rather than creative or relational functions.


Trust challenges compound adoption barriers

The SSRS findings document skepticism extending beyond technical capabilities to fundamental questions about AI's role in human society. This pattern aligns with broader consumer trust challenges facing the advertising industry as AI integration accelerates.

Research released in July 2025 showed 59 percent of consumers expressing discomfort with their data being used to train AI systems. Two-thirds reported increased concerns about unauthorized data access, cybercrime threats, and identity theft. These privacy anxieties intersect with the capability skepticism documented in the SSRS survey to create multiple barriers to AI acceptance.

Media experts surveyed in December 2025 reflected similar caution, with 36 percent indicating they are cautious about advertising within AI-generated content and will take extra precautions before doing so. Forty-six percent identified increasing levels of AI-generated content unsuitable for brands as a serious threat to media quality.

The combination of capability doubts and trust concerns creates compound challenges for platforms attempting to scale AI adoption. Users must believe both that AI can perform claimed functions and that deployment serves their interests rather than primarily benefiting technology providers.

Measurement and transparency gaps

The skepticism documented in the SSRS research reflects broader implementation challenges facing organizations deploying AI systems. Research published in November 2025 found most companies remain in pilot mode despite executive claims of enterprise-ready AI programs, with inadequate data governance frameworks undermining implementation quality.

According to Publicis Sapient analysis, executives often mistake generative AI experimentation or basic tool usage for full integration. One consultant characterized the gap as "confidence without measurement is belief, not certainty." This disconnect between stated AI readiness and actual capability mirrors public skepticism about whether the technology can deliver claimed benefits.

Marketing organizations face particular measurement challenges. Survey data released in November 2025 showed 72 percent of marketers plan to increase AI usage over the next 12 months, yet only 45 percent feel confident in their ability to apply it successfully. Among those reporting low confidence, 40 percent cited insufficient organizational understanding of AI or large language models.

The inability to track results against appropriate goals ranked as a significant barrier for 44 percent of marketers surveyed. Many rely on proxy metrics like clicks or web traffic that fail to capture AI's broader business impact, creating measurement gaps that could fuel consumer skepticism about actual value delivered.

Platform deployment outpaces user readiness

Major technology companies have accelerated AI feature releases throughout 2025 despite persistent public skepticism. Google expanded AI Mode to all U.S. users in May 2025. The company's AI Overviews now serve over 1.5 billion users monthly, with expansion to additional English-language countries scheduled for later in the year.

However, accuracy concerns shadow these deployments. Research published in July 2025 testing AI tools for pay-per-click advertising guidance found 20 percent of responses contained inaccurate information. Google AI Overviews demonstrated the poorest performance with 26 percent incorrect answers, potentially exposing millions of users to flawed guidance.

These reliability issues compound the capability skepticism documented in the SSRS survey. For users who already doubt AI can enhance their thinking, creativity, or relationships, accuracy problems provide concrete evidence for those doubts rather than reasons to reconsider them.

Industry analysis from October 2025 predicted that one-third of companies will erode brand trust and customer experience in 2026 through premature deployment of generative AI chatbots and virtual agents. According to Forrester, pressure to reduce operational costs is driving organizations to implement customer-facing AI systems in contexts where failure is likely.

Human value becomes more visible

The SSRS research documents an unexpected consequence of AI deployment: the technology has made many people more conscious of uniquely human contributions to interactions and outputs. Several young adults interviewed for the study mentioned that encountering AI systems highlighted the rare and often difficult-to-measure value humans contribute to various activities.

This heightened awareness of human distinctiveness represents both a challenge and an opportunity for AI developers. Users who become more conscious of what makes human interaction valuable may resist AI substitution in those domains while remaining open to deployment in areas where human involvement adds less unique value.

For advertising applications, this suggests continued openness to AI-powered analytics and campaign management tools that augment rather than replace human strategic thinking. Newton Research's integration with Snowflake Cortex AI in November 2025 exemplifies this approach, enabling brands to run media mix modeling and incrementality analysis while marketers retain decision-making authority.

The distinction between augmentation and replacement appears crucial for public acceptance. According to the SSRS findings, most respondents recognize AI's utility for specific tasks while wanting to preserve human elements in activities where those contributions matter most.

Product development implications

The nuanced reflections captured in the SSRS interviews suggest people are approaching AI adoption rationally rather than emotionally. They test tools, evaluate usefulness, and weigh integration decisions before fully incorporating AI into their lives. This measured approach contradicts assumptions that resistance stems primarily from fear or misunderstanding.

Kristen Purcell, Jeb Bell, and Jessica Theodule authored the research, with special thanks to Darby Steiger, Rachel Askew, Melissa Silesky, Hannah Ritz and the SSRS qualitative team. The study positions AI adoption as an ongoing evaluation process where users continuously assess whether specific applications deliver sufficient value to justify potential downsides.

For product developers, this means successful AI tools must demonstrate clear utility in contexts users already find appropriate for technological assistance. Marketing AI as a universal solution for all human activities conflicts with public sentiment favoring selective deployment based on careful consideration of trade-offs.

The research also suggests transparency about AI's limitations could build trust more effectively than overselling capabilities. If users expect AI to struggle with creativity, relationships, and complex decision-making, products that acknowledge these boundaries while excelling at data processing and routine tasks may achieve better adoption than those promising comprehensive cognitive enhancement.

Regulatory and policy context

The skepticism documented in the SSRS survey arrives as regulators worldwide increase scrutiny of AI deployment. European data protection authorities adopted Guidelines 3/2025 in September, establishing complex compliance requirements where Digital Services Act and General Data Protection Regulation obligations intersect.

Australia proposed dual-track privacy compliance frameworks in August, fundamentally challenging existing reform proposals by focusing on whether data use serves individual best interests rather than mere consent mechanisms. These regulatory developments reflect official concern about AI's impact on consumer welfare that aligns with public skepticism in the SSRS data.

Privacy lawsuits targeting AI applications have increased throughout 2025. Industry predictions released in October forecast a 20 percent surge in privacy litigation during 2026 as consumers and law firms broaden their focus beyond tracking pixels to encompass AI applications themselves.

The combination of public skepticism, regulatory pressure, and legal risk creates incentives for companies to deploy AI more cautiously than current adoption rates suggest. Marketing organizations face particular pressure to demonstrate AI usage serves consumer interests rather than primarily reducing operational costs or enhancing targeting capabilities at the expense of privacy.

Industry adaptation patterns

Marketing technology platforms have responded to AI skepticism with varying strategies. Some emphasize transparency and user control. Others focus on demonstrating concrete value through pilot programs and measurable outcomes. Still others have slowed deployment pending better understanding of consumer acceptance.

Survey data from media experts released in December 2025 showed 52 percent identified social media as facing serious challenges across the industry during the next 12 months, with AI-generated content concerns contributing to this assessment. Eighty-one percent stated increasing levels of AI-generated content in retail media networks will be a significant concern requiring monitoring.

These professional concerns mirror consumer skepticism in the SSRS data. Both audiences question whether AI deployment in certain contexts serves genuine needs or creates new problems requiring additional oversight and intervention.

The marketing community faces decisions about deployment pace and transparency. Research from Raptive released in July 2025 showed reader trust dropping nearly 50 percent for content perceived as AI-generated, with corresponding 14 percent declines in brand advertisement effectiveness. These findings suggest aggressive AI deployment without consumer acceptance risks damaging existing business models.

Looking ahead

The SSRS research documents public sentiment at a specific moment in AI's development. Future surveys may reveal shifting attitudes as the technology improves and deployment patterns evolve. However, the current snapshot shows clear boundaries around where Americans see value in AI assistance.

Developers hoping to expand AI's role in human cognition, creativity, and relationships face the challenge of changing minds already skeptical based on actual usage experience. The finding that even heavy users doubt AI's capacity to enhance creative thinking or interpersonal connections suggests simple exposure will not automatically generate acceptance.

Marketing professionals navigating this landscape must balance platform capabilities against consumer comfort. Industry analysis from McKinsey published in July 2025 identified agentic AI as the most significant emerging trend for marketing organizations, with $1.1 billion in equity investment flowing into the technology during 2024.

However, investment momentum alone cannot overcome skepticism rooted in evaluation of AI's actual impact on human abilities. The SSRS data suggests successful deployment requires demonstrating value in domains users already find appropriate rather than attempting to expand AI into all aspects of human activity regardless of public acceptance.

The research team emphasized the human nature of current AI adoption patterns. People are testing, evaluating, and making considered judgments about where artificial intelligence fits into their lives. This rational approach means winning acceptance requires delivering genuine value in contexts users find appropriate rather than marketing AI as a universal solution disconnected from actual needs and preferences.


Timeline

- December 1, 2025: SSRS and Project Liberty Institute release survey showing majorities of U.S. adults view AI negatively for creativity and relationship-building

- November 2025: 72% of marketers plan AI adoption growth while only 45% feel confident, MiQ survey reveals

- November 2025: Amazon launches AI agent for automated campaign management across Amazon Marketing Cloud and DSP

- November 2025: Data governance gaps expose AI confidence crisis, Publicis Sapient research shows most organizations remain in pilot mode

- October 2025: Forrester predicts 33% of companies will harm trust with premature AI deployment in 2026

- October 2025: Marketing professionals question AI reliability as deployment challenges mount

- July 2025: StackAdapt launches Ivy AI assistant for programmatic advertising with natural language queries

- July 2025: Raptive study shows AI content cuts reader trust by 50% and reduces brand ad effectiveness 14%

- July 2025: Consumer trust crisis emerges as 59% express discomfort with data used to train AI systems

- July 2025: McKinsey identifies agentic AI as most significant emerging trend for marketing organizations

- Previous research: Project Liberty Institute, Elon University's Imagining the Digital Future Center, and Pew Research Center document negative public perceptions of AI's impact on core cognitive skills


Summary

Who: SSRS and Project Liberty Institute conducted the research, surveying U.S. adults and conducting in-depth interviews with 18-to-29-year-olds. Research authors include Kristen Purcell (SSRS), Jeb Bell (Project Liberty Institute), and Jessica Theodule (Project Liberty Institute).

What: Survey data reveals that majorities of Americans view AI's impact negatively for thinking creatively and forming meaningful human relationships. Even among the 37% who use AI daily, skepticism persists, with only 46% believing AI will improve problem-solving and just 39% expecting better decision-making. Qualitative interviews show young adults thoughtfully weighing AI trade-offs rather than displaying reactionary fear.

When: The findings were released December 1, 2025, building on earlier research from Project Liberty Institute, Elon University's Imagining the Digital Future Center, and Pew Research Center showing similar patterns of public skepticism toward AI's impact on uniquely human traits.

Where: The research examines attitudes across the U.S. adult population, with specific focus on heavy AI users (4% who use tools "almost constantly") and younger adults aged 18-29 who represent primary target audiences for AI-enabled products but demonstrate particularly negative views.

Why: The research matters because it documents persistent skepticism about AI's capacity to enhance core human abilities even among heavy users, creating challenges for product developers and marketing professionals deploying AI systems. The findings suggest public resistance stems from thoughtful evaluation rather than fear or misunderstanding, requiring companies to demonstrate genuine value in appropriate contexts rather than marketing AI as a universal solution. This skepticism compounds with trust challenges, accuracy concerns, and regulatory pressure to create multiple barriers to AI acceptance across consumer and professional audiences.