Reddit's new profile privacy feature has created challenges for content moderation, according to community moderators tracking spam operations. The feature allows users to hide their posting history and activity from public view, but several moderators report that automated accounts are exploiting this privacy option to conceal evidence of coordinated inauthentic behavior.

According to a post shared January 19, 2025 in r/ModSupport, moderators observe bot farms utilizing the privacy feature to avoid detection. "My recommendation is to extend the minimum account age to 1 year before profiles can be hidden," wrote the moderator who reported the issue. "The anti-detect browser users (aka bot farms) usually buy these accounts in bulk, but it comes with a monetary cost. So it's easier for them to just mass register new accounts."

The moderator argued that raising the financial barrier would reduce bot farming. "Increase the $ cost of bot farming to reduce bot farms," the post stated. The recommendation rests on the moderator's own economic reasoning: because aged accounts cost money, most spam operations prefer to register new accounts rather than buy aged ones.

Subscribe PPC Land newsletter ✉️ for similar stories like this one. Receive the news every day in your inbox. Free of ads. 10 USD per year.

Technical implementation raises moderation concerns

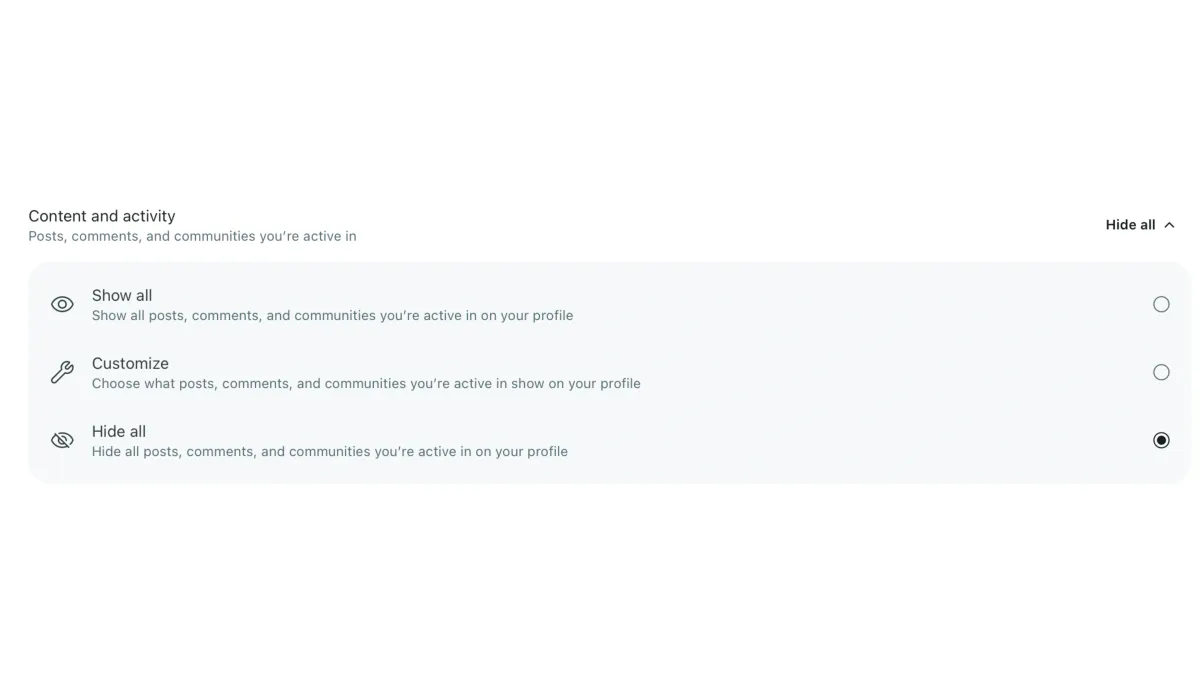

Reddit implemented profile curation settings that allow users to manage what content appears on their profiles. According to Reddit's official documentation, the feature includes three options: showing all posts and comments, customizing which content appears, or hiding all activity from public view.

The privacy controls affect both posts and comments across all communities where users participate. However, the system maintains limited visibility for moderation purposes. According to Reddit's policy, "Mods of communities you participate in and redditors whose profile posts you engage with can still see your full profile for moderation."

Moderators say the window during which this full profile remains visible to them, which a Reddit administrator put at 28 days, has proven insufficient for tracking sophisticated spam operations. Several noted that spam accounts often coordinate across multiple communities over longer timeframes, which makes a limited visibility period a problem for comprehensive investigation.

A Reddit administrator responded to the concerns stating that "when they interact in communities you moderate, you can see all of their content for 28 days." The administrator added that violating content can still be reported and "it is likely our systems will detect them proactively too."
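The administrator's description can be read as a simple time-window rule. The following is a minimal sketch of that reading, not Reddit's implementation: the function name, the use of the most recent interaction as the starting point, and the inclusive boundary are all assumptions made for illustration.

```python
from datetime import datetime, timedelta, timezone

# 28 days is the figure quoted by the Reddit administrator.
VISIBILITY_WINDOW = timedelta(days=28)


def moderator_can_view_profile(last_interaction, now):
    """Hypothetical check: can a moderator still see a hidden profile?

    Assumes the window is measured from the account's most recent
    interaction in the moderator's community. Reddit has not published
    the exact rule, so this is illustrative only.
    """
    if last_interaction is None:
        # The account never interacted in this community.
        return False
    return now - last_interaction <= VISIBILITY_WINDOW


now = datetime(2025, 1, 29, tzinfo=timezone.utc)
recent = now - timedelta(days=10)
stale = now - timedelta(days=29)
print(moderator_can_view_profile(recent, now))
print(moderator_can_view_profile(stale, now))
```

Under this reading, a campaign that pauses for more than 28 days between visits to a community would fall outside the window, which is the gap moderators describe.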

Community response reveals enforcement challenges

Multiple moderators shared experiences with the privacy feature's impact on content moderation. One moderator wrote: "As user I will no longer interact with any other user that has their whole profile hidden." Another said their policy is now to permanently ban users with hidden profiles who break rules.

The enforcement challenges extend beyond individual communities. "Oftentimes, they go to related and similar communities with less strict moderation and start spamming," the original poster explained. "I have to appeal to those communities by asking them to report the bot farm, but I can't appeal to communities when their profile is hidden."

Several moderators expressed support for maintaining the privacy feature while addressing abuse. One moderator who uses profile privacy for personal safety wrote: "My profile is private because I've had large numbers of users trying to dox me because I mod queer subs, and showing up in completely unrelated spaces to cause trouble."

Technical tools for tracking problematic accounts remain available through external services. One moderator recommended using archived Reddit data through third-party tools, though these require additional technical knowledge and resources.

Bot detection becomes increasingly sophisticated

The spam operations being tracked use advanced techniques to avoid detection. According to the moderator's post, these operations employ "anti-detect browsers" that modify browser fingerprints, along with rotating residential proxy networks that mask IP addresses.

The technical sophistication extends to account management strategies. Spam operators typically register new accounts in bulk rather than purchasing aged accounts due to cost considerations. This economic factor influenced the recommendation for extending minimum account age requirements before privacy features become available.

Current detection methods rely heavily on profile analysis to identify coordinated behavior patterns. Multiple moderators noted that profile visibility enables identification of accounts that suddenly become active after long dormancy periods, participate in unusual community combinations, or exhibit repetitive posting patterns.
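Two of the signals moderators describe, long dormancy followed by sudden activity and repetitive posting, can be expressed as simple checks over a visible post history. The sketch below is illustrative only: the function names and thresholds are hypothetical, it does not reflect any tool moderators or Reddit actually use, and it leaves out the "unusual community combinations" signal, which depends on community-specific judgment.

```python
from datetime import datetime


def dormancy_gap_days(post_times):
    """Largest gap in days between consecutive posts (0 if fewer than two)."""
    ordered = sorted(post_times)
    gaps = [(later - earlier).days for earlier, later in zip(ordered, ordered[1:])]
    return max(gaps, default=0)


def repetition_ratio(texts):
    """Fraction of posts that repeat the text of another post in the history."""
    if not texts:
        return 0.0
    # Normalize case and whitespace so trivial variations count as repeats.
    normalized = [" ".join(text.lower().split()) for text in texts]
    return 1 - len(set(normalized)) / len(normalized)


def profile_flags(post_times, texts,
                  dormancy_threshold_days=365,   # hypothetical threshold
                  repetition_threshold=0.5):     # hypothetical threshold
    """Return the list of heuristic flags raised by a post history."""
    flags = []
    if dormancy_gap_days(post_times) >= dormancy_threshold_days:
        flags.append("long_dormancy")
    if repetition_ratio(texts) >= repetition_threshold:
        flags.append("repetitive_posting")
    return flags


times = [datetime(2022, 1, 1), datetime(2024, 6, 1), datetime(2024, 6, 2)]
texts = ["Buy now!", "buy  NOW!", "Buy now!"]
print(profile_flags(times, texts))
```

Both checks need the account's full history as input, which is why moderators say a hidden profile removes the evidence these heuristics depend on.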

Reddit's automated detection systems operate independently of profile visibility settings. However, moderators argue that human analysis remains crucial for identifying sophisticated campaigns that avoid automated detection mechanisms.

Industry implications for content moderation

The situation reflects broader challenges facing social media platforms balancing user privacy with content moderation effectiveness. PPC Land has documented similar tensions across platforms implementing enhanced privacy controls while maintaining community safety standards.

Reddit's advertising platform has expanded significantly with features like Dynamic Product Ads and Custom Audience API, making spam prevention increasingly important for maintaining advertiser confidence.

The platform's growing commercial importance amplifies moderation challenges. Reddit's recent partnerships with major technology companies, including data licensing agreements, increase pressure to maintain content quality while preserving user privacy options.

Content authenticity becomes particularly critical as Reddit develops AI-powered features. The platform's Community Intelligence initiative relies on genuine user conversations to provide marketing insights, making spam detection essential for product integrity.

Proposed solutions face implementation complexity

The proposed one-year minimum account age requirement represents one potential solution, but implementation faces practical challenges. Aged account markets already exist for users seeking to bypass various platform restrictions, suggesting that determined actors might adapt to higher age requirements.
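The proposal and its economic rationale can be sketched in a few lines. This is a minimal illustration under stated assumptions: the function names are hypothetical, the age gate is not an existing Reddit feature, and the per-account prices are placeholders rather than real market data.

```python
from datetime import datetime, timedelta

# The one-year minimum proposed in the r/ModSupport post.
MIN_ACCOUNT_AGE = timedelta(days=365)


def can_hide_profile(created_at, now, min_age=MIN_ACCOUNT_AGE):
    """Hypothetical gate: only accounts older than min_age may hide their profile."""
    return now - created_at >= min_age


def campaign_account_cost(num_accounts, cost_per_fresh, cost_per_aged, requires_aged):
    """Total account cost for an operator who needs hidden profiles.

    If hiding requires an aged account, the operator pays the aged-account
    price; otherwise freshly registered accounts suffice.
    """
    unit_cost = cost_per_aged if requires_aged else cost_per_fresh
    return num_accounts * unit_cost


now = datetime(2025, 1, 19)
print(can_hide_profile(datetime(2024, 1, 1), now))   # account older than a year
print(can_hide_profile(datetime(2024, 6, 1), now))   # account younger than a year

# Placeholder prices chosen only to show the direction of the effect.
print(campaign_account_cost(100, cost_per_fresh=0.0, cost_per_aged=5.0, requires_aged=False))
print(campaign_account_cost(100, cost_per_fresh=0.0, cost_per_aged=5.0, requires_aged=True))
```

The gate raises costs only as long as aged accounts stay more expensive than fresh ones, so an aged-account market that adapts to the new requirement would weaken the effect.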

Alternative approaches discussed include enhanced moderator tools, improved automated detection systems, and graduated privacy controls based on account history and behavior patterns. Some moderators suggested allowing privacy controls while maintaining extended visibility windows for moderation purposes.

Reddit's current 28-day visibility window represents a compromise between privacy and moderation needs. However, multiple moderators indicated this timeframe proves insufficient for tracking coordinated campaigns that operate over longer periods.

The platform faces pressure to address these concerns while maintaining user trust in privacy features. Any modifications to the current system must balance legitimate privacy needs against content quality requirements.

Market response reflects platform priorities

Reddit administrators acknowledged the feedback but emphasized the feature's privacy benefits. The response indicates platform priorities favor user privacy options despite moderation challenges, reflecting broader industry trends toward enhanced user control over personal data.

The situation demonstrates ongoing tensions between automated and human-based moderation approaches. While automated systems can process large volumes of content, human moderators provide contextual analysis crucial for identifying sophisticated spam operations.

Market observers note that Reddit's advertising growth depends partly on maintaining content quality and user engagement. Spam reduction serves commercial interests while supporting community experience, creating alignment between moderation effectiveness and business objectives.

Platform competition also influences moderation policies. As users increasingly value privacy controls, Reddit faces pressure to provide features matching competitor offerings while maintaining unique community characteristics.

The controversy highlights Reddit's evolution from discussion platform to advertising-supported business with diverse stakeholder interests. Balancing user privacy, community safety, and commercial viability requires ongoing policy adjustments as new challenges emerge.

Timeline

- April 2024: Reddit introduces Dynamic Product Ads for e-commerce advertising

- May 2024: OpenAI announces Reddit partnership for data licensing

- July 2024: Reddit implements exclusive search partnership with Google

- August 2024: Reddit launches Custom Audience API for advanced targeting

- January 2025: Reddit introduces profile privacy controls allowing users to hide posting history

- January 19, 2025: r/ModSupport post documents bot farms exploiting privacy features

- June 2025: Reddit launches Community Intelligence platform for marketing insights

Summary

Who: Reddit moderators, bot operators, platform administrators, and community users affected by new privacy controls

What: Introduction of profile privacy features allowing users to hide posting history, which bot farms are exploiting to conceal coordinated spam operations

When: Privacy features introduced in January 2025, with moderation concerns reported January 19, 2025

Where: Across Reddit's platform and communities, particularly affecting r/ModSupport discussions and various subreddits experiencing increased spam

Why: Reddit implemented privacy controls to protect user safety and data, but insufficient safeguards allow abuse by automated accounts seeking to avoid detection while conducting spam operations

PPC Land explains

Bot farms: Coordinated networks of automated accounts designed to manipulate online platforms through systematic posting, voting, and engagement activities. These operations typically employ multiple accounts managed by software or human operators to simulate authentic user behavior while promoting specific content, products, or viewpoints. Bot farms represent a significant challenge for social media platforms as they undermine content authenticity and can distort community discussions.

Profile privacy: User control features that allow individuals to restrict public access to their posting history, comments, and activity across platform communities. These privacy controls serve legitimate purposes including protection from harassment, stalking, and unwanted attention, particularly for users in vulnerable communities or those discussing sensitive topics. The feature reflects growing user demands for personal data control and platform transparency.

Content moderation: The systematic process of reviewing, filtering, and managing user-generated content to maintain community standards and platform policies. Effective moderation combines automated detection systems with human oversight to identify violations, spam, harassment, and other problematic behaviors. This process becomes increasingly complex as platforms grow and user privacy expectations evolve.

Anti-detect browsers: Specialized software tools that modify browser fingerprints and technical signatures to avoid detection by platform security systems. These applications enable users to mask their digital identity, change apparent device characteristics, and circumvent account-based restrictions. Spam operators frequently utilize anti-detect browsers to manage multiple accounts while avoiding platform enforcement mechanisms.

Community guidelines: Established rules and standards governing acceptable behavior, content, and interactions within online platforms and specific communities. These guidelines typically address spam, harassment, hate speech, and other harmful activities while attempting to preserve legitimate discourse and user expression. Enforcement of community guidelines requires balancing automated detection with human judgment.

Automated detection: Technological systems employing algorithms, machine learning, and pattern recognition to identify problematic content and behavior without human intervention. These systems analyze posting patterns, content similarity, account characteristics, and engagement metrics to flag potential violations. While automated detection enables large-scale content review, it often requires human oversight for complex or nuanced situations.

Account age requirements: Platform policies that restrict certain features or capabilities based on how long user accounts have existed. These requirements serve as barriers to abuse by making it more difficult and expensive for bad actors to quickly establish accounts with full platform access. Age-based restrictions represent one method for balancing new user accessibility with spam prevention.

Residential proxies: Network infrastructure that routes internet traffic through legitimate residential IP addresses rather than data center connections. These tools help mask the true location and identity of users by making their traffic appear to originate from ordinary home internet connections. Spam operators use residential proxies to avoid IP-based detection and blocking mechanisms.

Coordinated inauthentic behavior: Systematic efforts by multiple accounts or users to manipulate platform algorithms, discussions, or content visibility through deceptive means. This behavior includes activities like vote manipulation, artificial engagement generation, and coordinated posting campaigns designed to amplify specific messages or suppress others. Platforms invest significant resources in detecting and preventing such coordination.

Moderation tools: Software features and interfaces that enable community managers and platform administrators to review user behavior, apply enforcement actions, and maintain content quality. These tools include user profile analysis, content filtering systems, reporting mechanisms, and action logging capabilities. Effective moderation tools must balance comprehensive oversight capabilities with user privacy protections.